VGLeaks - Orbis GPU Detailed - compute, queues and pipelines

- Thread starter artist

MikeE21286

Member

It means we need 16GB GDDR5 on the next Xbox.

8GB GDDR5 of RAM, it's all that matters...ALL.

It means it's going to put all that RAM to good use!

lol

thanks

better than was rumored???

What's the best GPU a console manufacturer could use for a fall 2013 release? Would a 7950 be possible?

Well, my choice would be a 7870 as the best candidate GPU to put into both next-gen consoles, although that is right on the edge. A 7950 is probably pushing the TDP limits of the consoles too far. The PS4 GPU is a very good GPU for a console that will most probably run on less than 200 watts.

What's the best GPU a console manufacturer could use for a fall 2013 release? Would a 7950 be possible?

Too hot to put into a console box; its TDP is around 200 W.

Valkyr Junkie

Member

So are we to still believe the information they've been trickling out is still accurate despite the apparent recent changes to the PS4?

Saberus

Member

Is the GPU equal to a 7870? Or is it close to it?

That's really hard to say, since this is an APU and it works differently than a separate CPU and GPU. The APU works as a single entity with the CPU and GPU feeding off each other, kind of like chocolate and peanut butter: on their own they are good, but put them together and WAH!!!

What's the best GPU a console manufacturer could use for a fall 2013 release? Would a 7950 be possible?

They would have to downclock it quite considerably, and the perf advantage wouldn't be there.

What I don't understand yet is how the load balancing between the individual queues/pipelines will be performed or controlled.

From the diagram, it looks like the CPU.

Galacticos

Banned

GCN 2.0 vs 1.0? What is it and why does it matter?

2.0.

And it means better GPU utilization.

btw, why call it orbis? lol.

GCN 2.0 vs 1.0? What is it and why does it matter?

GCN is AMD's current GPU architecture, and 2.0 is obviously its successor. Since we have no idea what exactly this entails, throwing around the "GCN 2.0" moniker in console tech discussions is pretty meaningless IMHO.

CPU.

So developers can control the priority of the individual pipelines? That would be pretty neat. I want to program one.

That's really hard to say, since this is an APU and it works differently than a separate CPU and GPU. The APU works as a single entity with the CPU and GPU feeding off each other, kind of like chocolate and peanut butter: on their own they are good, but put them together and WAH!!!

Yeah we haven't seen an APU as powerful as the PS4 - or at least I haven't seen one - so it will be interesting what it can do.

So developers can control the priority of the individual pipelines? That would be pretty neat. I want to program one.

It would be kind of neat, but I'm solely going on what is in the diagram.

They would have to downclock it quite considerably, and the perf advantage wouldn't be there.

CPU.

No, the point of the ACEs is to handle task allocation within the GPU. There may be some need for developers to chunk their code up in such a way to encourage the GPU to be more efficient though.

I wonder if each ACE is directly addressable by the developer?

It came up with the Durango GPU. It's at ~1.2 TFLOPS but at 100% efficiency, whereas the 360 was rated at 60% efficiency.

You can throw a profiler at it, look at utilisation across a sampling of games and say 'that's how efficient that GPU is'. That's one measure of 'efficiency', if you really want. And you can look at the things that get in the way of typical code going faster on a chip and try to improve that in future hardware. That's what GPU providers have been doing, and brute power aside, it's what architectural changes and tweaks are aimed at. This is another example. But you're not going to have '100%' utilisation across even a cherry-picked sample of games. And it's a narrow-ish (even if perhaps the most meaningful) way to define 'efficiency'. Saying there's x% utilisation on average across a suite of games doesn't limit the GPU to that threshold, for example: outlier games might do better with their code. 'Efficiency' in this context is a dance between software and hardware. Hardware can do things to help with typical cases and approaches, but it's not the only part of the equation, and the equation will never get to 100%.

Sorry if I'm rambling a bit.
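To make the "utilisation across a sampling of games" idea concrete, here is a minimal sketch. The game names and cycle counts are made up for illustration; they are not measurements from any real GPU or profiler.

```python
from statistics import mean

# Hypothetical profiler samples: (busy ALU cycles, total cycles) per game.
# These numbers are invented purely to illustrate the calculation.
SAMPLES = {
    "game_a": (600, 1000),
    "game_b": (550, 1000),
    "game_c": (900, 1000),  # an outlier with unusually well-tuned code
}


def utilisation(busy: int, total: int) -> float:
    """Fraction of cycles the GPU spent doing useful work."""
    return busy / total


def summarise(samples: dict[str, tuple[int, int]]) -> tuple[float, float]:
    """Return (average utilisation, best single-game utilisation)."""
    values = [utilisation(b, t) for b, t in samples.values()]
    return mean(values), max(values)


average, best = summarise(SAMPLES)
print(f"average: {average:.0%}, best: {best:.0%}")
```

The average describes the sample, not a ceiling: the outlier sits well above it, which is the point about the average not limiting the GPU to that threshold.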

No, the point of the ACEs is to handle task allocation within the GPU. There may be some need for developers to chunk their code up in such a way to encourage the GPU to be more efficient though.

I wonder if each ACE is directly addressable by the developer?

The 8 ACEs would load balance it between their (64) rings, and it seems like each ACE can be addressed by the CPU.

Galacticos

Banned

GCN is AMD's current GPU architecture, and 2.0 is obviously its successor. Since we have no idea what exactly this entails, throwing around the "GCN 2.0" moniker in console tech discussions is pretty meaningless IMHO.

I wouldn't say it's meaningless; the diagram screams GCN 2.0.

Yea, that's actually one of the reasons I want BF5 to return to WWII. True destruction in a war that was pretty much all about destruction would be epic, especially if they brought naval battles back. Imagine a destroyer shelling a village along the coast with all of its weapons...MMMmmmm.

I hope more MP games are willing to take the risk on destruction and realize the potential instead of just thinking it would ruin the flow of a map.

Even with Battlefield 3's limited destruction, there isn't a better sight than seeing the map transform before your eyes.

THE:MILKMAN

Member

So are we to still believe the information they've been trickling out is still accurate despite the apparent recent changes to the PS4?

Well the RAM thing is the only thing that's been "wrong" so far and that was a last minute change. VGleaks obviously have a lot of detailed docs for PS4 (a 95 page PDF IIRC?) Why they choose to react to rumours I don't know.

I personally would just post everything......

Well the RAM thing is the only thing that's been "wrong" so far and that was a last minute change. VGleaks obviously have a lot of detailed docs for PS4 (a 95 page PDF IIRC?) Why they choose to react to rumours I don't know.

I personally would just post everything......

Get people coming back to their site.

The 8 ACE would load balance it between their rings but it seems like each ACE itself can be addressed by the CPU.

Also looks like the 8 CSes are dedicated to compute, with the 8 process buffers upstream. So you'd fill them up with your compute tasks, and they'd be fed down into the compute units when a graphics task is stalled waiting for something?

So you can have 8 parallel compute threads running compared to 2 on standard AMD GPUs.
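As a rough illustration of the "8 compute pipelines" idea, here is a toy model. It assumes 8 pipelines with 8 queues each (the 64 rings mentioned earlier in the thread) and a simple round-robin pick between queues. The actual arbitration used by the hardware is not described in the leak, so the class name and the scheduling policy are assumptions.

```python
from collections import deque
from typing import Optional

NUM_PIPELINES = 8        # compute pipelines shown in the diagram
QUEUES_PER_PIPELINE = 8  # queues (rings) per pipeline, 64 in total


class ComputePipeline:
    """Toy pipeline that picks work from its queues in round-robin order."""

    def __init__(self, num_queues: int = QUEUES_PER_PIPELINE) -> None:
        self.queues = [deque() for _ in range(num_queues)]
        self._next = 0  # index of the queue to check first next time

    def submit(self, queue_index: int, task: str) -> None:
        self.queues[queue_index].append(task)

    def dispatch(self) -> Optional[str]:
        """Return the next task, or None if every queue is empty."""
        for offset in range(len(self.queues)):
            index = (self._next + offset) % len(self.queues)
            if self.queues[index]:
                self._next = (index + 1) % len(self.queues)
                return self.queues[index].popleft()
        return None


pipelines = [ComputePipeline() for _ in range(NUM_PIPELINES)]
pipelines[0].submit(0, "physics")
pipelines[0].submit(0, "physics-2")
pipelines[0].submit(1, "audio")
pipelines[1].submit(0, "raycast")

# Each pipeline can hand out one task per step, so up to 8 tasks go out at once.
step = [p.dispatch() for p in pipelines]
print(step)
```

With only 2 pipelines, the same four tasks would need more steps to drain whenever they land on the same pipeline, which is roughly the difference being described.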

Sorry if I'm rambling a bit.

It's appreciated.

I used to be a boring software programmer, so my profiling usually ended up showing the bottleneck in network or disk I/O. ;-)

Osiris

I permanently banned my 6 year old daughter from using the PS4 for mistakenly sending grief reports as it's too hard to watch or talk to her

I wouldn't say it's meaningless; the diagram screams GCN 2.0.

I wouldn't say "screams", but it certainly suggests it. The prime complaint about general compute on GCN was a lack of granularity and the resulting high cost of context switching.

This modification seems to be directly aimed at improving that aspect of the GCN design.

I would now be highly unsurprised to see this outlined as the solution when GCN 2.0 details are revealed.

Lovely Salsa

Banned

Well, my choice would be a 7870 as the best candidate GPU to put into both next-gen consoles, although that is right on the edge. A 7950 is probably pushing the TDP limits of the consoles too far. The PS4 GPU is a very good GPU for a console that will most probably run on less than 200 watts.

Interesting

So Sony is basically going with the best out there

I wouldn't say it's meaningless; the diagram screams GCN 2.0.

How do you know what GCN 2.0 looks like? Are you really expecting the actual shader processors and render backends to be more or less unchanged?

And even if it were to be GCN 2.0, how would naming it as such now help us, since we have nothing to compare it to? I could just see it creating another "GDDR5" buzzword phenomenon.

I wouldn't say it's meaningless; the diagram screams GCN 2.0.

Do we know what GCN2.0 is meant to look like?

Maybe it's GCN 2.0, maybe it's borrowing one aspect of GCN 2.0, or maybe it's a PS4-specific optimisation. Not sure it's obvious which it is at this stage.

Also, yeah, the suggested relationship between CPU and those process buffers is...interesting. I wonder how much scope there is for programmer guidance in how they're used.

edit - kind of beaten

Think of it as the GPU being able to reach its full potential.

100% efficiency.

It means a dev can use the PS4 GPGPU as a powerful CPU whenever they feel like it and choose how powerful they want the CPU to be vs. the GPU.

That's not how it really works, but it's easier for some people to understand it that way.

It means that a dev can use, say, 70% of the GPU to compute a ray-tracing engine, with 550 GFLOPS left for graphics.

will this work? hell if I know but at least they can try it if they wanted to.
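The 550 GFLOPS figure appears to come from simple arithmetic. Here is a quick check, assuming the 1.84 TFLOPS peak figure publicly quoted for the PS4 GPU (that number is not stated in this thread) and treating the 70/30 split as a plain proportional share, which real workloads would not follow exactly:

```python
PEAK_GFLOPS = 1840.0   # assumed peak: 1.84 TFLOPS, not taken from this thread
COMPUTE_SHARE = 0.70   # share given to compute in the post above


def split_gflops(peak: float, compute_share: float) -> tuple[float, float]:
    """Split peak throughput into (compute, graphics) portions."""
    compute = peak * compute_share
    return compute, peak - compute


compute, graphics = split_gflops(PEAK_GFLOPS, COMPUTE_SHARE)
print(f"compute: {compute:.0f} GFLOPS, graphics: {graphics:.0f} GFLOPS")
```

Under those assumptions the remaining 30% comes out at roughly 550 GFLOPS, which matches the number in the post.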

That's not how it really works, but it's easier for some people to understand it that way.

It means that a dev can use, say, 70% of the GPU to compute a ray-tracing engine, with 550 GFLOPS left for graphics.

will this work? hell if I know but at least they can try it if they wanted to.

Interesting

So Sony is basically going with the best out there

For a 200 W maximum box, I would say yes. People who are more technically minded than me at these sorts of things are free to disagree with me.

THE:MILKMAN

Member

Get people coming back to their site.

Well yes of course! It's just wearing a little thin now. I understand why they do it, but I just find it annoying as hell.

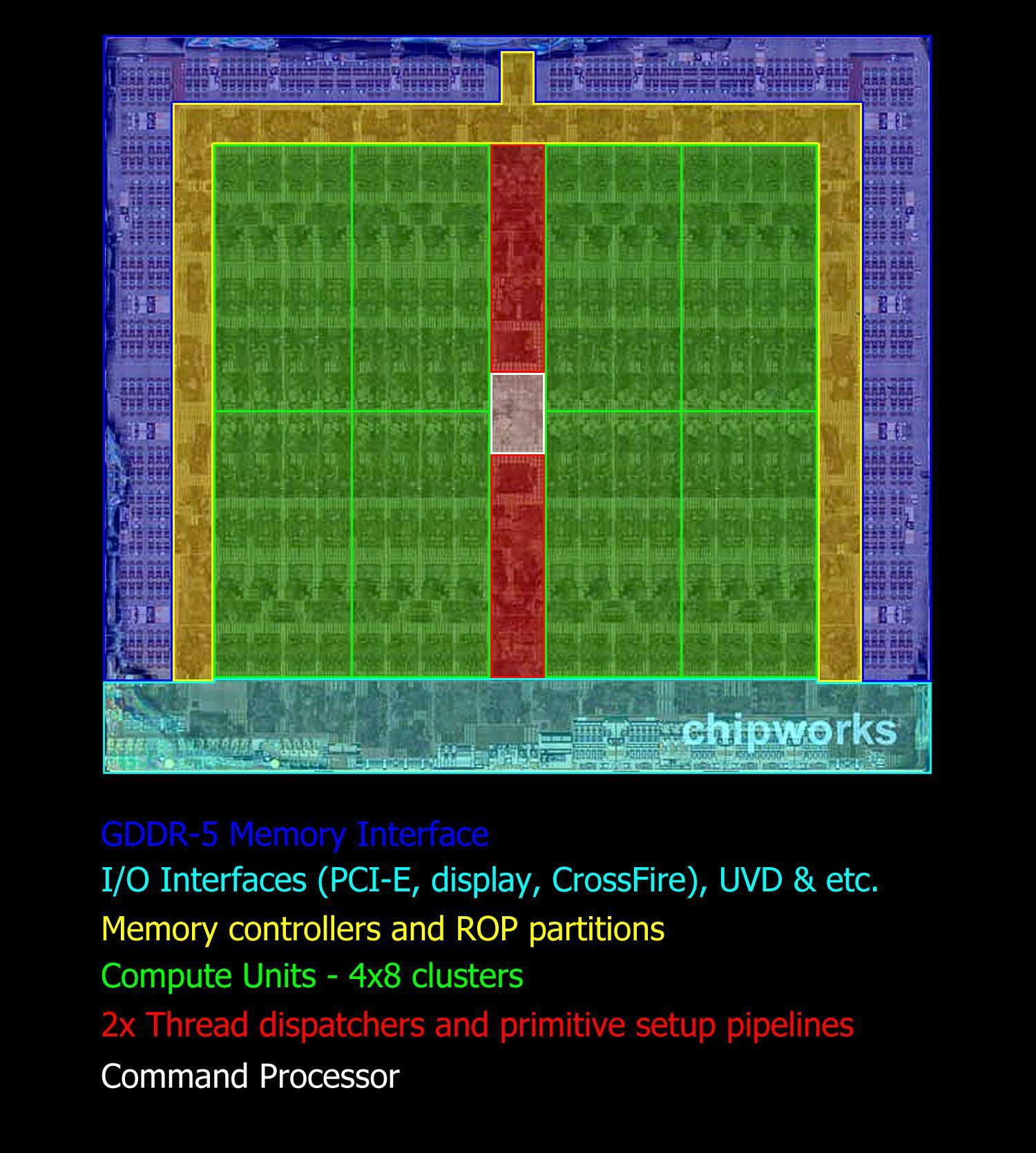

For anyone interested, the die-area cost of adding the ACEs could be significant. Here is a Tahiti die shot breakdown from fellix on B3D:

Well yes of course! It's just wearing a little thin now. I understand why they do it, but I just find it annoying as hell.

I am totally with you.

LiquidMetal14

hide your water-based mammals

http://i.imgur.com/UYQq2Z8.jpg[/mg]

[url]https://mega.co.nz/#!cdNGFLyC!cDj8ajt1WT0JNSQKEVdRbmk464QVFfVZF4OX7nRdMek[/rl][/QUOTE]

Dude.......

R10Neymarfan

Member

lol NeoGAF!!kkkkkk

Kun Aguero

Member

Just put me off my sausage sandwich dude.

Glorified G

Member

Galacticos

Banned

I wouldn't say "screams", but it certainly suggests it. The prime complaint about general compute on GCN was a lack of granularity and the resulting high cost of context switching.

This modification seems to be directly aimed at improving that aspect of the GCN design.

I would now be highly unsurprised to see this outlined as the solution when GCN 2.0 details are revealed.

How do you know what GCN 2.0 looks like? Are you really expecting the actual shader processors and render backends to be more or less unchanged?

And even if it were to be GCN 2.0, how would naming it as such now help us, since we have nothing to compare it to? I could just see it creating another "GDDR5" buzzword phenomenon.

Well, it might be just EGCN (Enhanced GCN), but the diagram clearly shows 8 compute pipelines with 8 user-level queues, which is what GCN 2.0 is all about (less data waiting in line to be processed).

dragonelite

Member

ooh god... NSFW

LiquidMetal14

hide your water-based mammals

Well, it might be just EGCN (Enhanced GCN), but the diagram clearly shows 8 compute pipelines with 8 user-level queues, which is what GCN 2.0 is all about (less data waiting in line to be processed).

Can only be good for a closed box that will never really upgrade.