AMD Crimson ReLive Driver Leaked Slides

- Thread starter RazorbackDB

LelouchZero

Member

This looks great! Glad to see new recording software for AMD GPUs!

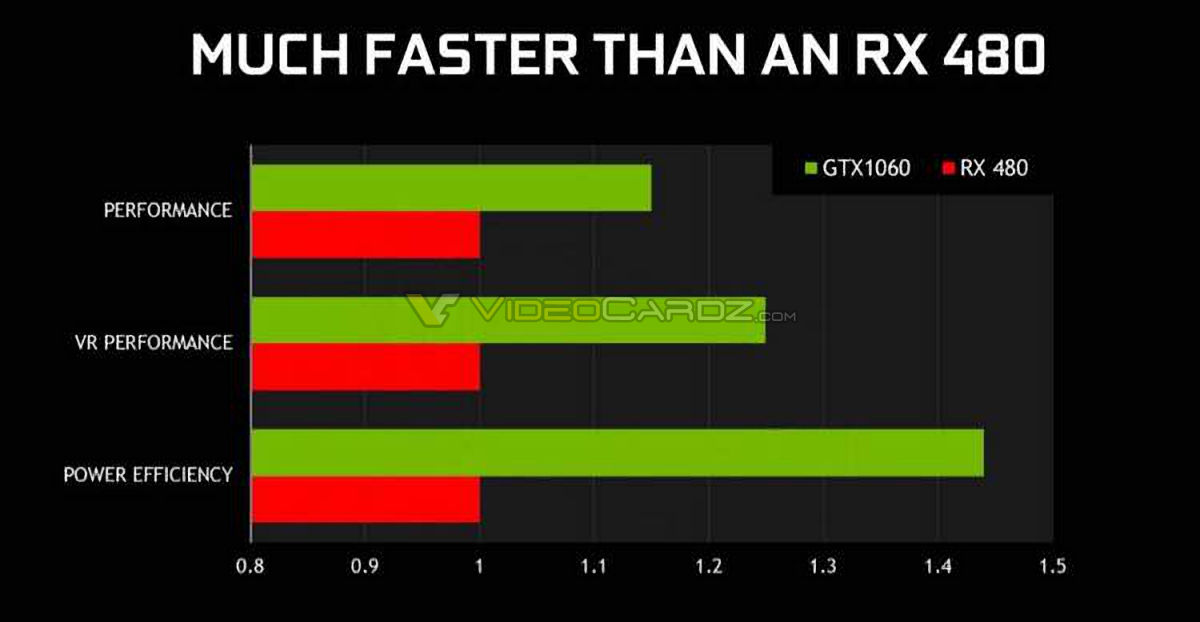

These charts are all kinds of confusing. So is an RX 480 8GB a worthy upgrade from a GTX 970 from a performance perspective?

No not really, unless you consider 5-15% performance gains a worthy upgrade. In most cases it's within 10% of a GTX 970, and some more under DX12 games.

The GTX 970 also has ridiculous overclocking headroom that can make cards on-par with a stock GTX 980 or a little above that, making it recoup the performance difference between the GTX 970 and the RX 480.

Here's a review from Digital Foundry: Nvidia GeForce GTX 1060 review

The ram boost is great but it's probably better to get a stronger card like a GTX 1070 or an equivalent when AMD releases higher-end GPUs.

This might be a good place to ask. I got a 1060 a couple of months ago but I'm considering getting a 480 since that supports freesync and my monitor does too. Would it be worth it?

I was in your position a few months ago. Freesync is pretty awesome especially on my 43" 4K monitor. Drivers have matured to the point where both cards have pretty similar performance so if you can get a 1:1 swap just do it.

Still, you might want to wait till the end of the year and see what Vega is (assuming they do announce it this month)

I guess my 280X is all but abandoned now. Sucks.

Yep. I have the same card and have this feeling. F*cking great.

NeOak

Member

I guess my 280X is all but abandoned now. Sucks.

Yep. I have the same card and have this feeling. F*cking great.

Just for Wattman. It is a rebranded 7970 after all.

Pop-O-Matic

Banned

I guess my 280X is all but abandoned now. Sucks.

Yep. I have the same card and have this feeling. F*cking great.

Your card is still gonna get driver updates. You're only gonna be missing out on little niceties like the overclocking utility.

Just for Wattman. It is a rebranded 7970 after all.

IIRC they changed the way cards handle power management in GCN 1.1

Learning from the best /s

NeoRaider

Member

I guess my 280X is all but abandoned now. Sucks.

Yep. I have the same card and have this feeling. F*cking great.

Maybe they will do something about it later? But I doubt it. Our cards are considered "old" now, and when you think about it, the 280 and 280X really are old because they are rebranded 7970s.

I remember my 280X not supporting VSR when AMD released it, while some less powerful GPUs did, and I felt bad about it. But later AMD added VSR support for the 280X. It's 1440p only, but that's better than nothing I guess.

What A Time To Be Alive

Member

I guess my 280X is all but abandoned now. Sucks.

I mean the 280X is basically a rebranded 7970 which is really old.

Also I think you guys will still get driver updates just not stuff like this.

Yeah I guess there are driver updates, although I'm suspicious of them to be honest. With my older card I swear it kept getting slower with each update.  I don't think I've updated my drivers since Doom.

And I'm not too pissed, my 280X has served me well for almost three years, but I admit I didn't do my homework and didn't know it was a rebranded 7970 from 2011 when I bought it.

NeOak

Member

Yeah I guess there are driver updates, although I'm suspicious of them to be honest. With my older card I swear it kept getting slower with each update. I don't think I've updated my drivers since Doom.

And I'm not too pissed, my 280X has served me well for almost three years, but I admit I didn't do my homework and didn't know it was a rebranded 7970 from 2011 when I bought it.

AMD doesn't gimp the performance of older cards.

What can I say, that seemed to be the case with my old 4670. I imagine not on purpose though, more a case of shit drivers for a card they didn't care about.

Tacklemacain

Member

That's weird, the 7970 and 280(X) got faster over the years. They are at least faster now than their Nvidia counterparts.

http://www.babeltechreviews.com/hd-7970-vs-gtx-680-2013-revisited/

PHOENIXZERO

Member

AMD doesn't gimp the performance of older cards.

They only gimp them when they're new.

It also helps that AMD used the same architecture for several generations and did a lot of re-badging.

I guess my 280X is all but abandoned now. Sucks.

Only for the Wattman stuff, apparently, not a huge deal unless you're into GPU oc'ing

Maybe they will do something about it later? But I doubt it. Our cards are considered "old" now, and when you think about it, the 280 and 280X really are old because they are rebranded 7970s.

I remember my 280X not supporting VSR when AMD released it, while some less powerful GPUs did, and I felt bad about it. But later AMD added VSR support for the 280X. It's 1440p only, but that's better than nothing I guess.

Not like you want to push the 280X beyond 1440P in most cases anyway.

thelastword

Banned

They only gimp them when they're new.

It also helps that AMD used the same architecture for several generations and did a lot of re-badging.

Good strategy, at least they can improve things later on. There's always incentive to buy AMD cards with that strategy. TBH, I just think they have a vested interest in improving their drivers and software; that was their worst attribute, it was never their hardware. So fps gains with better drivers are pretty much guaranteed.

QuantumSquid

Member

come over bb we can radeon and chill ( ͡° ͜ʖ ͡°)

15 benchmarks into radeon and chill and he gives you this look

Nikodemos

Member

AMD has really stepped up their driver game in the last year.

Seriously. It's like opposite day, now that nVidia had a couple of high-visibility stinkers recently.

Only for the Wattman stuff, apparently, not a huge deal unless you're into GPU oc'ing

Not like you want to push the 280X beyond 1440P in most cases anyway.

It actually performs quite nicely at 1440p and 1800p in older games using GeDoSaTo. I even tried 4K on Valkyria Chronicles but I couldn't hold 60fps there.

Unknown Soldier

Member

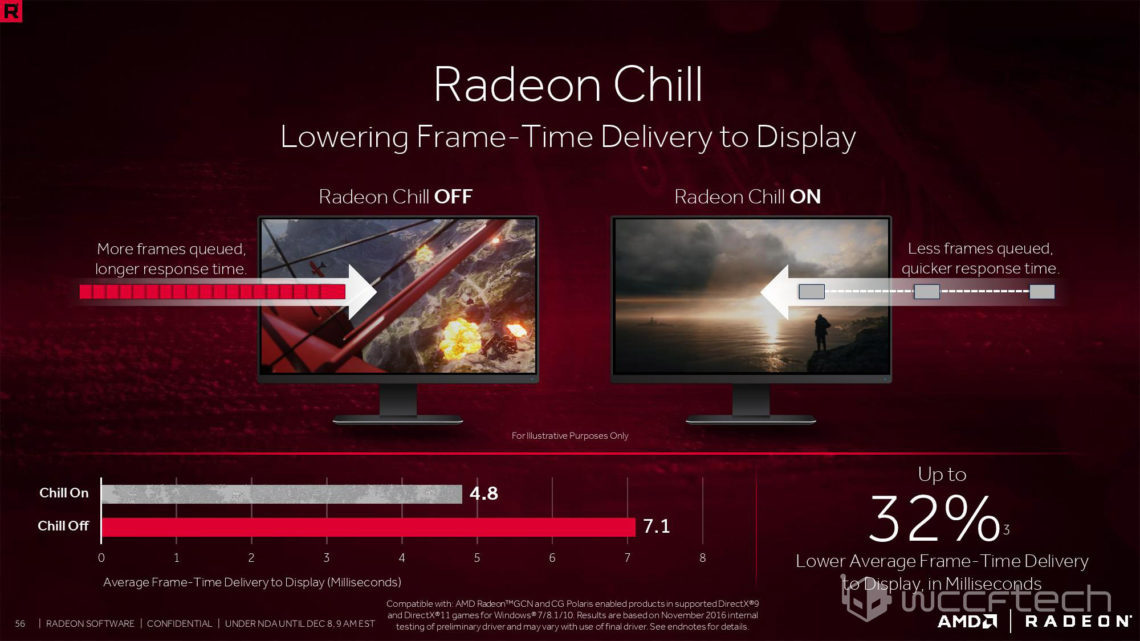

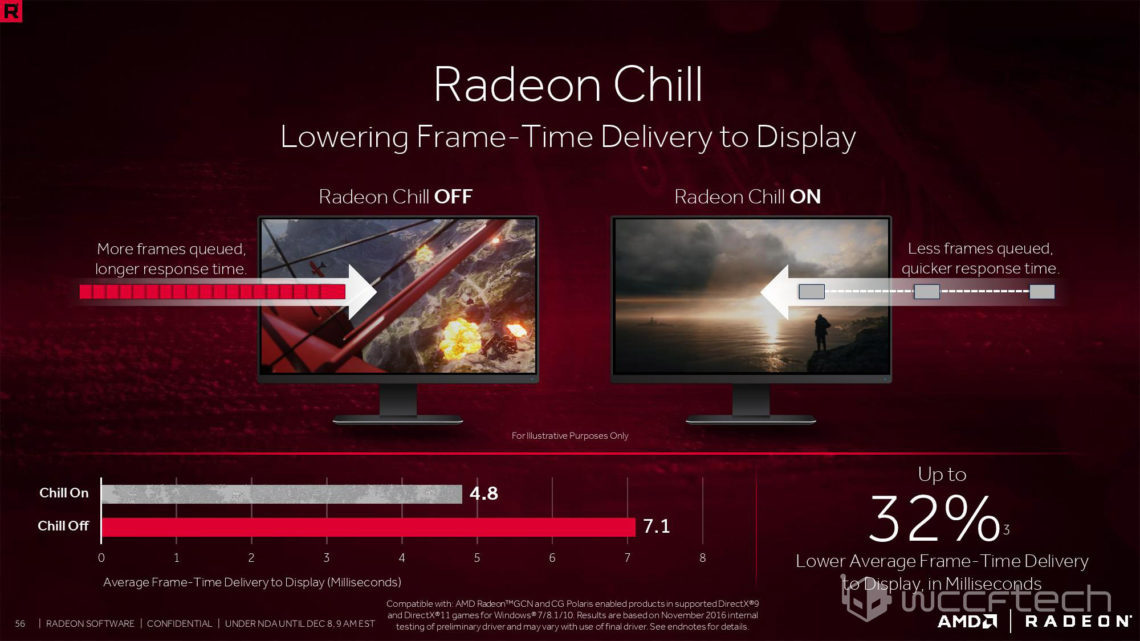

Radeon Chill sounds like it works exactly like the Optimal Power setting that Nvidia has.

I have an R9 290 4gb, can someone give me a rundown on how this will benefit me? I'm dumb.

Like this, will this just mean freesync + no vsync therefore more frames?

Don't forget FreeSync Borderless Fullscreen Support which is HUGE

AMD has really stepped up their driver game in the last year.

Agreed this looks pretty nice.

RazorbackDB

Member

NeOak

Member

Radeon Chill sounds like it works exactly like the Optimal Power setting that Nvidia has.

Better catchphrase!

lulz

This might be a good place to ask. I got a 1060 a couple of months ago but I'm considering getting a 480 since that supports freesync and my monitor does too. Would it be worth it?

Dude yes (assuming you can swap them). That's the combo I have and it's amazing. Screen tearing is my #1 pet peeve but I also hate the input lag vsync brings, so this combo is literally perfect. Titanfall on max settings and 100+ fps with no tearing and no input lag is incredibly smooth. Dunno how I'm going to go back to some console games honestly (and some crap PC ports).

It actually performs quite nicely at 1440p and 1800p in older games using GeDoSaTo. I even tried 4K on Valkyria Chronicles but I couldn't hold 60fps there.

Oh really? Well shame on AMD for restricting it for no reason. I knew 1440p was no issue for 360 era ports, but 1800p as well huh?

NeOak

Member

Oh really? Well shame on AMD for restricting it for no reason. I knew 1440p was no issue for 360 era ports, but 1800p as well huh?

I'm sure the lack of hardware for it in the GCN 1.0 chip had nothing to do with it. Yeah.

Irobot82

Member

Still waiting for Vega 😐🏧

Me too. My 7950 is getting old. She's served me well but it is time.

I'm sure the lack of hardware for it in the GCN 1.0 chip had nothing to do with it. Yeah.

If you can downsample with GeDoSaTo there's literally zero reason AMD couldn't have let you customize the downsampling resolutions instead of restricting it to 1440p. Not talking about Wattman here.

The method AMD uses to implement downsampling is different. AMD does it in the hardware scaler, as such it is pretty limited in comparison to gedosato.

PlayBoyMoney

Banned

280x=7970= 5 year old hardware, Nvidia sure as hell isn't adding features to their 5 year old cards!

The method AMD uses to implement downsampling is different. AMD does it in the hardware scaler, as such it is pretty limited in comparison to gedosato.

Oh, I see, thanks for the clarification

Locuza

Member

Oh, I see, thanks for the clarification

Your point still holds up.

Nothing forced AMD to solve VSR through the Display Engine, they could have done it through a software solution like GeDoSaTo or DSR from Nvidia.

They still should do it, the Display Engine solution is too restrictive, doesn't support a wide range of products, doesn't support a wide range of aspect ratios, doesn't support different or advanced downsampling algorithms.

Also Nvidia could move their butt too, started with a bad Gauss Filter and still using it.

So much potential left aside.

Lmao, Wattman support for literally everything but the 270-280 series.

Like, why 260, 285 but not 280 and 270? Why the R7 260?

Does that include the 280X?

NeOak

Member

Does that include the 280X?

Already answered above

Yeah, no Wattman for 280X was the entire point of my post actually, since I own one myself. Fineprint for Chill only specifies GCN and GCN Polaris so it should come to 1.0's as well.

If you look at the Wattman one the fineprint specifies the specific cards that get it, implies that Chill is for everyone, which is cool

Already answered above

Yeah, I was about to edit my post after I actually read the thread. Sincere apologies!

hooijdonk17

Member

AMD FineWine™ technology at work again.

Caffeine

Member

That's pretty interesting. But it looks like a GUI for presentmon lol

Bar chart magic!

I like how the bars are going like 150% higher lol.

5-8% would be a really tiny bar tbh.

abracadaver

Member

Chill sounds really good.

I don't care about the power consumption but it reduces frametimes as well?

Only in selected games though and not in DX12/Vulkan yet

Is that CS:GO or CS 1.6?

I don't see how it can reduce the time taken for the GPU to render a frame, which is what we mean when we talk about frame times, unless they somehow reduce the scene complexity. Here they seem to be talking about time to display the frame. To me it looks like they dynamically alter the DirectX render queue length. This would also explain why support is on a per game basis, as messing with the queue length may not work well with all games.

Having a deeper queue keeps the GPU busier. Perhaps busier than it needs to be, if you're trying to keep power and heat down to a minimum. Another problem with a deep queue is latency. Say you're on a 60 Hz display with Vsync enabled and a 3 frame queue. You only need a new frame every 16.7ms. Let's say you start producing new frames every 12.5ms (80fps). The extra frames that don't need to be displayed yet are written to a queue and shown at the next 16.7ms interval. With a 3 frame queue, you're effectively seeing the past on your display, as far as the game engine is concerned. Game input from your controller is being processed every 12.5ms, but you're seeing the results of those inputs 3 frames later on, at 16.7ms intervals. This is what results in floaty, laggy control response sometimes.

So, if you're not moving your character, or looking at the floor, perhaps they reduce the queue to 1 frame instead of 3. This would reduce your GPU usage a little (it's told not to queue up frames), making these savings they're talking about.

The compromise would be that as the frames are delivered "on demand", so to speak, you're vulnerable to an unexpected drop in performance. If you can't meet the 16.7ms interval, you've not got a frame ready in your buffer to keep things smooth and so the last frame might be repeated. This will look like stutter/hitching/judder. Smaller queue will reduce latency of your controls, which is what AMD could be showing here. Just my guesswork.

TL;DR - Chill could be the driver dynamically controlling the length of the DirectX render queue.
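To put rough numbers on the queue example above, here's a minimal sketch. It assumes a deliberately simplified model where a frame entering a full queue waits one refresh interval per queued slot before it's shown. The function names are made up for illustration, and this isn't how any driver actually measures latency.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between display refreshes, e.g. about 16.7 ms at 60 Hz."""
    return 1000.0 / refresh_hz


def queued_display_lag_ms(queue_depth: int, refresh_hz: float) -> float:
    """Approximate delay before a newly queued frame is shown.

    Simplified assumption: the queue is full and drains one frame
    per refresh (Vsync on), so the newest frame waits queue_depth refreshes.
    """
    if queue_depth < 1:
        raise ValueError("queue_depth must be at least 1")
    return queue_depth * refresh_interval_ms(refresh_hz)


if __name__ == "__main__":
    for depth in (1, 2, 3):
        lag = queued_display_lag_ms(depth, 60.0)
        print(f"queue depth {depth}: ~{lag:.1f} ms behind the game engine")
```

Under that model, a 3 frame queue at 60 Hz puts what you see roughly 50 ms behind the engine, versus roughly 16.7 ms for a 1 frame queue, which is the latency difference described above.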

EDIT: Apparently there was no need for speculation, as AMD acquired this software recently: http://www.hialgo.com/TechnologyCHILL.html

So, it's a dynamic frame rate limiter.
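For anyone wondering what a dynamic frame rate limiter could look like in principle, here's a rough sketch. Everything in it is an assumption for illustration: the idea of keying the cap off input activity, the 40/144 fps caps, and the function names are all hypothetical, not taken from AMD's or HiAlgo's actual implementation.

```python
def target_fps(input_active: bool, fps_min: float = 40.0, fps_max: float = 144.0) -> float:
    """Pick a frame rate cap: high while the player is active, low when idle.

    The caps are hypothetical defaults chosen only for this example.
    """
    return fps_max if input_active else fps_min


def idle_time_ms(render_ms: float, fps_cap: float) -> float:
    """How long the GPU could sit idle after rendering one frame under the cap."""
    frame_budget_ms = 1000.0 / fps_cap
    # If the frame took longer than its budget, there is no time left to idle.
    return max(0.0, frame_budget_ms - render_ms)


if __name__ == "__main__":
    render_ms = 5.0  # assumed time to render one frame
    for active in (True, False):
        cap = target_fps(active)
        idle = idle_time_ms(render_ms, cap)
        print(f"input_active={active}: cap {cap:.0f} fps, idle {idle:.2f} ms per frame")
```

The point of the sketch is just that lowering the cap while nothing is happening leaves more idle time per frame, which is where power and heat savings would come from.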

Chill prevents underclocking? So I could theoretically play Knights of the Old Republic at something that isn't 30 FPS now? Because no matter what I've tried I haven't had any luck getting my GPU to run at high load speeds in that game, it's all "Nah, gonna idle while you play this". It's annoying having to "OC" my GPU every time I want to play it.

Edit

If you can't meet the 16.7ms interval, you've not got a frame ready in your buffer to keep things smooth and so the last frame might be repeated. This will look like stutter/hitching/judder

Ew. That makes zero sense with the stuff on the website: "CHILL is for you -- if you experience performance drops while playing." Repeat frame stutters are nasty.

I was in your position a few months ago. Freesync is pretty awesome especially on my 43" 4K monitor. Drivers have matured to the point where both cards have pretty similar performance so if you can get a 1:1 swap just do it.

Still, you might want to wait till the end of the year and see what Vega is (assuming they do announce it this month)

Can I get a link to that monitor?

chaosblade

Unconfirmed Member

This sounds pretty great. A Shadowplay alternative and borderless freesync were two of my most wanted features. Just need an integer scaling option now.

Ew. That makes zero sense with the stuff on the website "CHILL is for you -- if you experience performance drops while playing.". Repeat frame stutters are nasty.

That's because it's marketing garbage. The performance drops they're talking about are specifically if your GPU is throttling due to heat.