What he says is contradictory.

We already know that the initial OS RAM allocation is ~3GB. A 15-minute H.264 720p30 video requires ~1GB of space. With further optimization and the latest (v3.50) SDK, the total OS RAM allocation could perhaps be brought down to ~1.5-2GB (half of that is just for the PVR). Ideally FreeBSD shouldn't need more than 512MB of RAM (the PS4 OS/UI is extremely barebones for the time being).
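As a rough sanity check on the ~1GB figure, here is the average bitrate it implies for a 15-minute clip. This is only back-of-the-envelope arithmetic: the helper name `implied_bitrate_mbps` is made up for illustration, and it assumes 1GB means 10^9 bytes and that the whole ~1GB is video data (no audio or container overhead).

```python
def implied_bitrate_mbps(size_bytes: float, duration_s: float) -> float:
    """Average bitrate in megabits per second for a clip of the given size and length."""
    return size_bytes * 8 / duration_s / 1e6

GB = 10**9            # assumption: decimal gigabyte
DURATION_S = 15 * 60  # 15 minutes of recorded gameplay

bitrate = implied_bitrate_mbps(1 * GB, DURATION_S)
print(f"{bitrate:.1f} Mbps")  # prints "8.9 Mbps"
```

So ~1GB per 15 minutes works out to roughly 9 Mbps on average, under the assumptions above.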

The H.264 decoder/encoder is inside the APU, otherwise it wouldn't be able to function, since it needs direct access to the GDDR5 pool and the GPU framebuffer.

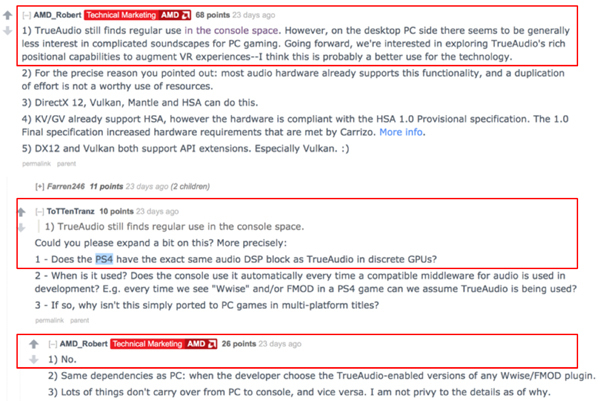

It's the same thing with the TrueAudio DSP: it has an allocation of 64MB GDDR5 RAM and it needs the GPU for audio processing (GPGPU).

It doesn't make any sense to put these dedicated co-processors in the southbridge, not to mention the bus between the APU and the SB would cause a severe bottleneck.

The SB has 256MB of DDR3 (or is it LPDDR3?), which is too little and too slow for these kinds of multimedia tasks.

1) First, the Xtensa DPU is a stream processor which has memory of its own and needs very little system memory to process a video stream.

2) You have a hard disk connected to the Southbridge.

3) The video buffer is in the Southbridge. For DRM reasons, the video buffer has to be in the TEE with the codec, player, DRM, etc. ARM recommends the Southbridge be in the same TEE too.

4) Since the video buffer is in the Southbridge, the Southbridge can read that buffer, H.264 encode it, send it to the hard disk and at the same time send video to the HDMI. Video only needs to move one way, from the APU to the Southbridge over PCIe.

The OS is a combination of the APU and Southbridge. My understanding is that with full-screen video the GPU is off and the GDDR5 memory is in self-refresh/standby. It can wake in a few clock cycles when video ends or some condition requires it. This is because of a requirement that media playback draw less than 21 watts for a Blu-ray player or computer IPTV. There is no way to meet this 21 watt requirement with the GPU on, and no way to meet network standby requirements with GDDR5 memory. There are multiple reasons for having an ARM block in the XB1 APU using DDR3 memory, with power islands that allow the GPU and other components to be turned off, and for the PS4 using the Southbridge as an ARM block with its own DDR3 memory, with the APU and GDDR5 able to be "turned off" (put into self-refresh).

And before you jump on me, the above is what is supposed to happen but hasn't been implemented yet. The PS4 draws more power in video playback mode than the PS3. With the HTML5 <video> MSE EME enabled in the Southbridge, Sony will enable the media power mode, apps will drop in size, and three or four apps including video chat can be in memory at the same time. Since it uses the zero-memory-move embedded scheme, where the player software is always loaded and used by all apps, only registers, pointers and copies of stacks need to be kept in memory for each application.

The PS4 commercial WebMAF framework uses a 2 Megabyte Mono engine that calls Web browser native libraries and everything is loaded into memory at boot by the ARM block as a trusted boot.

Are you really saying the XB1 with DDR3 memory can't support HEVC with the Xtensa stream processors, when in June they announced both HEVC encode and decode?