New Leaks on AMD ZEN 2 and NAVI

- Thread starter gspat

- Start date

ZywyPL

Banned

4 core/4 thread CPUs are finished; they are going the way of the dual core dodo

The "more cores" posts in this thread are true...for like 2012 lol some of you guys are WAY outta the loop

I heard that almost 2 years ago with the Ryzen release, and guess what? Nothing has changed, absolutely nothing. The developers won't learn how to utilize more than 4 threads overnight; the sooner AMD fanboys (not meaning you here) realise that, the better for them. Let me put it this way - I can lock a Bugatti in a closed room without any door, and despite having 1000hp it will still do 0mph. Hardware is not the issue; 8-threaded i7s have been on the market for a looong time, it's the software that cannot keep up. It took the devs a decade to go from 2 threads to 4, and you really expect that to change all of a sudden? Firstly, the market has to be well saturated with 8+ threaded CPUs, and secondly, even then the games will still be written for the extremely weak Jaguar CPU, so we go back to the first point - people won't see any reason to buy expensive multicore CPUs when a simple 2C/4T CPU can run all the games at 60FPS perfectly. It's a closed loop, and it won't end until next-gen consoles show up, because then the software will finally be utilizing all those threads. Until then it's all show and no go.

joe_zazen

Member

I heard that almost 2 years ago with the Ryzen release, and guess what? Nothing has changed, absolutely nothing. The developers won't learn how to utilize more than 4 threads overnight; the sooner AMD fanboys (not meaning you here) realise that, the better for them. Let me put it this way - I can lock a Bugatti in a closed room without any door, and despite having 1000hp it will still do 0mph. Hardware is not the issue; 8-threaded i7s have been on the market for a looong time, it's the software that cannot keep up. It took the devs a decade to go from 2 threads to 4, and you really expect that to change all of a sudden? Firstly, the market has to be well saturated with 8+ threaded CPUs, and secondly, even then the games will still be written for the extremely weak Jaguar CPU, so we go back to the first point - people won't see any reason to buy expensive multicore CPUs when a simple 2C/4T CPU can run all the games at 60FPS perfectly. It's a closed loop, and it won't end until next-gen consoles show up, because then the software will finally be utilizing all those threads. Until then it's all show and no go.

The way progress has slowed down, you'll be able to get ten+ years out of your next enthusiast CPU. So if you need to build a PC and want to keep the base (CPU/mobo/mem) for a decade, why would you go 2C/4T?

ethomaz

Banned

I heard that almost 2 years ago with the Ryzen release, and guess what? Nothing has changed, absolutely nothing. The developers won't learn how to utilize more than 4 threads overnight; the sooner AMD fanboys (not meaning you here) realise that, the better for them. Let me put it this way - I can lock a Bugatti in a closed room without any door, and despite having 1000hp it will still do 0mph. Hardware is not the issue; 8-threaded i7s have been on the market for a looong time, it's the software that cannot keep up. It took the devs a decade to go from 2 threads to 4, and you really expect that to change all of a sudden? Firstly, the market has to be well saturated with 8+ threaded CPUs, and secondly, even then the games will still be written for the extremely weak Jaguar CPU, so we go back to the first point - people won't see any reason to buy expensive multicore CPUs when a simple 2C/4T CPU can run all the games at 60FPS perfectly. It's a closed loop, and it won't end until next-gen consoles show up, because then the software will finally be utilizing all those threads. Until then it's all show and no go.

It is not really about learning how to utilize more than 4 threads.

The fact is that parallelizing code is hard... a really hard task, and even then some tasks can't be parallelized at all.

It works better on a production OS where you open tons of programs that really utilize all these threads of your CPU, but a single program like a game is really hard to parallelize.

Shai-Tan

Banned

I heard that almost 2 years ago with the Ryzen release, and guess what? Nothing has changed, absolutely nothing. The developers won't learn how to utilize more than 4 threads overnight; the sooner AMD fanboys (not meaning you here) realise that, the better for them. Let me put it this way - I can lock a Bugatti in a closed room without any door, and despite having 1000hp it will still do 0mph. Hardware is not the issue; 8-threaded i7s have been on the market for a looong time, it's the software that cannot keep up. It took the devs a decade to go from 2 threads to 4, and you really expect that to change all of a sudden? Firstly, the market has to be well saturated with 8+ threaded CPUs, and secondly, even then the games will still be written for the extremely weak Jaguar CPU, so we go back to the first point - people won't see any reason to buy expensive multicore CPUs when a simple 2C/4T CPU can run all the games at 60FPS perfectly. It's a closed loop, and it won't end until next-gen consoles show up, because then the software will finally be utilizing all those threads. Until then it's all show and no go.

Only this year are we getting games that benefit from 6c/12t, but it's mostly constrained by what console CPUs are capable of, and right now the workloads in games can mostly be handled by a 4c/8t processor due to the weakness of the console CPUs (edit: 4c/4t ARE suffering performance problems in some recent games). There are games that benefit from 8 threads, as expected when consoles have many cores - it's just that the load is so low it doesn't matter much for more powerful PC processors.

PhoenixTank

Member

Are we really having the "4 cores is enough" argument again? If you're intending to build a gaming rig solely to get you through 2019 sure, but I don't see why you'd do that unless you are on a tight budget. No judging if that is the case. Nothing stopping someone from getting a decent mobo and cheap ryzen then dropping in a shiny 7nm CPU later on.

Intel have been sandbagging core counts in the mainstream CPUs for years now. AMD forced their hand with Zen and that is changing but not at a lightning pace.

Yes, it takes time for hardware and software adoption and of course it won't be overnight.

Modern engines are already scaling out pretty well on the extra cores for 6-8. I'm sure as hell not expecting 16 cores to be mainstream for a rather long time, but the reality is we're stalling on node shrinks (some fabs more than others). Can't chase the GHz dragon forever and the way to keep Moore's law going will be to scale out with smaller chips, like AMD is doing. If games are to get more complex and/or target higher framerates they will need to split out as best as they can to take advantage of it. Easier said than done. Of course, Amdahl's law applies and yes it will mean diminishing returns at certain points depending on the workload.
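For anyone who wants numbers behind the Amdahl's law point, here is a minimal Python sketch. The 70% parallel fraction is purely an assumed figure for illustration, not a measurement from any game or engine, and `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup from Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # ASSUMPTION: 70% of the per-frame work can run in parallel (illustrative only).
    assumed_parallel_fraction = 0.7
    for cores in (2, 4, 6, 8, 16):
        speedup = amdahl_speedup(assumed_parallel_fraction, cores)
        print(f"{cores:2d} cores -> at most {speedup:.2f}x")
```

As long as any part of the work stays serial, the speedup can never exceed 1 / (1 - p) no matter how many cores are added, which is where the diminishing returns come from.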

TeamGhobad

Banned

sounds fooking grrrrreeat

ZywyPL

Banned

The way progress has slowed down, you'll be able to get ten+ years out of your next enthusiast CPU. So if you need to build a PC and want to keep the base (CPU/mobo/mem) for a decade, why would you go 2C/4T?

While your theory is logical and damn tempting, it doesn't work that way I'm afraid - sure, you can get a 32-threaded Ryzen 3 when it arrives, but by the time those 32 threads are actually utilized, there will be newer, more up-to-date CPUs on the market that will utilize those threads waaay more efficiently. One of the very basic PC rules - you can't buy for the future, focus on the now. Of course, once in a decade we get stuff like the 2500K or GTX 970 that can last for a very, very long time, but those are exceptions to the rule. And yeah, people who went for the first i3 were set for a looong time; it was only last year that quad-core CPUs became pretty much mandatory. And those who bought a 2500K/2600K basically won the lottery; those CPUs will remain relevant for the next 3 years or so. Consoles rule the gaming market, whether someone likes it or not.

It is not really about learning how to utilize more than 4 threads.

The fact is that parallelizing code is hard... a really hard task, and even then some tasks can't be parallelized at all.

Exactly - there are jobs that are parallel by their nature, like encoding/decoding, compression/decompression, etc., and in those tasks Ryzen and Threadripper excel like crazy. But games aren't and never will be parallel computing, hence it took so long for the devs to start fully utilizing quad-core CPUs, even though they have been on the market for such a long time. And then again - there are tasks, like AI for example, that cannot be parallelized, so no matter how much the devs learn and want to, games will still be able to use only a limited number of threads (effectively).

Only this year are we getting games that benefit from 6c/12t, but it's mostly constrained by what console CPUs are capable of, and right now the workloads in games can mostly be handled by a 4c/8t processor due to the weakness of the console CPUs (edit: 4c/4t ARE suffering performance problems in some recent games). There are games that benefit from 8 threads, as expected when consoles have many cores - it's just that the load is so low it doesn't matter much for more powerful PC processors.

But then again, as statistics show, the vast majority of monitors are 1080p 60Hz, so a regular Joe doesn't care that he can get, let's say, 135FPS on an 8700K vs 105 on a 2500K, as he's already waaay above his refresh rate. As long as people can play at 60FPS they really don't care; almost nobody can afford to upgrade their hardware every other year, especially when there's no real need to do so.

That being said, as I think about it now - isn't AMD late to the party? I mean, almost everyone out there already has a rig that can handle 1080p60, 1440p144, 4K60, and whatnot, so what will the new Ryzen and Navi offer that people don't already have? Like I said, before next-gen consoles arrive the CPU market won't move an inch, while on the GPU side they will (again) try to catch up with Nvidia's previous generation. Aside from die-hard fanboys, people who needed a performance level above the 1060/580 have already bought it, so who's gonna buy their new lineup? I fear that Intel and Nvidia will go even crazier with their high-end product pricing, seeing no real threat in that gaming segment.

Omega Supreme Holopsicon

Member

I know it's not, but I really wish NAVI was a Serial Experiments Lain reference

It will likely be used in Apple computers, which I heard potentially inspired the Tachibana computers used in that series.

hououinkyouma00

Member

I know it's not, but I really wish NAVI was a Serial Experiments Lain reference

I fucking love you. Lain is my favorite anime of all time.

Fun fact: The computer voice in Lain is actually an old Apple-like text-to-speech voice.

Edit: Fucking auto correct...

Tenaciousmo

Member

How is it anywhere close to true when the roles have completely reversed? To refresh your memory: this meme represented an incompetent AMD whose only answer to Intel was adding more cores while neglecting other aspects.

Since then AMD has made major strides with the Zen arch; if anything the meme applies to Intel, who has been stagnant lately.

I am not saying they aren't being competitive right now, and I know what the meme used to represent, but they are still doing "more cores". This time they are hyperthreaded and more efficient cores, but it is still applicable literally, just not in what it used to mean in essence.

30yearsofhurt

Member

I'm in for "moar cores".

Flapples

Member

I fucking love you. Lain is my favorite anime of all time.

Fun fact: The computer voice in Lain is actually an old Apple-like text-to-speech voice.

Edit: Fucking auto correct...

Oh hell yeah, that show is filled to the brim with 90s tech references. Liked it so much I had to pick it up on Blu-ray.

see https://tvtropes.org/pmwiki/pmwiki.php/ShoutOut/SerialExperimentsLain

also sorry for going off topic OP

Tbone3336

Gold Member

Found this rumor regarding the next Xbox using Zen 2. Might be a bit OT here, but I found no other appropriate thread to share it in without starting a new one.

https://wccftech.com/xbox-scarlett-4k-60fps/

SonGoku

Member

I am not saying they aren't being competitive right now, and I know what the meme used to represent, but they are still doing "more cores". This time they are hyperthreaded and more efficient cores, but it is still applicable literally, just not in what it used to mean in essence.

Do you see the incompetent clown in the pic, no? You claim the meme is still applicable to AMD because of more cores; going by that logic Intel should be in the pic, because all they are doing lately is adding more cores. Hell, it could be used for every CPU manufacturer in the world: x86, PowerPC and ARM, since they are all adding MORE CORES as you put it, so what's the purpose of singling AMD out?

So I ask again: how is it still applicable when AMD is bringing more architecture changes and upgrades with each new release than Intel has in their last 5 arch releases? If anything, Intel's arch improvements are minimal and they are relying more aggressively on increased core counts and frequencies for their new releases than AMD is....

Do you not see the irony in any of that?

Ascend

Member

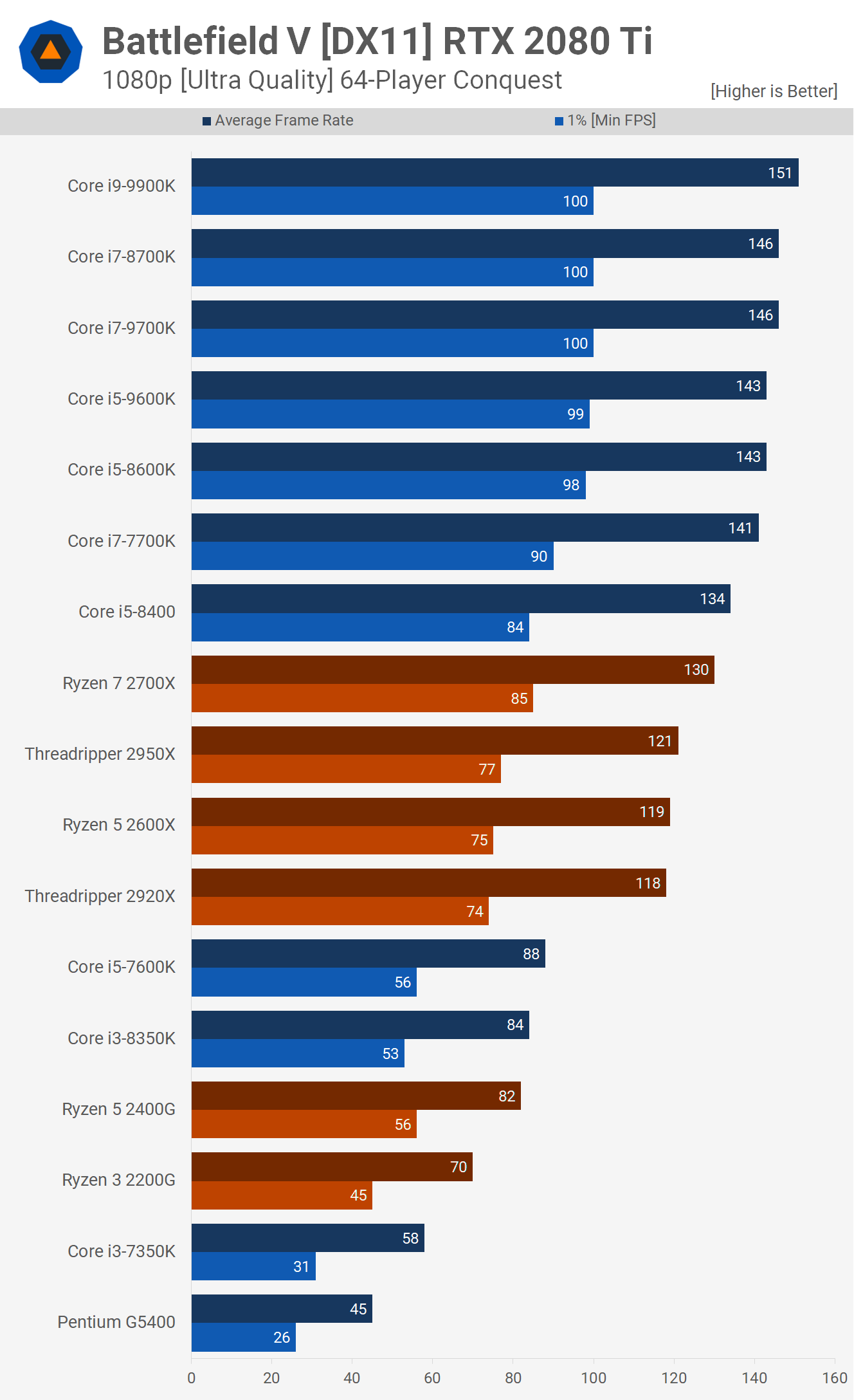

even then the games will still be written for the extremely weak Jaguar CPU, so we go back to the first point - people won't see any reason to buy expensive multicore CPUs when a simple 2C/4T CPU can run all the games at 60FPS perfectly.

Lol... I think you're a few years behind. Not even a 4c/4t is enough anymore for modern games, let alone 2c/4t... Look at the i5 7600k. It gets beaten by a lower clocked Ryzen 2600X in the example below... Right now, the minimum is 6 threads for optimal performance.

https://www.techspot.com/review/1754-battlefield-5-cpu-multiplayer-bench/

Aside from all that, all you say is irrelevant. The Ryzen CPUs already have higher IPC than Intel's CPUs. Their issue was their max clock speed, failing to go much beyond 4GHz while Intel can reach 5GHz. If these leaks are correct, they have caught up on clock speed. Not to mention the architectural improvements, where Intel has been stagnant for a while. This is not sacrificing single core performance for more cores. They improved both single core IPC and the number of cores.

ZywyPL

Banned

Lol... I think you're a few years behind. Not even a 4c/4t is enough anymore for modern games, let alone 2c/4t... Look at the i5 7600k. It gets beaten by a lower clocked Ryzen 2600X in the example below... Right now, the minimum is 6 threads for optimal performance.

You say that 4C/4T isn't enough anymore, only to post benchmark results where almost every single CPU reaches well above 60FPS (and that's even with irrelevant Ultra settings)... I will repeat once again - the average Joe doesn't care as long as he plays at 60FPS. Anything above that - be it 80, 100, 120 and so on - nobody with common sense cares about, and they won't throw a few hundred bucks at a new CPU/GPU/mobo when the games are already running perfectly fine.

Ascend

Member

You say that 4C/4T isn't enough anymore, only to post benchmark results where almost every single CPU reaches well above 60FPS (and that's even with irrelevant Ultra settings)... I will repeat once again - the average Joe doesn't care as long as he plays at 60FPS. Anything above that - be it 80, 100, 120 and so on - nobody with common sense cares about, and they won't throw a few hundred bucks at a new CPU/GPU/mobo when the games are already running perfectly fine.

4c/4t is incapable of minimums above 60 fps.

ZywyPL

Banned

4c/4t is incapable of minimums above 60 fps.

Nobody cares about minimums either, especially since in most cases those minimums are reached right after the loading screens, while the scene isn't yet generated and you have a black screen for a split second, and are never seen again during the actual gameplay.

Ascend

Member

Nobody cares about minimums either, especially since in most cases those minimums are reached right after the loading screens, while the scene isn't yet generated and you have a black screen for a split second, and are never seen again during the actual gameplay.

Minimums are an indication of smoothness. And there's a reason they picked 1% lows, rather than the absolute minimum. That is to account for that exact scenario you mention.

ZywyPL

Banned

Minimums are an indication of smoothness. And there's a reason they picked 1% lows, rather than the absolute minimum. That is to account for that exact scenario you mention.

And yet again - the average consumer couldn't care less. I understand it might be very hard/impossible for you to acknowledge that not everyone is a gaming enthusiast and that many can't or don't want to spend a fortune on a PC, but that's just the way things go. Ordinary people don't even go to the settings menu at all; they just launch the game and hit play immediately, play on default settings with whatever the framerate is, and - wait for it - enjoy it! The average Joe is not a typical gaming forum nerd who cares about numbers first and foremost; all he cares about is pure entertainment, and resolution, framerate, etc. have nothing to do with it. I mean - people play on $200-250 consoles at 900p 30FPS and fully enjoy the games, so people with pretty much ANY PC at this point have even fewer concerns regarding performance.

Ascend

Member

And yet again - the average consumer couldn't care less. I understand it might be very hard/impossible for you to acknowledge that not everyone is a gaming enthusiast and that many can't or don't want to spend a fortune on a PC, but that's just the way things go. Ordinary people don't even go to the settings menu at all; they just launch the game and hit play immediately, play on default settings with whatever the framerate is, and - wait for it - enjoy it! The average Joe is not a typical gaming forum nerd who cares about numbers first and foremost; all he cares about is pure entertainment, and resolution, framerate, etc. have nothing to do with it. I mean - people play on $200-250 consoles at 900p 30FPS and fully enjoy the games, so people with pretty much ANY PC at this point have even fewer concerns regarding performance.

Those people indeed buy consoles, and most likely wouldn't game on PC.

But... Let's assume you're right. Even if we say you're right, the 6 cores that Zen 2/Ryzen 3000 offers are the optimum for performance compared to any CPU that can handle only 4T. If the leak is accurate, of course.

ZywyPL

Banned

But... Let's assume you're right. Even if we say you're right, the 6 cores that Zen 2/Ryzen 3000 offers are the optimum for performance compared to any CPU that can handle only 4T. If the leak is accurate, of course.

But even so, going all the way back to my initial post - how many people will really feel the need to upgrade their current CPU (or GPU)? Especially with the rumored Zen2 + Navi based 4K60 consoles sitting around the corner? Ironically, I think AMD will cannibalize their own offering if the console specs turn out to be true.

PhoenixTank

Member

And yet again - the average consumer couldn't care less. I understand it might be very hard/impossible for you to acknowledge that not everyone is a gaming enthusiast and that many can't or don't want to spend a fortune on a PC, but that's just the way things go. Ordinary people don't even go to the settings menu at all; they just launch the game and hit play immediately, play on default settings with whatever the framerate is, and - wait for it - enjoy it! The average Joe is not a typical gaming forum nerd who cares about numbers first and foremost; all he cares about is pure entertainment, and resolution, framerate, etc. have nothing to do with it. I mean - people play on $200-250 consoles at 900p 30FPS and fully enjoy the games, so people with pretty much ANY PC at this point have even fewer concerns regarding performance.

So, your argument is that the lowest common denominator doesn't care even if they get 1% lows below 60FPS... so everything else doesn't matter?

Having lower standards means low end parts are acceptable to them. More thrilling news at 10pm? You're also forgetting the generally higher API overhead on PCs that consoles don't suffer from.

I tend to consider an average pc gamer to generally go for mid range parts i.e. the sweet spot for value and performance. I feel like those people would care if they knew their 1060 they paid good money for is being held back by a meh CPU.

But even so, going all the way back to my initial post - how many people will really feel the need to upgrade their current CPU (or GPU)? Especially with the rumored Zen2 + Navi based 4K60 consoles sitting around the corner? Ironically, I think AMD will cannibalize their own offering if the console specs turn out to be true.

The upgrade cycle exists. Not everyone is going to want to upgrade immediately. Some are due for upgrades, and sometimes parts die. You seem to be arguing that a lack of a massive sudden flood to upgrade means the whole thing is only worthy of our apathy. With all due respect, this is a ridiculous notion.

AMD won't give a damn if you forgo buying a Zen2 PC part to get one of the new consoles, they still win in either case.

If the consoles are a massive jump in power they're going to have the pricetag to match. Far more likely to see lower end parts with lower clockspeeds so they can hit palatable market prices, power budgets and fab yields.

ZywyPL

Banned

So, your argument is that the lowest common denominator doesn't care even if they get 1% lows below 60FPS... so everything else doesn't matter?

Having lower standards means low end parts are acceptable to them. More thrilling news at 10pm? You're also forgetting the generally higher API overhead on PCs that consoles don't suffer from.

That's actually correct. Again, you, Ascend, etc. forget/reject the fact that the vast majority of the gaming community doesn't visit gaming forums/sites AT ALL. All of us here (and on other forums combined) represent MAYBE 5% of the entire gaming population, yet you guys assume that all of the BILLIONS of PC users all over the world visit gaming sites on a daily basis; with such a belief we can't have any constructive conversation. The typical gamer doesn't know about such a thing as 1% lows, because, well, it's only 1%. To put it into perspective, within every 1h (3600s) of gameplay you get 36s of the lowest measured framerate, and that's not even a continuous 36s, rather half a second here, half a second there, so who with any common sense cares (or even notices it), when the remaining 99% runs at 80-100FPS? 99% vs 1%: if you ask anyone on the street which is more important you'll get a total of zero answers for the latter, guaranteed. The 1% (or even 0.1%) lows, frametimes, etc. only started to be measured recently; for the past 30 years or so every site used nothing but a simple average framerate to measure performance, and yet nobody had a problem with it. Nobody even noticed or cared what the lowest FPS of their games was; to be fair, people always cared about the max FPS. And then again, you act like 5xFPS for half a second is literally the end of the world; no mentally healthy person will ever think "holy cow my PC is so outdated! Time to upgrade!". Hell, you would be shocked (as I am myself) for how many people 40FPS is perfectly smooth... Most people don't use an FPS counter to begin with. They simply play games, not numbers.

I tend to consider an average pc gamer to generally go for mid range parts i.e. the sweet spot for value and performance. I feel like those people would care if they knew their 1060 they paid good money for is being held back by a meh CPU.

So again you're living under a false belief - i5/R5 + 1060/580 is (in the eyes of the average person) an expensive gaming PC; that's the enthusiast's go-to setup (when the money doesn't allow for more, of course), while the average Joe sits on an i3/R3 + 1050Ti/570 or below, and if you browse YT you would be surprised how well such rigs can handle 60FPS on Medium to High settings. And that's more than the majority of people will ever need. I mean - it's already twice what consoles offer, and costs barely more.

The upgrade cycle exists. Not everyone is going to want to upgrade immediately. Some are due for upgrades, and sometimes parts die. You seem to be arguing that a lack of a massive sudden flood to upgrade means the whole thing is only worthy of our apathy. With all due respect, this is a ridiculous notion.

AMD won't give a damn if you forgo buying a Zen2 PC part to get one of the new consoles, they still win in either case.

If the consoles are a massive jump in power they're going to have the pricetag to match. Far more likely to see lower end parts with lower clockspeeds so they can hit palatable market prices, power budgets and fab yields.

Sort of. AMD will be a winner anyway, I can't disagree, but bear in mind that margins on consoles are much, much lower compared to PC, where AMD already sits on just 3x%, so they will barely make any profit from consoles (as is already the case with PS4/XB1).

I could go on and on with the discussion, but I will end it right here. Time will eventually tell who was right, but seeing as both Ryzen and Polaris, as well as their refreshes, didn't storm the market at all, I see absolutely no reason to believe things will change with the upcoming hardware. As I said - they won't offer anything that hasn't already been on the market for quite some time.

PhoenixTank

Member

That's actually correct. Again, you, Ascend, etc. forget/reject the fact that the vast majority of the gaming community doesn't visit gaming forums/sites AT ALL. All of us here (and on other forums combined) represent MAYBE 5% of the entire gaming population, yet you guys assume that all of the BILLIONS of PC users all over the world visit gaming sites on a daily basis; with such a belief we can't have any constructive conversation. The typical gamer doesn't know about such a thing as 1% lows, because, well, it's only 1%. To put it into perspective, within every 1h (3600s) of gameplay you get 36s of the lowest measured framerate, and that's not even a continuous 36s, rather half a second here, half a second there, so who with any common sense cares (or even notices it), when the remaining 99% runs at 80-100FPS? 99% vs 1%: if you ask anyone on the street which is more important you'll get a total of zero answers for the latter, guaranteed. The 1% (or even 0.1%) lows, frametimes, etc. only started to be measured recently; for the past 30 years or so every site used nothing but a simple average framerate to measure performance, and yet nobody had a problem with it. Nobody even noticed or cared what the lowest FPS of their games was; to be fair, people always cared about the max FPS. And then again, you act like 5xFPS for half a second is literally the end of the world; no mentally healthy person will ever think "holy cow my PC is so outdated! Time to upgrade!". Hell, you would be shocked (as I am myself) for how many people 40FPS is perfectly smooth... Most people don't use an FPS counter to begin with. They simply play games, not numbers.

I'm well aware of the niche status and assume no such thing. I haven't clubbed you in with "the unwashed masses" or anything similarly asinine (I don't think like that) so avoiding the hyperbole would be nice. I do not think FPS in the 50s is "literally the end of the world". I surely prefer 60+ but smoothness is key.

You seem to be arguing with a caricature of the PCMR subreddit. That isn't me.

If anything your definition of pc gamers seems so much wider than mine to the point of including every single person that has a PC and occasionally plays a game on it. Mine does not.

You're neglecting the other side of the coin on upgrades. If an average Joe PC is generally running slowly and getting in the way of use they will most often:

- Take it to a repair shop

- Just put up with it

- Outright buy a new one, even if the hardware is not at fault.

Unfortunately you're sorely missing the point. These 1%, 0.1% lows help to better quantify the experience. Having many low points far lower than average means a janky experience - not unplayable but janky. Whether they keep up with figures or not, jank is noticeable. It makes for a bad UX. People don't like it in web browsers and they don't like it in games. Can they live with it? Of course they can but given the choice what do you think they'd prefer?
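To make the average-versus-lows point concrete, here is a rough Python sketch. It assumes one common definition of the "1% low" (the average frame time of the slowest 1% of frames, converted back to FPS); review sites differ on the exact method, and the frame times below are made up for illustration, not taken from any benchmark. The function names are hypothetical.

```python
def average_fps(frame_times_ms):
    """Average FPS over the whole capture: frames rendered per second of wall time."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """FPS equivalent of the mean frame time of the slowest 1% of frames.

    ASSUMPTION: this is only one of several definitions used by reviewers.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # at least one frame
    slowest = slowest_first[:count]
    return 1000.0 / (sum(slowest) / len(slowest))


if __name__ == "__main__":
    # Made-up capture: 990 smooth frames at 10 ms plus 10 hitches at 40 ms.
    capture = [10.0] * 990 + [40.0] * 10
    print(f"average: {average_fps(capture):.1f} FPS")
    print(f"1% low:  {one_percent_low_fps(capture):.1f} FPS")
```

In this invented capture the average barely moves because of the hitches, while the 1% low drops far below it, which is the gap those figures are meant to expose.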

So again you're living under a false belief - i5/R5 + 1060/580 is (in the eyes of the average person) an expensive gaming PC; that's the enthusiast's go-to setup (when the money doesn't allow for more, of course), while the average Joe sits on an i3/R3 + 1050Ti/570 or below, and if you browse YT you would be surprised how well such rigs can handle 60FPS on Medium to High settings. And that's more than the majority of people will ever need. I mean - it's already twice what consoles offer, and costs barely more.

Looking at the Steam hardware survey, a 1060 is still the most popular card by a few solid %, followed, yes, by a 1050Ti, a 1050, then a 1070.

In the AMD camp there are more 480s and 580s than 570s... and no sign of a 470 these days.

Volume is at the mid range, and thus the average.

If your definition of pc gaming is much much wider than Steam's trap net then of course we're going to disagree. For that OEM segment they might just put in a 3300G and call it a day. Or something in the Athlon branding but the 3300G might be beefy enough all round that they don't bother with a low end graphics card but I can't know - Navi's performance is much less of a known quantity than Zen2. It should, however, curb stomp Intel HD graphics.

Sort of. AMD will be a winner anyway, I can't disagree, but bear in mind that margins on consoles are much, much lower compared to PC, where AMD already sits on just 3x%, so they will barely make any profit from consoles (as is already the case with PS4/XB1).

Enough revenue to keep them alive even if it isn't a king's ransom. If lower binned/non-perfect Zen PC silicon ends up in consoles they are going to be enjoying gravy there. Having a good use for "bad" silicon is a big efficiency improvement over pumping out custom Jaguars specifically for the consoles.

I could go on and on with the discussion, but I will end it right here. Time will eventually tell who was right, but seeing as both Ryzen and Polaris, as well as their refreshes, didn't storm the market at all, I see absolutely no reason to believe things will change with the upcoming hardware. As I said - they won't offer anything that hasn't already been on the market for quite some time.

I make no assumptions nor guarantees on whether AMD will make real headway into the market. They've had the best parts in the past and not received the share that should command. It is what it is.

I do however strongly see 6+ cores being more and more common. Intel has not been offering a mainstream 6+ core part for very long and market penetration takes time. It will become the baseline assuming the amount people want to pay remains constant.

DemonCleaner

Member

PS5 and Scarlett could really be intriguing...

95W Zen2 8 core chiplet

75W Navi chiplet

The big power use items are only 170W!

Also, since they aren't limited to the AM4 socket size due to it being custom, these things could really be beasts.

they won't waste 95w on the CPU. it will be a 8core/16thread CPU but it will be underclocked and undervolted enough, that they can use the bulk of available power for the GPU. more like 120W+ to the GPU and 50W at most for the CPU.

THE:MILKMAN

Member

they won't waste 95w on the CPU. it will be a 8core/16thread CPU but it will be underclocked and undervolted enough, that they can use the bulk of available power for the GPU. more like 120W+ to the GPU and 50W at most for the CPU.

Even this is crazy talk. 170W is the higher end of what I expect the whole console to use at the wall. The CPU+GPU using that alone is just silly and isn't happening IMO.

Whether a chiplet design or a monolithic APU, the combined CPU/GPU won't use much more than 100W. Add in all the other bits like RAM/UHD/WiFi/BT/HDD/FAN and secondary chips/RAM and the total will soon head toward 170W....

DemonCleaner

Member

well, while i think 200W at the wall would be viable, you're probably right. i just used his numbers to make it easier to understand.

so let's do the math for a power target of 170W at the wall: you have PSU efficiency of about 85%. that would leave you with about 145W at the rail. you'll need around 25W for auxiliaries (drives, mb, fans). like 15w for GDDR6. so that leaves you with roughly 100W for GPU and CPU. in that case they would never in hell use more than 30W on the CPU.

that said that should be more than sufficient to run most advanced game simulation at 60hz. 70W for the GPU part would be kinda shitty though.
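For anyone who wants to play with those numbers, the budget above can be sketched in a few lines of Python. The function and parameter names are made up for illustration, and every input is the poster's estimate rather than a measurement. Computed without rounding, the result lands slightly above the figures in the post: about 104.5W for CPU+GPU, and about 74.5W for the GPU if the CPU takes 30W.

```python
# Rough console power budget using the estimates from the post above.
# None of these inputs are measured values; they are forum guesses.

def power_budget(wall_w, psu_efficiency, aux_w, memory_w, cpu_w):
    """Return (rail_w, soc_w, gpu_w) for a given at-the-wall target."""
    rail_w = wall_w * psu_efficiency      # power left after PSU losses
    soc_w = rail_w - aux_w - memory_w     # what CPU and GPU can share
    gpu_w = soc_w - cpu_w                 # remainder goes to the GPU
    return rail_w, soc_w, gpu_w


# 170W at the wall, 85% PSU efficiency, 25W auxiliaries, 15W GDDR6, 30W CPU
rail, soc, gpu = power_budget(170, 0.85, 25, 15, 30)
print(f"rail: {rail:.1f} W, CPU+GPU: {soc:.1f} W, GPU: {gpu:.1f} W")
```

Changing `wall_w` to 200 shows how much of any extra headroom would go straight to the GPU under the same assumptions.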

MS already said, they won't let sony get away with a power advantage again. considering that we won't see a major leap in silicon for at least the next three years, i think MS would be hard pressed to get as much out of 7nm navi as possible and therefore will go with a higher power budget even if that means more cost for PSU and cooling. im not fully convinced that sony won't cheap out on that front, after last gen's success, but i sure hope they won't.

ethomaz

Banned

Zen for APU won’t be like Zen for desktop so any TDP comparison is useless.

APU Chips are more in line with mobile and have features cut off... I will be very surprised if SMT is implemented in APUs for consoles.

The Zen2 used in APUs is called Renoir or Dali... not Ryzen or Matisse like the Desktop version.

Coulomb_Barrier

Member

Zen for APU won’t be like Zen for desktop so any TDP comparison is useless.

APU Chips are more in line with mobile and have features cut off... I will be very surprised if SMT is implemented in APUs for consoles.

The Zen2 used in APUs is called Renoir or Dali... not Ryzen or Matisse like the Desktop version.

Yes people here are mistaken because it's not Navi in PS5 it's called Arcturus, basically Navi customised from the ground up.

Panajev2001a

GAF's Pleasant Genius

Zen for APU won’t be like Zen for desktop so any TDP comparison is useless.

APU Chips are more in line with mobile and have features cut off... I will be very surprised if SMT is implemented in APUs for consoles.

The Zen2 used in APUs is called Renoir or Dali... not Ryzen or Matisse like the Desktop version.

Maybe, but SMT is about 5-6% die area cost and when the software is designed around it the efficiency gains more than pay for it.

ZywyPL

Banned

I think many people have no idea how HT/SMT actually works. Long story short - it's not a fully functional thread that can do whatever the developer needs at any given moment. And that's the reason I am 99,99% sure we won't see it in any gaming console, ever. The optimization would be practically impossible, or the games would need to be developed 2-4x longer than they already are to use the multi-threading to at least some extent. Given the IPC and clock speeds Ryzen has compared to Jaguar, we are talking about 4-5x processing power with the same core count, think about it like the PS5/XB2 would have a 32-40 core Jaguar. Really, those 12 threads (or even 12 cores as some suggest) are completely unnecessary, especially since they wouldn't come for free.

The original PS4, while playing a game at 1080p, drew approximately 148W per Digital Foundry's testing, but the refined PS4 Pro/PS4 Slim drew 155W (4K) and 80W (1080p) respectively. I probably wouldn't expect any more than 150-160W @ 4K on 7nm.

Even this is crazy talk. 170W is the higher end of what I expect the whole console to use at the wall. The CPU+GPU using that alone is just silly and isn't happening IMO.

Whether a chiplet design or a monolithic APU, the combined CPU/GPU won't use much more than 100W. Add in all the other bits like RAM/UHD/WiFi/BT/HDD/FAN and secondary chips/RAM and the total will soon head toward 170W....

Aintitcool

Banned

PS5 will definitely have more than 8 cores.

Armorian

Banned

PS5 will definitely have more than 8 cores.

It won't, maybe 16 threads.

ZywyPL

Banned

PS5 will definitely have more than 8 cores.

If PS5 is going fully into VR as the rumors say, then it's the GPU that's going to be the biggest concern, not the CPU.

Mecha Meow

Member

If that 3600X turns out to be true, I'll snag one later when it eventually goes on sale and replace my 2600X.

Zen is the first cpu series in 20 years where I bought a couple of incremental upgrades for funsies.

1600

1700 (got it for $50 so why not)

2600X

2200G (just to mess around with)

DemonCleaner

Member

Smt takes up quite a bit of tdp, at least on my oc 5960x. When you look at the 9900k situation, it is still sucking up loads of tdp. I do not expect ps5 to use smt, which is a good thing.

sorry that's just wrong. if you control for work done, SMT saves power and doesn't increase it. it prevents transistors from sitting through idle cycles while still having to be kept powered (which equals power draw without payoff). if that wasn't the case SMT wouldn't make any sense at all.

DemonCleaner

Member

Yes people here are mistaken because it's not Navi in PS5 it's called Arcturus, basically Navi customised from the ground up.

sony silicon will have a customized feature set, no question. but the general power characteristics will still be those of Navi and 7nm Zen2.

I think they'll be needing to find a happy medium because VR requires a high framerate and it requires two different images for each eye. I'd personally be concerned about both.

If PS5 is going fully into VR as the rumors say, then it's the GPU that's going to be the biggest concern, not the CPU.

ZywyPL

Banned

I think they'll be needing to find a happy medium because VR requires a high framerate and it requires two different images for each eye. I'd personally be concerned about both.

It just popped up in my head - checkerboard rendering to the rescue? They can render two images at a mere 720-900p at 90-120FPS, and then upscale the whole thing to 1440-1800p. They can also run it at just 60FPS and interpolate to 120, like PSVR already does. Both of those techniques combined would make VR not as demanding as "brute force" rendering.
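To put a rough number on that, here is a small Python sketch comparing raw pixel throughput for two eye images at 720p versus 1440p. It assumes 16:9 frames (1280x720 and 2560x1440) and only counts pixels; the helper name is made up, and the cost of the reconstruction/upscale pass itself is ignored.

```python
# Raw pixel throughput for stereo rendering, as a back-of-envelope comparison.
# Assumes 16:9 frames and ignores the cost of upscaling or reconstruction.

def pixels_per_second(width, height, fps, eyes=2):
    """Pixels that must be shaded per second for `eyes` images per frame."""
    return width * height * fps * eyes


low = pixels_per_second(1280, 720, 90)       # render target from the post
native = pixels_per_second(2560, 1440, 90)   # "brute force" at output resolution
ratio = native / low
print(f"low: {low:,} px/s, native: {native:,} px/s, ratio: {ratio:.1f}x")
```

Doubling both dimensions quadruples the pixel count, so rendering at 720p and upscaling to 1440p shades a quarter of the pixels that native rendering would at the same framerate.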

Definitely. Digital Foundry made a good argument about checkerboard rendering. I think it'll be common along with dynamic resolutions next generation.

It just popped up in my head - checkerboard rendering to the rescue? They can render two images at a mere 720-900p at 90-120FPS, and then upscale the whole thing to 1440-1800p. They can also run it at just 60FPS and interpolate to 120, like PSVR already does. Both of those techniques combined would make VR not as demanding as "brute force" rendering.

Panajev2001a

GAF's Pleasant Genius

I think many people have no idea how HT/SMT actually works. Long story short - it's not a fully functional thread that can do whatever the developer needs at any given moment. And that's the reason I am 99,99% sure we won't see it in any gaming console, ever. The optimization would be practically impossible, or the games would need to be developed 2-4x longer than they already are to use the multi-threading to at least some extent. Given the IPC and clock speeds Ryzen has compared to Jaguar, we are talking about 4-5x processing power with the same core count, think about it like the PS5/XB2 would have a 32-40 core Jaguar. Really, those 12 threads (or even 12 cores as some suggest) are completely unnecessary, especially since they wouldn't come for free.

There are some issues because you are either over-supplying HW resources that will go unused or that do not pay for themselves. HT/SMT requires some duplication of resources (it can increase contention to nasty levels if you do not balance the HW thread count against physical registers and caches, for example), but besides that it reuses existing HW resources, hence the 5-6% resource tax.

The essence of SMT is to maximise execution units utilisation: you have say three ALU’s, a scalar FPU, a vector unit, a few load and store units, etc... and the current thread does not have enough instruction level parallelism to keep a wide core like this fed (even looking ahead not enough non dependent work able to be started)? Why not “dispatch” instructions from other (full, versatile) threads?
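A toy model of that idea, in Python: a core can start a fixed number of instructions per cycle, each hardware thread can only offer so many independent instructions, and SMT lets a second thread fill the slots the first one leaves empty. The function name and the numbers are invented for illustration, and the model deliberately ignores the cache and register contention mentioned above.

```python
# Toy model of issue-slot utilisation with and without SMT.
# Ignores cache/register contention, so it shows the best case only.

def issue_slot_utilisation(issue_width, per_thread_ilp):
    """Fraction of issue slots filled per cycle.

    issue_width: instructions the core can start per cycle.
    per_thread_ilp: for each hardware thread, how many independent
        instructions it can offer per cycle.
    """
    offered = sum(per_thread_ilp)
    return min(offered, issue_width) / issue_width


one_thread = issue_slot_utilisation(6, [2])      # a single thread with low ILP
two_threads = issue_slot_utilisation(6, [2, 2])  # a second thread fills idle slots
print(f"1 thread: {one_thread:.0%}, 2 threads (SMT): {two_threads:.0%}")
```

If a single thread already offers enough independent work to fill the core (for example `[6]`), the second thread adds nothing in this model, which matches the point that heavily optimised code benefits less from SMT.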

These cores are pretty wide on the execution side of things so SMT could be useful, but yes, some first party developers already optimise their software to the point that it may be a bit less useful, and techniques such as software managed threads / fibers would make SMT a bit less effective.

ethomaz

Banned

It mostly decreases performance in games rather than helping... it is a console for games after all.

Maybe, but SMT is about 5-6% die area cost and when the software is designed around it the efficiency gains more than pay for it.

I can just post a bunch of recent releases where it is better to turn SMT off for better performance.

But that is not my only point: this gen devs praised how easy PS4 was to develop for, so why add something like SMT that will make it more difficult to optimize???

I’m 99% sure that a console APU won’t have SMT... SMT is awesome for workstations that run a lot of apps in parallel, not consoles whose main focus is to run a single game.