CyberPanda

Old, but relevant I think.

PIX 1803.16-raytracing – DirectX Raytracing support

Brian

March 19th, 2018

Today we released PIX-1803.16-raytracing which adds experimental support for DirectX Raytracing (DXR).

As just announced at GDC this morning, Microsoft is adding support for hardware-accelerated raytracing to DirectX 12. With this release, PIX on Windows supports DXR rendering, so you can start experimenting with this exciting new feature right away. Please follow the setup guidelines in the documentation to get started.

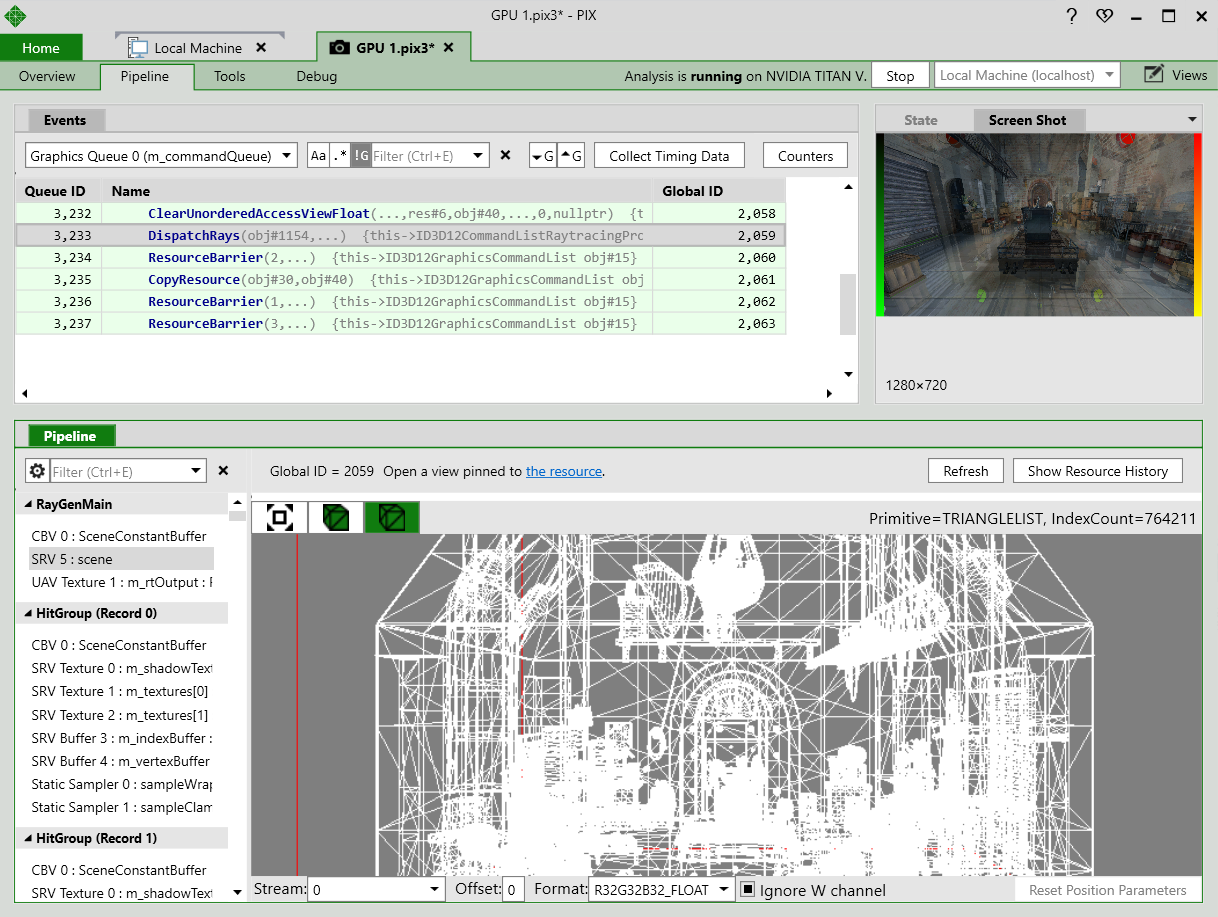

PIX on Windows supports capturing and analyzing frames rendered using DXR, so you can start debugging and improving your raytracing code. DXR support is seamlessly integrated with the features of PIX on Windows you already know and use for your D3D12 titles. Specifically, PIX allows you to inspect the following properties of your raytracing rendering:

Raytracing is an upcoming Windows 10 feature and a new paradigm for both DirectX and PIX on Windows and consequently we plan to evolve PIX on Windows significantly in this area based on input from developers. Please see the documentation for details on limitations of this initial release and use the feedback option to tell us about your experience with using PIX on Windows with your raytracing code.

- The Events view shows new API calls for DXR such as DispatchRays and BuildRaytracingAccelerationStructure.

- The Event Details view provides additional insight into each of the DXR API calls.

- The Pipeline view shows the resources used for raytracing such as buffers and textures as well as visualization of acceleration structures.

- The API Object view shows DXR API state objects to help you understand the setup of your raytracing.

Please note: This is an experimental release dedicated to support the upcoming DXR features in DirectX 12. We recommend using the latest regular release for non-DXR related work.

Inspecting DirectX Raytracing rendering in PIX on Windows

Power matters to people. This is an interesting article if you want to read it. Within our gamer bubble, things like this seem important, but console sales over the 2020 holiday season are not going to be affected by this sort of thing.

As things stand, on the verge of a new generation, there is no doubt that Sony are in pole position. They have sold at least double the number of consoles in the current gen and have a raft of top-notch AAA exclusive titles ready to drop over the last year of the current gen and the first year(s) of the next. They have all the momentum; the onus is on Microsoft to show their hand first.

I have no doubt that Sony will keep their powder dry until Microsoft have announced.

No Stranger to the (Video) Game: Most Eighth Generation Gamers Have Previously Owned Consoles
