I really don't think it's "green FUD" at all.

If all that AMD can do is simply almost match Nvidia (but not quite in power efficiency, not quite in features, and then not competing in the high end) years later... why should I bother?

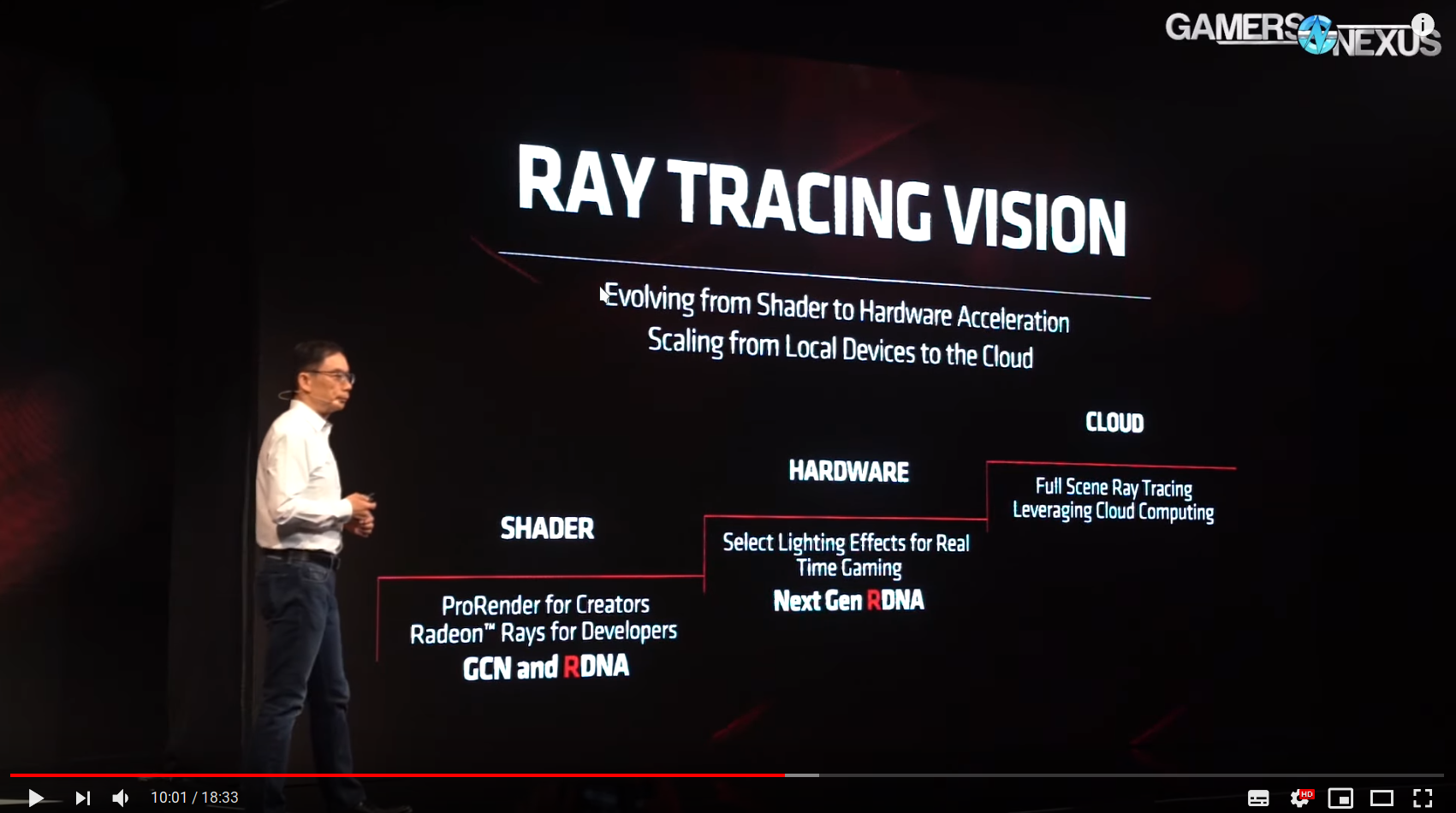

It's always a lose-lose for AMD. If they add features that nVidia has, they're copying, and if they don't, they lack features. They added FidelityFX, which is basically a superior DLSS, but the only point that is relevant is ray tracing apparently, even though no one has seen its relevance since launch. Suddenly now ray tracing is the biggest, most important feature ever. Lower input lag across all games? Nah, we don't need that! We need ray tracing!

I just don't understand how any of these Navi GPUs make any sense. If I wanted a 2060 or 2070 I could have already bought one. I could have already had those levels of performance for years on the Nvidia side.

And if these NAVI GPUs do have any advantage (either in price or performance) Nvidia will shortly erase it with either price cuts, a refresh or both.

The only AMD GPU that makes any sense is the 570 (the 570 not the 5700)... nothing else.

And yet everyone buys the more expensive 1050 Ti and GTX 1650 over it. The lower-price, better-performance approach has not worked for them on multiple occasions. Why would they lower prices this time then, if people will still flock to nVidia anyway? They might as well simply keep their prices up to gain money from the small market that does see value in their products.

I'm baffled why AMD is only releasing these mid-range NAVI cards this year. RIGHT NOW they have SOME advantage by being first at 7nm.

Is it not possible for them to release an 80CU Navi GPU that can compete with or even beat a 2080 Ti? Even if it consumes an ABSURD amount of electricity and has no raytracing, just DO IT AMD. Hold that performance crown again even if it's only for a short time.

Yeah... How did that turn out with the 290X? It gained them nothing. They had the flagship product for over 6 months, and yet, they still didn't succeed.

So what? "Wait for Big Navi next year"? By then Nvidia will be on 7nm too and take another giant leap forward and the gap between them will increase again. Opportunity lost.

You mean opportunity lost for them to lower nVidia prices so everyone goes out to buy their competition? Yeah. Great opportunity lost...

AMD is not close to Nvidia and if you think they are you haven't really thought about it.

Oh they are close. The whole node talk is yet another smokescreen to put AMD in a negative light. If these Navi cards were nVidia's, everyone would be praising them for beating AMD's and charging less. Power consumption wouldn't be an issue at all. It's only an issue when it happens to AMD.

Additionally, the lack of ray tracing would be irrelevant too if the roles were reversed. How do I know? I saw no one skipping nVidia for the lack of async compute. I saw no one skipping nVidia for not having FreeSync at the time. I see no one skipping nVidia for having no Radeon Chill equivalent. And right now, the focus is only on exactly what nVidia has and AMD doesn't, not the opposite. Even though the features that AMD has are arguably way more useful than the one nVidia does have...