
- Status

- Not open for further replies.

Lort

Banned

You can make your own prediction; feel free to put Zen 3, dGPU, deep pockets, more balanced... etc.

Just leave my prediction alone

Lol, I didn't mean to cause you offense, I was just teasing a little... I think you're probably going to be close... along with the other additions I just made.

As I already predicted in the other thread about Scarlett, the CPU side is the only thing that makes sense in the context of the statement "4x the processing power of Xbox One X".

They were really vague in that marketing video, and I'm starting to question whether the GPU power leap is going to be as significant as some predict. Judging by the Navi reveal, an APU with a 12 TF GPU seems like a stretch. Remember how GPU TFLOPS were a huge part of their marketing last gen?
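For a sense of scale, here is a rough sketch of the usual peak-FLOPS arithmetic applied to a literal GPU reading of the 4x claim. It assumes 64 shader lanes per CU and one fused multiply-add (2 FLOPs) per lane per clock, and uses the Xbox One X GPU's published 40 CUs at 1172 MHz; the function name is just for illustration.

```python
def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming 64 lanes per CU and 2 FLOPs (one FMA) per lane per clock."""
    flops_per_clock = compute_units * 64 * 2
    return flops_per_clock * clock_mhz * 1e6 / 1e12


one_x = peak_tflops(40, 1172)  # Xbox One X GPU: 40 CUs at 1172 MHz
print(f"Xbox One X GPU: {one_x:.2f} TF")
print(f"4x Xbox One X GPU: {4 * one_x:.2f} TF")  # what a GPU-only reading of "4x" would imply
```

A literal 4x on the GPU side would mean roughly 24 TF, double the 12 TF that already looks like a stretch, which is why the CPU reading is the more plausible one.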


Aceofspades

Banned

It will be all about ray tracing ...

Ray-tracing applications on next-gen consoles will be limited; we shouldn't expect anything big in that regard. It's still a very compute-expensive technique: even a 2080 Ti has to make huge resolution and fps sacrifices to render ray-traced scenes.
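To put the cost in numbers, here is a back-of-the-envelope count of rays per second. It's a sketch under a simplifying assumption: a fixed number of rays per pixel (one here, e.g. a single shadow or reflection ray), with no bounces or denoising counted. Real RT workloads vary a lot.

```python
def rays_per_second(width: int, height: int, fps: int, rays_per_pixel: float) -> float:
    """Rays traced per second for a fixed per-pixel ray budget (no bounces counted)."""
    return width * height * fps * rays_per_pixel


modes = {"4K30": (3840, 2160, 30), "1080p60": (1920, 1080, 60)}
for name, (w, h, fps) in modes.items():
    millions = rays_per_second(w, h, fps, rays_per_pixel=1) / 1e6
    print(f"{name}: {millions:.1f} million rays/s at 1 ray per pixel")
```

Even a single ray per pixel at 4K30 works out to about 249 million rays per second, twice the 1080p60 figure, and every extra ray per pixel multiplies that again.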

Ellery

Member

Didn't some reliable person on Twitter confirm both consoles will definitely have more than Stadia's TFs?

I think they are aiming for it. I mean, maybe AMD just gave us the bottom-of-the-barrel cards this year, and the chips inside the next-gen consoles are based on different GPUs with more CUs.

Double digits is such a nice psychological line that, if I were in charge, I would definitely want to break it. 10 TF sounds so much better than 9.2 TF.

We will see what time brings us. I am not happy at all with what I have seen from the AMD GPU side yesterday. Small 7nm chips with only 8GB GDDR6 RAM for a very high price.

That heavily influences my perception of it, because I wonder where the bigger chips are and why the small 5700 chips are so "expensive".

There's also the complete lack of RT hardware, even though we have confirmation that the next-gen consoles will have RT hardware.

So the question is: will the PS5 have something like a 5700 XT chip with slightly lower clocks plus additional custom RT hardware, or will we get a different GPU that hasn't been announced yet?

Edit: There is still so much room for cards with more than 40 CUs, and for names above 5700 like 5800, 5800 XT/Pro, 5900, 5900 XT/Pro, etc. I have absolutely no clue why AMD wouldn't release a 56-64 CU Navi card. Maybe the yields are that bad? I have no idea.
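Why CU count matters for that 10 TF line: inverting the peak-FLOPS formula gives the clock a chip would need to reach it. A sketch, assuming 64 lanes per CU and 2 FLOPs per lane per clock; the CU counts are the ones discussed in this post, not known console specs.

```python
def clock_for_tflops(target_tflops: float, compute_units: int) -> float:
    """Clock in MHz needed to hit a peak FP32 target (64 lanes x 2 FLOPs per CU per clock)."""
    flops_per_clock = compute_units * 64 * 2
    return target_tflops * 1e12 / flops_per_clock / 1e6


for cus in (36, 40, 56, 64):
    print(f"{cus} CUs: {clock_for_tflops(10.0, cus):.0f} MHz for 10 TF")
```

A 36 or 40 CU chip needs about 1.95 to 2.17 GHz to reach 10 TF, while a 56-64 CU chip gets there at about 1.22 to 1.40 GHz, a much more console-friendly clock.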


TLZ

Banned

What if they're leaving them for next year? I'm not sure if it works that way.

They were pretty clear about the 5700 XT being a mid-range card and not some bottom-of-the-barrel card. Besides, AMD only managed to match Nvidia's 12nm RTX cards in power efficiency on paper while being on the 7nm node. That doesn't bode well for larger chips in the console. A 200W+ GPU in a console? Doubtful.

Negotiator

Member

Why would you argue that, when Nvidia has been doing the same thing since the Maxwell era and everyone says they're more "efficient"?

9 TF Navi beats the 12.6 TF Vega 64, as we see in AMD's official slides.

I'd argue against the word "efficient" though. AMD simply shifted resources from raw computing power into other beef.
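The disagreement is really about performance per TFLOP. Taking the slide at face value, here is a quick sketch of that ratio; equal performance is used as a lower bound, since no margin for "beats" is given here.

```python
def perf_per_tflop_gain(tflops_new: float, tflops_old: float, relative_perf: float = 1.0) -> float:
    """How much more performance per TFLOP the new card gets than the old one.

    relative_perf is the new card's performance divided by the old card's (1.0 = a tie).
    """
    return relative_perf * tflops_old / tflops_new


gain = perf_per_tflop_gain(9.0, 12.6)  # 9 TF Navi vs 12.6 TF Vega 64, assuming a tie
print(f"Navi gets at least {gain:.2f}x the performance per TFLOP of Vega 64")
```

Whether that counts as "efficiency" depends on which denominator you care about: TFLOPS, watts, or die area.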

PowerVR actually has a more advanced RT solution (they accelerate more things in hardware) than Nvidia:

Maybe AMD/Sony/MS could license that. Why not?

Personally I expect two modes for next-gen consoles: 4K30 with RT on vs 1080p60 with RT off. Cinematic eye candy vs fluid framerates.

Most likely. We're currently 18 months away, and we know for a fact that Anaconda will have a monstrous monolithic APU die.

Ellery

Member

What if they're leaving them for next year? I'm not sure if it works that way.

Yeah, they are most definitely going to release bigger cards, but I don't understand why they aren't doing it now. The only two reasons I can think of are the Radeon VII (the 16GB HBM2 Vega card) and the yield problems on 7nm.

The RX 5700 XT feels as close to the Radeon VII as they dared to go. In benchmarks I'd guess it's something like 5-10% above the RTX 2070 and maybe 10% below the Radeon VII.

If they had an RX 5800 with around 50 CUs (or more), it would already beat the Radeon VII.
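A rough way to check that last claim is to scale the post's own estimate (5700 XT about 10% below the Radeon VII) by CU count. This sketch assumes equal clocks and treats scaling efficiency as a free parameter; the 70% value is purely hypothetical, since real GPUs rarely scale linearly with CUs because of bandwidth and front-end limits.

```python
def scaled_relative_perf(base_rel_perf: float, base_cus: int, new_cus: int,
                         efficiency: float = 1.0) -> float:
    """Estimate a wider chip's performance relative to a reference card.

    base_rel_perf: the base chip's performance relative to the reference (0.9 = 10% slower).
    efficiency: fraction of the extra CUs that turns into real speedup (1.0 = perfect scaling).
    """
    extra = (new_cus / base_cus - 1.0) * efficiency
    return base_rel_perf * (1.0 + extra)


for eff in (1.0, 0.7):  # 0.7 is a hypothetical, not a measured, scaling efficiency
    rel = scaled_relative_perf(0.9, 40, 50, eff)
    print(f"50 CUs at {eff:.0%} scaling: {rel:.3f}x Radeon VII")
```

With perfect scaling a 50 CU part lands about 12.5% ahead of the Radeon VII; even at the hypothetical 70% efficiency it still comes out around 6% ahead.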

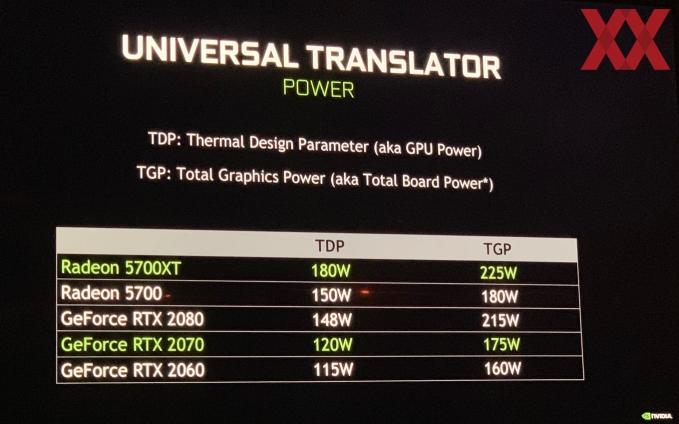

They were pretty clear about the 5700 XT being a mid-range card and not some bottom-of-the-barrel card. Besides, AMD only managed to match Nvidia's 12nm RTX cards in power efficiency while being on the 7nm node. That doesn't bode well for larger chips in the console. A 200W+ GPU in a console? Doubtful.

Well yeah, AMD might call them that and that's fine, but for me it's the bottom of the barrel in terms of what I think is worth buying.

The RX 5700 has roughly the same die size as the RX 480 (232mm²) and the same number of compute units (36 CUs).

It even has the same amount of VRAM (8GB).

The RX 480 released for $229 three years ago.

There is so much room left to go higher. I'm just not happy with what I see: this kind of performance for a $450 card in 2019, plus the lack of certain features.

For me this is the bottom of the barrel, because I can't go any lower. If I go lower, the performance isn't even enough to drive my monitor.

For AMD this might not be the bottom of the barrel, because they could give us 20 CU cards called RX 5500 that are basically good enough for Minesweeper and an HDMI output.

I just don't really understand this product. The only reason we have it at $450 is that the RTX 2070 is way overpriced and AMD thinks it's a premium brand now.

Honestly, if Nvidia had a GTX equivalent of the RTX 2070, the 5700 XT would probably be a $349 card, tops.
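One crude way to put numbers on the "bottom of the barrel" complaint is launch price per compute unit. In this sketch the RX 480 price is the one quoted above, the RX 5700/5700 XT prices are AMD's announced $379/$449, and the $349 row is the post's hypothetical. It ignores inflation and the fact that a Navi CU does more work than a Polaris CU, so treat it as a rough comparison only.

```python
def price_per_cu(price_usd: float, compute_units: int) -> float:
    """Launch price divided by compute unit count."""
    return price_usd / compute_units


cards = {
    "RX 480 (2016)": (229, 36),
    "RX 5700 (2019)": (379, 36),
    "RX 5700 XT (2019)": (449, 40),
    "RX 5700 XT at $349 (hypothetical)": (349, 40),
}
for name, (price, cus) in cards.items():
    print(f"{name}: ${price_per_cu(price, cus):.2f} per CU")
```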

I wouldn't worry too much about power consumption, because when you slightly undervolt/underclock AMD chips, their power consumption drops dramatically.

Let's just hypothetically say the 5700 XT draws 180W at 1900MHz. Then I bet it would be more like 140W at 1700MHz/1600MHz.
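The reason undervolting helps so much is that dynamic power scales roughly as P ∝ C·V²·f: a lower clock cuts power linearly, and the lower voltage it allows cuts it quadratically. A minimal sketch, assuming all of the 180W is dynamic power (memory and static power don't scale this way, so real savings would be smaller) and using hypothetical voltages of 1.20V and 1.05V:

```python
def scaled_dynamic_power(p1_watts: float, f1_mhz: float, f2_mhz: float,
                         v1: float = 1.0, v2: float = 1.0) -> float:
    """Scale dynamic power with P ~ C * V^2 * f, holding switched capacitance constant."""
    return p1_watts * (f2_mhz / f1_mhz) * (v2 / v1) ** 2


clock_only = scaled_dynamic_power(180, 1900, 1700)  # same voltage, lower clock
undervolted = scaled_dynamic_power(180, 1900, 1700, v1=1.20, v2=1.05)  # hypothetical voltages
print(f"1700 MHz, same voltage: {clock_only:.0f} W")
print(f"1700 MHz, 1.20 V -> 1.05 V: {undervolted:.0f} W")
```

Under those assumptions the clock drop alone gives about 161W, and adding the undervolt brings it to about 123W, so the 140W guess is in a plausible range.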

Don't get me wrong: I'm not saying that Sony is giving us a huge 60 CU Navi chip with lower clocks, because I doubt that myself, but it would be a possibility (though I guess the cost would mostly rule it out).

The only good thing here is that some people may still hope AMD puts a bigger chip into the PS5 and Xbox, but I guess we'll get the 36 CU 5700 with custom ray-tracing hardware accelerators.

Works great for PR, and it's doable in different forms; it could well be screen-space, hardware-accelerated path tracing for all we know...

I also think 36 CUs is the max for consoles...


Evilms

Banned


The Xbox One X already has 40 CUs.


TeamGhobad

Banned


They would have to double it: 72 minimum.

And? This is a new architecture.

xool

Member

The other stuff is good, but -23% power from two die shrinks is not good.

It's supposed to be -35% power just from 14/16nm to 10nm, and similar again from 10nm to 7nm...

...makes me wonder if they actually managed to make a less power-efficient architecture. I just can't make sense of it: for example, the Apple A12 (7nm) is claimed to use up to 50% less power (call it 40%) than the Apple A11 (10nm).

Something just seems wrong about AMD's product. The only thing that is on target is the density.
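For reference, savings from successive shrinks compound multiplicatively rather than adding. A sketch using the post's own assumption of -35% per node step (a figure from the post, not a foundry spec):

```python
def compound_reduction(*reductions: float) -> float:
    """Combine successive fractional power reductions (0.35 means 35% less power)."""
    remaining = 1.0
    for r in reductions:
        remaining *= 1.0 - r
    return 1.0 - remaining


print(f"One step:  {compound_reduction(0.35):.1%} less power")
print(f"Two steps: {compound_reduction(0.35, 0.35):.1%} less power")
```

Two -35% steps give roughly 58% less power (0.65 × 0.65 ≈ 0.42 of the original), not 70%, and that is the baseline the -23% figure is being compared against.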


LordOfChaos

Member

I'd argue against the word "efficient" though. AMD simply shifted resources from raw computing power into other beef.

I'd argue more efficient, or both.

With a pure compute workload, you can get closer to a card's theoretical FLOPS than with a graphics workload. Vega was set up for good GPGPU performance but meh performance per watt in gaming.

What Navi does is change the SIMD group setup. Previously, AMD paid a 4-clock-cycle penalty for any new execution from the front end; Navi cuts that down to 2, which is more in line with Nvidia (although Nvidia's warp scheduler is quite different from AMD's wave scheduler, since the warp scheduler basically targets any free SP at a given moment).

So this change should bring the graphics performance actually achieved closer to the theoretical number.
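A toy latency-hiding model helps show why that issue cadence matters most when few wavefronts are resident. This is a deliberate simplification, not how AMD's or Nvidia's schedulers actually work: it assumes each wavefront runs a chain of dependent instructions and can only issue once every k cycles (using the post's 4 vs 2 figures), while the SIMD can issue one instruction per cycle from any ready wavefront.

```python
def simd_utilization(resident_waves: int, issue_interval: int) -> float:
    """Toy model: fraction of cycles in which the SIMD issues useful work.

    Each wavefront can issue at most once every `issue_interval` cycles; the SIMD
    issues at most one instruction per cycle from whichever wavefront is ready.
    """
    return min(1.0, resident_waves / issue_interval)


for waves in (1, 2, 4):
    old = simd_utilization(waves, issue_interval=4)
    new = simd_utilization(waves, issue_interval=2)
    print(f"{waves} wave(s): {old:.0%} with a 4-cycle cadence, {new:.0%} with a 2-cycle cadence")
```

In this model a 4-cycle cadence needs four ready wavefronts to keep the SIMD busy, while a 2-cycle cadence needs only two, so the gain would show up mostly in workloads that can't keep many wavefronts resident.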

The bit with Infinity Fabric references (and the weird patent on what looked like a GPU with HBM)

So just Infinity Fabric though, right? Nothing hinted at RT over IF? That doesn't sound doable to me from a bandwidth perspective.


TeamGhobad

Banned

CPU: Ryzen 7 3700X

GPU: Radeon RX 5700


DeepEnigma

Gold Member

Well lookie here.

New Xbox Console "Project Scarlett" Coming Next Year and it "Eats Monsters for Breakfast"

Now that I have a killer 75" QLED, I want a console that does 4K (I have the One S for movies, but now I wish I had waited for the X). I'm going to hold out for more info on Scarlett and PS5, but if neither of them has a UHD drive, I'm going to get a One X and just call it a day.

They were most definitely talking about the CPU. That way, the statement is technically not a lie.

If anyone thinks there will be 4x the GPU strength, log out of your account please.


LordOfChaos

Member


I'm definitely thinking they Vega'ed it again at this point, meaning the retail cards are pushed too far past their optimal point trying to match targets from Nvidia (2070), while a small drop in clocks would lead to a disproportionate reduction in power as it did for Vega.

llien

Member

Ryzen 7 3700X: 8C/16T, 3.6GHz to 4.4GHz, 36MB cache, 65W TDP, $329

Why would you argue that, when Nvidia has been doing the same thing since the Maxwell era and everyone says they're more "efficient"?

I just don't see it as an engineering compromise to be made.

I'd argue more efficient, or both.

Power efficiency makes sense.

Perf per die size as a metric makes sense (again, it directly affects the product).

Performance per TFLOP makes as much sense as "performance per GHz". Performance and power consumption are what affect users; that metric does not.

Yeah, just my

So just Infinity Fabric though, right? Nothing hinted at RT over IF? That doesn't sound doable to me from a bandwidth perspective.

Evilms

Banned

All the more reason to offer more CUs.

And? This is a new architecture.

llien

Member

It's 1.5 times less power, or 33% less power, if you wish.

The other stuff is good, but -23% power from two die shrinks is not good.

And it's one die shrink.

Also, cool to see Navi be 15% ahead of V64.
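Since "X times less" and "Y% less" get mixed up in these two posts, here's a small sketch of the conversion between them (the function names are just illustrative):

```python
def times_less_to_reduction(factor: float) -> float:
    """'factor times less power' as a fractional reduction: 1 - 1/factor."""
    return 1.0 - 1.0 / factor


def reduction_to_times_less(reduction: float) -> float:
    """Fractional reduction (0.23 = 23% less) as a 'times less' factor."""
    return 1.0 / (1.0 - reduction)


print(f"1.5x less power = {times_less_to_reduction(1.5):.0%} less")
print(f"23% less power = {reduction_to_times_less(0.23):.2f}x less")
```

So the 1.5x figure corresponds to about 33% less power, while -23% would only be about 1.3x.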

LordOfChaos

Member

CEO Math™

/s, they're probably pretty close though as they can guesstimate memory and board power.


Those 8 TFLOP estimates don't sound all that laughable right now. I mean, if you compare them to the previous gen's launch specs, it's actually a significant step up. Also, take into account that AMD has switched to a purely gaming-focused GPU rather than a compute-heavy one. I think people made the mistake of comparing possible next-gen specs to the mid-gen refreshes.
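For scale, here's the ratio of an 8 TF console against last gen's launch and mid-gen machines, using their commonly cited peak figures (PS4 1.84 TF, Xbox One 1.31 TF, PS4 Pro 4.2 TF, Xbox One X 6.0 TF). Raw TFLOPS across GCN and Navi aren't directly comparable, since Navi does more per TFLOP, so these raw ratios likely understate the real-world jump.

```python
LAST_GEN_TFLOPS = {"PS4": 1.84, "Xbox One": 1.31, "PS4 Pro": 4.2, "Xbox One X": 6.0}


def uplift(next_gen_tflops: float, baseline_tflops: float) -> float:
    """Raw peak-TFLOPS ratio between a hypothetical next-gen GPU and a baseline."""
    return next_gen_tflops / baseline_tflops


for console, tf in LAST_GEN_TFLOPS.items():
    print(f"8 TF vs {console}: {uplift(8.0, tf):.2f}x")
```

Against the launch consoles, 8 TF is more than 4x; against the mid-gen refreshes it's under 2x, which is exactly the comparison mismatch described above.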


Munki

Member


So what are we at today? Max 10 TFs?

Nah... we're getting RTX 2080 Ti performance with hardware-accelerated ray tracing in a $500 box!!! Hop on the hype train before it leaves the station.

ethomaz

Banned

Good for Ryzen.

That's what I'm talking about... it's a big issue for consoles.

Imagine if Nvidia shrinks to 7nm... how much will the gap widen?


demigod

Member

So what are we at today? Max 10 TFs?

We are back down to 8NvidiaTFlops.

Negotiator

Member

I'm still firm on 12-13 TF max.

Evilms

Banned

Microsoft's Xbox chief: Project Scarlett likely isn't the last console

Streaming services are all the rage, but don't go thinking that means we'll see fewer devices, says Phil Spencer, head of Xbox.

SpinningBirdKick

Banned

Hello all

I guess it's time to dust off the old GAF account.

I'll try my best not to upset anyone with my common sense. I've learned it's not always appreciated.

Now about those TF... j/k


ANIMAL1975

Member

Hello friend, we at Team 14.2 welcome you back and invite you for the ride of your life on our top-of-the-line hype train! Join us today and come see the world!

FranXico

Member

LOL. Where did you find that?

Funny picture ^^

Imtjnotu

Member

Really interesting read on that PowerVR solution. They can almost match Nvidia in machine learning and neural networks at nowhere near the same power. Let's see if anyone goes with this.

SpinningBirdKick

Banned

You know what, I think there's a chance that Team 14.2 has a shot. Honestly!

I had a lot of time to pick over things during my enforced hiatus and... *Dr Strange voice* there is one way this (14.2) is possible.

It's not as crazy as you think. But it's probably not the right time to get into that now.


bhunachicken

Member

Has anyone identified what Sony's Secret Sauce is yet?

(I'm sure it's not this)


Evilms

Banned

On ResetEra.

LOL. Where did you find that?


Insane Metal

Member

Yup.

10 TFLOPS max. Anything above that would be mind-blowing. The smallest GPU jump across a generation, with the most ambitious image quality targets yet: 4K, and 8K upscaled. Man, if Navi weren't so disappointing we could have had something nice.

I stated it before: 10 TF MAX for both machines. That's not bad at all since we're on a new architecture; I'm pretty sure those 10 TF with Ryzen 2 CPUs will be able to produce some mind-blowing stuff.

TeamGhobad

Banned

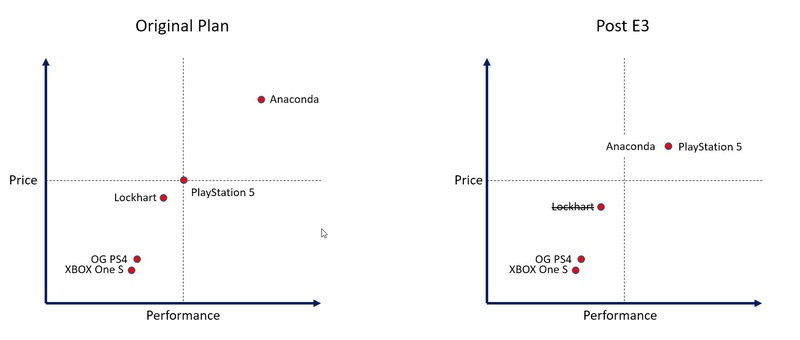

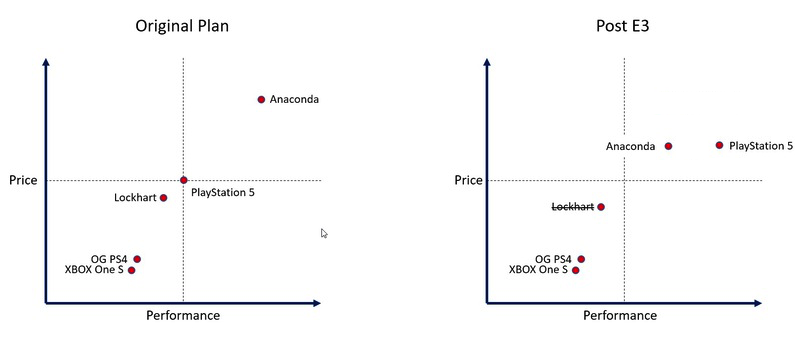

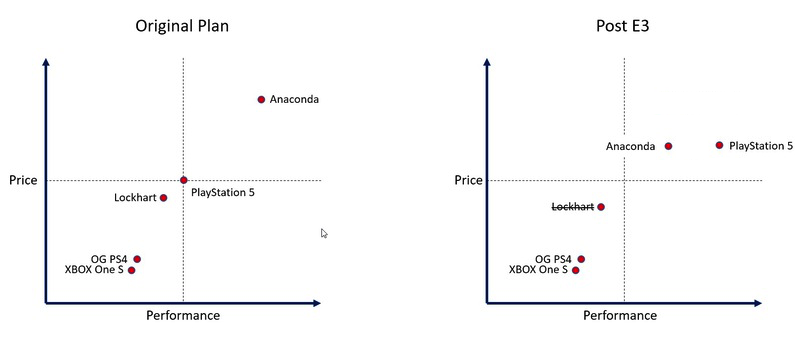

I believe Lockhart was cancelled because the PS5 is more powerful than Anaconda. There's no point in having two consoles weaker than the PS5.


My one concern is the push for native 4K. If they target 1440p and upscale using Radeon Image Sharpening and FidelityFX, I think we will have great consoles. If developers target native 4K, it will be an underwhelming gen.
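For scale on that concern: native 4K has 2.25x the pixels of 1440p, so rendering at 1440p and upscaling shades under half as many pixels per frame. A quick sketch of the arithmetic; it deliberately ignores the cost of the upscaling and sharpening pass itself, which isn't free.

```python
def pixel_ratio(w1: int, h1: int, w2: int, h2: int) -> float:
    """How many times more pixels resolution 1 has than resolution 2."""
    return (w1 * h1) / (w2 * h2)


ratio = pixel_ratio(3840, 2160, 2560, 1440)
print(f"4K has {ratio:.2f}x the pixels of 1440p")
print(f"Rendering at 1440p shades {1 - 1 / ratio:.0%} fewer pixels per frame")
```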
