
Not gonna happen. Ever.

All I ever want is compulsory 60fps in every game.

My source has always told me between 1080 and 1080Ti. In real world calculations, that's not very powerful to me. There are several games that still struggle to hit 60FPS on the 2080Ti. Trying to run a game like Control on a 1080 with some RT cores isn't going to do much to the look. You'd have to drop so many samples to get it to run at 1080p/30FPS. I'm convinced that the consoles' weakest part is the GPU. It's just not powerful enough for another 6 years.

What exactly is this?

Console optimizations are a thing. Forza 7 is 4k, fully stable 60fps with hdr, and it runs on a 2013 modified mobile cpu.

Digital Foundry said: "GPU bottlenecks on PS3/360 aren't as much of an issue on Wii U, though there are occasions when the older consoles manage to pull ahead."

Wow.

My flop is 10 inches

I have a Ryzen 2700x @4.3ghz with 32gb Ram and a 2080ti, and people on Gaf honestly expect the next gen consoles to even come close to it...

I say every single time.

Keep... Your expectations... In... Check.

Money.....

There will be next gen exclusive launch games that wipe the floor with anything your PC currently plays. We've done this song and dance with the "lol Jaguar / laptop GPU lol" which then produced the likes of The Order, Uncharted 4, Horizon, Spider-Man and God of War...

Keep.... Your limitations... In... Check.

PC gamers get so defensive at this time of the gen haha!

So basically 1080Ti which'll me memory limited in a way 1080Ti is not.

Console optimizations are a thing. Forza 7 is 4k, fully stable 60fps with hdr, and it runs on a 2013 modified mobile cpu.

There is no proof of modded console optimizations, especially from 3rd party devs. Game after game after game still has shortcomings in the graphics department (see all the Digital Foundry articles on various AAA game comparisons). There is only so much you can optimize with limited hardware. Dabbling in ray-tracing is a whole other level to getting good graphics.

What exactly is this?

I remember when there was huge controversy over the Wii U's power. In real world performance, it had more power than PS3/360 but it ALWAYS required sacrificing something.

Need for Speed was an example of this. They made it look visually better but there were still complaints about the CPU holding it back in some ways that no developer could get around.

Face-Off: Need for Speed: Most Wanted on Wii U

Digital Foundry compares the new Wii U edition of Most Wanted to the PC, 360 and PS3 alternatives.

www.eurogamer.net

You can only do so much with the actual hardware you're given. I don't believe there's a magic "code to the metal" or whatever that's native to console. Look at multiplatform games as direct evidence of this.

also, again, RDNA (newest AMD architecture) has a way better performance to TFLOP ratio than GCN (AMD architecture used in current consoles)

so even a 6TFLOP RDNA GPU would massively outperform the GPU in the Xbox One X

if the new consoles have a 10 or even 11 TFLOP RDNA GPU the performance increase will be higher than what the numbers say.

that's not even talking about Memory
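To put rough numbers on the "RDNA TFLOPs are worth more than GCN TFLOPs" argument, here is a back-of-the-envelope sketch. The 1.25x per-FLOP uplift is an assumed placeholder, not a measured figure, and `gcn_equivalent_tflops` is a made-up helper name. Real games will vary a lot around any single factor like this.

```python
# Assumed uplift: how much more game performance one RDNA TFLOP delivers
# compared with one GCN TFLOP. Placeholder value, not a benchmark result.
RDNA_PER_FLOP_UPLIFT = 1.25

# Xbox One X GPU (GCN-based), used as the baseline in the post above.
XBOX_ONE_X_TFLOPS = 6.0


def gcn_equivalent_tflops(rdna_tflops, uplift=RDNA_PER_FLOP_UPLIFT):
    """Convert an RDNA TFLOPs figure into a rough 'GCN-equivalent' one."""
    return rdna_tflops * uplift


for rdna in (6.0, 10.0, 11.0):
    equiv = gcn_equivalent_tflops(rdna)
    ratio = equiv / XBOX_ONE_X_TFLOPS
    print(f"{rdna:.0f} TF RDNA ~ {equiv:.2f} TF GCN-equivalent "
          f"({ratio:.2f}x Xbox One X)")
```

Under this assumption a 6 TF RDNA part comes out about 25% ahead of the One X, so how "massive" the gap is depends entirely on the uplift factor you plug in.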

1080ti is about 2080 level of performance though. But that's not counting games that will be optimized with VRR and hardware RT. That'll be plenty enough for 3 more years (until the next mid-gen console).

BTW did you just change your tune? Are you slowly accepting that PS5 could have more than 10tf? I think it's the first time you've talked about PS5 having 1080ti level of performance. You always talked only about 1080 before AFAIK.

When people say 'consoles are holding back PCs', they are talking solely about the majority of PC games being console ports, ports that are based on tech numerous levels below a lot of PCs. And that is fact.

I mean it works both ways of course, the majority of big PC games are console ports, but because of that it means we can play games at higher settings, resolutions, and framerates. So there is always a silver lining, and that's what's so good about PC gaming, the freedom and choice.

Tell me of another piece of hardware that runs 4k, 60fps with hdr using a 2013 mobile cpu.

kyubajin knows how to make games great.

All I ever want is compulsory 60fps in every game.

It means that since the hardware specs are fixed, devs are able to optimize games to that configuration.

In the grand context of everything, why would PC games be exempt from "optimization"?

Especially when you consider this is the same platform where PC gamers have had frame rates as high as 300fps since 2001. I really doubt it's in any developer's best interest to just dump a game and pray it works on PC.

Or as another example, consider that game engines are updated all the time. Yet, I have never heard Epic say that their version of UE4 runs better on console than on PC. 99% of the time, it's the complete opposite. Every new graphical feature is showcased on PC first, and then consoles get the same or a stripped down version of it later.

Case in point, look back at the Elemental demo. The PS4 version still had to make sacrifices that a more powerful PC didn't.

Or another infamous example, the original Crysis on PC vs Console. Even though the console version ran on an improved version of the engine with newer features, the memory limits still meant there was less geometry/lower texture resolutions compared to the original.

The base value of a reference card is 11.3 TFLOPs, so yeah, ~14 when OC'd.

OC'd, a 1080ti is more like 13.9 Tflops.
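For anyone wondering where those TFLOP figures come from, here is a minimal sketch of the usual peak-FP32 arithmetic: 2 operations per shader core per clock (one fused multiply-add). The core count and reference boost clock are the published GTX 1080 Ti specs. The 1940 MHz overclock is a hypothetical value picked for illustration, not a figure from this thread.

```python
def fp32_tflops(shader_cores, clock_mhz):
    """Peak FP32 TFLOPs, counting one fused multiply-add as 2 operations."""
    return 2 * shader_cores * clock_mhz * 1e6 / 1e12


GTX_1080_TI_CORES = 3584     # CUDA cores on the reference card
REFERENCE_BOOST_MHZ = 1582   # reference boost clock
ASSUMED_OC_MHZ = 1940        # hypothetical overclock, for illustration only

print(round(fp32_tflops(GTX_1080_TI_CORES, REFERENCE_BOOST_MHZ), 1))  # 11.3
print(round(fp32_tflops(GTX_1080_TI_CORES, ASSUMED_OC_MHZ), 1))       # 13.9
```

This is a theoretical peak. It says nothing about how close a real game gets to it, which is the whole GCN vs RDNA argument.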

As I've said, PS5 / XONE GPUs will be memory limited at some point, cuz there's absolutely no way that they'll have access to 11 GB of VRAM, and the more VRAM you've got, the better looking a game you can make and the more you can do with it visually, not to mention support of native resolutions without reliance on temporal resolution scaling.

The PS5 may end up having "1080Ti like performance" but just based on Tflops, there is a pretty big lead in favor of a 1080ti.

Look no further than Quantum Break / Control. 1080Ti is the one and only card capable of handling these games in native 1080p 60 on maxed settings (RTX cards don't count cuz you don't need them and they've less VRAM). So at some point it's gonna be a 1080p 60 GPU for modern games (depending on the game), 2-3 years from now.... I mean, it kinda is now, but only cuz of lazy and incompetent devs who can't optimize their games properly. I'm not talking about Quantum Break or Control here.

I'm definitely interested in seeing how my 1080ti handles next gen games compared to next gen consoles, before I upgrade

Anyone know how credible kleegamefan is?

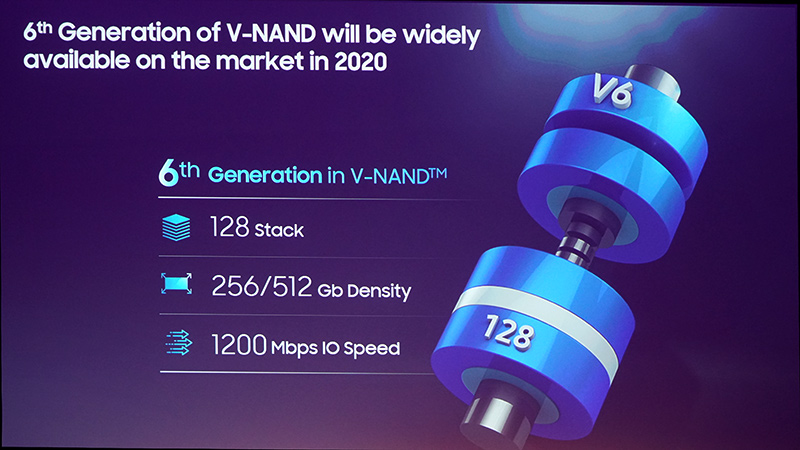

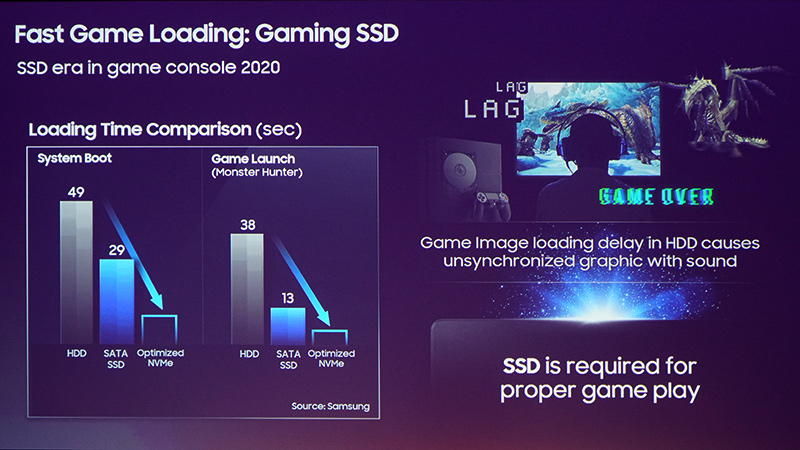

Still waiting to see what kind of SSD they will have but my money is on an NVMe running on PCIe 4.0. And even that will be 18+ month old tech by the time they launch.

Except for the fact that current Pro and X is already currently outputting Dynamic 4K/60fps in some games.. What are you smoking, can I have some?

I know this sounds cliche but why do people look to consoles for 60fps and not PC?

Unless your last game console was literally a SEGA Genesis from the 90s, it's almost 3 decades now where 60fps as a standard does not exist.

It's honestly up there with people asking "Why isn't Grand Theft Auto on Nintendo"? Just make peace with the idea that if it didn't happen the last 3 console cycles, it's not going to happen the next one.

And no, you don't need a $5000 PC to play games at 60fps. I just bought a $500 PC and even though I don't game much, I can still get 1080p/60fps in any title I want. If I want more, then I obviously have to pay more.

Don't bother trying to argue with people like this.. It's like someone slating a game or movie that they've never seen or played.

Console optimizations are a thing. Forza 7 is 4k, fully stable 60fps with hdr, and it runs on a 2013 modified mobile cpu.

Yeah, "some" games.

Except for the fact that current Pro and X is already currently outputting Dynamic 4K/60fps in some games.. What are you smoking, can I have some?

It actually means you get a variety of AAA games, period, because the PC community cannot support the development cost of these titles. I'm by no means saying console is better but as far as I see it, PC needs console for games, consoles do not need PC!

When people say 'consoles are holding back PCs', they are talking solely about the majority of PC games being console ports, ports that are based on tech numerous levels below a lot of PCs. And that is fact.

I mean it works both ways of course, the majority of big PC games are console ports, but because of that it means we can play games at higher settings, resolutions, and framerates. So there is always a silver lining, and that's what's so good about PC gaming, the freedom and choice.

2001 is completely different to now as far as tech hardware. Consoles are closing in on performance. Please show me a PC that I can buy for $500 that will run 'some' current games at Dynamic 4K HDR 60fps?

Yeah, "some" games.

On PC, it's never a compromise. Ever since 2001, you can get frame rates as high as 300 fps. I don't remember PS2/Xbox/ or Gamecube ever doing that and the generation that came after that was even worse.

If you want consistently high frame rates, consoles have never had that advantage the moment they shifted towards 3D graphics.

This video is from 2 years ago but he built a PC that's actually cheaper than XONEX and mentioned if he lowered the settings more, he could get full 4K & 60fps.

2001 is completely different to now as far as tech hardware. Consoles are closing in on performance. Please show me a PC that I can buy for $500 that will run 'some' current games at Dynamic 4K HDR 60fps?

Edit: I've been gaming on my 4K TV for nearly 2 years, fuck 1080p. I'd rather have 30fps 4K than 1080p 60+fps. I have a mid tier gaming PC with a 1440p monitor and can run 1440p 60fps and I hardly use it because personally the experience on my 75 inch 4K HDR TV feels superior.
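As a rough way to compare the two targets being argued over here, this sketch counts raw pixels pushed per second and the time budget per frame. It is only arithmetic on resolution and frame rate, and it assumes cost scales with pixel count, which real renderers only loosely follow. The function names are made up for illustration.

```python
def pixels_per_second(width, height, fps):
    """Raw pixel throughput for a given resolution and frame rate."""
    return width * height * fps


def frame_time_ms(fps):
    """Time budget per frame in milliseconds."""
    return 1000.0 / fps


uhd_30 = pixels_per_second(3840, 2160, 30)   # 4K at 30fps
fhd_60 = pixels_per_second(1920, 1080, 60)   # 1080p at 60fps

print(uhd_30, fhd_60, uhd_30 / fhd_60)
print(round(frame_time_ms(30), 2), round(frame_time_ms(60), 2))
```

By this crude measure 4K at 30fps pushes twice the pixels per second of 1080p at 60fps, while giving each frame twice the time budget.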

Huh?

So basically 1080Ti which'll me memory limited in a way 1080Ti is not.

1kg of chocolate =/= 1kg of Iron

One more time again, GCN TFLOPS =/= RDNA TFLOPS.

i wanna know if that can render something like this in a playable state

All I ever want is compulsory 60fps in every game.

What exactly don't you understand from what I've said?

Huh?

And again

Shiggy: What's a Flopu?

Meanwhile on nintendo...

What exactly don't you understand from what I've said?

So basically 1080Ti which'll me memory limited in a way 1080Ti is not.

Be more specific please. There's at least 2 things you can ask me to clarify / explain.

That part.

The whole thing is gibberish. Have you reread it?

Be more specific please. There's at least 2 things you can ask me to clarify / explain.

If you're being serious, there are two choices:

1. Wait for Sony/MS to remaster them on new consoles

2. Wait for PC emulation to run them at higher FPS

At least with the second option, it actually benefits everyone, because emulation helps preserve gaming history while making them run better. Whereas Sony/MS could release a game one day and say "lol suck it" and then take it down like they've done with Gravity Rush or Driveclub.

And that's just 1st party exclusives. Almost every 3rd party game nowadays is multiplat, in which case the choice is clear. A PC version of the same game will always have 60fps available.

These are standardized platforms.

Unless your last game console was literally a SEGA Genesis from the 90s, it's almost 3 decades now where 60fps as a standard does not exist.

As I've said, PS5 / XONE GPUs will be memory limited at some point, cuz there's absolutely no way that they'll have access to 11 GBs of VRAM

We don't know official specs yet. At least 4GB (if not more) of RAM will be used by the OS, not to mention that all of the memory on consoles is shared. It's basically a PC without dedicated RAM and VRAM for different stuff and workloads. On PC both VRAM and RAM are used when you're playing games (including the OS). For example, Resident Evil 2 eats up to 9GB of VRAM in native 4K (or close to it) and 6GB of RAM, but if you play the game in 4K on a GPU which is memory limited, RAM usage can spike up to 10GB in 4K and up to 9GB in 1440p, so that's almost 19-20 GB (RAM + VRAM) in total for 1440p / 4K (provided you even have that much, otherwise game assets will be loading from SSD / HDD instead).

They will have access to at least 16GB of VRAM. All the RAM in the new consoles is VRAM.
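To make the shared-memory argument concrete, here is a small sketch using only the numbers floated in this thread. All of them are speculation or anecdote, not confirmed specs, and `game_memory_budget_gb` is a hypothetical helper name.

```python
def game_memory_budget_gb(total_shared_gb, os_reserve_gb):
    """Memory left for a game when RAM and VRAM share one pool."""
    return total_shared_gb - os_reserve_gb


# Figures taken from the posts above; none are official specs.
CONSOLE_SHARED_GB = 16   # claimed total memory on the new consoles
OS_RESERVE_GB = 4        # assumed OS reservation ("at least 4GB")
RE2_VRAM_GB = 9          # Resident Evil 2 VRAM use at (near) native 4K
RE2_RAM_GB = 6           # Resident Evil 2 system RAM use alongside it

console_budget = game_memory_budget_gb(CONSOLE_SHARED_GB, OS_RESERVE_GB)
pc_footprint = RE2_VRAM_GB + RE2_RAM_GB

print(f"console budget for the game: {console_budget} GB")
print(f"RE2 footprint on PC (VRAM + RAM): {pc_footprint} GB")
print(f"shortfall: {pc_footprint - console_budget} GB")
```

With these inputs the game gets 12 GB on the console against a 15 GB combined footprint on PC. Change the OS reservation or the total and the conclusion shifts, which is why the argument can't be settled before official specs.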

What "image quality"?

It's just that they choose to sacrifice FPS for image quality and other graphical bells and whistles.

You're missing the point. You just asked me "where can I play these exclusive games at 60fps". Did I not just give you an answer as to how?

I buy consoles for the exclusives when they are released. I don't sit around for 5 years and hope they will be remastered for a new console or emulated on PC just because of 60fps. That is crazy.