That's... not how comparisons work. Let's pretend that the Spiderman code wasn't re-engineered for the purposes of being a demo (it was). The base load time of State of Decay 2 is approximately 45 seconds, which the Series X decreases to approximately 7 seconds. That's roughly 15% of the original load time. The base load time of Spiderman on the PS4 is approximately 8 seconds, which the PS5 decreases to approximately 0.8 seconds. That's just 10% of the original load time. So we see final load times of 10% versus 15%. However, a game with a significantly better asset streaming system (as Spiderman has, compared directly to State of Decay 2) would likely benefit more from faster SSD speeds. So even after adjusting the numbers to allow a fair comparison, we're still not comparing apples to apples.
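To make that arithmetic explicit, here's a quick Python sketch using only the approximate load times quoted above (the helper names are just illustrative). Note that 7/45 is about 15.6%, which is where the "roughly 15%" comes from.

```python
# Approximate load times quoted above, in seconds: (before, after).
load_times = {
    "State of Decay 2 (base -> Series X)": (45.0, 7.0),
    "Spiderman (PS4 -> PS5 demo)": (8.0, 0.8),
}


def remaining_fraction(before: float, after: float) -> float:
    """Fraction of the original load time that remains on the new hardware."""
    return after / before


def speedup(before: float, after: float) -> float:
    """How many times faster the new load is than the original."""
    return before / after


for name, (before, after) in load_times.items():
    print(f"{name}: {remaining_fraction(before, after):.1%} of original, "
          f"{speedup(before, after):.1f}x faster")
```

Both numbers say the same thing from different angles: the Series X result is about a 6.4x speedup, the PS5 demo about 10x.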

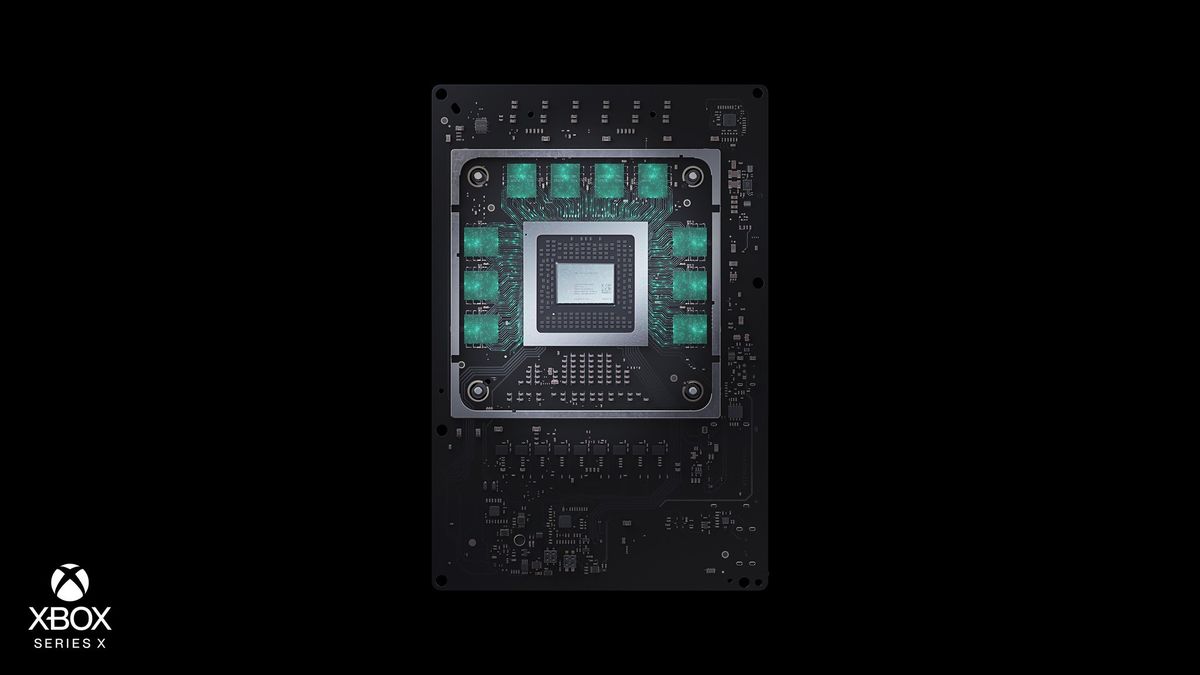

Further to your posts, Sony haven't invented "game-changing technology"; they just have a fast SSD. Microsoft are also using a fast SSD, though I think we can agree it's certainly not as fast as Sony's. Microsoft, however, actually have invented some game-changing technology. In addition to Mesh Shaders (worth pointing out that the PS5 has the Geometry Engine to replicate this functionality), the real dark horse is SFS, or Sampler Feedback Streaming.

This tech, available in DX12 Ultimate, should shrink IO bandwidth requirements and memory footprints pretty significantly for texture assets, which make up the vast majority of the game data being loaded in. If you care to learn more, check out Microsoft's 17-minute developer-focused presentation on it. Warning: it's dense and technical.
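For the curious, here's a heavily simplified Python toy model of the idea behind SFS. It is not the DirectX 12 API: the `FeedbackMap` layout, the one-region-per-tile mapping and the helper names are all hypothetical. The idea is that the GPU writes a "min mip" feedback map recording the most detailed mip level it actually sampled in each region of a texture, and the streamer then loads only the tiles those regions need instead of the whole mip chain. The 64 KiB tile size matches D3D12 tiled resources; everything else ignores real-world details such as packed mip tails and keeping coarser fallback mips resident.

```python
from typing import Dict, Optional, Set, Tuple

TILE_BYTES = 64 * 1024  # D3D12 tiled resources use 64 KiB tiles

# Hypothetical min-mip feedback map: (region_x, region_y) -> finest mip sampled
# in that region, or None if it was never sampled. Assumption: one region
# covers exactly one mip-0 tile.
FeedbackMap = Dict[Tuple[int, int], Optional[int]]


def tiles_to_stream(feedback: FeedbackMap) -> Set[Tuple[int, int, int]]:
    """Return the unique (mip, tile_x, tile_y) tiles the sampled regions need."""
    needed: Set[Tuple[int, int, int]] = set()
    for (rx, ry), mip in feedback.items():
        if mip is None:
            continue  # never sampled, so nothing to load for this region
        # At mip m, each tile covers a 2^m x 2^m block of mip-0 regions.
        needed.add((mip, rx >> mip, ry >> mip))
    return needed


def full_chain_bytes(tiles_wide: int, tiles_high: int, mip_count: int) -> int:
    """Bytes needed to load every tile of every mip (no packed mip tail)."""
    total_tiles = 0
    for m in range(mip_count):
        total_tiles += max(1, tiles_wide >> m) * max(1, tiles_high >> m)
    return total_tiles * TILE_BYTES


if __name__ == "__main__":
    # An 8 x 8 tile texture at mip 0 with 4 mip levels, mostly unsampled.
    feedback: FeedbackMap = {(x, y): None for x in range(8) for y in range(8)}
    # A corner seen up close (mip 0) and a far-away strip (mip 2).
    feedback[(0, 0)] = 0
    feedback[(1, 0)] = 0
    for x in range(8):
        feedback[(x, 7)] = 2
    needed = tiles_to_stream(feedback)
    print(len(needed) * TILE_BYTES, "bytes streamed vs",
          full_chain_bytes(8, 8, 4), "bytes for the whole mip chain")
```

In this made-up scene only 4 of the 85 tiles get read, which is the kind of saving SFS is aiming for; real savings depend entirely on what the camera actually sees.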

The short version is that, while Sony can load an 8 MB 4K texture quickly, Microsoft have developed a way to load just 800 KB of that same texture for the same rendered result: the GPU records which parts of the texture, and at which mip levels, were actually sampled, and only those parts are streamed in. Thus, Microsoft's slower SSD combined with SFS will most likely outpace Sony's faster SSD when loading the same texture assets. Since this is patented technology that requires suitable hardware to use, it's unlikely that Sony will be able to replicate it within their own API in the near term.
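Here's the back-of-the-envelope version of that argument in Python. The 8 MB and 800 KB sizes are from above; the 5.5 GB/s (PS5) and 2.4 GB/s (Series X) figures are the vendors' publicly quoted raw, uncompressed throughputs, used here as an assumption that ignores compression, decompression hardware and real-world I/O overhead.

```python
# Assumption: vendors' quoted raw (uncompressed) SSD throughput, in GB/s.
# Real-world throughput differs once compression and I/O overhead apply.
PS5_RAW_GB_S = 5.5
SERIES_X_RAW_GB_S = 2.4


def transfer_ms(size_mb: float, throughput_gb_s: float) -> float:
    """Idealised read time in ms; with 1 GB = 1000 MB, MB / (GB/s) gives ms."""
    return size_mb / throughput_gb_s


def break_even_fraction(slow_gb_s: float, fast_gb_s: float) -> float:
    """Largest fraction of a texture the slower drive can read and still tie."""
    return slow_gb_s / fast_gb_s


full_texture_mb = 8.0  # full 4K texture, as described above
sfs_texture_mb = 0.8   # the portion SFS actually needs, as described above

ps5_ms = transfer_ms(full_texture_mb, PS5_RAW_GB_S)
xsx_ms = transfer_ms(sfs_texture_mb, SERIES_X_RAW_GB_S)
print(f"PS5 full read: {ps5_ms:.2f} ms, Series X SFS read: {xsx_ms:.2f} ms")
print("SFS stays ahead while reading under "
      f"{break_even_fraction(SERIES_X_RAW_GB_S, PS5_RAW_GB_S):.0%} of the texture")
```

The break-even figure is the more interesting number: in this idealised model, as long as SFS trims a texture read to less than roughly 44% (2.4 / 5.5) of its full size, the slower drive comes out ahead.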

Anyway, I hope this helps you understand why, while Sony's loading presentation is impressive, I'm not convinced that raw IO throughput is the best way to achieve shorter load times and better asset streaming.