Assuming the panelists from Epic's Chinese team all knew exactly what they were talking about, my takeaway from the removed video is that not only will UE5 be awesome when released, but the demo we've seen is just a taste of things to come.

The loudest dude in the panel said and implied a few things:

1. They are aiming for 60fps on next gen consoles with the visual quality shown in that demo, and UE5 isn't out yet because they haven't reached that point.

2. He strongly implied that progress is being made and that the above target is not merely wishful thinking: they're getting ~40fps on their notebook with uncooked assets (I suppose that means unoptimised assets).

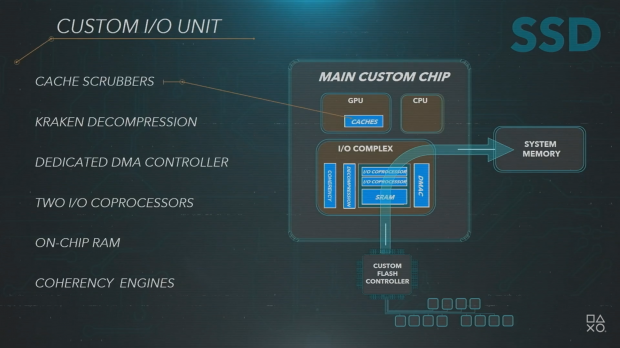

3. In his estimation, two triangles per pixel at 2K resolution is "not really that much", and Nanite doesn't need Sony's high-speed SSD solution to pull that flying scene off. In other words, a similar scene could probably be made even more complex in future.
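
For a rough sense of scale, here is some back-of-the-envelope arithmetic on the numbers above. It's only a sketch: the panel didn't say which resolution "2K" means, so both 2560x1440 and 2048x1080 below are my assumptions, and the function names are made up for illustration.

```python
# Rough arithmetic only; the resolutions are assumptions, not figures from the panel.

def triangles_per_frame(width, height, tris_per_pixel=2):
    """Triangles on screen if every pixel is covered by `tris_per_pixel` triangles."""
    return width * height * tris_per_pixel

def frame_time_ms(fps):
    """Time budget for one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

# Two common readings of "2K" (assumed, the panel didn't specify).
tris_1440p = triangles_per_frame(2560, 1440)
tris_dci_2k = triangles_per_frame(2048, 1080)

# Frame budgets for the reported ~40fps and the 60fps target.
budget_40 = frame_time_ms(40)
budget_60 = frame_time_ms(60)

print(f"2560x1440: {tris_1440p:,} triangles per frame")
print(f"2048x1080: {tris_dci_2k:,} triangles per frame")
print(f"40fps budget: {budget_40:.2f} ms, 60fps budget: {budget_60:.2f} ms")
print(f"Frame time to shave off: {budget_40 - budget_60:.2f} ms")
```

So under either reading it's a few million triangles per frame, and getting from ~40fps to 60fps means cutting roughly a third of the frame time.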

Some points mentioned by others on the panel:

1. Niagara is expected to be very useful in the film and animation industry, especially for visual FX. One of the guys said he was quite impressed at how Niagara was used to make the puddle; the keyword is "quite". I think he knows it's not the best looking (and behaving) water... yet. He also said some other cool things about Niagara and its potential application in games, but I couldn't really understand the examples he gave.

2. Lumen is expected to be applied in conjunction with other lighting techniques. One of them said that unlike GPU-based RT, you can selectively apply Lumen to specific aspects. If I'm not misunderstanding what was said, the explanation was that GPU-RT can either be fully on or off, and sometimes that results in the scene not being able to run at a minimum of 30fps. Lumen was described as being very flexible.

Personally, this means Fortnite will look and (probably) run better, so I'm hyped.

Edit: Changed "image quality" to "visual quality".