Reading the Eurogamer article again, I think Kirby Louise is right:

> The form factor is cute, the 2.4GB/s of guaranteed throughput is impressive, but it's the software APIs and custom hardware built into the SoC that deliver what Microsoft believes to be a revolution - a new way of using storage to augment memory (an area where no platform holder will be able to deliver a more traditional generational leap). The idea, in basic terms at least, is pretty straightforward - the game package that sits on storage essentially becomes extended memory, allowing 100GB of game assets stored on the SSD to be instantly accessible by the developer. It's a system that Microsoft calls the Velocity Architecture and the SSD itself is just one part of the system.

I don't think it will use zero CPU. The CPU still needs to tell the I/O block which data to read and where to put it in RAM. The I/O block then handles the actual transfer and decompression by itself.
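A minimal sketch of that division of labor, in Python: the submitting ("CPU") side only fills in request descriptors (offset, compressed length, destination buffer), while a separate thread stands in for the dedicated I/O block and does the read and decompression. Everything here is hypothetical: `ReadRequest` and `io_block` are illustrative names, zlib stands in for whatever codec the hardware decompressor actually uses, and this is not the DirectStorage API.

```python
import io
import queue
import threading
import zlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReadRequest:
    # Hypothetical descriptor: filling this in is the CPU's only job per read.
    offset: int             # where the compressed chunk starts in the package
    length: int             # compressed size in bytes
    dest: bytearray         # destination buffer standing in for RAM
    done: threading.Event   # signalled when the data has landed in dest


def io_block(package: io.BytesIO, requests: queue.Queue) -> None:
    # Stand-in for the dedicated I/O hardware: reads and decompresses
    # without any further work from the thread that submitted the request.
    while True:
        req: Optional[ReadRequest] = requests.get()
        if req is None:  # sentinel: shut down
            break
        package.seek(req.offset)
        data = zlib.decompress(package.read(req.length))
        req.dest[: len(data)] = data
        req.done.set()


# Build a fake "game package" with two compressed assets back to back.
assets = [b"texture" * 100, b"mesh" * 50]
blobs = [zlib.compress(a) for a in assets]
package = io.BytesIO(b"".join(blobs))

requests: queue.Queue = queue.Queue()
worker = threading.Thread(target=io_block, args=(package, requests))
worker.start()

# CPU side: just describe what to read and where it should go.
offset = 0
pending = []
for asset, blob in zip(assets, blobs):
    req = ReadRequest(offset, len(blob), bytearray(len(asset)), threading.Event())
    requests.put(req)
    pending.append(req)
    offset += len(blob)

for req in pending:
    req.done.wait()
requests.put(None)
worker.join()
```

The submitting side still does a small, fixed amount of bookkeeping per request, which is why the CPU cost isn't zero, while the byte-heavy copying and decompressing happens elsewhere.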

Yeah, I think that might be worth considering as well. Think of it this way: if the 100 GB were just functioning the same as the rest of the NAND storage, why single it out at all? Why highlight that 100 GB block in particular if it's just behaving as expected and streaming data to main system memory, especially when that's presumably what the entire drive would be doing anyway?

"Instant" might refer more to game code accessing data in that 100 GB directly, as if it were just additional RAM, with no need to explicitly go through the SSD path. Kind of like how older consoles could address ROM cartridges as an extension of RAM (the tradeoff being that the data is read-only, which I'm assuming this 100 GB region would also be once the intended contents have been written to it).
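On a PC, the closest everyday analogue to "address storage as if it were memory, read-only" is memory-mapping a file. A minimal Python sketch, assuming a hypothetical package file `assets.pak`; this only illustrates the cartridge analogy and is not how the Velocity Architecture is actually implemented. One caveat: the first touch of a mapped page can trigger a page fault and a storage read, so "instant" here really means demand paging.

```python
import mmap
import os
import tempfile


def map_package(path: str) -> mmap.mmap:
    # Map the whole file read-only; the OS pages data in from storage on first touch.
    # Closing the file object afterwards is fine: the mapping stays valid.
    with open(path, "rb") as f:
        return mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "assets.pak")  # hypothetical package file
    with open(path, "wb") as f:
        f.write(b"HEADER" + bytes(range(256)) * 16)

    package = map_package(path)
    magic = package[:6]          # slicing reads it like an ordinary byte array
    sample = package[6 + 255]    # single index returns an int
    try:
        package[0] = 0           # writes are rejected, much like a ROM
        writable = True
    except TypeError:
        writable = False
    package.close()              # unmap before the temp directory is removed
```

The game-side code never issues an explicit "read from SSD" call here; it just indexes into `package`, and the OS decides when to fetch the bytes.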

I'm still curious whether this specific 100 GB portion is a higher-quality SLC or even MLC NAND cache; IIRC Phison's controllers have been used in at least one SSD that operates in that manner. I'd like to think so for this particular feature if it's being implemented, but I figure we'll find out eventually.

Someone who sees himself as an authority figure, even though he tried getting people to ignore the GitHub leak almost as soon as it came out (and it turned out to be very pertinent to the consoles in the end). Basically he's just another poster like the rest of us, so personally I'd need him to back up his statements directly before giving them any further consideration.

Having a dedicated hardware block for that to free up CPU power seems better to me.

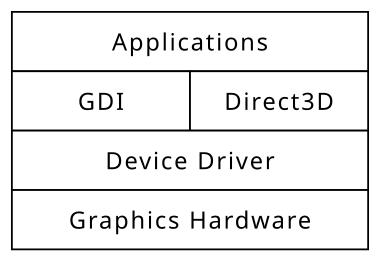

In a lot of ways it does. But I can see this from MS's POV, too. They want their software implementations of DirectStorage (and others) to be adaptable across a range of devices in the PC, laptop, tablet, etc. space, and in server markets too. With that in mind, which approach seems more feasible?

A: Design a hardware block that handles the task entirely, then deploy it in new CPUs, APUs, PCIe cards, etc., which means hardware upgrades that can cost millions in markets like servers, or...

B: Find a way to manage it on the CPU with as few resources as possible (1/10th of a single core in XSX's case), in a form that can be deployed onto existing CPUs, APUs, servers, etc., scales its performance with whatever hardware they already have, and is flexible enough to stay future-proof as time goes on?

Given the kind of company MS is, I can see why they chose the latter approach. Neither approach is inherently better than the other; each has its strengths for its specific purpose.