Jigsaah

Gold Member

Tech heads, get on this! How do a 10TF machine and a 12TF machine both end up with RTX 2070 Super-level performance? It should be noted that the developer says "for now", suggesting there may be more potential to unlock once they've had more experience with the dev kits. But still, to not see many differences at all?
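For context, the headline TF numbers are only a theoretical peak: shader count × 2 FLOPs per clock (one fused multiply-add) × clock speed. Below is a quick sketch of that arithmetic. It assumes the publicly announced specs (PS5: 36 CUs at up to 2.23 GHz; Series X: 52 CUs at 1.825 GHz) and 64 shaders per RDNA 2 compute unit. The `tflops` helper is just a name picked for illustration.

```python
# Theoretical peak FP32 throughput = shaders x 2 FLOPs per clock (FMA) x clock.
# Assumption: 64 shaders per RDNA 2 compute unit, announced console clocks.

SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2  # one fused multiply-add counts as 2 FLOPs


def tflops(compute_units: int, clock_ghz: float) -> float:
    """Return the theoretical peak FP32 TFLOPS for a GPU configuration."""
    shaders = compute_units * SHADERS_PER_CU
    # shaders * FLOPs/clock * GHz gives GFLOPS; divide by 1000 for TFLOPS
    return shaders * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000


ps5 = tflops(36, 2.23)   # PS5 clock is variable; 2.23 GHz is its cap
xsx = tflops(52, 1.825)  # Series X runs at a fixed clock
gap_pct = (xsx / ps5 - 1) * 100
print(f"PS5: {ps5:.2f} TF, Series X: {xsx:.2f} TF, gap: {gap_pct:.0f}%")
```

On paper that is roughly an 18% gap. Peak FLOPS say nothing about memory bandwidth, ray tracing throughput or sustained clocks, though, so two consoles can plausibly land in the same rough performance bracket.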

wccftech.com


KeokeN on Next-Gen: We Don't See Many Differences Between PS5 & XSX; Tempest Will Free CPU Resources

We spoke to Deliver Us The Moon developer KeokeN to learn their thoughts on next-gen consoles, Sony's PS5 and Microsoft's Xbox Series X.

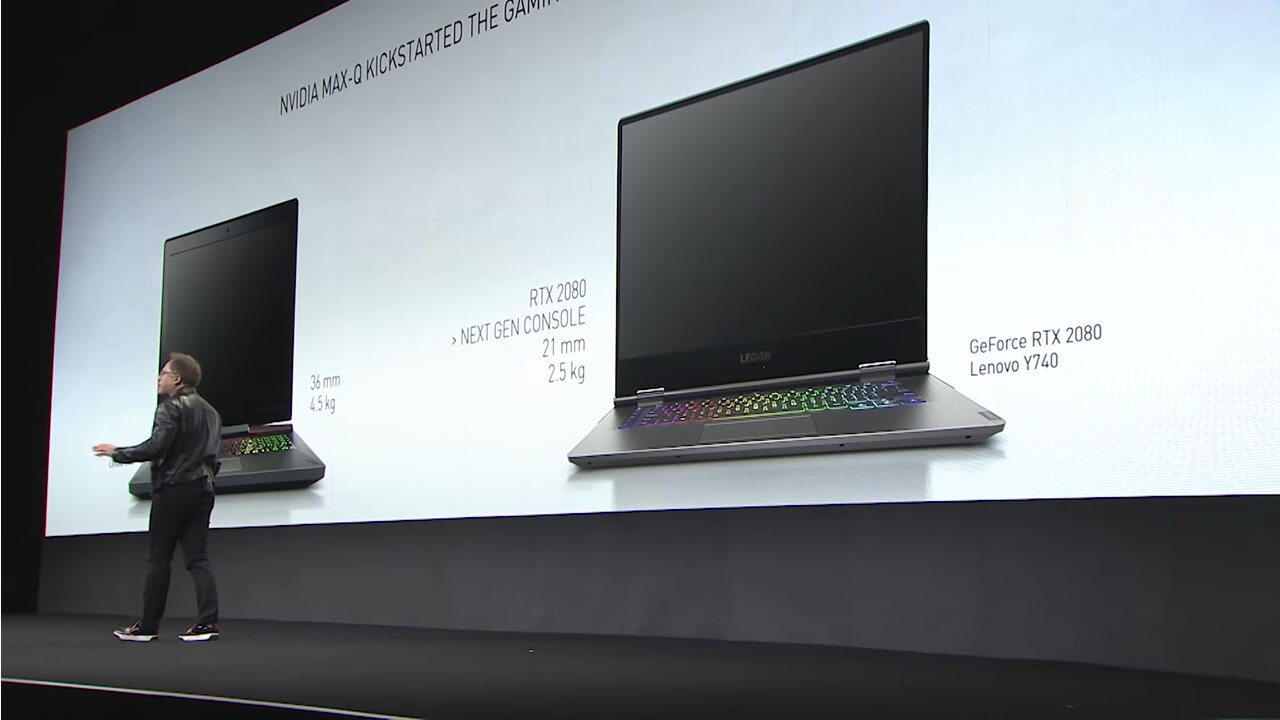

The computing power of the new consoles is very promising, and we're very excited to see ray tracing come to next-gen consoles. It is difficult to say since we don't know the exact ray tracing specifications yet, but early snippets of info do suggest similar performance to an RTX 2070 Super, which will definitely be enough for similar results to what we have now on PC.

For now, we don't see too many differences; they seem to be competing well against each other, and both are pushing new boundaries.