technoodle.tv

"I have a unique perspective on the recent nVidia launch, as someone who spends 40 hours a week immersed in Artificial Intelligence research with accelerated computing, and what I’m seeing is that it hasn’t yet dawned on technology reporters just how much the situation is fundamentally changing with the introduction of the GeForce 30 series cards (and their AMD counterparts debuting in next-gen consoles).

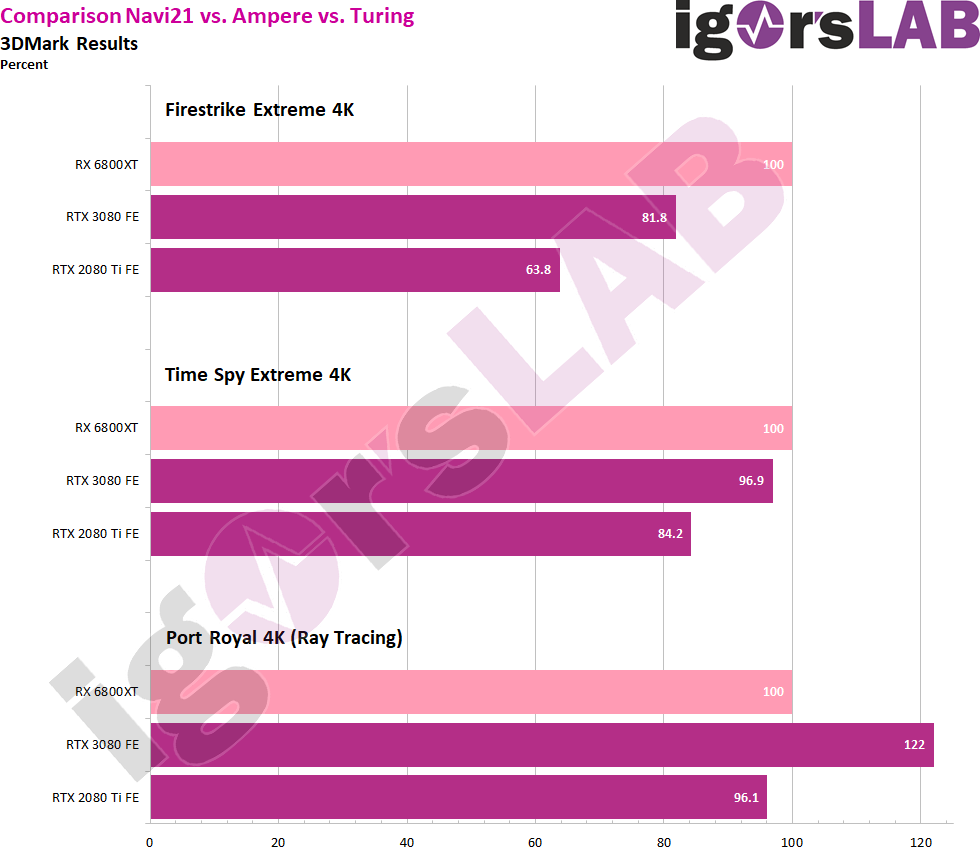

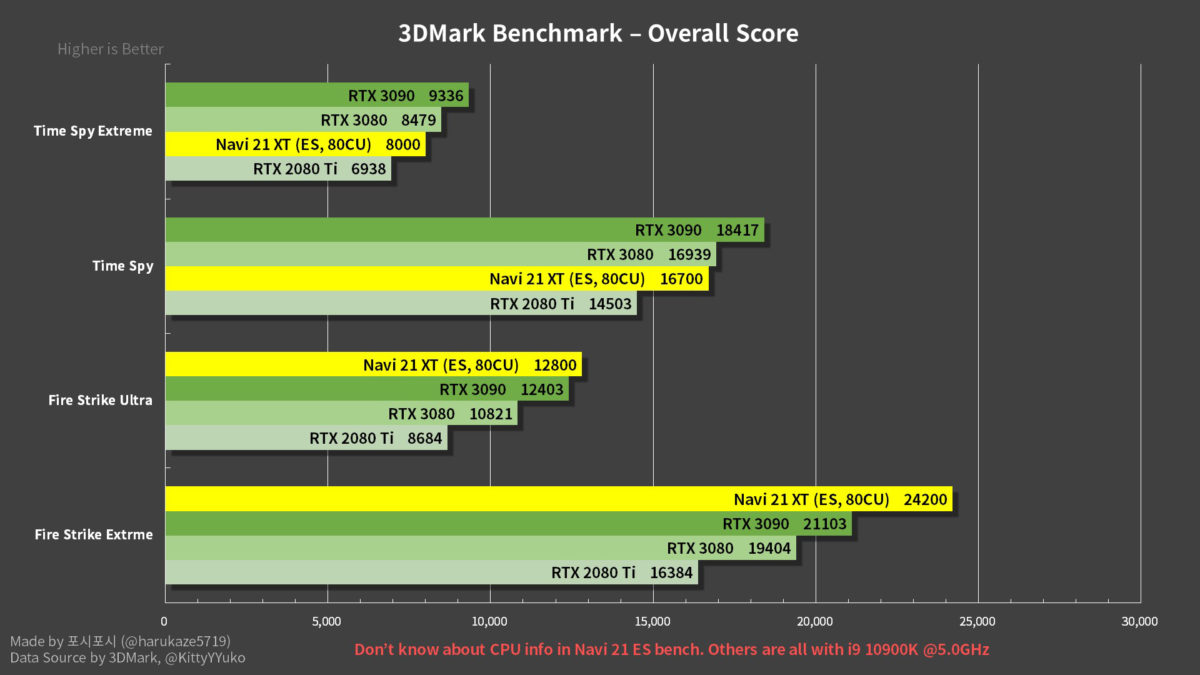

Reviewers are taking a business-as-usual approach with their benchmarks and analyses, but the truth is, there is nothing they can currently include in their test suites which will demonstrate the power of the RTX 3080 relative to previous-gen GPUs.

nVidia has been accused of overstating the performance improvements by cherry-picking results with RTX or DLSS turned on, but these metrics are the most representative of what’s going to happen with next-gen games. In fact, I would say nVidia is understating the potential performance delta.

Don’t get me wrong, most of the benchmarks being reported are valid data, but these cards were not designed with the current generation of game engines foremost in mind.

nVidia understands what is coming with next-gen game engines, and they’ve taken a very forward-thinking approach with the Ampere architecture. If I saw a competing card released tomorrow which heavily outperformed the RTX 3080 in current-gen games, it would actually set my alarm bells ringing, because it would mean that the competing GPU’s silicon has been over-allocated to serving yesterday’s needs. I have a feeling that if nVidia wasn’t so concerned with prying 1080 Tis out of our cold, dead hands, then they would have bothered even less with competing head-to-head with older cards in rasterization performance."