See how you are lost in FUD.

If you'd checked the OP, you'd know that per-game NN training was a feature of DLSS 1.0 and is no longer present in DLSS 2.0.

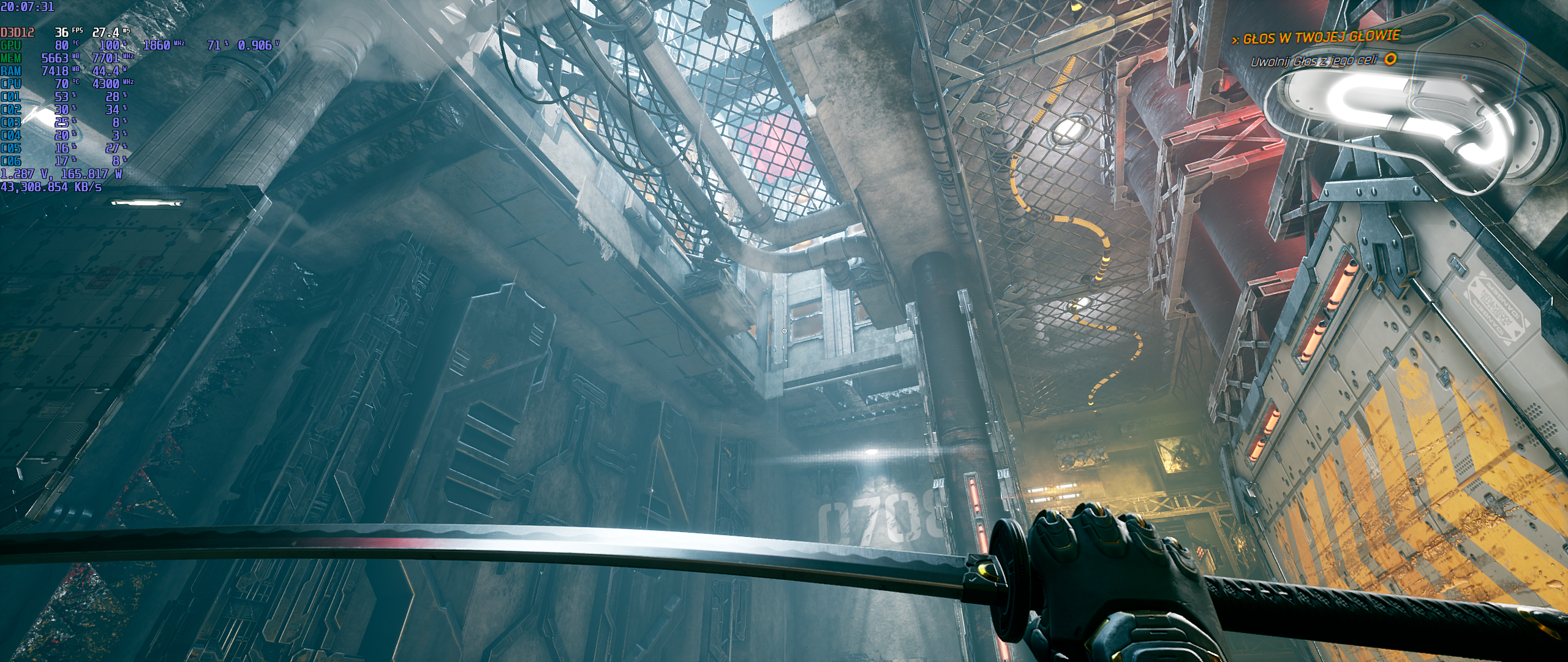

"RT reflections" have a very abstract concept of "resolution":

You cast rays, you get "something".

With the number of rays cards can cast today, what you get is a noisy mess:

Frame above is from Quake II RTX by NVIDIA (an open source thingy).
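To put a rough number on "noisy mess", here is a toy sketch in Python. It is not how Quake II RTX or any real renderer does it. The scene is made up: each ray is assumed to hit a bright light 20% of the time and dark geometry otherwise, and `shade_pixel` and `pixel_noise` are hypothetical names for illustration only. It just shows how much identical pixels disagree with each other when you average only a handful of random rays.

```python
import random
import statistics


def shade_pixel(num_rays, rng):
    """Estimate a pixel's reflected brightness by averaging random ray hits.

    Toy scene (an assumption, not a real renderer): each ray hits a bright
    light with probability 0.2 (contributes 1.0), otherwise dark geometry
    (contributes 0.0). The true brightness is therefore 0.2.
    """
    hits = sum(1.0 if rng.random() < 0.2 else 0.0 for _ in range(num_rays))
    return hits / num_rays


def pixel_noise(num_rays, num_pixels=2000, seed=0):
    """Standard deviation across pixels that should all look identical."""
    rng = random.Random(seed)
    samples = [shade_pixel(num_rays, rng) for _ in range(num_pixels)]
    return statistics.pstdev(samples)


if __name__ == "__main__":
    for n in (1, 4, 64, 1024):
        print(f"{n:5d} rays/pixel -> noise {pixel_noise(n):.3f}")
```

For this kind of random sampling the noise only shrinks with the square root of the ray count, so you need about 4x the rays to halve it. That is why a frame shot with a few rays per pixel looks the way it does before any denoising.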

More on this here:

That is not how rendering and AI "fill-in" works..... There are other ways to use AI other than fill-in.

www.neogaf.com