Metroiddarks

Member

Who's building websites in C++ though... Nothing easy about HTML either, most people fuck it up!

I will leave this topic before somebody else says JSON or XML should also be considered programming languages.


> Who's building websites in C++ though... Nothing easy about HTML either, most people fuck it up!

Not really websites, but it can be used for e.g. browser games. Look up Emscripten and WebAssembly.

I'm sure the PS5 CPU cache is similar to that of Zen 3, and thus more efficient.

> Although, it seems the cache scrubbers essentially produce the same results

Can you explain why you think that Infinity Cache and the cache scrubbers essentially produce the same results?

Wasn't the Zen 2 in the XSX advertised as a derivative of the server CPU or something like that? If it's based on Renoir then 8MB it is.


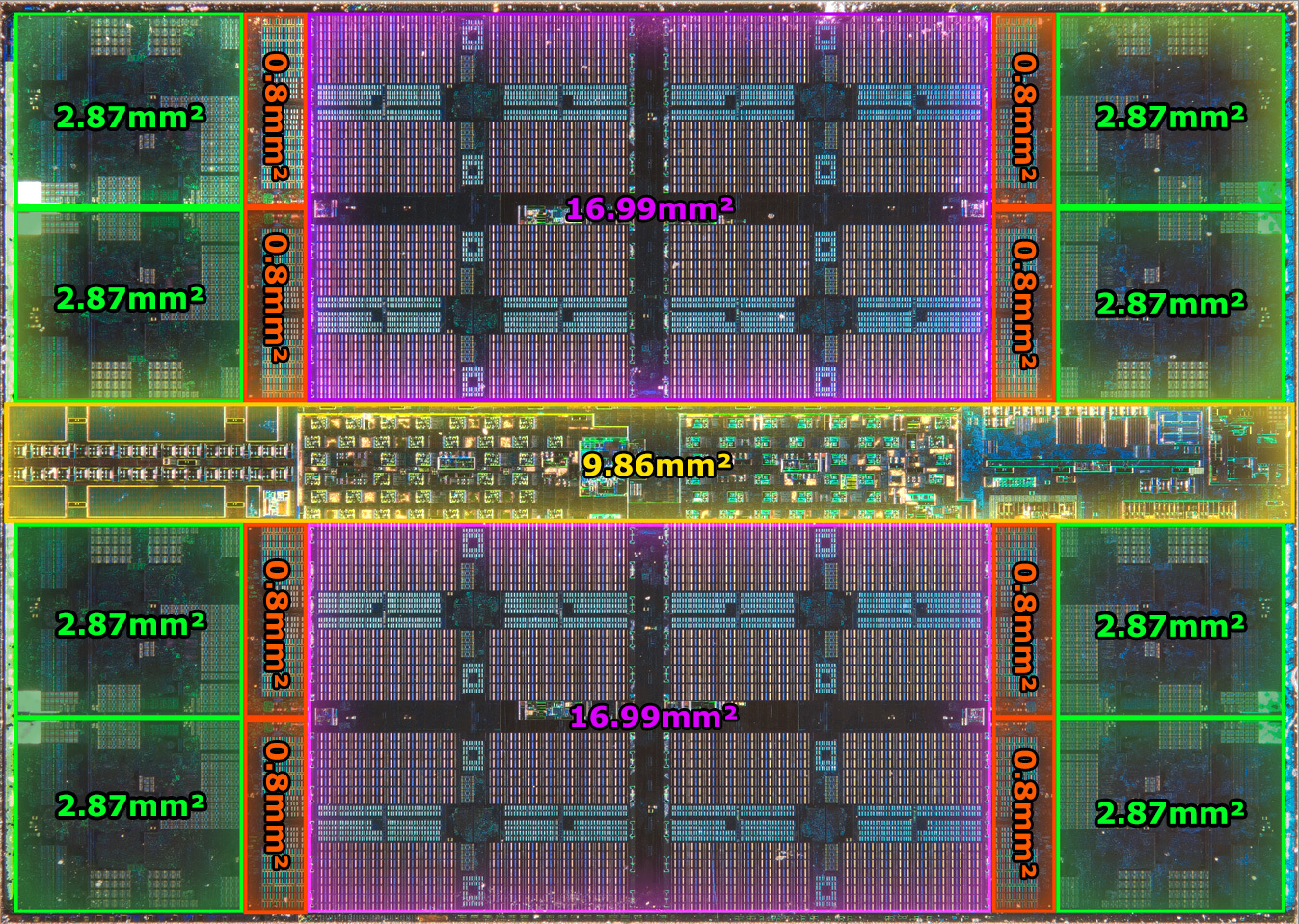

The PR calls them 'server class' cores but it is just that, PR! The CPUs in these consoles were always going to be based on the mobile variant, and I have thought so right from the beginning of speculation 3.5 years ago. A full-fat desktop CPU would use far too much of the 300-360mm^2 die space budget consoles tend to have. Over 50% of the die space in a 74mm^2 Zen 2 chiplet is the L3 cache, so the reduction to 8MB isn't a surprise, and that much L3 isn't needed from what I've read.


Yeah, caches are big as fuck, I didn't think of that. 8MB is probably as large as a couple of cores, if not bigger. Well, that is settled.

I am really confused right now... I thought HDMI 2.0 would not degrade colour gamut at all. Is it a problem with only Spider-Man? Damn, I've got an HDMI 2.0 TV with peak brightness around 1500 nits and damn... that makes me sad.

It is quite shocking really! I would imagine AMD have reduced these sizes somewhat more for the consoles with improvements and N7P/N7e though.

> 8MB L3? Sounds like bullshit to me, Zen2 has 16MB per CCX so 32MB in total.

Not on consoles... The Series X has 4+4MB; the question is how much the PS5 has: 8MB unified, or 4+4MB too?

> as predicted he dropped some interesting nuggets

Zen 2 with 8MB unified L3 cache is a must... basically it's what is making the PS5 CPU better for high framerates than the Series X CPU, even with a 100MHz disadvantage.


> Can you explain why you think that Infinity Cache and the cache scrubbers essentially produce the same results?

I guess I could try going into a long-ass explanation, to the best of my ability, of just how the cache scrubbers work and how they're tied to the coherency engine. Honestly, the info on all this is out there. Basically, the end result of both Infinity Cache and the cache scrubbers is to let the GPU operate more efficiently. GPU cache scrubbers mean fewer cache misses and less time with compute units sitting idle while waiting on memory reads. This in turn means you save on memory bandwidth and increase the amount of data being fed to the compute units. Infinity Cache, on the other hand, keeps things local that would otherwise need to be requested from VRAM: a special pool of memory slapped straight onto the GPU.
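To put a rough shape on the "fewer misses means less idle time" argument, here is a minimal sketch using the textbook average-memory-access-time formula (hit time plus miss rate times miss penalty). The function name and every number in it are made up for illustration; they are not PS5 or RDNA 2 measurements, and the sketch says nothing about how the scrubbers actually work internally.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time: hit time plus the expected cost of a miss."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures for illustration only (not measured on any console).
baseline = average_access_time(hit_time_ns=20.0, miss_rate=0.40, miss_penalty_ns=300.0)
improved = average_access_time(hit_time_ns=20.0, miss_rate=0.25, miss_penalty_ns=300.0)

print(f"baseline: {baseline:.1f} ns, improved: {improved:.1f} ns")
```

With these invented inputs, cutting the miss rate is the only change, and the average wait per access drops with it, which is the effect being described above.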

> I'm talking about the right tool for the job.

I agree with that.

> Are PS4/PS5 discs spinning all the time, or only during install on PS5 like it was on PS4?

During install/game boot, and randomly after an hour or so for a few seconds for me.

Are PS4/PS5 discs spinning all the time, or only during install on PS5 like it was on PS4?

8MB L3? Sounds like bullshit to me, Zen2 has 16MB per CCX so 32MB in total.

I think a lot of people here overvalue developers and a lot of them aren't as smart as they think. They make a lot of mistakes.

Maybe I didn't hear/see something but that video has the same info he said before

I would like to know this and will check this out when I check out my nephew's PS5 later this week. I can't see why a check on the disc can't just be done as you place it into the drive, with no need to spin up again until/unless the disc is removed during play.

So there might be something wrong with whole PS5 when it comes to downconverting Full RGB to YCC 422. Vincent needs to test more PS5 games.

The disc only spins during install and when booting a game. It's also the only noise I've heard from the console.

> how does he know all this, he an insider?

All this info was known already, and has been posted here in various threads, on Twitter, etc. I watched it and nothing surprised me, except him claiming the cache scrubbers were to "throw out GPU instructions" (paraphrasing). Either he misspoke or misunderstood, I suppose.

> The disc only spins during install and when booting a game. It's also the only noise I've heard from the console.

When playing Control from disc the drive spun up after I unpaused the game, but I could barely hear it over the game audio. Compared to the PS4 it's night and day in terms of noise levels, but the XSX definitely has the edge in this department.

> NXGamer talked about this in one of his PS5 BC videos. PCs are also used for other things than just gaming, so the 32MB L3 cache makes sense. With the consoles, he said they don't need that much L3 cache since the only thing they're used for is gaming.

The 4000-series Zen 2 mobile chips also have similarly reduced L3 caches, and the performance hit is around 15-20% compared to their desktop counterparts, and that at a much lower power target.

> He added no information that was not already known or in Sony patents / Road to PS5. Nothing. No unique information at all.

I mean some of the stuff wasn't already known, or at least it wasn't shared in this thread.

I'm absolutely sure the PS5 CPU is different from XSX and has links with Zen 3. It's all about throughput. I'm sure of it.

No, the improvement in Zen 3 is not the cache itself but how it is arranged.

In Zen 2 you have these units called CCXs with 4 cores and, in the case of the desktop versions, 16MB of L3. This allows a lot of flexibility, as AMD can create different CPUs just by adding or removing CCXs. However, there is an issue when you go over one CCX, for example when you want to build an 8-core CPU and need two CCXs. If a core in CCX1 needs to access the L3 cache of CCX2 it takes a latency penalty: it doesn't have direct access to it, so it needs to go through the chip's central I/O and then access the cache on the other CCX.

So in Zen 2, if your cores are accessing the L3 cache of the CCX they are part of, the latency is OK; if not, the latency massively spikes. Of course, the more CCXs, the worse it gets.

Zen 3 fixes this by increasing the number of cores per CCX and the L3 cache size. In Zen 3 each CCX has 8 cores and 32MB of L3 cache.

So if the PS5 has this, it has Zen 2 cores with a Zen 3 cache arrangement, but I sincerely doubt it.
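If it helps to picture the CCX point, here is a small sketch that just counts which core pairs share an L3 in the two layouts. It assumes cores are numbered consecutively within each CCX, which is a simplification for illustration, and the function names are my own; it models the grouping only, not real latencies.

```python
def ccx_of(core, cores_per_ccx):
    """Index of the core complex (CCX) that a core belongs to."""
    return core // cores_per_ccx


def shares_l3(core_a, core_b, cores_per_ccx):
    """True when both cores sit in the same CCX and so share one L3."""
    return ccx_of(core_a, cores_per_ccx) == ccx_of(core_b, cores_per_ccx)


def cross_ccx_pairs(total_cores, cores_per_ccx):
    """Count core pairs that would have to leave their own CCX to reach each other's L3."""
    return sum(
        1
        for a in range(total_cores)
        for b in range(a + 1, total_cores)
        if not shares_l3(a, b, cores_per_ccx)
    )


# 8 cores as two 4-core CCXs (Zen 2 style) versus one 8-core CCX (Zen 3 style).
print(cross_ccx_pairs(8, 4))  # 16 of the 28 possible pairs cross the CCX boundary
print(cross_ccx_pairs(8, 8))  # 0, every core sees the same L3
```

In the split layout more than half of all core pairs sit on opposite sides of the boundary, which is why a unified L3 matters for workloads that share data across many cores.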

I mean some of the stuff wasn’t already known or it wasn’t shared on this thread.

As for the information not being unique I don’t really have anything to say, I wasn’t expecting him to be coming up with wild claims about what the PS5 APU can do only for it to be total horse shit, actually reminds me of someone in this thread.

You might not have, but let's be honest... he hyped that video more than he usually does #clickbait

> Zen 2 with 8MB unified L3 cache is a must... basically it's what is making the PS5 CPU better for high framerates than the Series X CPU, even with a 100MHz disadvantage.

Agreed, the PS5 is doing a great job at maintaining frame rates, especially at 120 FPS, as we've seen on DF and other comparisons.

> Is the Rainbow Six Siege PS5 update the first game that we'll be able to run that has 120fps and dynamic 4K simultaneously? I'd like to purchase it to test my setup and some HDMI cables as well.

I think Call of Duty Cold War is technically "dynamic 4K" at 120 Hz, but it just averages around 1080p res, right?

We have no idea what res R6S will average at when using 120 Hz mode (my guess is around 1440p.)

I think both games will be sending a 2160p @120 Hz video signal out to your TV if your setup is all HDMI 2.1.
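For anyone wondering why a 2160p @ 120 Hz signal needs HDMI 2.1, here is a back-of-the-envelope sketch. It only counts the visible pixels and ignores blanking intervals and link encoding overhead, so the real requirement is somewhat higher than what it prints. The function name is my own; the 18 Gbit/s and 48 Gbit/s figures are the maximum link rates of HDMI 2.0 and HDMI 2.1.

```python
def active_video_gbps(width, height, refresh_hz, bits_per_channel, channels=3):
    """Uncompressed data rate of the visible pixels only, in Gbit/s."""
    return width * height * refresh_hz * bits_per_channel * channels / 1e9


HDMI_2_0_GBPS = 18.0  # maximum TMDS link rate
HDMI_2_1_GBPS = 48.0  # maximum FRL link rate

# 3840x2160 at 120 Hz, 10 bits per channel, full RGB (three channels).
rate = active_video_gbps(3840, 2160, 120, bits_per_channel=10)

print(f"2160p120 10-bit RGB: {rate:.2f} Gbit/s")
print("fits HDMI 2.0:", rate <= HDMI_2_0_GBPS)
print("fits HDMI 2.1:", rate <= HDMI_2_1_GBPS)
```

Even before overhead, the pixel data alone is well past what an HDMI 2.0 link can carry, so on older TVs something has to give (refresh rate, resolution, bit depth or chroma sampling).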

> R6 is $9.99 and CoD is $69.99. So just to try and test, it's worth it. Thanks

Rainbow 6 Siege is a fun game, enjoy!

> SUPAIDAMAN!!!

Fuck you, I LOL'ed so hard at that in the office. Got some stares.

as predicted he dropped some interesting nuggets

There's a bunch of other stuff too which I left out which he was re-confirming in this video as well like the PS5 being designed around "raw geometry throughput and data latency". I recommend watching the whole video.

- Sony being coy with the PS5 internals and deep technical features was mostly because of the bad reception of the Road to PS5 talk, there was an internal mandate from Jim Ryan himself and developers also followed suit hence the very slow drip of information

- CPU is Zen 2 with a unified 8MB L3 cache (confirmed by two developers), there is no option to turn off SMT of the CPU. The unified cache allows significantly lower latency when accessing the cache memory.

- One CPU core is dedicated to operating system and similar functionality which leaves the rest of the 7 cores for developers

- The DDR4 chip is for SSD caching and OS tasks, developers will completely ignore this.

- PS5 does not feature Sampler Feedback Streaming however there are tools which offer similar results/functionality by developers (however it's a little more complicated)

- PS5's Primitive Shaders are much more advanced than the ones used in RDNA 1 and allow "extreme" levels of precision to the GPU

- VRS also runs on "extreme" precision with the Geometry Engine

- Unreal Engine 5's "Nanite" technology was making good use of the PS5's Geometry Engine and has been the best showcase of the GE so far

- The cache scrubbers have been overlooked and are not getting enough attention; according to the developer they offer a significant speedup of the GPU and reduce a lot of overhead

- The Tempest Engine is already being used by developers for CPU related tasks and is great at things like physics

- PS5's compute unit architecture is pretty much the same as that in the desktop implementation of RDNA 2 and the Series X

And the CPU L3 cache being only 8MB sounds like complete bullshit to me. I'm pretty sure Sony is trying to reduce as much as possible how much bandwidth the CPU takes from the GPU. This is the complete opposite of that.

So they have built, according to him, this amazing cache management precisely to improve hit rates and overall bandwidth, but then they decide to give the CPU an L3 that is 1/4 the size of the one in the commercial CPUs.

SUPAIDAMAN!!!

Developers are generally where the issues are, not languages.

I've heard plenty of advocates for strongly typed, const correct, static analysis friendly code. It's amazing how much shit code you can write within those boundaries too.


I'm talking about the right tool for the job.

> R6 is $9.99 and CoD is $69.99. So just to try and test, it's worth it. Thanks

Siege really is that great if you like online multiplayer.

> Two and a half million PS5s out there, and I can't freaking believe that we still haven't seen the full SoC specs.
>
> It doesn't make any sense. LOL

We got any word from Sony?

> Developers are generally where the issues are, not languages.
>
> I've heard plenty of advocates for strongly typed, const correct, static analysis friendly code. It's amazing how much shit code you can write within those boundaries too.

Good language design is always important. For instance, you can write shit and slow code in Rust, but in safe Rust you won't run into memory-safety bugs at runtime. Anyone who has worked with C/C++ knows how easily people mess this stuff up.

> Two and a half million PS5s out there, and I can't freaking believe that we still haven't seen the full SoC specs.
>
> It doesn't make any sense. LOL

What?