Correct. Yes, this is the path of the future (10-year timeline).

Software-Based Display Simulators

(e.g. CRT electron beam simulators in a shader)

Upcoming 240Hz OLEDs are almost enough to begin CRT electron beam simulators in software (simulate 1 CRT refresh cycle via 4 digital refresh cycles).

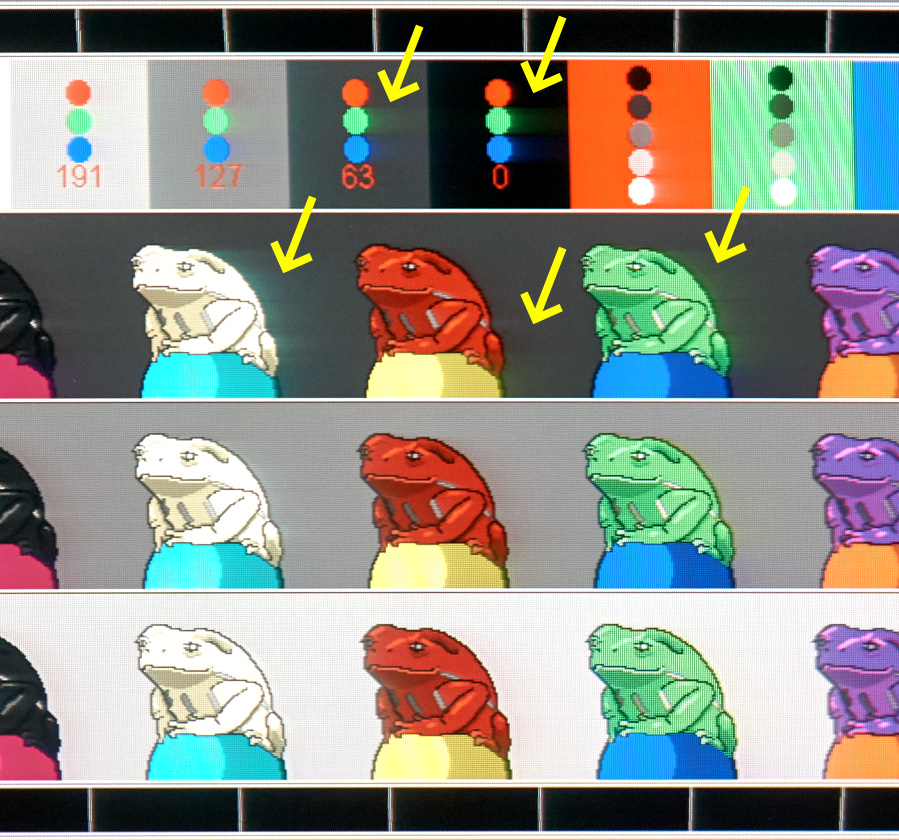

Today we can do this:

An example of how software algorithms can simulate a different display that you don't already have:

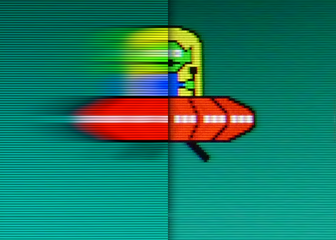

- testufo.com/vrr

Variable refresh rate simulation

Software-based simulation of VRR on a non-VRR display.

Works on any display, even non-VRR displays.

- testufo.com/blackframes#count=4&bonusufo=1

Variable control of motion blur at a fixed frame rate

Requires 144Hz or higher to avoid uncomfortable flicker.

Applicable to future adjustable phosphor decay capabilities.

- testufo.com/rainboweffect

Simulation of DLP color wheel (rainbow effect)

Requires 240Hz or higher refresh rate for the rainbow effect to look accurate.

If you can't see the rainbow effect, wave your hand really fast in front of this animation to see the rainbow effect.

WARNING: Epilepsy risk if you don't already have a 240Hz monitor. These demos look flicker-free on a 240Hz+ screen.

DO NOT RUN THIS TEST AT 60HZ: EYE PAIN WARNING
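The arithmetic behind the black-frame demo above can be sketched roughly as follows. This is a minimal illustration, assuming each cycle shows one or more lit refreshes followed by black refreshes. The names `bfi_schedule`, `cycle_length` and `visible` are hypothetical and do not correspond to TestUFO's URL parameters.

```python
def bfi_schedule(refresh_hz: float, cycle_length: int, visible: int = 1):
    """Return (effective_fps, persistence_ms, pattern) for software BFI.

    Assumption: every cycle is `cycle_length` refreshes long, with the
    first `visible` refreshes lit and the remainder black.
    """
    if not 1 <= visible <= cycle_length:
        raise ValueError("visible must be between 1 and cycle_length")
    # One source frame is shown per cycle, so the frame rate divides down.
    effective_fps = refresh_hz / cycle_length
    # The image is only lit for `visible` refreshes out of each cycle.
    persistence_ms = 1000.0 * visible / refresh_hz
    # True = show the frame, False = show black.
    pattern = [i < visible for i in range(cycle_length)]
    return effective_fps, persistence_ms, pattern


if __name__ == "__main__":
    fps, ms, pattern = bfi_schedule(240, 4)
    print(f"{fps:.0f} fps, {ms:.2f} ms persistence, pattern={pattern}")
```

On a 240Hz display with a 4-refresh cycle this gives 60 fps content with roughly 4ms of persistence, which lines up with the 4ms figure quoted for 240Hz.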

Tomorrow, we will be able to do this:

240Hz HDR/OLED -- semi-accurate CRT electron gun simulators (4ms blur)

600Hz+ displays -- plasma subfield simulators.

1000Hz+ displays -- accurate CRT electron gun simulators (1ms blur) with near-zero motion blur and phosphor decay closely matching a real CRT (60Hz flicker becomes as comfortable as on an old CRT), better than classic software BFI

1440Hz+ displays -- DLP temporal dithering simulation via binary 1-bit flashing on/off pixels to generate 24-bit color (24 LCD/OLED refresh cycles per simulated DLP refresh cycle)
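The refresh-cycle counts in the list above are simple division. Here is a small sketch, assuming the simulated display runs at 60Hz (the post states this for the CRT case, and the 24-cycle DLP figure implies it). The function names are illustrative only.

```python
def subrefreshes_per_cycle(display_hz: float, simulated_hz: float = 60.0) -> float:
    """Digital refresh cycles available per simulated refresh cycle."""
    if display_hz <= 0 or simulated_hz <= 0:
        raise ValueError("rates must be positive")
    return display_hz / simulated_hz


def tier_table(display_rates, simulated_hz: float = 60.0):
    """Return (display_hz, sub-refresh count, divides evenly?) rows."""
    rows = []
    for hz in display_rates:
        count = subrefreshes_per_cycle(hz, simulated_hz)
        rows.append((hz, count, float(count).is_integer()))
    return rows


if __name__ == "__main__":
    for hz, count, exact in tier_table([240, 600, 1000, 1440, 1920]):
        note = "" if exact else " (not a whole number)"
        print(f"{hz} Hz -> {count:.2f} refreshes per 60 Hz cycle{note}")
```

Note that 1000Hz does not divide evenly by 60Hz, so a real simulator would presumably need to handle fractional sub-refreshes or run the display at a multiple such as 960Hz. That is an observation about the arithmetic, not something the demos above prescribe.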

We Only Need To Simulate Up To Temporal Retina Thresholds

NOTE: Yes, a CRT responds in nanoseconds for the leading edge blur, but there's a lot of trailing edge blur. The human eye doesn't see the leading edge blur on a CRT (due to nanosecond/microsecond response) since it's far below retina threshold. But the trailing blur is noticeable (as phosphor ghosting) and we can still simulate that in software -- because there's literally 20ms worth of blurring.

Simulating a 60 Hz CRT Accurately Via 1920 Hz Display: 32 Digital Refreshes Per 1 CRT Refresh

For example, a future 1920Hz display gives us 32 digital refresh cycles to simulate 1 CRT refresh cycle of a 60Hz CRT. This is done by using a GPU shader to simulate 1/32nd of a CRT refresh cycle of the electron beam per digital refresh. So you generate 32 frames, each containing 1/1920sec worth of CRT electron gun simulation, and output them to the 1920Hz display (1/60sec worth in total). That is fast enough to be (more or less) a human-retina simulation of a CRT. And because it'd be a correct simulation of a CRT, including rolling scan and phosphor decay, the comfort will be equal to an original CRT (unlike uncomfortable 60Hz squarewave BFI).
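To make the rolling scan plus phosphor decay idea concrete, here is a minimal CPU-side sketch of the per-scanline math such a shader might use. It is not an existing project's code, and it rests on loud assumptions: the phosphor is modelled as a single exponential decay with time constant `tau` (real phosphors are more complicated), vertical blanking is ignored, and all names are hypothetical. Time is measured in units of one CRT refresh.

```python
import math


def crt_subframe_weights(num_lines, subframes, k, tau=0.05, past_refreshes=2):
    """Fraction of each scanline's light emitted during sub-frame k.

    Assumptions (illustrative only): the beam hits line y at time
    (y + 0.5) / num_lines within each refresh, and the phosphor then
    emits light following exp(-t / tau), with t in CRT refresh periods.
    """
    if not 0 <= k < subframes:
        raise ValueError("k must be in range(subframes)")
    start, end = k / subframes, (k + 1) / subframes
    weights = []
    for y in range(num_lines):
        hit = (y + 0.5) / num_lines  # beam arrival time for this line
        total = 0.0
        # Include glow left over from earlier passes of the beam.
        for r in range(past_refreshes + 1):
            excite = hit - r  # time of the beam pass r refreshes ago
            if end <= excite:
                continue  # beam has not reached this line yet
            t0 = max(start, excite) - excite
            t1 = end - excite
            # Light emitted between t0 and t1 after excitation.
            total += math.exp(-t0 / tau) - math.exp(-t1 / tau)
        weights.append(total)
    return weights


if __name__ == "__main__":
    # 1920 Hz display simulating a 60 Hz CRT: 32 sub-frames per refresh.
    for k in (0, 15, 31):
        row = crt_subframe_weights(8, 32, k)
        print(k, " ".join(f"{w:.3f}" for w in row))
```

Each output sub-frame would be the source image with every scanline multiplied by its weight, then scaled up by whatever gain the display's brightness headroom allows. For a static image the weights of one line sum to about 1 across the 32 sub-frames, so no light is lost or invented by the slicing.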

You'd get the same artifacts (e.g. plasma contouring, DLP noise, rainbow artifacts, CRT phosphor trails), because brute Hz gives the simulation fine enough temporal granularity to reproduce the retro display accurately.

That being said, we ideally need HDR, so we can flicker the pixels even brighter (if possible), because a CRT electron beam can be extremely bright. 2000-nit HDR will allow 200-nit CRT electron beam simulation (if software-based phosphor decay is adjusted to ~90% blur reduction).
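The 2000-nit to 200-nit figure follows from the pixels being lit only about 10% of the time. A quick sketch of that arithmetic, assuming average brightness scales linearly with the lit fraction of each refresh (a squarewave-style simplification; the function names are illustrative):

```python
def average_nits(peak_nits: float, blur_reduction: float) -> float:
    """Average brightness when pixels are dark for `blur_reduction` of the time."""
    if not 0.0 <= blur_reduction < 1.0:
        raise ValueError("blur_reduction must be in [0, 1)")
    duty_cycle = 1.0 - blur_reduction  # fraction of time the pixel is lit
    return peak_nits * duty_cycle


def required_peak_nits(target_nits: float, blur_reduction: float) -> float:
    """Peak brightness needed to keep `target_nits` on average."""
    if not 0.0 <= blur_reduction < 1.0:
        raise ValueError("blur_reduction must be in [0, 1)")
    return target_nits / (1.0 - blur_reduction)


if __name__ == "__main__":
    print(f"{average_nits(2000, 0.90):.0f} nits average from a 2000-nit peak")
    print(f"{required_peak_nits(200, 0.90):.0f} nits peak for a 200-nit average")
```

A real phosphor decay curve is not a squarewave, so the exact numbers would shift, but the trade-off between blur reduction and required peak brightness stays the same.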

A Retina-Everything (Resolution, Hz, HDR) Display Can Software-Simulate Any Prior Display Accurately

4K 240Hz OLED, then 4K 480Hz, then 8K 240Hz, then 8K 500Hz, then 8K 1000Hz (and so on) -- display algorithm simulators will become more and more accurate as the century proceeds, and we'll achieve a perfect Turing test (A/B blind test between a flat MicroLED/OLED and a flat CRT tube) when we retina-out resolution AND we retina-out refresh rate AND we retina-out HDR. Such a display can theoretically simulate any display before it!

This century will be lots of fun attempting to do retina simultaneously for all of those (resolution AND refresh AND HDR / color gamut). Tomorrow's temporal retro display simulators will be written as GPU shaders, and open source projects will permanently preserve retro displays in a display-independent, OS-independent manner. Just throw sheer brute Hz at it and you've got your magic. When nobody can purchase a rare used Sony GDM-FW900 CRT in year 2045 for less than $10000, you simply purchase an 8K 1000Hz MicroLED/OLED and download a CRT-electron-simulator GitHub project instead. VOILA!!!!

Note: Retina threshold can vary from human to human, but one can simply target the 99% threshold, as an example, to capture most of the human population's sensitivities. Remember, geometric upgrades are needed for Hz (laboratory tests show that 240Hz-vs-1000Hz is easier to tell apart than 240Hz-vs-360Hz). This is true also for 1000Hz versus 4000Hz. Researchers discovered you need roughly 4x refresh rate increases in those stratospheres for human-visible differences, due to the diminishing curve of returns. Temporals behave like spatials in that the closer you get to retina, the bigger the jump you need to see even a very marginal difference. Like DVD-vs-4K is easier to tell apart than VHS-vs-DVD.
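As a rough illustration of why geometric steps matter, the sketch below compares upgrades by ratio and by milliseconds of persistence removed. It assumes sample-and-hold persistence equals one refresh period (frame rate equal to refresh rate), consistent with the 4ms and 1ms figures quoted earlier. It is plain arithmetic, not a model of human perception, and the function names are hypothetical.

```python
def persistence_ms(refresh_hz: float) -> float:
    """Sample-and-hold persistence, assuming frame rate equals refresh rate."""
    return 1000.0 / refresh_hz


def blur_step(low_hz: float, high_hz: float):
    """Return (ratio, milliseconds of persistence removed) for an upgrade."""
    return high_hz / low_hz, persistence_ms(low_hz) - persistence_ms(high_hz)


def geometric_ladder(start_hz: float, factor: float = 4.0, steps: int = 4):
    """Refresh rates spaced by a constant factor (4x is the rough figure above)."""
    return [start_hz * factor ** i for i in range(steps)]


if __name__ == "__main__":
    for low, high in ((240, 360), (240, 1000), (1000, 4000)):
        ratio, saved = blur_step(low, high)
        print(f"{low} -> {high} Hz: {ratio:.2f}x, {saved:.2f} ms less persistence")
    print(geometric_ladder(60))
```

The 240Hz to 360Hz step is only a 1.5x ratio, while 240Hz to 1000Hz is over 4x, which matches the claim that the larger jump is easier to tell apart.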

Long term, we would like to see an open source project that creates a Windows Indirect Display Driver, to apply a display simulator to everything you do in Windows (including running emulators and games that don't have an accurate CRT temporal simulator). Spatial HLSL simulation of CRT texture doesn't make motion blur / phosphor decay identical to an original CRT -- just try playing Sonic the Hedgehog in MAME, even with MAME HLSL. Adding temporal HLSL simulation in addition to spatial HLSL simulation solves that problem.