the problem is nothing more than a bottleneck

8 GB GPUs run into all sorts of VRAM/RAM limitations at 1440p and beyond with ray tracing enabled and very high textures

and high textures, at times, look worse than PS4, so it's not an option either


for now I settled on maxed-out ray tracing with very high textures at native 1080p. playing at 1440p and above causes huge VRAM-bound bottlenecks. setting the textures to High is a fix, but you get worse-than-PS4 textures most of the time. since DLSS/FSR still use 1440p/4K assets, they still incur heavy memory consumption
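A rough way to see why resolution matters so much: every screen-sized buffer (render targets, ray tracing buffers and so on) grows with the pixel count. The Python sketch below only does that arithmetic. The 64 bytes per pixel figure is a made-up placeholder, not something measured from the game, and the sketch does not model textures or how DLSS/FSR choose assets.

```python
# Rough arithmetic only: how screen-sized buffers grow with output resolution.
# BYTES_PER_PIXEL is a hypothetical placeholder, not a value taken from the game.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

BYTES_PER_PIXEL = 64  # assumption: combined size of all per-pixel buffers


def pixel_count(name: str) -> int:
    """Number of pixels at the given output resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def scale_vs_1080p(name: str) -> float:
    """How many times more pixels than native 1080p."""
    return pixel_count(name) / pixel_count("1080p")


def buffers_mib(name: str, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Memory for per-pixel buffers in MiB under the assumed bytes-per-pixel."""
    return pixel_count(name) * bytes_per_pixel / (1024 ** 2)


if __name__ == "__main__":
    for res in RESOLUTIONS:
        print(f"{res}: {pixel_count(res):,} px, "
              f"{scale_vs_1080p(res):.2f}x 1080p, "
              f"~{buffers_mib(res):.0f} MiB of per-pixel buffers")
```

1440p has about 1.78x and 4K exactly 4x the pixels of 1080p, so anything sized per pixel grows by the same factor on top of whatever the texture pool already needs.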


also, high textures + high reflections will give you weird reflections...

using "very high geometry" EVEN with low textures fixes the problem.

so, takeaways:

1) very high geometry has better reflections than PS5 regardless of texture setting, whether you have textures at low or very high

2) high textures + high geometry has worse reflections than PS5 (by a huge margin). high geometry practically needs very high textures to properly create reflections

3) high geometry only produces PS5-equivalent reflections when paired with very high textures

and at 1440p and beyond, it is impossible (given how the game manages memory allocation) to fit high geometry + very high textures. you can fit very high geometry + high textures at 1440p, but then you will have to live with these textures:

ps4 textures

very high textures

"so-called" high textures, which Alex suggests for 6 GB GPUs. You also need to use High textures at 1440p and above with 8 GB cards to enable ray tracing without VRAM-bound performance drops

TL;DR

- The game only uses a maximum of 80% of VRAM (aside from background apps)

- You need Very High textures to get the high-quality textures intended for both PS4 and PS5. High textures are not a MATCH for PS4 textures: the improved PS5 textures and the old-gen PS4 textures are both bundled into the Very High texture setting. If you use High textures, you get even worse textures than PS4, so be careful

- With how the game handles memory allocation, 8 GB VRAM GPUs are not enough at 1440p and above to run both ray tracing and Very High textures. Only at 1080p did I manage to run ray tracing with Very High textures without enormous slowdowns.

- Slowdowns are caused by excess memory spilling into normal RAM, which causes constant data transfer over PCIe. This does not happen when you have enough VRAM or play at lower resolutions.
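The 80% cap and the spill-over behaviour can be put into rough numbers. This is a minimal Python sketch assuming the ~80% figure above; the 7 GB demand and 16 GB/s PCIe bandwidth used in the example are hypothetical inputs, not measurements from the game, and the function names are made up for illustration.

```python
# Hypothetical model of VRAM spill-over. The 80% cap comes from the post;
# demand figures and PCIe bandwidth are illustrative assumptions only.

VRAM_CAP_FRACTION = 0.80  # observed: the game uses at most ~80% of VRAM


def usable_budget_gb(vram_gb: float, cap: float = VRAM_CAP_FRACTION) -> float:
    """VRAM the game will allocate before data starts spilling to system RAM."""
    return vram_gb * cap


def spill_gb(demand_gb: float, vram_gb: float) -> float:
    """Part of the working set that does not fit and ends up in system RAM."""
    return max(0.0, demand_gb - usable_budget_gb(vram_gb))


def transfer_ms(spill: float, pcie_gb_per_s: float) -> float:
    """Time to move the spilled data across PCIe once, in milliseconds."""
    return spill / pcie_gb_per_s * 1000.0


if __name__ == "__main__":
    demand = 7.0  # hypothetical working set in GB (1440p, RT, very high textures)
    pcie = 16.0   # assumed effective PCIe bandwidth in GB/s
    s = spill_gb(demand, 8.0)
    print(f"budget {usable_budget_gb(8.0):.1f} GB, spill {s:.1f} GB, "
          f"{transfer_ms(s, pcie):.1f} ms per full transfer "
          f"(a 60 FPS frame is {1000 / 60:.1f} ms)")
```

Even if only part of the spilled data has to cross the bus each frame, the transfer time competes directly with a ~16.7 ms frame budget, which fits the slowdowns described above.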

In short, it is impossible to match PS5-equivalent performance, since the PS5 constantly runs at around 1440p in its 60 FPS modes and 8 GB cards hit VRAM bottlenecks at 1440p and above.

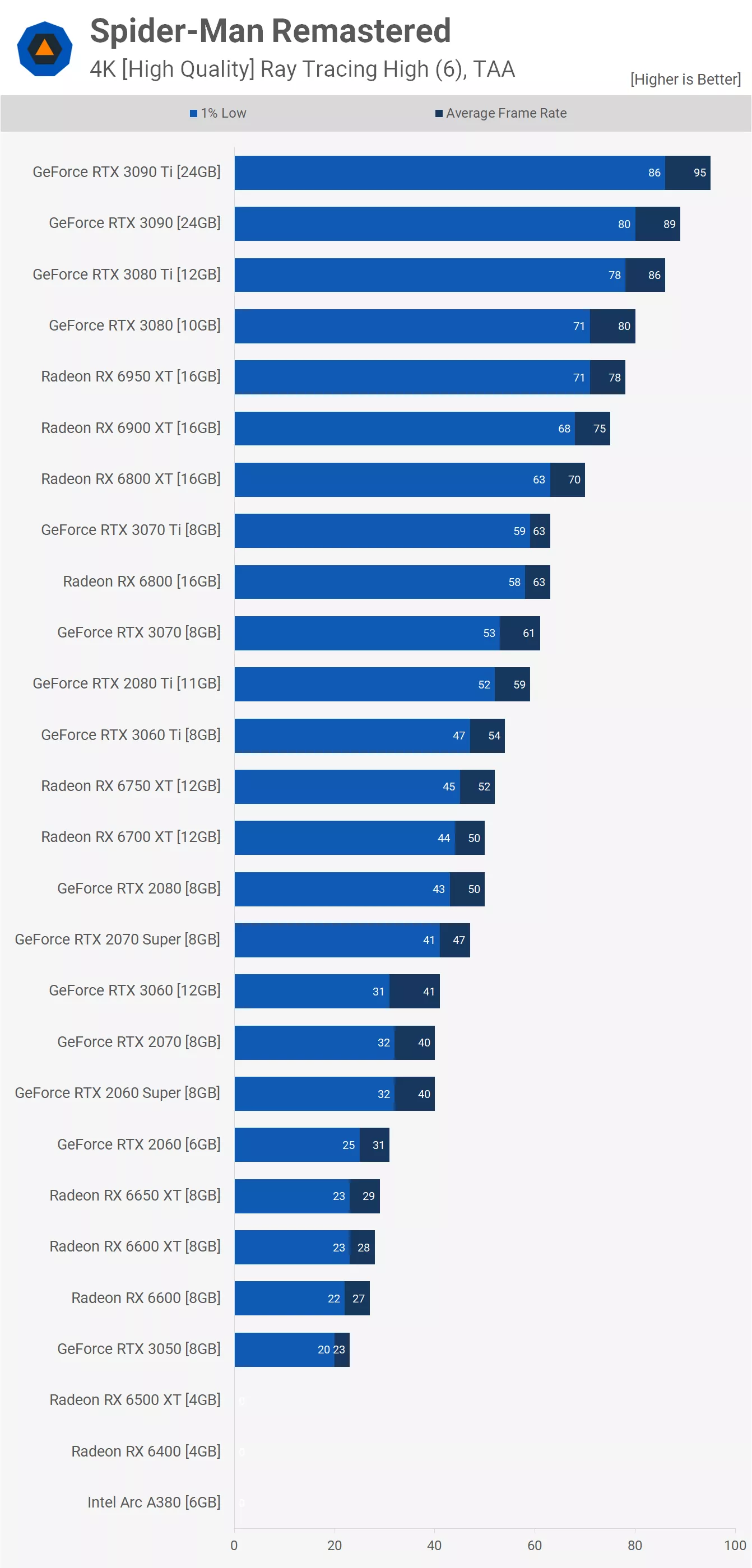

The VRAM bottleneck is real: the RTX 3060 can almost match PS5 ray tracing performance at native 4K, while the 3070/3070 Ti cannot. At native 4K the VRAM situation becomes so drastic that the 3070/3070 Ti drops below 30 FPS with very high textures. The only way to play at native 4K on a 3070 like the PS5 does is to use high textures, which, as shown above, degrades overall texture quality quite heavily.

Here at native 4K with nearly PS5-equivalent RT settings, the 3060 is able to get 40+ FPS (until it too is affected by the VRAM bottleneck, and yes, it is)

and here is the 3070 with similar RT settings:

it drops below 40 FPS and underperforms, even compared to the 3060

I know it has been a long post, but due to how the game handles memory allocation and how bad high textures look, for now it is impossible to enjoy this game at maximum fidelity at 1440p with an 8 GB card. All of these cards severely underperform, with slowdowns and excess PCIe transfers.