Shin

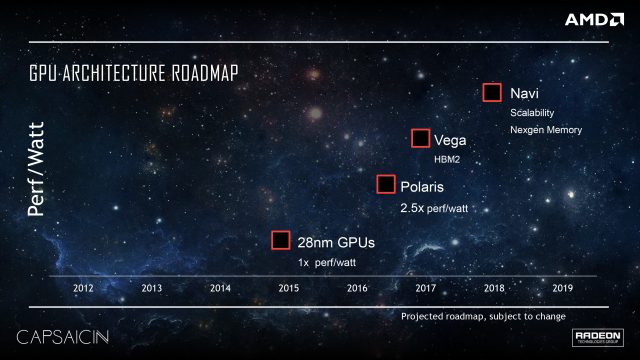

Little is known about AMD's upcoming graphics hardware based on the new Navi architecture, but a number of leaks and driver releases are starting to bring the project into focus. Navi is the codename for new Radeon video cards built on 7nm process technology, allowing for more rendering power and higher efficiency, and it's also the architecture confirmed for use in Sony's upcoming PlayStation 5 GPU.

Beyond that, official details on the tech are somewhat thin on the ground. All that we really know is that, like Vega before it, the new architecture is arriving late to market, while AMD roadmaps refer only to support for 'next generation memory' as a defining feature. Beyond AMD's next GPU releases, the following phase of development refers to a 'next generation' architecture, suggesting that Navi is based on the same Graphics Core Next (GCN) foundations as hardware going all the way back to 2011's Radeon HD 7970. This has been confirmed by Linux drivers released this week, explicitly tying the Navi codename to the GCN architecture, according to this Phoronix report.

How the core has been enhanced over Vega and its prior GCN stablemates remains to be seen, but a PCB leak from Komachi Ensaka via a now-deleted post on a Chinese forum reveals an all-new board design we've not seen before, marked with AMD branding. The boards seen in the photos are not populated with any silicon, but a lot of information can be gleaned from them.

Credit: Eurogamer <--- read the full story.

Extra: Navi is GCN-based

This should be easier to follow for interested parties: large commits were recently made for the architecture, which also relates to the Gen 8 consoles (PS4/Xbox).
