Embarrassing as always, I see.

Uh-huh, I'm afraid you don't have much of a leg to stand on with this, to put it mildly. Let's leave the personal insults and the like out of this.

The reason it's not getting attention wouldn't be because it's reserved for a particular set of CPUs, a particular set of GPUs and a particular set of mobos, right?

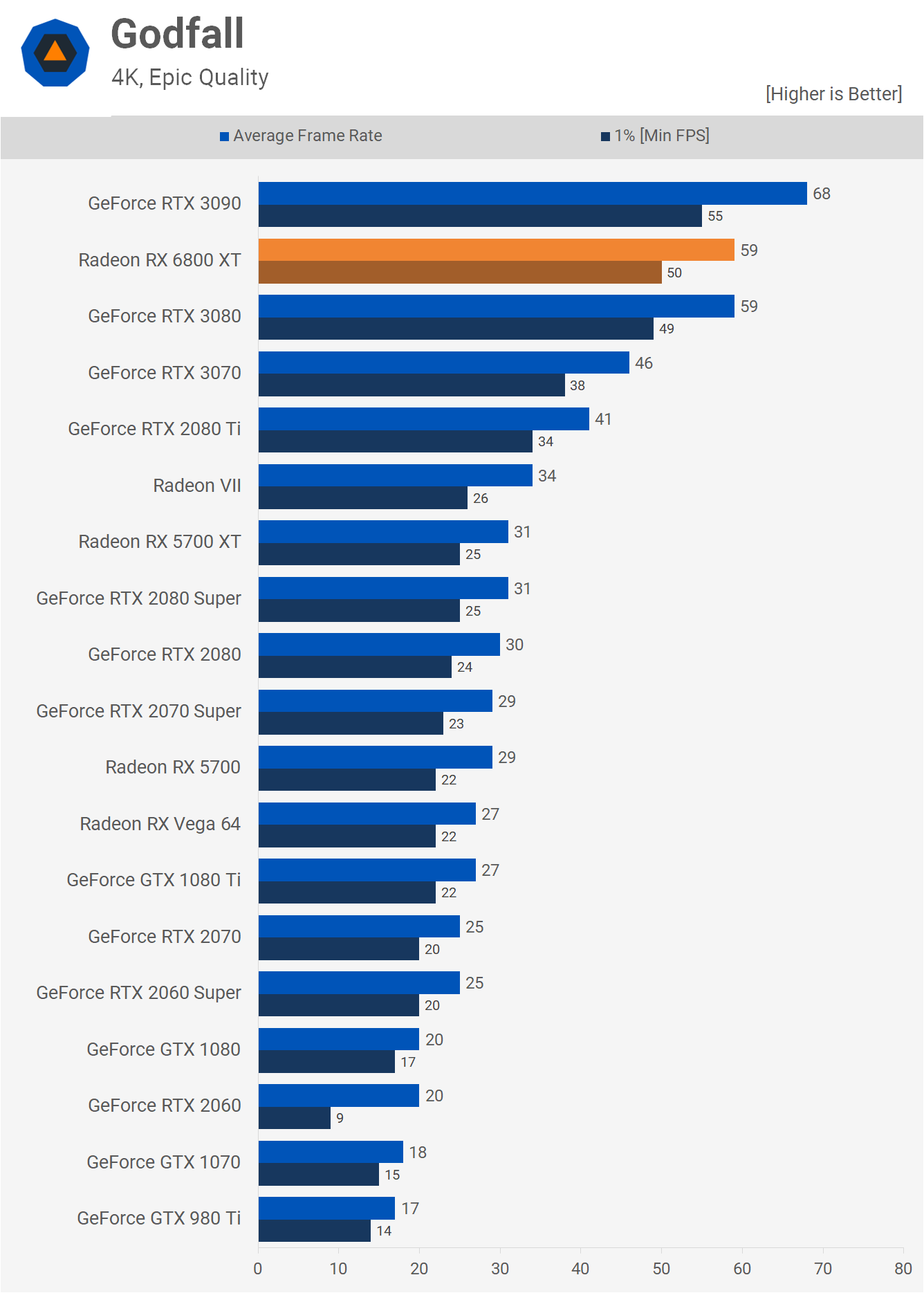

In and of itself that is a fair point and I don't totally disagree either. But then we see things like DLSS, which works in a far smaller range of games, and the hype surrounding it, and that narrative kind of falls apart a little under further inspection, no?

It's because everyone is secretly against AMD.

Where are you getting this type of sentiment? I've seen this brought up a few times in various threads, mostly by people with a strong Nvidia leaning, to put it kindly. Nobody has a secret headcanon or fanfic about the world being secretly against AMD. Repeating it indefinitely doesn't somehow make it true.

Nvidia is the long-time market leader, with somewhere between 70-80% market share right now. Being market leader tends to afford you a certain dominance in mindshare with fans, gamers and the press. Nvidia also has absolutely amazing marketing and branding, seriously some of the best in the world. They are much better than AMD at marketing their products and features, and of course having market dominance certainly helps there.

In terms of the tech press, we have already seen with the Hardware Unboxed fiasco that Nvidia can be quite "forceful", so to speak, about the talking points, features and editorial narrative that they want people to push. How much more of that goes on behind the scenes and is never reported on or heard about? Who knows, but it is not a massive stretch to say it could be a factor in what tech channels and sites choose to push.

Granted I'm not saying that is 100% the case or anything, but Nvidia have given enough pause for thought with their shenanigans that there may always be a seed of doubt in people's minds. Although I don't really want to relitigate the whole HU fiasco, that thread was embarrassing enough as it is. But the point I'm making is that if SAM was an Nvidia feature like DLSS for example and Nvidia had come out with it and not AMD then we could potentially see a more positive press spin than we currently have seen from most outlets. Of course that is not guaranteed but probably somewhat likely all things considered.

Regarding the perception of SAM in the minds of Nvidia fans/fanboys? It would definitely be viewed more positively and added to the "long list of features Nvidia is ahead of AMD with! lol" etc... I mean this is so obvious I don't even think it needs to be said. That is the kind of thing fanboys do whether they are for Sony, MS, Nvidia, AMD, Intel or whoever so I don't really see how you could disagree with such an obvious point. For example if AMD came out with DLSS instead of Nvidia the AMD fanboys would likely be overwhelmingly positive on "AMD-DLSS" and the Nvidia fanboys would be downplaying it every chance they got and vice versa.

Its because we're all pro nvidia.

You certainly are, there's not really much question about that, as it is self-evident from your posts in this thread and others. There are also many others who seem to regularly post in this thread, or really any Radeon-related thread, with mostly negative AMD comments and mostly positive Nvidia comments. This is not rocket science and is again self-evident just looking at this or any of those threads. We generally call this type of thing "brigading" or simply trolling.

Generally the motivators are insecurity over their purchase and fear of competition. This results in a need to defend their purchase in an overly zealous manner, kind of like a religious foot soldier or cult member, and as we all know the best defense is a good offense. Anyway, this is product fanboying on internet forums 101, nothing particularly new here to learn for anyone reading this. My point was that a lot of the people who match the description above and have been vigorously posting in this thread have been either outright ignoring or downplaying SAM, simply because it is not an Nvidia feature.

Which is a logical takeaway. Given Nvidia's market dominance over the last decade or so, it is also logical to assume there are far more fans of Nvidia than of AMD, and that they have been used to buying Nvidia products as a no-brainer because AMD was not competitive. Now that AMD is competitive, we see excess downplaying of AMD and its features, and otherwise acting out, as they don't know how to handle actually having competition. A pretty simple and logical conclusion, I think.

Making a pathetic post like this and ending it talking about fanboys and insecurity is just the cherry on top.....Good to see a bastion of objectivity like yourself

As explained, my original comment was pretty short, straightforward and based on logical conclusions and evidence. The fact that you were triggered enough to post the above drivel in response says everything.

I don't patrol Nvidia threads to try to dissuade people from buying 3000 series GPUs, I don't continually troll them with low-effort bait and hot takes, and I don't quote AMD marketing as if it were fact. I don't post overly negative comments about 3000 series GPUs in this thread either, and I've given Nvidia their due: better Ray Tracing, much better Path Tracing, DLSS which I think is great in general, and big CUDA-based advantages in Blender or the Adobe suite. These are great things and great reasons to buy them.

Don't take this the wrong way, but being called a fanboy by someone who brigades all Radeon threads on behalf of the Nvidia mothership isn't exactly the stinging barb you believe it to be. You unfortunately don't have the credibility to pull that kind of thing off and be taken seriously, sorry.

Acting like you're personally persecuted

Again, where are you getting this from? Who is acting personally persecuted anywhere regarding GPUs? I'm certainly not. As above, simply repeating this over and over again doesn't make it true. They are just GPUs man. We are all technology enthusiasts, but at the end of the day they are luxury consumer electronics, toys essentially, and I don't think anyone is feeling personally persecuted over toys. I just dislike hypocrisy, double standards and misinformation, especially when people partake in them with a smugness multiplier. When I see these things I tend to correct them where I can and when I can be bothered.