The Cockatrice

Gold Member

1. No dev is forcing it upon you.

2. It's an extra feature; it isn't being used to mask a lack of performance optimization.

3. "Not saying DS runs bad without it" (it runs great, so what are you telling us exactly?)

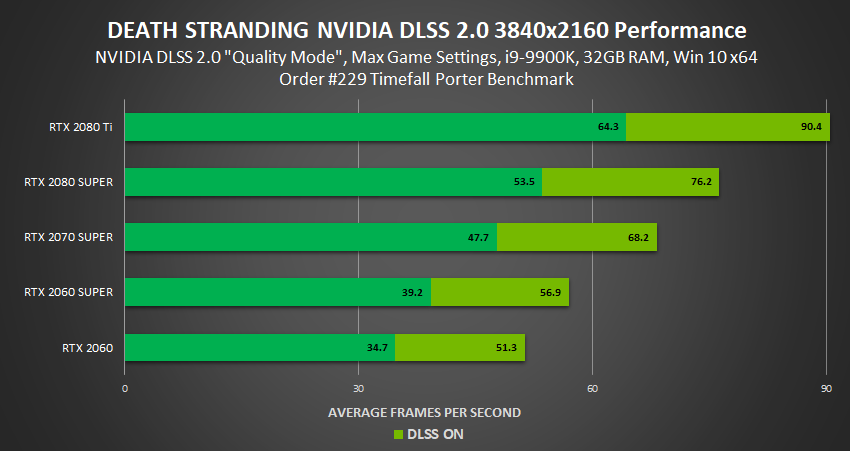

4. They call it the future because it's a huge performance boost at the cost of a slight loss in image quality. We are aware of how punishing 4K is, and with RT it's gonna be worse. No optimization will save you.

5. It's more than a sharpening filter. It's a reconstruction algorithm, and it shits on FidelityFX CAS.

6. It's doubtful devs will ever use it to excuse poor performance, but if one day it becomes so good that it's effectively indiscernible from native 4K while still offering a huge performance boost, who cares?

If it was just about sharpening, then why is AMD's Contrast Adaptive Sharpening in this game inferior to DLSS in a side-by-side comparison?

I never said it's just a sharpening filter. I know what it does; I used it on my 2080. And the differences are not indiscernible at all. It's easy to hide the differences in a shitty YouTube video, but actually playing is a whole different thing. You have too much blind faith that it won't be abused as an excuse for poor performance, and I'm pretty sure Watch Dogs Legion will be the second example after Control in that regard. We can talk more about this in 2022 when it's more common.