http://blogs.nvidia.com/blog/2013/10/18/g-sync/

FULL FAQ: http://www.geforce.co.uk/hardware/technology/g-sync/faq

DEMO: http://www.youtube.com/watch?v=NffTOnZFdVs&hd=1

How To Upgrade To G-SYNC

If you’re as excited by NVIDIA G-SYNC as we are, and want to get your own G-SYNC monitor, here’s how. Later this year, our first G-SYNC modules will be winging their way to professional modders who will install G-SYNC modules into ASUS VG248QE monitors, rated by press and gamers as one of the best gaming panels available. These modded VG248QE monitors will be sold by the modding firms at a small premium to cover their costs, and a 1-year warranty will be included, covering both the monitor and the G-SYNC module, giving buyers peace of mind.

Alternatively, if you’re a dab hand with a Phillips screwdriver, you can purchase the kit itself and mod an ASUS VG248QE monitor at home. This is of course the cheaper option, and you’ll still receive a 1-year warranty on the G-SYNC module, though this obviously won’t cover modding accidents that are a result of your own doing. A complete installation instruction manual will be available to view online when the module becomes available, giving you a good idea of the skill level required for the DIY solution; assuming proficiency with modding, our technical gurus believe installation should take approximately 30 minutes.

If you prefer to simply buy a monitor off the shelf from a retailer or e-tailer, NVIDIA G-SYNC monitors developed and manufactured by monitor OEMs will be available for sale next year. These monitors will range in size and resolution, scaling all the way up to deluxe 3840x2160 “4K” models, resulting in the ultimate combination of image quality, image smoothness, and input responsiveness.

http://www.geforce.com/whats-new/ar...evolutionary-ultra-smooth-stutter-free-gaming

Made some images for a visual representation of tearing, lag/stutter, and what G-Sync does. There are probably some inaccuracies (I'm not overly knowledgeable on the tech), but it should at least give people an idea.

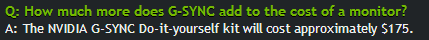

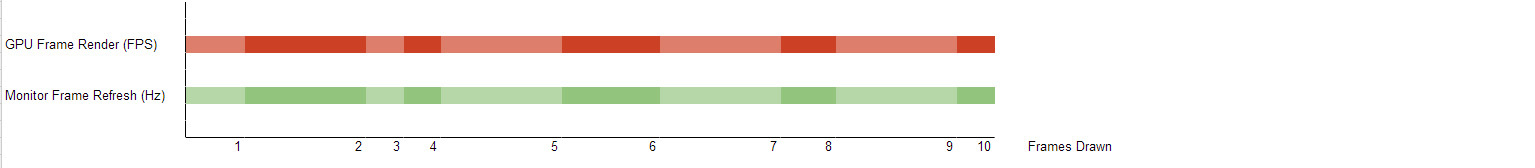

Image 1: Perfection.

In graphics rendering you have two forces at play. The first is your hardware/GPU, which renders each frame of the game; how many frames it produces per second is the framerate (fps). The second is your monitor/display, which refreshes the image on screen; how many times it does this per second is the refresh rate, measured in hertz (Hz). Traditionally your monitor's refresh rate is locked. Do you have a 60Hz monitor? What about 120Hz? Or 144Hz? That number tells you how many times per second the monitor refreshes itself, whether or not the GPU has a new frame ready.
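The two numbers are easier to compare as times rather than rates. Here is a small Python sketch (the function names are made up for illustration) that turns each one into milliseconds per frame or per refresh. When the two times match, the GPU finishes a frame at exactly the pace the monitor refreshes.

```python
# Convert a rate (fps or Hz) into the time between frames or refreshes.

def refresh_interval_ms(hz: float) -> float:
    """Milliseconds between two refreshes of a fixed-rate monitor."""
    return 1000.0 / hz


def frame_time_ms(fps: float) -> float:
    """Milliseconds the GPU spends on each frame at a steady framerate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for hz in (60, 120, 144):
        print(f"{hz}Hz monitor: a refresh every {refresh_interval_ms(hz):.2f} ms")
    # 60Hz monitor: a refresh every 16.67 ms
    # 120Hz monitor: a refresh every 8.33 ms
    # 144Hz monitor: a refresh every 6.94 ms
    print(f"45fps game: a new frame every {frame_time_ms(45):.2f} ms")
    # 45fps game: a new frame every 22.22 ms
```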

And so, in a perfect world, the GPU would render exactly one new frame of game data for every refresh of the monitor. The two would work in perfect unison.

But this doesn't happen, not unless you have insane hardware or run the game at lower settings. We see it on PC and we see it on consoles: framerate fluctuates. Your GPU does not render at a locked framerate most of the time. And even when it is hitting that smooth 60fps, something can happen in the game that drops it. A big explosion out of nowhere. A building crumbling. The hardware is stressed, and the framerate drops. It might even drop below the monitor's refresh rate.

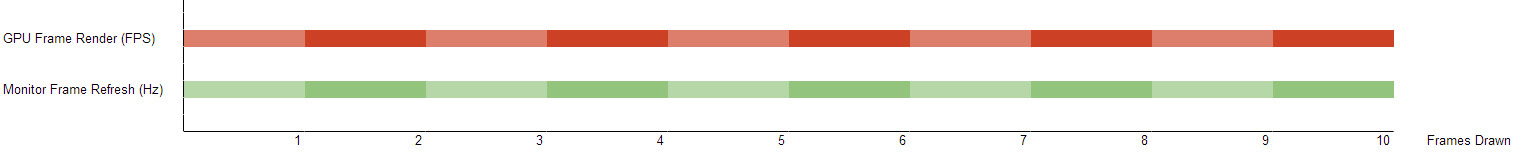

Image 2: Tearing

So what happens when our framerate is moving all over the place, but our monitor's refresh rate is locked? We get something called "tearing". Long and short of it, this happens when the frames being rendered by the GPU are not in sync with the monitor's fixed refresh rate, so the two overlap. See image below.

This means that the GPU sometimes swaps in a new frame while the monitor is partway through drawing the previous one, so the screen ends up showing the top of one frame and the bottom of the next. This is what causes that big horizontal "tear" through the screen in some games.
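To see why the tear is a horizontal line, here is a rough Python model (`tear_row` and its parameters are hypothetical, made up for this illustration). It assumes the monitor paints rows top to bottom at a steady pace over each refresh, ignores the short vertical blanking gap between refreshes, and assumes Vsync is off, so the GPU swaps frames whenever one is finished.

```python
from typing import Optional


def tear_row(swap_ms: float, refresh_hz: float, rows: int = 1080) -> Optional[int]:
    """Screen row where the image splits if the GPU swaps frames at swap_ms.

    Simplified model: rows are drawn top to bottom at an even pace over the whole
    refresh interval (vertical blanking ignored) and Vsync is off. Rows above the
    returned index still show the old frame; rows from it downward show the new one.
    """
    interval = 1000.0 / refresh_hz
    phase = swap_ms % interval  # how far into the current refresh the swap lands
    if phase == 0:
        return None  # swap lines up exactly with the start of a refresh: no visible tear
    return int(phase / interval * rows)


if __name__ == "__main__":
    # A 50fps game on a 60Hz monitor: each swap lands a little later in the
    # refresh than the one before, so the tear creeps down the screen.
    frame_ms = 1000.0 / 50
    for k in range(1, 5):
        print(f"frame {k}: tear near row {tear_row(k * frame_ms, 60)}")
```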

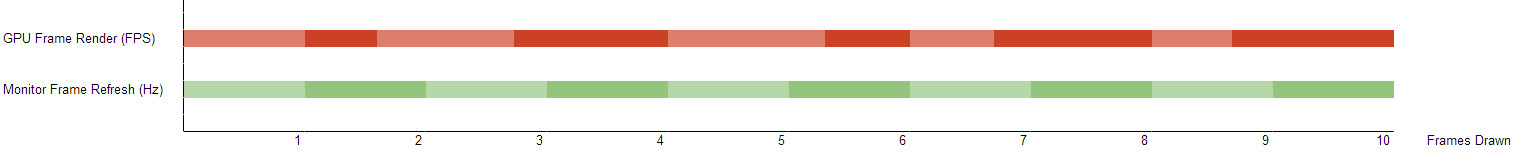

Image 3: Vsync Solution! Also Stutter/Lag

People fucking hate tearing, so a solution was found: Vsync (vertical sync). Vsync looks at the refresh rate of your monitor. 60Hz? 120Hz? And it says "I'm only going to send GPU-rendered frames to the beat of that refresh rate!". Everything is synchronised! But this has another problem. As we just mentioned, games rarely run at locked framerates. So what happens when my 60fps game drops to 45fps, but my monitor is 60Hz, and I'm using Vsync?

Essentially, each finished frame has to 'wait' for the monitor's next refresh before it can be shown. Remember, the monitor's refresh rate is locked. It keeps beating to the same rhythm, regardless of how fast or slow the GPU is spitting out frame data. In this case, our GPU is rendering frames slower than the monitor refreshes, so it is constantly playing catch-up. If the monitor goes to draw a frame but no new frame is ready, it simply draws the last one again, doubling it up for an extra refresh (about 16.7ms at 60Hz). Imagine this happening over and over in a game: some frames stay on screen twice as long as others, which gives the impression of "stuttering". It also introduces input lag, because a finished frame has to sit and wait for the next refresh before it appears, so your mouse and keyboard input takes longer to show up on screen.
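The stutter is easier to see with numbers. Below is a rough Python simulation (`vsync_timeline` is a made-up helper, not a real API). It assumes the GPU keeps rendering at a steady framerate at or below the refresh rate and never blocks, which is roughly what triple buffering does; with plain double buffering the GPU would stall instead, and the game would tend to settle at 30fps on a 60Hz monitor.

```python
import math
from typing import List, Tuple


def vsync_timeline(fps: float, refresh_hz: float, frames: int) -> Tuple[List[float], List[float]]:
    """Simulate Vsync with a GPU that never blocks (roughly triple buffering).

    Assumes fps <= refresh_hz. Returns (hold_ms, wait_ms):
      hold_ms[i]: how long frame i stays on screen before the next frame replaces it
      wait_ms[i]: how long finished frame i waits for a refresh before it is shown
    """
    render = 1000.0 / fps
    interval = 1000.0 / refresh_hz
    done, shown = [], []
    for k in range(1, frames + 2):
        finished = k * render
        # Vsync: a frame can only go on screen at the next refresh tick.
        # The tiny epsilon stops float rounding from skipping a tick it landed on exactly.
        tick = math.ceil(finished / interval - 1e-9)
        done.append(finished)
        shown.append(tick * interval)
    hold = [b - a for a, b in zip(shown, shown[1:])]
    wait = [s - d for d, s in zip(done[:-1], shown[:-1])]
    return hold, wait


if __name__ == "__main__":
    hold, wait = vsync_timeline(fps=45, refresh_hz=60, frames=6)
    print("on screen (ms):", [round(h, 1) for h in hold])
    print("waiting (ms):  ", [round(w, 1) for w in wait])
```

At 45fps on 60Hz, two out of every three frames stay up for one refresh (about 16.7ms) and every third frame stays up for two (about 33.3ms). That uneven pacing is the stutter, and the waiting times are the extra delay Vsync adds before a frame, and the input behind it, reaches the screen.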

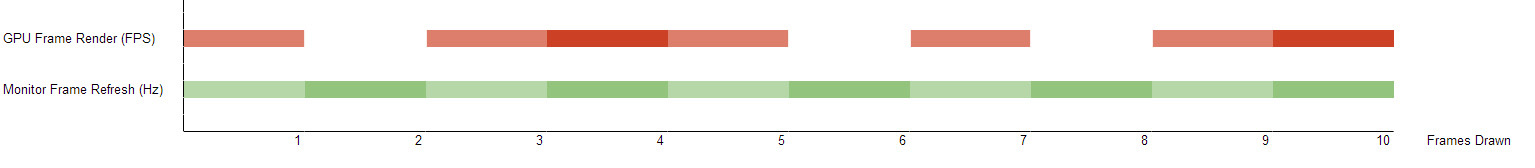

Image 4: G-Sync Solution

G-Sync is a hardware module inside your monitor that communicates directly with the GPU. Instead of making the GPU wait for the monitor like Vsync does, G-Sync says "why don't we change the monitor's refresh rate too?".

So no matter how fast or slow the GPU is rendering frames, the monitor is never locked to a particular refresh rate (within the range the panel supports). It isn't stuck at 60Hz when the GPU can only manage 45fps; in this case, the monitor would drop to 45Hz to match the framerate. And if the GPU suddenly boosts to 110fps? The monitor boosts to 110Hz too.
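For comparison with the fixed-refresh case, here is the same idea as a rough Python sketch. The 30 to 144Hz panel range and the function names are assumptions made up for illustration (G-Sync itself lives in the monitor's hardware module, not in code like this). The point is just that the monitor follows the GPU, so at a steady 45fps every frame stays on screen for the same 22.2ms instead of some frames being doubled up.

```python
from typing import List

# Hypothetical panel limits, chosen only for illustration.
# Real G-Sync monitors each have their own supported range.
PANEL_MIN_HZ = 30.0
PANEL_MAX_HZ = 144.0


def gsync_refresh_hz(fps: float) -> float:
    """Refresh rate a variable-refresh panel would follow for a given framerate."""
    return min(max(fps, PANEL_MIN_HZ), PANEL_MAX_HZ)


def gsync_hold_times(frame_gaps_ms: List[float]) -> List[float]:
    """How long each frame stays on screen when the monitor refreshes as soon as
    the next frame is ready.

    frame_gaps_ms[i] is the time between one finished frame and the next.
    The only wait left is when the GPU is faster than the panel's top refresh
    rate; frames slower than PANEL_MIN_HZ are not modelled here.
    """
    fastest_refresh_ms = 1000.0 / PANEL_MAX_HZ
    return [max(gap, fastest_refresh_ms) for gap in frame_gaps_ms]


if __name__ == "__main__":
    print(gsync_refresh_hz(45), gsync_refresh_hz(110))
    steady_45fps = [1000.0 / 45] * 5
    print([round(h, 1) for h in gsync_hold_times(steady_45fps)])
    # [22.2, 22.2, 22.2, 22.2, 22.2]
```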

Every frame is drawn perfectly in sync with the monitor. The monitor doesn't ever have to play catch up to the GPU (tearing), nor does the GPU ever have to play catch up to the monitor (stutter/lag).