In graphics, you're going to have some kind of geometry representation. The typical one is a mesh of triangles approximating a shape. It's so typical that it's practically universal in games.

But you can also use mathematical equations that describe a shape - let's say a sphere.

One such equation is a function that takes a point in 3D space and returns the shortest distance from that point to the surface of the shape.
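For a sphere, that function is about as simple as it gets: the distance from the point to the centre, minus the radius. Here's a minimal Python sketch. The name `sphere_sdf` is my own, and I've used the common 'signed' convention where points inside the shape get negative distances:

```python
import math

def sphere_sdf(p, centre, radius):
    """Signed distance from point p to the surface of a sphere.

    Positive outside the sphere, zero on its surface, negative inside.
    """
    return math.dist(p, centre) - radius

origin = (0.0, 0.0, 0.0)
print(sphere_sdf((2.0, 0.0, 0.0), origin, 1.0))  # 1.0: one unit outside
print(sphere_sdf((0.0, 0.5, 0.0), origin, 1.0))  # -0.5: half a unit inside
```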

You can use that function in rendering - in a ray tracer, for example, to figure out the point on the sphere that a camera's pixel should be rendering.

So instead of putting a bunch of polygons representing a sphere down a rendering pipeline, you can trace a ray per pixel and evaluate precisely what point on the sphere that pixel should be looking at. You've probably heard of 'per pixel' effects in other contexts - this would be like 'per pixel geometry'.
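One common way to do that per-pixel search with a distance function is 'sphere tracing' (also called ray marching): step along the ray by whatever distance the function reports, since nothing can be closer than that. This is a hedged sketch, not anyone's production renderer. The step limit, the hit threshold `epsilon` and the tiny character-based 'screen' are illustrative assumptions:

```python
import math

def sphere_sdf(p, centre=(0.0, 0.0, 0.0), radius=1.0):
    # Signed distance to a sphere: negative inside, positive outside.
    return math.dist(p, centre) - radius

def sphere_trace(origin, direction, sdf, max_steps=128, epsilon=1e-4, max_dist=100.0):
    """March along a ray, stepping by the distance the SDF reports.

    The SDF value at a point is the radius of a sphere guaranteed to be free
    of surface, so stepping that far can never jump through the shape.
    Returns the distance t to the hit along the ray, or None for a miss.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < epsilon:
            return t  # close enough to the surface: treat it as a hit
        t += dist
        if t > max_dist:
            break  # the ray has left the scene
    return None

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

# One ray per "pixel" of a tiny character image; '#' marks a hit.
camera = (0.0, 0.0, -3.0)
width, height = 21, 11
for j in range(height):
    row = ""
    for i in range(width):
        # Map the pixel to a direction through a small image plane in front of the camera.
        x = 0.5 * (i - width // 2) / (width // 2)
        y = 0.5 * (height // 2 - j) / (height // 2)
        ray = normalize((x, y, 1.0))
        row += "#" if sphere_trace(camera, ray, sphere_sdf) is not None else "."
    print(row)
```

Each pixel's loop may evaluate the function many times, which is why the cost of evaluating it matters so much.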

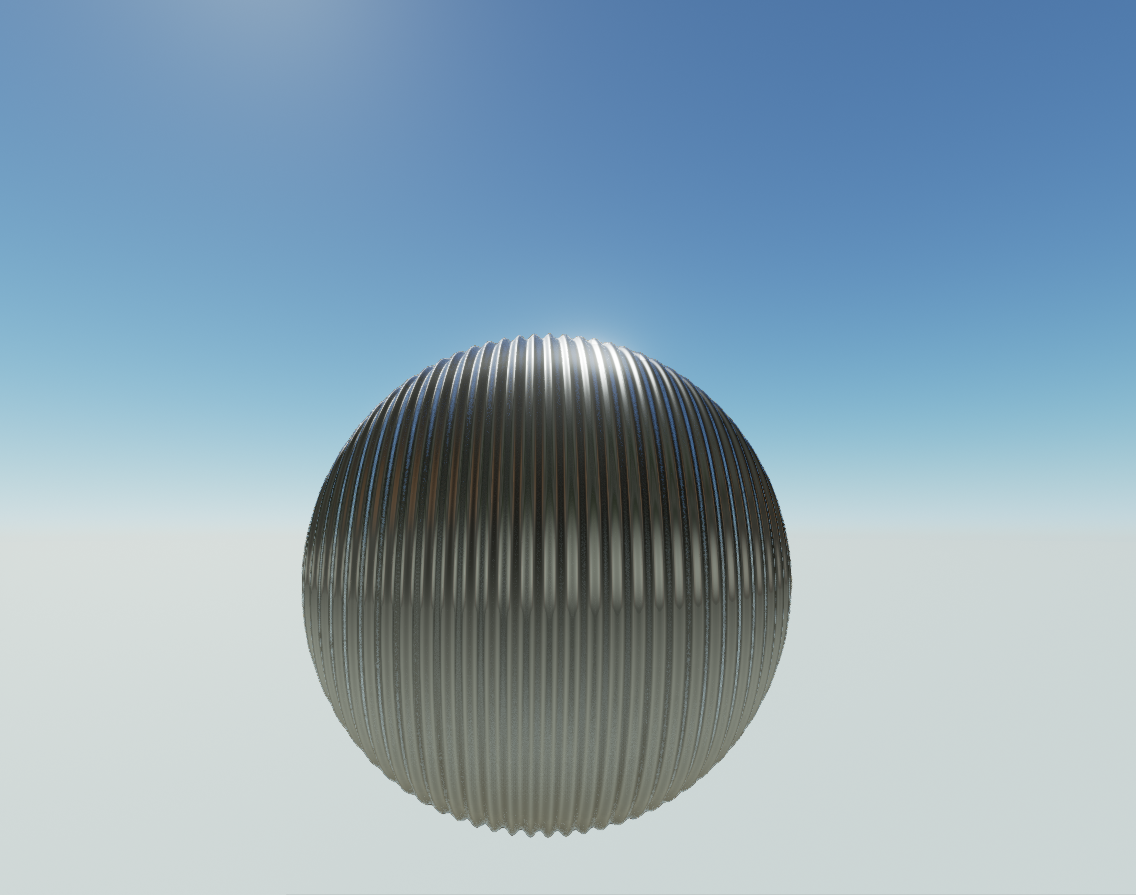

You can do interesting things with these functions. You can blend two shapes together very simply, with a mathematical operation on their two functions, or subtract one shape from the other with another operation. You can also deform shapes in lots of interesting ways: add noise, twist them, and so on. For example, here's a shape with a little deformation on its surface, described by a function using 'sin':

This render was produced by tracing the function: at every pixel, the function was evaluated multiple times to find the point the pixel was looking at. Notice how smooth it is; you're not seeing any polygonal edges or the like here. It's a very precise way of rendering geometry.
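The blending, subtracting and sin-based deformation mentioned above really are tiny when written out. Here's a hedged Python sketch of commonly used versions: `min` for union, `max` for intersection and subtraction, a polynomial 'smooth minimum' for blending (one of several formulas in use), and a sin pattern added to a sphere's distance. The function names and the ripple parameters are my own illustrative choices, not anything MM has described:

```python
import math

def sphere_sdf(p, radius):
    # Sphere centred at the origin.
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def box_sdf(p, half_size):
    # Signed distance to an axis-aligned box centred at the origin.
    q = [abs(c) - h for c, h in zip(p, half_size)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside

def union(d1, d2):
    # Whichever surface is nearer wins: both shapes together.
    return min(d1, d2)

def intersect(d1, d2):
    # Only the region inside both shapes.
    return max(d1, d2)

def subtract(d1, d2):
    # Shape 1 with shape 2 carved out (the inside of shape 2 becomes outside).
    return max(d1, -d2)

def smooth_union(d1, d2, k):
    # Polynomial "smooth minimum": like union, but the two surfaces melt
    # into each other wherever their distances are within k of each other.
    h = max(k - abs(d1 - d2), 0.0) / k
    return min(d1, d2) - h * h * k * 0.25

def rippled_sphere(p, radius=1.0, amplitude=0.05, frequency=10.0):
    # Displace the sphere's distance with a sin pattern to ripple its surface.
    # The result is no longer an exact distance, so a tracer may need to
    # take shorter steps to avoid overshooting the ripples.
    ripple = (amplitude * math.sin(frequency * p[0])
              * math.sin(frequency * p[1]) * math.sin(frequency * p[2]))
    return sphere_sdf(p, radius) + ripple

def drilled_sphere(p):
    # Example composite: a unit sphere with a square hole bored along z.
    return subtract(sphere_sdf(p, 1.0), box_sdf(p, (0.4, 0.4, 2.0)))
```

Because each operation just takes and returns distances, they nest freely: the output of one can feed straight into another, which is what makes sculpting-style editing so cheap to express.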

Now this isn't exactly what Media Molecule is doing. And here's where I diverge into speculation based on tweets and stuff.

(I think) MM is taking the mathematical functions mentioned above and evaluating them at points in a 3D texture, turning them into something they can look up at discrete points, which is a lot faster than calculating the functions from scratch. So when you're sculpting in the game, it'll be baking the results of these geometry-defining functions and operations into a 3D texture. That turns the distance functions into a more explicit representation, which you might see referred to as a distance field.
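Here's roughly what 'baking into a 3D texture' means, as a Python sketch. This is a generic illustration, not MM's pipeline: the flat-list grid layout, the resolution and the function names are all assumptions of mine. On a GPU, the trilinear filtering in `sample` would normally be done by the hardware when sampling a 3D texture; here it's written out by hand:

```python
import math

def sphere_sdf(p):
    # Unit sphere at the origin, standing in for whatever the user sculpted.
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0

def bake_distance_field(sdf, resolution, bounds_min, bounds_max):
    """Evaluate sdf once at every point of a resolution^3 grid.

    Returns (grid, step): grid is a flat list indexed as
    grid[x + resolution * (y + resolution * z)], step is the spacing per axis.
    """
    step = [(hi - lo) / (resolution - 1) for lo, hi in zip(bounds_min, bounds_max)]
    grid = []
    for z in range(resolution):
        for y in range(resolution):
            for x in range(resolution):
                p = (bounds_min[0] + x * step[0],
                     bounds_min[1] + y * step[1],
                     bounds_min[2] + z * step[2])
                grid.append(sdf(p))
    return grid, step

def sample(grid, step, resolution, bounds_min, p):
    """Look up the baked field at an arbitrary point with trilinear filtering."""
    # Continuous grid coordinates of p, clamped so the 2x2x2 cell stays in range.
    g = [(c - lo) / s for c, lo, s in zip(p, bounds_min, step)]
    g = [min(max(c, 0.0), resolution - 1.000001) for c in g]
    x, y, z = (int(c) for c in g)
    fx, fy, fz = (c - int(c) for c in g)

    def at(i, j, k):
        return grid[i + resolution * (j + resolution * k)]

    def lerp(a, b, t):
        return a + (b - a) * t

    # Interpolate along x on the four cell edges, then along y, then z.
    c00 = lerp(at(x, y, z), at(x + 1, y, z), fx)
    c10 = lerp(at(x, y + 1, z), at(x + 1, y + 1, z), fx)
    c01 = lerp(at(x, y, z + 1), at(x + 1, y, z + 1), fx)
    c11 = lerp(at(x, y + 1, z + 1), at(x + 1, y + 1, z + 1), fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)

# Bake once (the slow part); every later query is a cheap lookup.
lo, hi, n = (-1.5, -1.5, -1.5), (1.5, 1.5, 1.5), 33
grid, step = bake_distance_field(sphere_sdf, n, lo, hi)
print(sample(grid, step, n, lo, (0.0, 0.0, 0.0)))  # about -1.0 (the centre)
print(sample(grid, step, n, lo, (1.0, 0.0, 0.0)))  # about 0.0 (on the surface)
```

The trade-off is memory and resolution: the baked field can only capture detail down to about the grid spacing, but a lookup costs the same however complicated the original chain of functions was.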

To render the object represented by that texture, they have a couple of options. They could trace a ray and look up the 3D texture as needed to find the point on the surface to be shaded, which is what I previously thought they were doing. But a more recent tweet suggests they are 'splatting' the distance field to the screen, which is sort of the reverse: rather than each pixel searching for the surface, the surface data is projected out onto the pixels. They'll be explaining this at SIGGRAPH.
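Since MM's method isn't public yet, the following is only a generic illustration of the forward 'splatting' direction, not their technique. Assumptions: grid points lying within one cell of the surface are treated as the samples to splat, the camera is a simple pinhole on the z axis, and each sample covers exactly one pixel. The function and parameter names are hypothetical:

```python
import math

def sphere_sdf(p):
    # Unit sphere at the origin, standing in for a baked distance field.
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0

def splat_distance_field(sdf, resolution, half_extent, width, height, focal, camera_z):
    """Project samples lying near the surface onto the screen, keeping the
    nearest one per pixel in a depth buffer.

    This runs in the opposite direction to ray tracing: instead of each pixel
    asking "what do I see?", each sample asks "which pixel do I land on?".
    """
    depth = [[math.inf] * width for _ in range(height)]
    cell = 2.0 * half_extent / (resolution - 1)
    for k in range(resolution):
        for j in range(resolution):
            for i in range(resolution):
                p = (-half_extent + i * cell, -half_extent + j * cell, -half_extent + k * cell)
                # Skip samples more than one cell away from the surface.
                if abs(sdf(p)) > cell:
                    continue
                # Pinhole camera on the z axis at camera_z, looking towards +z.
                z = p[2] - camera_z
                if z <= 0.0:
                    continue  # behind the camera
                px = math.floor(width / 2 + focal * p[0] / z)
                py = math.floor(height / 2 - focal * p[1] / z)
                # Depth test: the nearest sample landing on a pixel wins.
                if 0 <= px < width and 0 <= py < height and z < depth[py][px]:
                    depth[py][px] = z
    return depth

depth = splat_distance_field(sphere_sdf, resolution=41, half_extent=1.5,
                             width=40, height=20, focal=20.0, camera_z=-4.0)
for row in depth:
    print("".join("#" if d < math.inf else "." for d in row))
```

A real splatting renderer would draw a small footprint per sample rather than a single pixel, so that samples spread out close to the camera don't leave holes.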

The advantages are the ease of deforming geometry with relatively simple operations. Doing robust deformation and boolean (addition/subtraction/intersection etc.) operations with polygonal meshes is really hard. Knowledge of 'the shortest distance from a given point to the surface' can also be applied in lots of other areas: ambient occlusion, shadowing, lighting, physics (collision detection). It's a handy representation to have for things that are trickier with polygons. UE4 has recently added the option to represent geometry with distance fields for high-quality shadowing.
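As an example of reusing the same field outside rendering, here's a hedged sketch of the collision-detection case: a ball pushed out of whatever surface a distance function describes. The field gives the penetration depth directly, and its gradient gives the direction to push. It's deliberately simplified (no velocities, a single contact), and the names are hypothetical:

```python
import math

def sphere_sdf(p):
    # Unit sphere at the origin, standing in for any distance field.
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0

def sdf_normal(sdf, p, h=1e-4):
    """Estimate the surface normal as the normalized gradient of the field,
    using central differences along each axis."""
    g = []
    for axis in range(3):
        a = list(p)
        b = list(p)
        a[axis] += h
        b[axis] -= h
        g.append((sdf(a) - sdf(b)) / (2 * h))
    length = math.sqrt(sum(c * c for c in g))
    if length == 0.0:
        return [0.0, 0.0, 0.0]  # gradient undefined here (e.g. a sphere's exact centre)
    return [c / length for c in g]

def resolve_ball_collision(sdf, centre, radius):
    """Move a ball of the given radius so it just touches the surface."""
    d = sdf(centre)
    if d >= radius:
        return tuple(centre)  # not touching: leave it where it is
    n = sdf_normal(sdf, centre)
    push = radius - d  # how far the ball has sunk into the surface
    return tuple(c + push * nc for c, nc in zip(centre, n))

print(resolve_ball_collision(sphere_sdf, (1.2, 0.0, 0.0), 0.5))  # roughly (1.5, 0.0, 0.0)
```

Note there's no mesh-vs-mesh test anywhere: one function evaluation answers "how far am I from the surface?", which is exactly the query that is awkward to answer with polygons.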

The disadvantage of this (and of using it from top to toe in your pipeline!) is that it's tricky to make fast, and obviously GPUs, content pipelines and so on are built around the idea of triangle rasterisation. But GPUs have become a lot more flexible lately, so maybe as time wears on we'll see even more non-traditional, 'software rendering' style approaches on the GPU.