McHuj

Member

I've put a spec analysis in the comments on this rumored devkit parts info:

Devkit parts

Those seem reasonable for $500, I think.

Very specific, very believable. But it could still be all BS.

Can someone check that I got the bandwidth calc right?

576 GB/sec since it's clamshell mode.

We need the bus width to know the bandwidth.

With 16 chips it can be either 256-bit or 512-bit.
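For anyone wanting to check the numbers, here is a rough sketch of the arithmetic in Python. It assumes the rumored 18 Gb/s per-pin data rate and 16 chips, and that each GDDR6 chip exposes a 32-bit interface that drops to 16 bits in clamshell mode. The function name is made up for illustration.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: pins * Gb/s per pin / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


CHIPS = 16             # from the rumored parts list
DATA_RATE_GBPS = 18.0  # rumored GDDR6 speed, Gb/s per pin

# Assumption: x32 per chip normally, x16 per chip in clamshell mode.
for label, bits_per_chip in (("clamshell", 16), ("non-clamshell", 32)):
    bus_width = CHIPS * bits_per_chip
    bandwidth = peak_bandwidth_gb_s(bus_width, DATA_RATE_GBPS)
    print(f"{label}: {bus_width}-bit bus, {bandwidth:.0f} GB/s")
```

Under those assumptions, 576 GB/s corresponds to the 256-bit (clamshell) case and 1152 GB/s to the 512-bit case.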

Wait, is that a 2TB SSD on a console (not dev kit)?

Great - if it's fake they did a good job.

Notes

monolithic die ~22.4mm by ~14.1mm: die size 316 mm², close to people's expectations

16 Samsung K4ZAF325BM-HC18: 32 GB GDDR6 @ 18Gb/s, 1152 GB/s bandwidth, WOW!

memory vrm seems like overkill: devkit overengineered - nothing to see here

3 Samsung K4AAG085WB-MCRC, 2 of those close to the NAND acting as DRAM cache: 3 x 2GB DDR4. So 2GB DDR4 for OS, and 4GB as SSD cache - this must be Sony's secret sauce for load times

4 NAND packages soldered to the PCB which are TH58LJT2T24BAEG from Toshiba: it's NVMe SSD, 4TB total. Obviously more than we'll get in baseline console

PS5016-E16 from Phison: PCIe Gen 4.0 controller, makes stuff work - nothing to see here

Sounds good.

Surprised about the monolithic die - I was expecting chiplets on an interposer - maybe it still is but they didn't take the lid off.

Can someone check that I got the bandwidth calc right?

No way it's gonna be a big fat 512-bit bus! Unless it's $599 minimum.

We need the bus width to know the bandwidth.

With 16 chips it can be either 256-bit or 512-bit.

Well, 256-bit gives a bandwidth of 576 GB/s if the GDDR6 is running at its specified speed (18 Gb/s per pin, i.e. 18000MHz effective).

No way it's gonna be a big fat 512-bit bus! Unless it's $599 minimum.

I'll take an avatar bet if that happens on a consumer console.

It's a dev kit, don't expect 2TB storage or 32GB RAM in the retail unit.

Wait, is that a 2TB SSD on a console (not dev kit)?

He said 4 chips and each one is 512GB.

Here it also mentions 2TB, but it's hybrid (like 2TB HDD + 256GB SSD cache):

Ps5 2020 infos - Pastebin.com

2TB sounds batshit insane for a console! Some other rumors mention 1TB SSD (no HDD) for both Sony and MS.

Honestly, 32GB GDDR6 sounds a lot more believable than slapping 2TB of SSD storage.

Either way, I get the impression that Sony has multiple PS5 iterations in their labs, so multiple leaks from various sources (minus fake ones) might be explained by that.

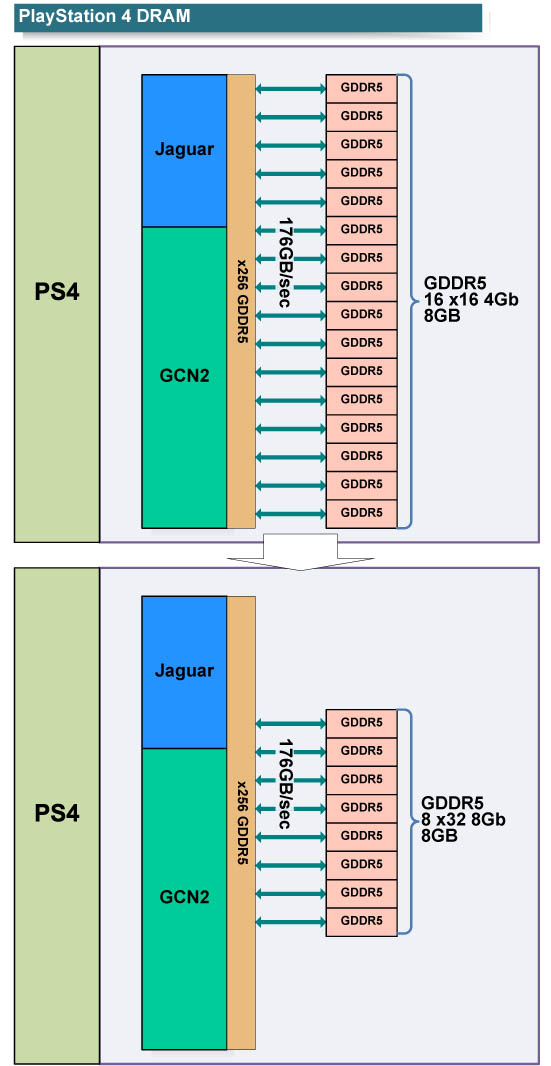

Does anyone remember the 2 PS4 Pro iterations? The one we got had Jaguar, the 2nd one ($499 SKU) had a Zen 1 CPU. The 2nd one is probably what became the proto-PS5.

No way it's gonna be a big fat 512-bit bus! Unless it's $599 minimum.

I'll take an avatar bet if that happens on a consumer console.

MS is supposed to release two consoles. One less powerful and another one more powerful. We don't know if these specs are for the weaker or the stronger one.

"Better" in terms of what? Rasterization? Efficiency? Software-based vs hardware-based RT?

Vega is a compute monster, but it's very power hungry as well. Just calling a spade a spade.

Let's say that Sony hits 13TF and MS miraculously reaches 15TF. How many watts do you think they would need for that?

Vega will have less efficiency than Navi, just like with every old vs new GCN microarchitecture comparison.

IIRC, dev kits have 48GB of RAM and 4TB of SSD storage.

It's a dev kit, don't expect 2TB storage or 32GB RAM in the retail unit.

Now I'm confused. Aren't those dev kit specs?

IIRC, dev kits have 48GB of RAM and 4TB of SSD storage.

Yep, and it says 38GB RAM total (32GB GDDR6 and 6GB DDR4) and 4TB SSD.

Now I'm confused. Aren't those dev kit specs?

Depends. Microsoft has some high-end nextbox coming after all.

They should be ditching their (overpriced) TV and phone business, not trying to make excuses to keep those money pits alive.

Wasn't 22GB GDDR6 closer to the bandwidth leak?

Yep, and it says 38GB RAM total (32GB GDDR6 and 6GB DDR4) and 4TB SSD.

You are probably looking at 16GB GDDR6 + 6GB DDR4 and 1TB SSD for retail unit.

One thing says FAKE, FAKE, FAKE here, and that's the die size. There's really no way they could know that without taking the lid off the APU, which would almost certainly break it or need re-lidding. How the fuck could they do that in a dev environment without getting the sack or into a ton of trouble?

Unless there's an explanation for this I'd say well made fake leak.

What do you mean? APUs and GPUs have no lid (if you meant the heat spreader, only CPUs have that).

Why would it break?

Either way, we'll know for sure the exact dimensions of the APU die (assuming it's monolithic) by the end of 2020.

Great - if it's fake they did a good job.

Sounds good.

Yep, there is a leak with 22GB RAM.

Wasn't 22GB GDDR6 closer to the bandwidth leak?

Thanks for detailing the analysis for me. I was on my phone when I posted on Reddit, and I usually do all my posting on PC.

Well, thank you. You might want to check it (my stuff) again, because I've had to make 2 major corrections... oops.

Sony's key marketing at the PS4 reveal was games playing without any load times, and most Sony first-party games hide the load times while you watch the cutscenes... Killzone at launch, for example, gives you gameplay in less than 15s from a console cold boot.

I'm not sure if I'm allowed to post ERA links, but I think this post is very thoughtful:

Next-gen PS5 and next Xbox speculation launch thread - MY ANACONDA DON'T WANT NONE

www.resetera.com

I guess Switch revolutionized the gaming field in a way? People want minimum friction these days, no time to waste on console booting/excessive loading times.

16 GDDR6 is what I'd expect if the HBM rumor isn't true.

Oops

You're very late CyberPanda

It's not theory, I tested it before I posted.

In which games do you suspect that to be the case?

Don't get me wrong, I don't believe your theory, but if there is some merit to it I'm curious to see it.

A 6TF RX 480 can match the X1X in FH4 and TW3 with tweaking and a decent mem OC. A good OC should yield 2250MHz on either the RX 480 or RX 580. I don't believe the max mem clock ever changed. Polaris 10 has nasty power consumption scaling at max clocks. The X1X also has 326GB/s memory bandwidth; I'm stuck around 273GB/s, and the RX 480/580 has 288GB/s max.

I don't believe an RX 480 or RX 580 is enough to match Xbox One X results.
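A back-of-the-envelope check of those bandwidth figures, sketched in Python. Assumptions not stated in the post: GDDR5 moves 4 bits per pin per memory-clock cycle (so a 2250MHz memory clock means 9 Gb/s), the RX 480/580 has a 256-bit bus, and the X1X has a 384-bit bus at 6.8 Gb/s (1700MHz). Function names are illustrative only.

```python
GDDR5_TRANSFERS_PER_CLOCK = 4  # assumption: effective data rate = 4 x memory clock


def gddr5_bandwidth_gb_s(mem_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s for a given GDDR5 memory clock and bus width."""
    data_rate_gbps = mem_clock_mhz * GDDR5_TRANSFERS_PER_CLOCK / 1000
    return bus_width_bits * data_rate_gbps / 8


def gddr5_clock_for_bandwidth_mhz(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Inverse: the memory clock in MHz that a given peak bandwidth implies."""
    data_rate_gbps = bandwidth_gb_s * 8 / bus_width_bits
    return data_rate_gbps * 1000 / GDDR5_TRANSFERS_PER_CLOCK


rx480_max_oc = gddr5_bandwidth_gb_s(2250, 256)          # the "288GB/s max" case
x1x = gddr5_bandwidth_gb_s(1700, 384)                   # assumed X1X configuration
stuck_clock = gddr5_clock_for_bandwidth_mhz(273, 256)   # clock behind "273GB/s"

print(f"RX 480/580 @ 2250MHz: {rx480_max_oc:.0f} GB/s")
print(f"X1X @ 1700MHz, 384-bit: {x1x:.1f} GB/s")
print(f"273 GB/s on 256-bit implies about {stuck_clock:.0f} MHz")
```

Under these assumptions, 2250MHz on a 256-bit bus works out to 288 GB/s, and 273 GB/s corresponds to a memory clock of roughly 2133MHz.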

Bu buu Buut that's because of the trash CPU skewing things

It's not theory, I tested it before I posted.

FH4 and TW3.

Clamshell mode doesn't cut bandwidth in half, you lose some but nowhere near 50%.

576 GB/s because clamshell mode

I've read your comment. 16GB is a potential bottleneck and unacceptable for a $500 system.

Those seem reasonable for $500, I think.

As usual

You're very late CyberPanda

This doesn't make sense. Reads like a forum poster theory.

New Xbox’s GPU is based on the combination of New Vega (7nm) and the Arcturus 12 GPU

I would only trust him on working out tips. *look at his twitter avatar*

This doesn't make sense. Reads like a forum poster theory.

Does this Josh guy have a reputable track record?

As usual

They're GPU bound in both scenarios. If the X1X had a better CPU then it would either consume more than 175W or end up having a lower power budget for the GPU.

Bu buu Buut that's because of the trash CPU skewing things

If the X had a decent CPU it would match or surpass the RX 580.

I get what you are trying to say, but it can be a bit misleading since it can be understood as the X GPU being less capable than what its specs say.

They're GPU bound in both scenarios. If the X1X had a better CPU then it would either consume more than 175W or end up having a lower power budget for the GPU.

It's not about which is more powerful, it's about finding the sweet spot in relation to perf/watt. RX 580 is in a bad spot for Polaris. It has poor perf/watt in relation to the bog standard RX 480, and hardly a worthy jump over 390. That's why I brought up the 390. In a way, MS rectified the compute to memory bandwidth disparity Polaris 10 has with more Hawaii gen ratio. That's why it's more like a 155W power capped RX 480 with higher mem bandwidth rather than a RX 580. Otherwise you could end up thinking they stuffed a 205W GPU into the X1X. Estimates for next-gen TDP would be assumed to be higher. In theory, at least.

Next-generation console: “Immersive” experience created by dramatically increased graphics rendering speeds, achieved through the employment of further improved computational power and a customized ultra-fast, broadband SSD.

PS5 MONSTER confirmed?

Isn’t Arcturus supposed to be a generation after Navi? How would it be a mix of old and new? It makes no sense.

Arcturus is a GPU codename, likely from Navi.

Isn’t Arcturus supposed to be a generation after Navi?

Cell BE is back baby!

Broadband SSD?

WTH is that?

Broadband is wide-bandwidth data transmission.

Broadband SSD?

WTH is that?