MasterCornholio


Covid is still having such a massive impact on distribution channels (I’m seeing this at work a lot).

I'm seeing it a lot as well.


Toilet Paper is starting to disappear again. And I can't get any ammo!!!!


Nah, don't worry, they just want your body!

It's a bribe. They want information from you.

One day they will contact you and say "Yo BGs, remember that gold you got? It came from me, now you owe me some tasty inside information."

No, everything seems to point to next week or even later.

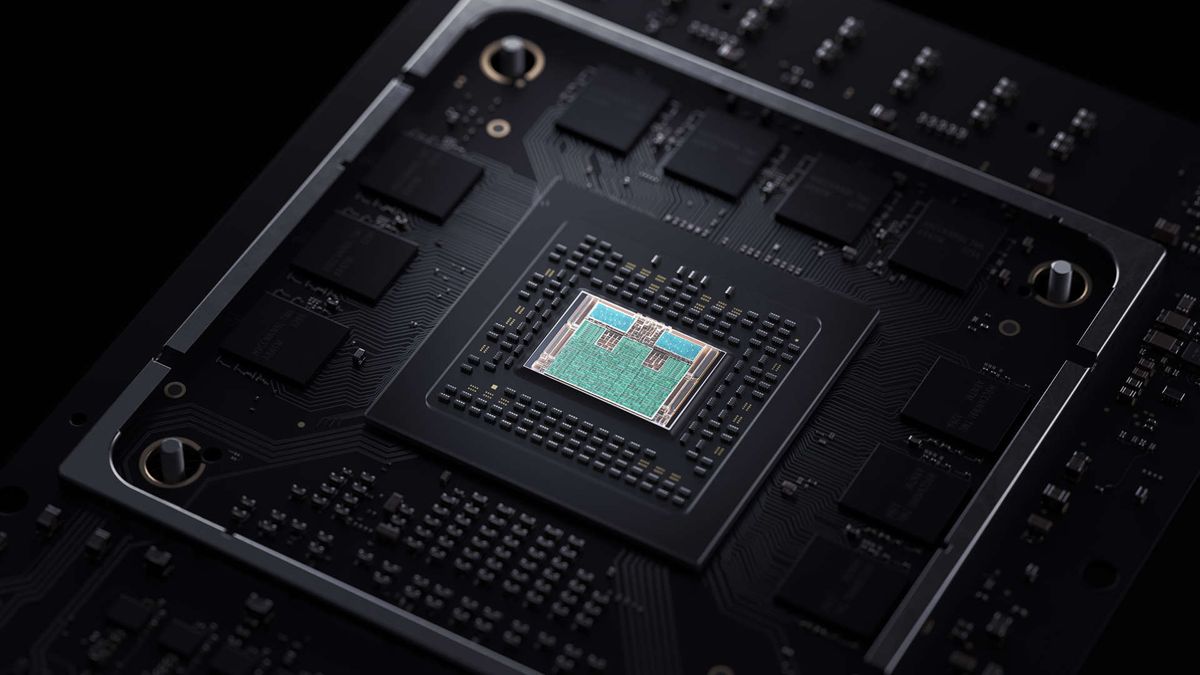

I'm just pure bullshit speculating, but Cerny has said they played around with chips that had more compute units, but settled on 36. It's obvious they save money on a smaller chip, and 36 is also the minimum count for backwards compatibility. What if they were also testing their custom 36 CU chip against a larger standard RDNA2 chip? Through all the changes to the CUs, geometry engine, etc., what if they found it wasn't worth spending on a larger chip? It's possible ray-tracing performance isn't 1:1 with RDNA2. It must have been clear to them that Xbox would have the largest chip possible, so they needed to find ways to make up for the CU difference as much as they could. Again, just spitballing here, but that could explain why the geometry engine is as heavily modified as it is. Also take into account the needs of next-gen PSVR2. Foveated rendering, anyone?

Well, patents say yes. Going by simple TF numbers, do you think the XB1X is more powerful than Lockhart? No, it's not.

What if I told you that the PS5 geometry engine or shaders, where the 15% is to be made up, compress the data between the first stage (vertices) and the second stage (pixels), and only on PS5?

What if that gain is creeping up on your 15%? What will you crow about then? Want to know what it overcomes, from the patent released last week...

Remember all the gossip about the PS5's unique new geometry engine... sleep well.

Prototype was black.

Black one is fake.

I think console delays get more and more likely every day that passes without solid information. Covid is still having such a massive impact on distribution channels (I'm seeing this at work a lot). It's very possible that, because of that, the consoles get delayed until spring. No point putting your product on sale if there's no way to get it into the stores.

Now I understand that TF... TP fixation. 12 TP can keep you afloat for a month.



How is that mind-bending logic?

Why are you saying "fake" and "lame"?

PS5 might actually run at 1800p upscaled and 30 fps with lower-res RT.

Doesn't make it a bad console.
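For a sense of scale on the 1800p point, here is a quick pixel-count comparison. It assumes "1800p" means 3200x1800 and native 4K means 3840x2160 (both 16:9); the post doesn't give exact resolutions.

```python
# Hypothetical illustration: what share of a native 4K frame an 1800p render covers.
# Assumes 1800p = 3200x1800 and native 4K = 3840x2160 (both 16:9).

def pixel_count(width: int, height: int) -> int:
    return width * height

native_4k = pixel_count(3840, 2160)  # 8,294,400 pixels
p1800 = pixel_count(3200, 1800)      # 5,760,000 pixels

ratio = p1800 / native_4k
print(f"1800p renders {ratio:.1%} of the pixels of native 4K")  # 69.4%
```

So an 1800p target shades roughly 30% fewer pixels per frame than native 4K before the upscale.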

Source?

Prototype was black.

Take that back, you Caribou sniffer.

I am looking forward to this Xmas; I have a date with Spider-Man.


If it's true that the XSX can only do RT or use its TMUs, not both at the same time, but also true that Sony decoupled RT operations from the TMUs, then PS5 will have the upper hand...

The hardware will launch when it's supposed to. They've committed to that. How much hardware is out there is a different question. Companies will not miss out on putting items out for sale during the holiday season. Again, just my opinion, not a fact, but... it should be.

Games... fogettaboudit... they launch when they do.

No, bringing in the DualSense just makes you look bad. Nobody here is suggesting that.

The only thing that I've heard here is that Sony might have asked AMD to customize the ray tracing hardware a bit. That's about it. Before dedicated ray tracing hardware was confirmed for the GPU, people were speculating about an external ray tracing solution.

Hence it's all a lot more logical than saying there's a dead demonic baby in the power supply doing the ray tracing for the console with the blood of innocent nuns. Or saying the controller is doing it, which no one is.

Now wasn't that fun?


What the heck is a TMU?

A Texture Mapping Unit. As the name implies, it's responsible for doing texture mapping.
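To make "texture mapping" a bit more concrete: one of the jobs a TMU does in fixed-function hardware is fetching texels and filtering them. Below is a minimal software sketch of bilinear filtering; the texture layout (a list of rows of floats) and clamp-to-edge addressing are assumptions for illustration, not how any particular GPU stores textures.

```python
# Minimal sketch of bilinear texture filtering, one of the operations a TMU
# performs in hardware. Texture layout and clamp-to-edge addressing are assumed.
import math

def sample_bilinear(texture, u, v):
    """Sample a 2D texture (list of rows) at normalized coords u, v in [0, 1]."""
    height = len(texture)
    width = len(texture[0])
    # Map normalized coords to texel space (texel centers sit at +0.5).
    x = u * width - 0.5
    y = v * height - 0.5
    x0 = math.floor(x)
    y0 = math.floor(y)
    fx = x - x0  # horizontal blend weight
    fy = y - y0  # vertical blend weight

    def texel(ix, iy):
        # Clamp-to-edge addressing: out-of-range coords reuse the border texel.
        ix = min(max(ix, 0), width - 1)
        iy = min(max(iy, 0), height - 1)
        return texture[iy][ix]

    # Blend the four nearest texels: two horizontal lerps, then one vertical lerp.
    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy

checker = [[0.0, 1.0],
           [1.0, 0.0]]
print(sample_bilinear(checker, 0.5, 0.5))  # 0.5, the centre of a 2x2 checkerboard
```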

The thing is, it’s not just down to Sony and Microsoft to decide when their consoles are released. They are the product manufacturers, but they require retailers to shift units. That’s why we haven’t heard about pricing and launches already. Lots of fun and games going on behind the scenes. Everybody wants to put the consoles out for holiday season, but circumstances could still be against it happening.

A couple of % is 44.6 MHz, which reduces power by 10%. That's not even below 10 TFLOPS.
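The 44.6 MHz and "not below 10 TFLOPS" figures can be checked with the usual peak FP32 formula: CUs x 64 shader ALUs per CU x 2 FLOPs per clock (fused multiply-add) x clock. The 36 CUs and 2.23 GHz maximum are Sony's stated PS5 numbers; treating "a couple of %" as exactly 2% is an assumption.

```python
# Peak FP32 throughput for an RDNA-style GPU:
# CUs x 64 ALUs per CU x 2 FLOPs per clock (FMA) x clock.

def peak_tflops(compute_units: int, clock_mhz: float) -> float:
    flops_per_clock = compute_units * 64 * 2
    return flops_per_clock * clock_mhz * 1e6 / 1e12

PS5_CUS = 36
PS5_MAX_CLOCK_MHZ = 2230.0

drop_mhz = PS5_MAX_CLOCK_MHZ * 0.02  # "a couple of %" taken as 2% (assumption)
reduced_clock = PS5_MAX_CLOCK_MHZ - drop_mhz

print(f"2% of {PS5_MAX_CLOCK_MHZ:.0f} MHz = {drop_mhz:.1f} MHz")
print(f"Max clock:     {peak_tflops(PS5_CUS, PS5_MAX_CLOCK_MHZ):.2f} TF")
print(f"After the dip: {peak_tflops(PS5_CUS, reduced_clock):.2f} TF")
```

At 2185.4 MHz this still works out to a little over 10 TF, which is the point being made.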


The release date is mostly down to the console mfr and distribution chains going through their process: deciding on a release date, making qty promises to retailers, lining up any exclusivity bonuses for going with certain retailers, etc.

At this point, the manufacturing process is in gear and being ramped up. My guess on why we haven't heard a price point or release date is: A) they go hand in hand; B) they haven't finished lining up the factors mentioned above.

It will still launch Holiday 2020, IMO, as they have both already committed to. That's another reason. Unlike games, when the console mfr puts out "Holiday 2020" it's far, far less likely to be delayed than games from a software dev.

Are there any tech insights into how MS extracted 400% more RT performance out of an APU compared to a full-fledged PC card (2080 Ti)?

I believe Cerny is referring to running BVH independently of RT, i.e. decoupling. If so, then advantage Sony.

I think MS just overstated the RT capability they had but now it’s laid out it’s the same as PS5.

I call that a pure bullshit example. A few moments before, he was saying that without variable clocking and redirecting power from the CPU to the GPU, even 2 GHz was an unreachable target with fixed frequencies.

Somehow, now everybody believes that the PS5 will have a constant 2.23 GHz GPU while the CPU runs at full speed too.

BTW, he also says that running the CPU at a constant 3 GHz was a headache without variable frequency,

and by now I think pretty much all of you must understand that this was bullshit too, right?

Or, to put it more "elegantly", since that's what people seem to prefer: if Microsoft can run at a constant 3.6 GHz while Sony "was having headaches" at a constant 3.0 GHz,

then in the best-case scenario this leaves way too much to be desired about their unknown "elegant cooling system", doesn't it?

Finally, as I have already written numerous times, if variable clock speed meant a "couple of % reduction, like 44.6 MHz" like you say (or even 63 MHz; I'll give you a full 1% more of a reduction),

then Sony would have simply locked the GPU at 2.1-2.2 GHz and called it a day.

My 2c.

Ask the guy who quoted me saying that the constant boosting solution means 1% or 2% downclocking in worst-case scenarios.

Why is this still a discussion point?

MS used "peak" RT figures. This assumes every intersection engine (and there are 2 associated with each pair of CUs) is dedicated to ray tracing - BVH traversal or ray/triangle collisions.

In practice the above won’t happen because the Intersection engines are dual purpose - their other job is texture mapping.

So assuming your game has textures, some (or perhaps it’s most accurate to say most) of those Ray Tracing operations will have to wait.

With the AMD solution devs will have to give up texture mapping operations to do some RT. Also, the RT functions are memory intensive so there could be other side effects of doing a lot of RT.
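A toy model of the peak-vs-practical argument above. It assumes one intersection engine per CU (matching "2 per pair of CUs"), 4 ray-box tests per CU per clock at peak, and Microsoft's published Series X GPU configuration of 52 CUs at 1.825 GHz. The texture shares in the loop are made-up examples, not measurements; the only point is that any cycle the shared unit spends texturing is a cycle not spent on RT.

```python
# Toy model: peak ray-box rate vs. what is left when the same unit also textures.
# Assumptions: 4 ray-box ops per CU per clock; texture shares are hypothetical.

def peak_ray_ops_per_sec(compute_units: int, clock_ghz: float,
                         ops_per_clock: int = 4) -> float:
    return compute_units * ops_per_clock * clock_ghz * 1e9

def effective_ray_ops_per_sec(peak: float, texture_share: float) -> float:
    """Ray ops left over when `texture_share` of the shared unit's cycles go to texturing."""
    if not 0.0 <= texture_share <= 1.0:
        raise ValueError("texture_share must be between 0 and 1")
    return peak * (1.0 - texture_share)

xsx_peak = peak_ray_ops_per_sec(compute_units=52, clock_ghz=1.825)
print(f"Peak: {xsx_peak / 1e9:.1f} G ray-box ops/s")

for share in (0.25, 0.5, 0.75):  # hypothetical texture workloads
    left = effective_ray_ops_per_sec(xsx_peak, share)
    print(f"{share:.0%} of cycles texturing -> {left / 1e9:.1f} G ops/s for RT")
```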

Nvidia offers standalone RT cores - they don't do anything but RT functions. So those 10 G/sec ray ops are computationally "free" on the 2080 Ti - there's no need to compromise TMU ops.

Also, the Nvidia RT cores have 2 components: BVH traversal and ray/tri hit detection. As far as I can tell right now, the AMD solution just has one unit (which is also the TMU, btw) doing both BVH and ray/tri hits, so the 4 ops per clock may not be like-for-like with the Nvidia RT core operations...

This is why MS (and Sony before them) are saying RT will be used sparingly. Otherwise you'll get a set of horrible low-texture stuff with nice lighting... sounds like Craig.
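For the "ray/tri hit detect" step mentioned above, here is the textbook Moller-Trumbore ray/triangle test in software. It is only a sketch of the kind of math such a unit evaluates; it is not a description of how AMD's or Nvidia's hardware actually implements it.

```python
# Moller-Trumbore ray/triangle intersection: a standard software formulation of
# the ray/triangle hit test. Not a description of any vendor's hardware.

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Return distance t along the ray to the hit point, or None on a miss."""
    edge1 = sub(v1, v0)
    edge2 = sub(v2, v0)
    pvec = cross(direction, edge2)
    det = dot(edge1, pvec)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    tvec = sub(origin, v0)
    u = dot(tvec, pvec) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    qvec = cross(tvec, edge1)
    v = dot(direction, qvec) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, qvec) * inv_det
    return t if t > eps else None  # ignore hits behind the origin

tri = ((0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 1.0, 5.0))
print(ray_triangle((0.2, 0.2, 0.0), (0.0, 0.0, 1.0), *tri))  # 5.0
```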

I don’t think so. Cerny specifically says they’re using AMD’s upcoming (RDNA 2) RT technique with extra processing inside the CUs

Basically the PS5 will do RT the same way as the Xsex - Cerny referred to an “intersection engine” but it’s also the TMU.

That intersection engine will do both BVH traversal and Ray/tri hits.

If AMD launches an 80 CU card at a competitive price, though, there will be CUs to spare.

Seems to me like RTX cards will be the way to go for my future PC then.

"We've added hardware embedded in the compute units," says Grossman, "to perform intersections of rays with acceleration structures that represent the scene geometry hierarchy. That's a sizeable fraction of the specialised ray tracing workload, the rest can be performed with good quality and good real-time performance with the baseline shader and memory design.

"The overall ray tracing speed up varies a lot, but for this task it can be up to 10x the performance of a pure shader-based implementation."

"We do support DirectX Raytracing acceleration, for the ultimate in realism™, but in this generation developers still want to use traditional rendering techniques, developed over decades, without a performance penalty," says Grossman sadly. "They can apply ray tracing selectively, where materials and environments demand, so we wanted a good balance of die resources dedicated to the two techniques."
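The "intersections of rays with acceleration structures" in the quote above are, at their core, ray-vs-box tests: each node of a BVH is an axis-aligned bounding box, and traversal repeats the test to decide which child nodes to visit. Below is the textbook "slab" formulation, given as an assumed illustration rather than AMD's hardware design.

```python
# Ray vs. axis-aligned bounding box ("slab" test). In a BVH each node is such a
# box; traversal repeats this test to pick which children to visit.

def ray_aabb(origin, direction, box_min, box_max):
    """Return (t_enter, t_exit) if the ray hits the box, else None."""
    t_enter = 0.0
    t_exit = float("inf")
    for axis in range(3):
        o = origin[axis]
        d = direction[axis]
        lo, hi = box_min[axis], box_max[axis]
        if d == 0.0:
            # Ray parallel to this pair of planes: it must already lie between them.
            if o < lo or o > hi:
                return None
            continue
        t1 = (lo - o) / d
        t2 = (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_enter = max(t_enter, t1)  # latest entry across all slabs
        t_exit = min(t_exit, t2)    # earliest exit across all slabs
        if t_enter > t_exit:
            return None
    return (t_enter, t_exit)

print(ray_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0),
               (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)))  # (4.0, 6.0)
```

A hit on a node's box only says "look inside"; the triangle tests happen once traversal reaches a leaf.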

Hey, I hope you're right - I want my Ps5 dammit! But I'm also aware of a lot of issues going on behind the scenes (not with video games consoles, I hasten to add, I'm not in that specific business) and I'm starting to get a sinking feeling. Really hoping there's some solid news from both manufacturers soon.

Thanks for the explanation. I thought AMD was doing something similar to Nvidia, doing RT with dedicated cores. Now I know that RT will use the CUs instead.

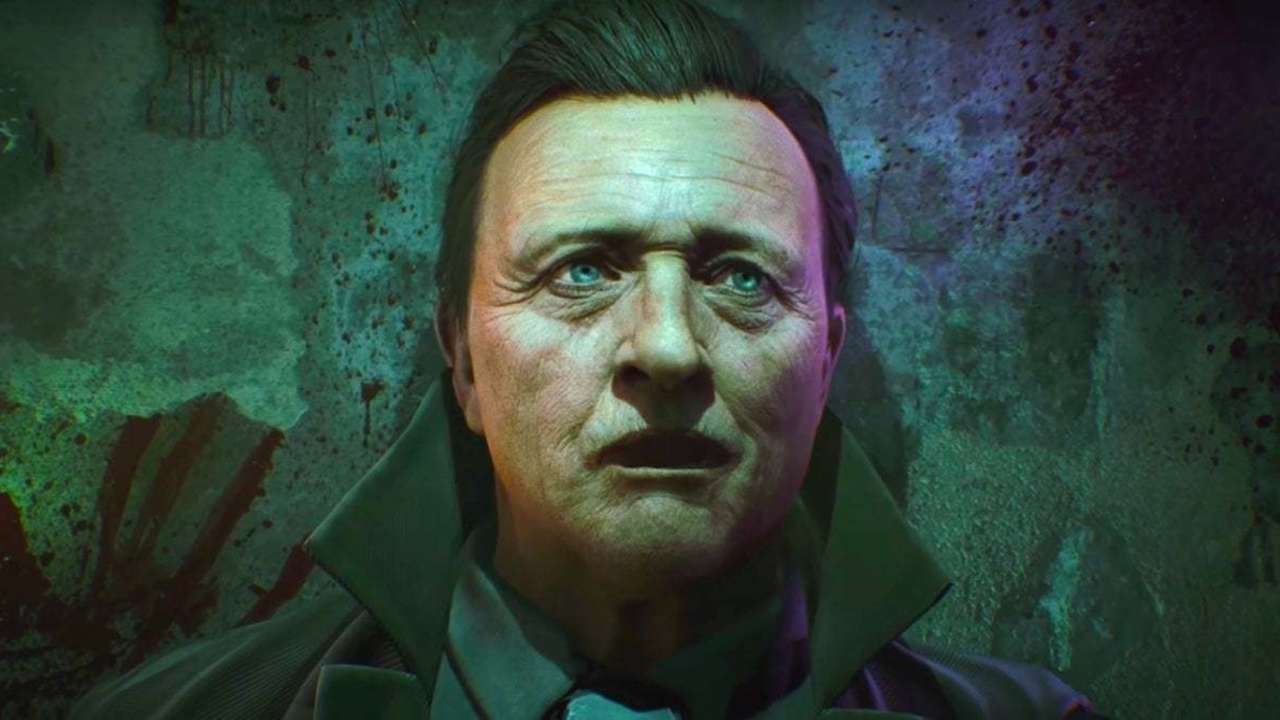

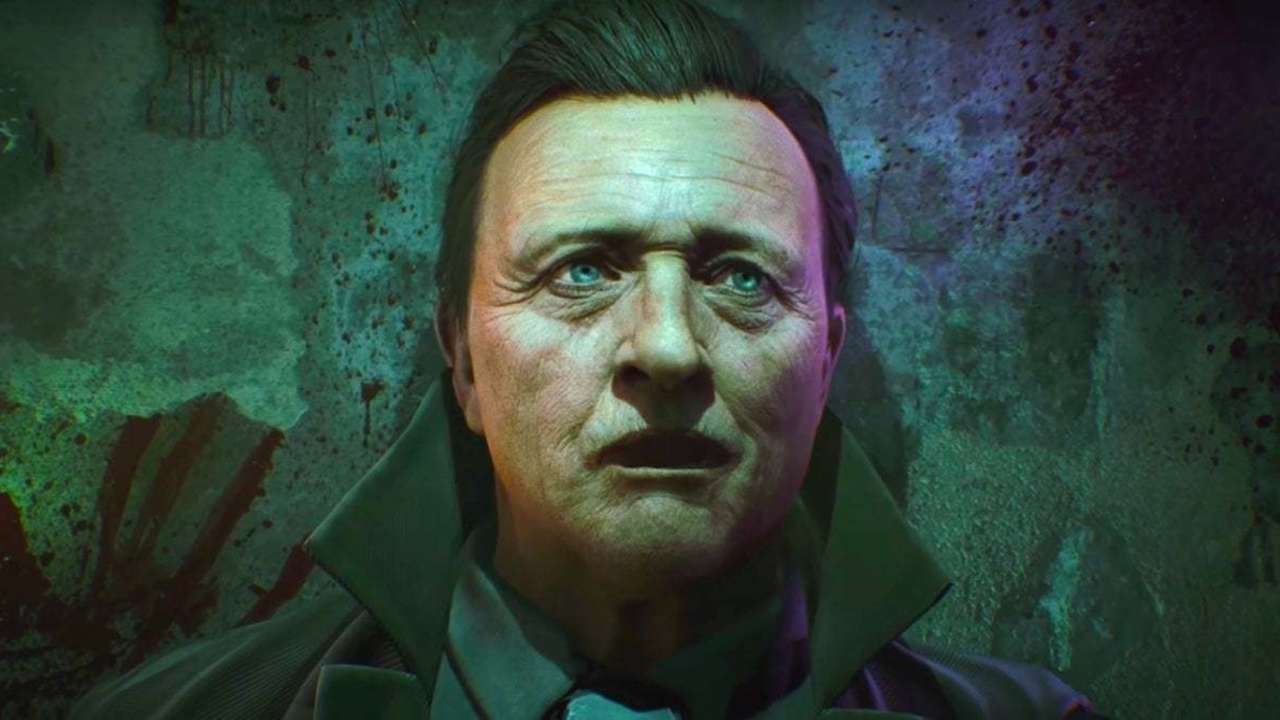

GameSpot exclusively reveal a brand-new graphics comparison showing what the power of PlayStation 5 and Xbox Series X will do to Observer. Bloober Team's lighting artist Tomasz Bentkowski helped break down the new visual enhancements, which include things like global illumination, high-dynamic-range and ray-tracing, volumetric lighting, and others. These enhancements create an image that is more realistically lit.

Observer: System Redux will feature a plethora of visual improvements. In addition to the various lighting enhancements, the graphics comparison reveals character model redesigns with more detailed textures, new flickering lights effects, and more.

Tell it, Bo! Thanks for adding me on PSN, my friend. You're the coolest dude on GAF.

Let's not post about PC gaming; this is a next-gen consoles thread.

Texture mapping unit, so pixel shaders or ray tracing.


That's a texture mapping unit, a part of the GPU.

Okay, seriously, we are about 73 days away from November and still nothing! When are they planning to open pre-orders? A month before release?

New Video Shows How PS5 And Xbox Series X Improve Observer: System Redux's Graphics


Observer: System Redux's graphics comparison between current and next-gen also shows redesigned character models.

www.gamespot.com

IKR, throw us a bone.