If you are going to use high resolution to make a point

There's no point benchmarking GPUs at lower resolutions (because of the CPU bottleneck). Just admit you were wrong and I will forgive you.

TNT2 vs Geforce 1 DDR - 18 fps vs 35 fps (and 44 fps OC), that's 94% improvement (and 144% OC)

GF1 DDR vs GF 2 Ultra 1600x1200 - 25 fps vs 55 fps, that's 120% improvement

GF2 Ultra vs GF3 ti 500 - 55 fps vs 100 fps, that's 82% improvement

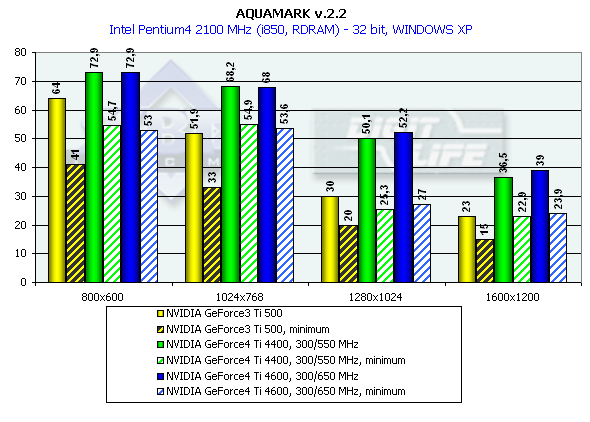

GF 3 Ti 500 vs GF 4 ti 4600 - 23 vs 39 fps, that's 70% improvement

GF 4 ti 4600 vs GF 5 (FX) 5900 XT 1280x1024 - 10 vs 38 fps, that's 280% improvement (and people say the FX series was the worst).

GF4 ti 4600 vs FX5900 Ultra - 20 vs 76 fps, that's also 280% improvement

GF 5900 vs 6800 Ultra - 25 vs 69 fps, that's 176% improvement

GF 6800 Ultra vs GF 7900 GTX - 80 vs 156 fps, that's 95% improvement

7900 GTX vs 8800 GTX - 19 vs 48 fps, that's 153% improvement

8800 Ultra vs 280 GTX - 32 vs 50 fps, that's 56% improvement

GF 285 GTX vs GTX 480 (FERMI 1.0) vs 580 (FERMI 2.0) - 60 vs 100 vs 119 fps, that's 67% (GTX480) and 98% (GTX580) improvement
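For anyone who wants to check the math, here is a quick sketch in Python of how these percentages are worked out. The helper name `improvement_pct` is made up for illustration, and the fps pairs are a few of the ones quoted above.

```python
def improvement_pct(old_fps: float, new_fps: float) -> float:
    """Percentage gain of new_fps over old_fps (hypothetical helper)."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps / old_fps - 1.0) * 100.0


# A few fps pairs taken from the comparisons in this post
pairs = {
    "TNT2 -> GeForce 1 DDR": (18, 35),
    "GF4 Ti 4600 -> FX 5900 XT": (10, 38),
    "8800 Ultra -> GTX 280": (32, 50),
}

for name, (old, new) in pairs.items():
    print(f"{name}: {improvement_pct(old, new):.0f}% improvement")
```

Note that going from 10 to 38 fps is 3.8x the performance, which is a 280% improvement, not 380%.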

####################################################################################################################################################################################################################

And since 2012 Nv started selling their mid-range GPUs as high end GPUs. GTX 680 wasn't the best Kepler, it was only a mid-range Kepler, however it was sold at the same price as previous high end GPUs from Nv.

GTX 580 vs GTX 680 vs 780ti (real high end Kepler) - 32 vs 43 (47 with newer drivers in the 2nd chart) vs 72 fps. That's 34% improvement (GTX680), and 125% (780ti).

So people started paying much more for much less performance. And where are we today?

1080ti vs 2080ti - 59 vs 79 fps, so that's 34% more performance for just $1200

If people keep defending Nvidia, their GPUs will get more and more expensive.