DonMigs85

Member

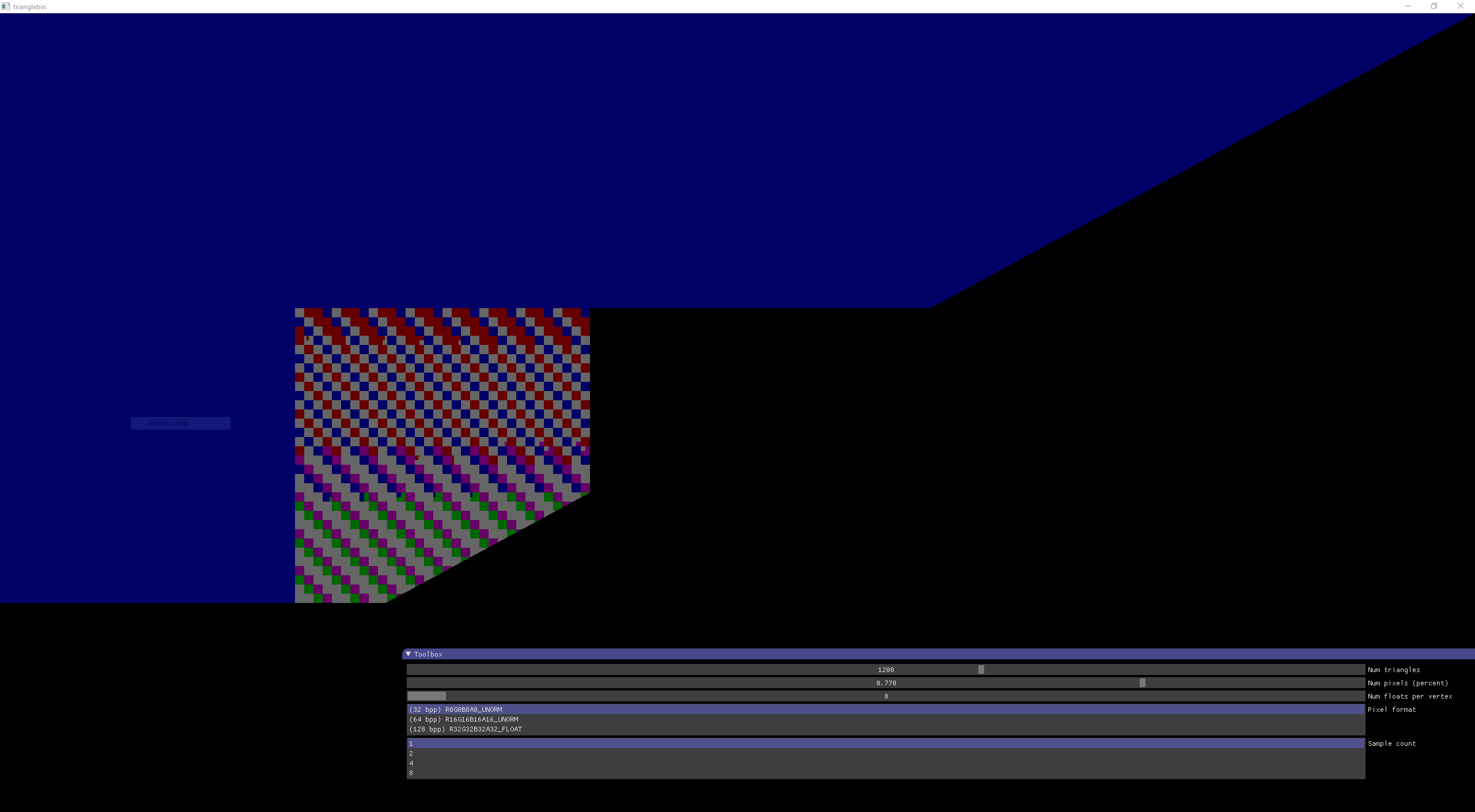

http://www.anandtech.com/show/10536/nvidia-maxwell-tile-rasterization-analysis

Very interesting, especially how they got it to be compatible with PC applications, which traditionally expect immediate-mode rendering. I wonder if they also licensed any patents from Imagination.