Thirty7ven

Banned

Doesn’t seem very accomplished this game. It doesn’t even look that good.

Nice hair, though lol. There's a wall on the PS4 version that has PS2 textures.

Don't know why this is necessary.

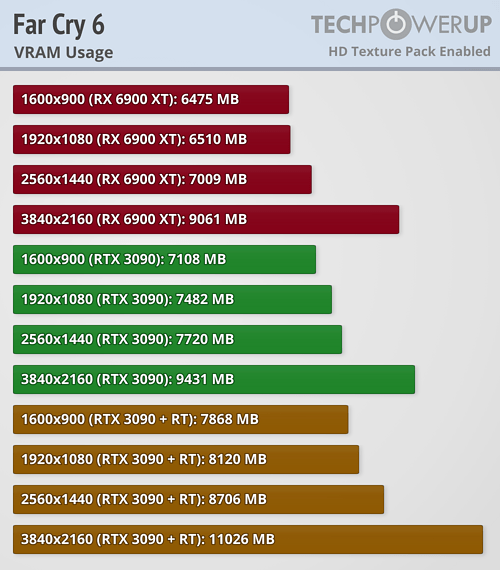

We have already seen a few games using a lot of VRAM, and the trend is for usage to keep increasing, like it always has.

You can whine all you want and call others misinformed, but the reality is that games are increasing VRAM usage.

Is that under the assumption that you'll be running these games at max settings, including RT?

RT increases VRAM usage even further, as we are rendering more of the world with reflections, and have new buffers for global illumination, the BVH structure, and more shaders.

In the case of the RE4 demo, without RT, my GPU was using 12GB in the village section. With RT it went to 14GB.

And the RT in this game isn't that demanding.

Using a controller? There is a deadzone on the sticks. M+KB doesn't have this issue.

The thing is though that this seems like poor optimization instead. There's no reason the current consoles should be struggling with these games at this point. If you're gonna be buying a new pro console every 2-3 years, may as well just buy a PC. Consoles will always be 30 fps machines, maybe 40 or 60 if really well optimized like Horizon Forbidden West. So long as companies target that, things like IQ should hold up just fine.

But my brother in Christ, does it even look good?! This sort of screenshot looks like something with the graphical complexity of the 360 era, just rendered out to screenshot level. Does it even use tessellation (look at those rocks)? Am I going crazy or something? Where the fuck is the GPU power going in this game?

You just said it yourself. Consoles are 30 fps machines. This game is targeting 60 fps even in its fidelity resolution mode with RT. Just look at Dead Space. Its 60 fps mode runs at 960p before being checkerboarded to 1440p.

Comparing it to HFW is not fair because HFW doesn't use RT, which is really taxing on VRAM and VRAM bandwidth. And even then, HFW's 40 fps mode drops resolution by half to 4kcb compared to native 4k in its 30 fps mode. Optimization isn't everything. You need better hardware. We don't know why the PS5 and XSX are struggling with 60 fps modes, but it's so prevalent that we cannot just blame the devs all the time. Having a mid-gen refresh will let devs target native 4k or 1800p 60 fps for games like this, solving the performance issues for those of us who care about image quality.

Also, the game is definitely a noticeable upgrade over RE2 and even Village when it comes to fidelity.

Way better lighting, textures, and post processing effects.

?? It looks great. Last gen as fuck yes, but great nonetheless.

See above to see just how big of an upgrade it is over RE2 and Village. Village looks flat in comparison.

Didn’t RE8 have the same control issue in the Xbox demo, and then they fixed it?

Yes, using the Xbox controller. I'll try KB+M, but this is the sort of game that I like to play on a big screen, so it's unlikely that I'll play the game at my desk (my PC is set up next to my gaming TV).

the problem isnt about using more vram everybody expected games to increase memory usage as nextgen consoles came out and add that to the ssd's but i dont see where the extra vram is needed in this game like really what in this game needs 12gb when ue4 last 2 the order days gone killzone shadow fall ran on a ps4 with a max ram roof of 5.5 gb for games... this is why people are mad... i would understand a game like demon souls remake or ratchet and clank would need 12gb on average but weve seen shitty looking games like forspoken that has repeated textures and now this game with vilage corridors suffocating memory usage.. it just doesnt add up

Was that RT but with the rest of the settings turned up to max?

I just think that if you value performance to the point of upgrading your console to what might potentially be a 600-800 dollar box, why not just build a PC that will promise long-term performance gains instead of doing that every few years? Like yes, the PS5 Pro will be more powerful, but will it still be able to match a rig with a 4080 or 4090 in it, using the latest Intel processors?

Well, yeah, that's exactly what I did, and it's why I'm playing the demo at native 4k 60 fps on my 3080.

RT. Max settings. FSR2 Quality. 1440p. Motion blur, film grain and dof disabled.

Fuck. I didn't crank everything to max 'cause my 3080 is a 10GB, but considering the game looks last gen, that's wild.

My 10 GB 3080 gives me 60 fps with some rare drops into the mid 50s at native 4k. No FSR or interlaced upscaling.

I have almost every setting maxed out. No RT, no hair, textures on 4GB high. Volumetric lighting at mid. Both because I honestly couldn't tell a difference in the settings preview.

At 1440p native, I average 110-120 fps with some rare drops to 95 fps.

That's similar to my experience; I also have a 3080 10GB. Same settings as yours, and at 1440p it hovers between 110-120. May drop to 90-100 at the Village. VRAM usage hovers between 5.5 and 6.5GB, until I get to the Village, where it cranks up to 7.2GB.

Previously I ran the Demo with RT on High and had textures at 2GB to compensate, and the game ran just as smooth. But if I cranked everything to max, including RT on, yeah I'd be in the Red for sure.

I wonder if Winjer uses a 4k.

Xbox: Bad Controls

PS5: Bad IQ

PC: Stutter

Pick your poison.

There is no stutter on PC. RE engine != Unreal Engine.

The dark art style of this game is not very good at showing off higher resolution textures. DF showed there are differences, with the PC on max settings having higher texture resolution than consoles.

The other thing to consider is that the RE engine caches a lot of data to prevent stutters. That's unlike UE4, which relies on its streaming engine but then has a ton of stutters from asset streaming, not only from shader compilation.

Also, this is not the first game to struggle with GPUs with 8GB of vram. And it won't be the last.

RT. Max settings. FSR2 Quality. 1440p. Motion blur, film grain and dof disabled.

My tests with RT on at 1440p 120 fps were disastrous. Down to 74 FPS during gameplay. The final cutscene, which is a locked 120 fps with RT off at 90% GPU usage, was only 55 FPS with RT and hair on. Fuck that.

TBH, the 3080 has disappointed me. Gotham Knights had awful stutters in the RT mode that would literally freeze the game by running at 2-3 FPS every half an hour or so. Hogwarts with RT is straight up unplayable. RE3 was more or less ok, but there was almost no difference for a massive performance hit. And now this. I didn't even bother buying Callisto because its RT performance was so bad.

At this point, I'm thinking I probably should've bought a 6950xt, which is better at standard rasterization performance than the 3080 and has more VRAM. I literally haven't played an RT game since Cyberpunk on this GPU.

God bless long cables and/or wireless mouse+KB. Fuck desks.

In 2020, my 3080 was a dream to have. But these days, it feels so weird that games really haven't gotten that much more graphically impressive, but the 3080 isn't completely crushing them like it used to. But to your 2nd point, I'm wondering if that has more to do with poor optimization. Gotham Knights and Hogwarts Legacy don't look any better than Cyberpunk 2077 or RE8.

It's definitely partly optimization. I've been playing RT games since 2019. I know my resolution or FPS is going to be cut in half if I turn on RT features. Metro, Control, Cyberpunk... I was willing to make that sacrifice. With some of the latest games though, like Witcher 3, simply dropping resolution from 4k to 1440p isn't enough. The performance is still all over the place. Witcher 3 goes from 60 fps to mid 20s as soon as I enter the city. That's 1/4th the performance I get with RT off. Hogwarts is so fucking bad that I drop to 1080p and it still isn't consistent, with drops to 40 fps in Hogsmeade. Gotham Knights is straight up broken with those 2-3 fps periodic stutters. I'm like, fuck it.

exactly so its a developer and design problem not a memory problem... what high res textures.... you can clearly see repeated textures and assets in this game theres literally nothing impressive here low poly assets and repeated textures are everywhere... people need to know where their memory budget is being taxed.. you cant make some cheap ass game with cheap assets and require 12gb when better looking games ran on 5.5 gb memory.. Heres demon souls vs re4 can you please show me why re4 needs 12gb of ram

What stutter?

I noticed some traversal stutter, like when loading a new area. Insignificant, but it's there. I think DF pointed that out too.

If I'm not mistaken, Demon Souls was launched only for the PS5, a console with 16GB of unified RAM.

A couple of GB are used by the OS. But because it's a unified memory architecture, it doesn't have to duplicate data on 2 pools of memory, like on PC.

If that game was to be ported to PC it would probably also use a good chunk of vram.

I agree that RE4 remake does look worse. Capcom is probably caching the whole section the player is in.

The advantage is that the game has no stutters from asset streaming during most of the area. Only when the player is entering a new area does it stutter, as it's loading the next part.

yes demon souls is a ps5 game i could have chosen a ps4 game to do comparisons but anyhow this is completely a bad design and engine problem nothing to do with hardware...what is it caching because almost all games cache data this was explained by mark cerny on his talk on ps5 only a few games ustilize ssd's for streaming demon souls certainly does at this is totally justified in how it looks but resident evil 4 looks worse than ps4 games and requires 12gb.. even worse with tonnes of cheap and repeated assets.. and it works not only bad on pc but even on console nx gamer found streaming stutters.. its badly made games like this and forspoken that carelessly use resources that make people think their harware is the problem.. before you know it they are gonna release another shitty looking game that requires 32gb ram again and people will jump to claiming its raytracing or high res textures complete bs

Caching data in memory is orders of magnitude faster than using the SSD, both in terms of latency and in bandwidth.

Crashing for lots of people when RT is enabled?

Who is "lots of people"? 'Cause there's very little about that on Steam.

If it was actually an issue Steam would be blowing up about it.

High VRAM usage?

What constitutes high VRAM usage?

And when does using 10GB of VRAM at 4K become just normal VRAM usage?

How much VRAM would you suppose is "normal VRAM usage" at 4K?

Games were using ~8GB of VRAM at 4K like 3 - 4 years ago.....are you expecting them to stay at ~8GB of VRAM forever??

nobody asked whether ram or storage is faster i dont know why ur trying to explain this to me, the reason for direct storage or ps5 io is to stream data direct to memory where as on pc you could cache it on system memory and its why you need 32gb on average to run games that where designed for something like ps5 io... my question is why does re4 require 12gb when it looks worse than some ps4 games! and why does it run like shit on current gen consoles even when compared to demon souls that has not only even higher resolution assets, textures, dynamic gi, fluid particles and all this with no loading screens!

It’s ok to use all the VRAM. But those games don’t crash, and RE crashes the moment it runs out.

The demo 100% crashes in a few spots if I have max settings and RT enabled. Disabling RT or lowering a few settings somewhat helps.

3080 10gb

I don’t understand, didn’t DF say that PS5 reached a 1440p resolution in the demo?

NX gamer is covering the demo here isn’t he?

You were talking about using the SSD for caching, so I explained that memory is much faster. That's it.

Now, why RE4 looks worse than some last gen games is something you should ask Capcom.

You insist on talking about Demon Souls, but that game would probably use as much VRAM if it was ported to PC.

you didnt asnwer anything... ssd for caching was developed because games used alot of memory in caching data that wasnt needed on screen and instead faster ssd's and io technology make it possible for developers to use more of the ram they need for whats on screen and only streaming from storage what they required without loading screens this is why ssd's and io technology was massively improved on console this gen... pc's could always cache data on system ram because you can add whatever amount of ram on a pc where as consoles are closed systems... second im not insisting on demon souls i just asked you why does re4 look worse than ps4 games like the order and last of us 2 yet requires more than 12gb ram on pc... the reason im asking that is because its a badly designed and optimized game to require such amounts of memory to no visual correlation this is what pisses me off its devs carelessly using up memory without consideration if demon souls was made by capcom with re engine you would probably require 64gb ram then.

Yeah the game crashed for me too as soon as I pushed the VRAM limit. NX Gamer was able to recreate the crash every time.

The RE engine lets you overload the VRAM but it can cause these crashes. I remember some of these in RE3.

RE2 and RE3 do the same after the RT patches.

I'm having trouble understanding you, because of the lack of punctuation and capitalization.

You are right about PCs having more memory and using it to cache data.

But caching data on physical media has been done many times before, just more slowly.

Previous gen consoles would cache data on the HDD, as this was much faster than the BluRay or DVD drive.

The SSD applies the same concept, but much faster than any HDD.

Now on PC, if we can cache more things on vram, it will be faster than getting that same data from ram or the SSD.

This is one of the reasons why UE4 games stutter so much.

You keep insisting on comparing Demon Souls with RE4 remake. If you look at the texture resolution, they are probably similar.

The issue is the rest of the game, such as lighting, geometry, art style, etc.

jesus u keep repeating what ive said and twisting the argument without actually answering any questions i feel like im replying to a repeat machine, ue4 games stutter because of shader compilation not becuase of vram caching and pc's dont cache data on vram they cache it on system ram and i didnt just compare to demon souls as you are insisting ive told you there are ps4 games that look better than this game and have far more on screen than this game but run on 5.5gb memory the question was why does this mediocre looking game require 12gb memory! for what exactly?

Just reduce the texture resolution/pool size and the problem is solved, in most cases. Newer texture resolution/pool settings are geared towards 12-16 GB. If you don't give them something to do, they also become a waste. Even with a reduced texture pool size (from 5000 MB to 3000 MB; ultra to high [the old low]), Hogwarts Legacy will load the most important textures in high quality at all times, from short to mid-range distance. Maybe at long distance you may notice some texture oddities, but that is to be expected.

Why does everyone keep saying this? The 24.5 tflops 6950xt was their 300 watt card on 7nm. Their 5nm 52 tflops chip has the same 300 watt TDP. They can easily get 2x more graphics power for the PS5 Pro if they target 6950xt specs and don’t skimp on the VRAM upgrade.

It will cost $599 minimum but someone said they will ship it without the bluray drive and charge $99 for it so they will definitely make profit on day one.

The key is not to make the same mistake they made with the ps4 pro.

this is why i said this game isnt really taxing the hardware its just a badly optimized mess theres just no way a game like last of us 2 ran on a ps4 and this game drops to 30's on a series x and crashes on a pc with 32gb ram and all the iq problems on ps5, but people naively jump to say its a hardware limitation... without context
