GymWolf

Member

Dude obviously doesn't have a pc. Look at his/her post history. Just talks a bunch of shit.

still, the inside of my pc can cause vomit or migraine even in normal people without a pc

Lmfaaaaaaaaaaaaaaao not gonna lie, but my first pc and my first car audio build were a spider's nest. So don't feel bad. As long as it gets the job done, who cares what it looks like. Functionality over form factor all day long!

i mean, the cables don't touch anything that can cause trouble but they are pretty free and i clean the inside like 1 time every 6m-1y...

i'm just a hardcore noob lazy pc gamer

That's all that matters. As long as your games play the way you intend them to, there's nothing wrong with the aesthetics.

i never give a fuck about aesthetics in console or pc, give me a dick shaped console with bi-coloured pink and purple balls and some glitter on it and a 3080ti under his ass at 600 dollars and i'm okay with that, maybe call it ps5 reeee version or some shit like that

What I care about though is how loud the console/pc is. I can’t stand having a jet engine in my living room.

Sometimes my pro is so damn loud EVEN WITH HEADPHONES I hear the damn thing!

Then it's good that Microsoft already said that XSX will be as silent as a One X.

Dude obviously doesn't have a pc. Look at his/her post history. Just talks a bunch of shit.

Imagine actually being this full of yourself.

Imagine having such a terrible reaction score compared to your posted messages. Obviously no one cares what you have to say, and reaction score only reiterates that even more. Imagine being terrible at riding a bike, or terrible at playing FPS. You hold the gold medals in these things. Imagine having a mid tier pc, but talking shit to someone who has much better hardware than you? You probably don't like the same backlash you gave me, do you?

Sorry, my English is a bit shitty... are you praising the gpu inside seX or not? With "crush expectations" you mean in a positive way, right?!

Imagine having such a terrible reaction score compared to your posted messages.

That moment you're too stupid to realise Gaf didn't even have reactions for more than 75% of my time here.

And your score barely went up since then.... No difference. You can blame it on your shitty personality or Gaf, but the fact still remains. No one calls you out for sucking in games, in real life, etc. But when you call someone else out, they will definitely see all of your insecurities and deficiencies. And yet again, you still won't show your shit pc, will you? You wanna call my pc out, but don't have the balls to show yours? Is that one cable gonna hurt my performance or self esteem? Are my "bitch" cables gonna affect my fps? My psu probably costs more than your pc, but would I call you out for that? Hell no, but since you did, it's fair game.

What I care about though is how loud the console/pc is. I can’t stand having a jet engine in my living room.

Sometimes my pro is so damn loud EVEN WITH HEADPHONES I hear the damn thing!

i don't give a fuck about noise either.

Imagine actually being this full of yourself.

But that guy is hilarious. I thought that these exist only in folk tales. But here we are.

It is quite entertaining witnessing such extreme levels of projection.

Still... No pc, no nudes, no nothing....?!?! Son, I am disappoint.... To say the least. It's a good thing that everyone from AUS isn't like that. My favorite musician was born there.

It truly is new console generation times.

Your favourite musician is Kate Miller Heidke?

Nah, never heard of her before.

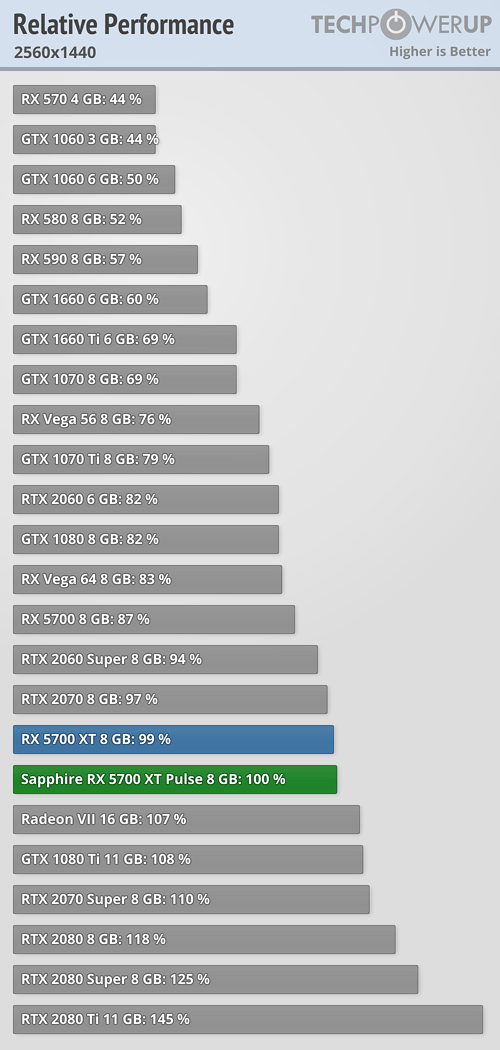

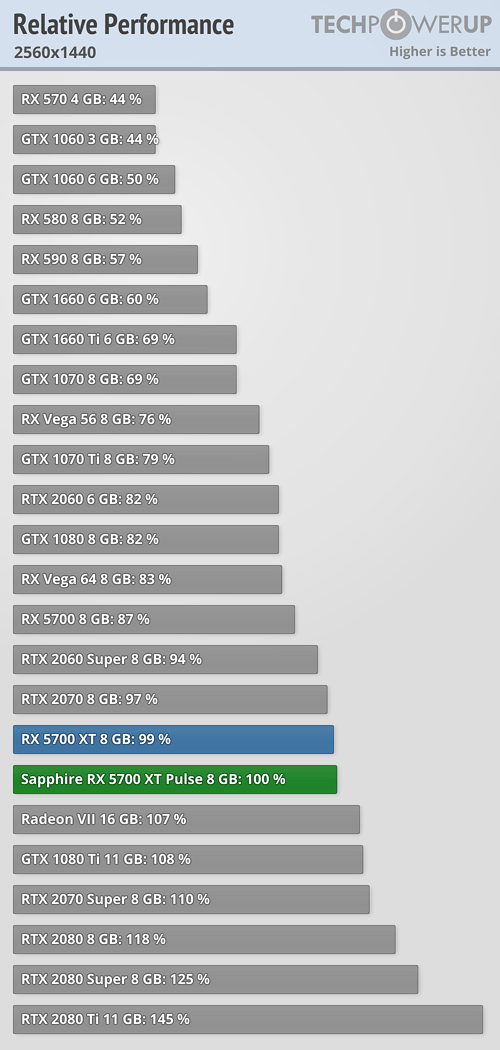

Key word - on paper. Because as much as RDNA is more efficient than GCN, it's still nowhere near as good as NV CUDA cores (that also evolve each generation).

AMD literally has to be one process node ahead in order to catch up with NV GPUs from one generation behind, their currently strongest GPU, 5700XT offers performance of a GTX1080, a 4yo 16nm card... AMD always had much bigger TF numbers (and synthetic benchmark scores), they already offered a 9TF+ GPU half a decade ago with Fury X, vs less than 6TF 980Ti, but we all know what the actual outcome was between the two. And then they had cards like Vega 64 and Radeon VII with 13-13.5TF, and again, with mixed actual real-life performance.

So again - on paper, yeah, they are catching up with a 2yo 12nm card, while NV is yet to show what they are capable of with 7nm, and the leaks already indicate a 6144SP card, so we're potentially looking at 21-22 (more efficient) TFlops.

With all that being said, I'm waaay more than confident about XBX performance looking at the specs, given the consoles optimized environment and just looking at what the devs already achieved on current-gen consoles with a mere ~1,5TF GPUs.

I just wish there was a console with Intel CPU and NV GPU on board, a man can dream...

Nice sentiment but it does not conform to reality. You are basing your information on who has the absolute fastest card and basically popular opinion. You use the 5700XT as a reference to compare it to a much older GTX 1080 to somehow justify that the 5700XT is years behind. All conjecture, and no basis. Not only that, the 5700XT is about 20% faster than a GTX 1080... And if we compare the TFLOPS, the 5700XT has 9.7 TFLOPS while the GTX 1080 has 8.9 TFLOPS, which is about 10% more TFLOPS, for 20% more performance. Woops.

And it's funny how you mention the Radeon VII, and completely forget to mention that the 5700XT is practically just as fast as the Radeon VII (only 1% slower on average), while literally having less than 73% of the TFLOPS of the Radeon VII.
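For anyone who wants to check the arithmetic in this post, here is a rough sketch rather than a benchmark. It divides the relative game performance by the relative TFLOPS to get "performance per TFLOP". The function name `perf_per_tflop` is made up for illustration, the performance ratios (20% faster, 1% slower) are the ones claimed above, and the 13.4 TFLOPS figure for the Radeon VII is an assumption picked from the 13-13.5TF range quoted earlier in the thread.

```python
def perf_per_tflop(rel_perf: float, tflops_a: float, tflops_b: float) -> float:
    """Performance per TFLOP of card A relative to card B.

    rel_perf is A's game performance as a multiple of B's (1.20 = 20% faster).
    A result above 1.0 means A gets more out of each TFLOP than B does.
    """
    return rel_perf / (tflops_a / tflops_b)


# 5700XT vs GTX 1080: 9.7 vs 8.9 TFLOPS, claimed about 20% faster in games.
tflops_gap = 9.7 / 8.9 - 1.0
navi_vs_pascal = perf_per_tflop(1.20, 9.7, 8.9)

# 5700XT vs Radeon VII: claimed 1% slower on average.
# 13.4 TFLOPS is an assumed value inside the 13-13.5TF range from the thread.
navi_vs_radeon7 = perf_per_tflop(0.99, 9.7, 13.4)

print(f"5700XT has {tflops_gap:.1%} more TFLOPS than the GTX 1080")
print(f"5700XT perf per TFLOP vs GTX 1080:   {navi_vs_pascal:.2f}x")
print(f"5700XT perf per TFLOP vs Radeon VII: {navi_vs_radeon7:.2f}x")
```

If the claimed performance numbers hold, the 5700XT gets roughly 10% more out of each TFLOP than the GTX 1080 and well over 30% more than the Radeon VII, which is the point being made here: TFLOPS alone don't tell you which architecture is ahead.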

AMD and nVidia TFLOPS were not comparable in the past, because nVidia's architecture was always more efficiently used than AMD's. That was a combination of both the hardware and developers more often than not optimizing for nVidia.

There is a way to show how close the TFLOPS really are, and nowadays they are pretty much equal. How do we know? A German website ran a Navi and a Turing GPU at the exact same clocks and memory speeds, with the same number of cores, which theoretically gives them the exact same TFLOPS.

Guess what. RDNA came out 1% faster than Turing.

GCN was extremely old, and with Navi, AMD pretty much closed the gap in terms of architecture. RDNA2 will undoubtedly also have large improvements over RDNA1.

So don't go around saying that AMD is years behind. Do not underestimate RDNA.

NVIDIA Next Generation GPUs With Up To 7552 Cores Benchmarked – 40% Faster Than TITAN RTX

40% faster than titan rtx at only 1.1GHz

theoretically can peak at 30 Teraflops at 2GHz

The larger the die size or amount of cores, the less likely it is to reach high clock speeds. Having almost 65% more cores while getting 'only' 40% more performance is something to be noted. If it's at 7nm, the die size itself might not be that much bigger, but the amount of cores is too high to have an equal distribution among them in terms of clock speed, so they need to keep the clocks down to allow for all cores to work properly. That is the main reason why AMD has mainly been focusing on small dies and lowering CUs for the same performance. They can't afford to throw out 'dormant' performance.
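The TFLOPS figures being thrown around here come from a simple formula: shader cores x 2 FLOPs per clock (one fused multiply-add) x clock speed. A minimal sketch of that arithmetic follows. The helper name `peak_fp32_tflops` is made up for illustration, and the Titan RTX core count of 4608 is an assumption taken from its public spec sheet, not from the thread. These are theoretical peaks only and say nothing about real game performance.

```python
def peak_fp32_tflops(shader_cores: int, clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each shader core retires one fused multiply-add (2 FLOPs) per clock.
    """
    return shader_cores * 2 * clock_ghz / 1000.0


leaked_cores = 7552      # core count from the quoted headline
titan_rtx_cores = 4608   # assumption: Titan RTX spec, not stated in the thread

at_1_1 = peak_fp32_tflops(leaked_cores, 1.1)   # clock from the quoted benchmark
at_2_0 = peak_fp32_tflops(leaked_cores, 2.0)   # the hypothetical 2GHz case
core_ratio = leaked_cores / titan_rtx_cores

# The 6144SP leak mentioned earlier in the thread, at an assumed 1.75GHz.
leak_6144 = peak_fp32_tflops(6144, 1.75)

print(f"7552 cores @ 1.1GHz: {at_1_1:.1f} TFLOPS")
print(f"7552 cores @ 2.0GHz: {at_2_0:.1f} TFLOPS")
print(f"Core count vs Titan RTX: {core_ratio:.2f}x")
print(f"6144 cores @ 1.75GHz: {leak_6144:.1f} TFLOPS")
```

So the "30 Teraflops at 2GHz" line checks out as a paper number, and a 6144SP part would need roughly 1.7-1.8GHz to land in the 21-22 TFLOPS range mentioned earlier.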

this is why imo next generation consoles are so exciting! you have all this great technology getting into the hands of the typical masses of developers and gamers. honestly even once launched it won't be in the hands of the masses, that will take 1 or 2 years after next gen launch for that train to get really moving and of course you're looking at 3-5 years after next gen launch for this to become typical like PS4 is now, but it's still forward progress. so perhaps ~2023? it will be a somewhat negligible effect until then. it just takes time

5700XT offers performance of a GTX1080

Why do uninformed people think a game has to be made and tailored for a specific GPU?!

What is the market share for the xbsx?

Xsx isn't out, which should have been obvious to tell.... Devs make a game that can be played on various hardware, but it's not tailored to one specific gpu. That's not how it works...

Consoles (and in this case we are talking about devices faster than 99% of the gaming PC market) sell at a pace of about 40-50 million a year.

There will be a very strong base just in a year or so.

Amazing, somebody could post bullshit like this on a gaming enthusiast forum and not get smacked.

Try to read it slowly and you may need to read it twice: Navi beats Turing at perf/transistor.

"Uninformed people" in your sentence is hilariously ironic.

How, do you think, devs decide how complex the geometry could be and how detailed the textures?

Are you on acid, or something?

Devs make a game that can be played on various hardware, but it's not tailored to one specific gpu. That's not how it works...

Devs make a game that can be played on a zoo of configurations, not giving a fuck about any single one of them.

That is how stuff works in PC world.

Big enough devs optimize games (most of all, geometry, textures) for consoles.

Blizzard even went as far as to optimize CPU side of things for 6 cores.

Platform exclusives target that one single configuration and wipe the floor with anything we see on even remotely comparable PC hardware, in a "how could that kind of graphic be achieved on that GPU!?!?!?" way.

You've got to be particularly special to not understand the efficiency benefits closed systems like consoles enjoy over PC, coupled with the far larger install base that makes them the target hardware for developers to work towards. Nevertheless, it's a good laugh reading the delusional rationales they try to convince themselves with.

Some people in here aren't the brightest of the bunch apparently.... So let's say consoles get their cut down processor and gpu, all in a single package. That's called an APU. Still following along? This will already be dumbed down compared to having a separate cpu and gpu, each with its own dedicated cooling. Closed systems can only rely on lower clock speeds because of TDP and lower power limits. Anyone with common sense should be seeing where this is going (especially as history repeats itself).

While having lower specs, the games will never perform better than the pc counterpart, except for when devs get lazy, and fuck up the game. With consoles finally catching up to mid spec'd pc's, what would make someone think consoles will suddenly beat every current consumer gpu, running a completely different architecture? It's disingenuous and laughable at best. Gamepass and PSNow will prove all the naysayers wrong.

Having to work with 2 configurations for xbsx and lockheart, and 2 for ps5, won't suddenly make console games look better. And judging by the 2 responses above me, much thought wasn't put in, at all. Because of multiple configurations of a closed system, wouldn't devs code for the baseline? As in the weaker of the two? Maybe add in a few extras for the higher end console and what not. But nothing will beat being able to adjust these things. So to use basic logic and history, this has been easily proven that pc's have better hardware configurations, before consoles release, during their release, and for the whole console life cycle. Then to the next gen.

Sure, not every pc gamer will opt for the best of the best cpu/gpu, but that's the beauty of it. If i want the best gaming experience, I'm not going to buy that game on a console over the pc version. I'm gonna enjoy it at over 100fps, on an ultrawide monitor, everything maxed out. On the other hand, if all of your friends are on a certain console, then it only makes sense to get that instead.

Bonus round: anyone wanna try and disprove this with DF or anyone else's footage, articles, screenshots, etc? Everyone can have their opinion, which is fine, but facts do not lie. Pc has better hardware than consoles. Prove the facts wrong.

Those benefits are greatly exaggerated, these days even PC games run on low level APIs. Go ahead and take any recent multiplatform game and compare, there's no big difference between performance if you closely match the settings and hardware. Obviously developing a game as an exclusive lets developers focus on one machine, which lets them squeeze more out of it, but it's the same for PC (if PC had studios buying exclusives)

And to reiterate that some more, how many Xbox one X or ps4 pro games were exclusively built for it, and not just having a patch that lets you play with higher resolution than the regular Xbox one or ps4? Why would lockheart and series x be any different this time around? Just to only have a higher resolution on 12TF is a disservice to those that spent the extra money over lockheart. It won't have exclusive games, cause that would be a disservice to people who didn't spend the extra money to get the series x.

There are few facts in this post; hardware is hardware and it's agnostic of the host platform. The GPU in the Xbox One X, for example, while configured differently than, say, the RX 580, lines up almost perfectly with it in terms of capability scaling with teraflops. The only real delineation between the Scorpio Engine in the One X and an RX 580 PC build comes down to the CPU configuration.

You're not getting a dumbed down GPU by any means simply because it's in an APU; the only capability difference falls at the feet of the CPU, and that comes down to a poor design decision made before the generation. They're not making that mistake going into the 9th generation: the system is going to be much more balanced, have a far more capable CPU, and this 12 teraflop GPU will perform the same as a 12 teraflop GPU in a discrete PC build.

Compare any rx 580 to the apu in the Xbox 1X. Check out the difference in base clock speeds, and then what they can sustain because of proper cooling and being able to use more available power, as they aren't as limited by TDP. Or use the same settings on a pc with comparable hardware, and see how it'll still perform better, just because of cooling, power, clock speed. It's like having a gpu that is clocked at 1.7ghz, but i can run it at 2.2ghz. I'll get a big boost to frames, while the console will stay at 1.7ghz with the same gpu. So that same gpu performs better on pc than on a console.

This is halfway accurate. While the broader capability of a closed system is exaggerated a lot, over time they're able to eke more out of a console than out of comparable PC hardware. A comparable system to the base Xbox One simply wouldn't run a lot of games coming out these days, or would do so terribly and in an unplayable state. That right there is where the advantage of a console comes into effect.

Having to work with 2 configurations for xbsx and lockheart, and 2 for ps5, won't suddenly make console games look better.

Because the systems would be identical bar the GPU compute and possibly the amount of memory, meaning the only factor that would need to be accounted for would be resolution.

You can't compare them that way because the GPUs are intrinsically different while targeting roughly the same teraflops.

Oh, hi there, how are ya?

For the rest, you still didn't get:

1) what optimizing assets (geometry, textures, sometimes even code) for consoles means

2) XSeX beating (literally) 99% of PC gamer GPUs

3) There is no way anyone with a PC could vastly overpower XSeX (PS5 likely won't be far away either way) any time soon

Any examples of this? Games coming out on Xbox one these days make massive compromises, sub-30 fps at a very low resolution. The results would be similar if you ran it at the same settings on a similar PC.

Though there are games on consoles that use lower than low PC settings, so I guess in that sense it is in fact more "optimized".

Basically any game, it would be like taking a system with an old HD 7770 OC and an FX-8350 downclocked to less than 1.6 Ghz (slightly better IPC) and expecting some kind of playable experience.

Oh, hi there, how are ya?

Didn't mean to offend you, kid. Don't cry.

Consoles NEED every bit of optimization possible.

Consoles like Switch certainly.

I also wonder how that helps your argument, chuckle.

30fps

We have 30fps, because people prefer nicer graphics at 30fps, to this kind of graphics:

at 1000fps.

Do you think games aren't optimized for pc configurations or something?

I literally said that, explaining why: because there is a zoo of PC configs, there is no point in singling out any concrete configuration and making serious effort to optimize for it. Consoles, on the other hand...

2) Lol!?!? Proof of that?!

Have you checked how big is the gap between 5700XT and 2080, darling?

Why can't a pc owner upgrade to a $200 gpu and beat the one X?

Upgrade to which $200 GPU?

Are you on acid or something? Oh wait, I've already asked. Not that you'd admit it, anyhow.

3) my pc already shits on next gen

At most you can have an overpriced-whyonearthwasteyourmoney $1300 card, that, wait for it, is maybe 10% ahead of what Microsoft has announced.

And I've miss

Sorry kid, you are the offended one,

Ah, good to know, I was close to not realizing it.

there are several cards beating both the 5700 xt and 2080

XSeX is likely faster than 2080sup. There is only one card faster than 2080sup in consumer market, I thought.

There are several $150 gpu's that beat out the xb1x

And even more beating Switch. Heck, I doubt you could even buy a GPU that does NOT beat it.

so why wouldn't $200 gpu's shit on it as well?

Well, first, is WHEN.

And then there is butthurt Microsoft that wants revenge after current gen fiasco, when, among other things, it had much weaker console.

This brings us to consoles using chips faster than modern xx80 GPU.

Now, 1080 was released in 2016. There still isn't a $200 card that is faster than that.

10% more performance over a 16-17TF card?

Why compare TFs, when actual game perf figures are known.

Lmfaaaaaaaaaaaaaaao are you implying the 1x is on par with a gtx 1080?! It's not even on 1070's level of performance. This is fucking hilarious!

Dude, are you drunk?

Are you drunk? You quoted me when i clearly mentioned xb1x, you know, the console that is CURRENTLY out. You responded to that with the 1080 and 200 bucks. So, are YOU drunk?

You responded to that with the 1080 and 200 bucks.

I also mentioned XSeX beating 2080.

But you mentioned the 1080 in response to me saying you can beat xb1x with a 150 or 200 dollar card. Why mention the 1080 specifically to that quote? Mental capacity on minimum today? Or everyday? Go back and read again and again, till something clicks up there. Otherwise, you are just shifting goalposts as per usual. I'm not trying to be rude, but you keep on with immature statements, so I just throw them back your way. We can continue like adults, or we can stay on your childish level.

Mental capacity issues?

Why mention the 1080 specifically to that quote?

4 years old 1080 card is still unbeaten at $200 mark.

XSeX is faster than 2080.

WHEN do you expect to buy $200 card faster than 2080?

No one mentioned the 1080, but someone who is continuing to pull at strings and thin air. Rtx 2080 (non super) will beat the xbsx in framerate, raytracing, bandwidth. Possibly even the rtx 2070.

Imagine being terrible at riding a bike, or terrible at playing FPS

I don't even know how you came to these conclusions but they're particularly entertaining given that I would shit on you from a great height at both of those tasks.

So let's say consoles get their cut down processor and gpu, all in a single package. That's called an APU. Still following along?

Can't even get through your first paragraph without you being hilariously wrong.

So a 2070 will beat xbsx.

Good to know that there is someone on this planet that thinks 8tflops > 12 tflops.

10% more performance over a 16-17TF card?!

A 2080ti is not a 16-17 tflop card

The XSX is already better than a 2080 Super

The X1X is not a down clocked rx580 and is hardly inferior

You have no clue about me, but have hilariously embarrassed yourself in regards to those things LOL.