PS CEO Jim Ryan: "Devs are saying PS5 is easiest PS system to get code running on"

- Thread starter Boss Mog

Negotiator

Member

> Good. This should streamline workflow and hasten the pipeline. More games out the window, hopefully less bug-ridden and with shorter dev time as a complement.

With 4K assets in the pipeline? Don't hold your breath...

Myths

Member

> With 4K assets in the pipeline? Don't hold your breath...

Would only ever happen on a PC, Chief.

Negotiator

Member

> Would only ever happen on a PC, Chief.

PC-only?

Next-gen consoles are supposed to be 4K-ready (more RAM, more GPU grunt etc.)

In other words, expect fewer and bigger games. This has been the trend for a long time.

PS3 had 720p assets vs PS4 1080p assets. Just because they changed the ISA to x86, it doesn't mean the creative process (not programming) became easier. It becomes harder and harder every gen.
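To put rough numbers on the 720p → 1080p → 4K progression, here is a minimal sketch that counts the pixels in one output frame at each resolution. It assumes the standard 16:9 frame sizes and treats output pixel count as a crude proxy for how much asset detail a generation demands. Real texture and geometry budgets do not scale exactly with screen resolution, and the helper names are purely illustrative.

```python
# Common 16:9 output resolutions as (width, height).
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Pixels in one full frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def scale_factor(old: str, new: str) -> float:
    """How many times more pixels the new target has than the old one."""
    return pixel_count(new) / pixel_count(old)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name:>6}: {pixel_count(name):>9,} pixels per frame")
    print(f"720p -> 1080p: x{scale_factor('720p', '1080p'):.2f}")
    print(f"1080p -> 4K UHD: x{scale_factor('1080p', '4K UHD'):.2f}")
```

The jump from 720p to 1080p is 2.25x the pixels. The jump from 1080p to 4K is a further 4x, which gives some sense of why content creation, and not just programming, gets harder each generation.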

DonF

Member

Bless Cerny! Praise be! Ken Kutaragi is a genius, but not all game devs are geniuses, and not all need to be!

The PS3 was a great piece of hardware, but only the best and most dedicated devs were able to harness all of its power.

PS4 is a beast; if the PS5 is anything like the PS4, we are in for another great gen!

Komatsu

Member

Good to hear. Cerny is a great platform architect.

Can't wait till we have a better idea of the kinds of games that will be available in the console's launch window. And how much the platform can be pushed.

I mean, PS3 games looked like this in 2006:

And like this in 2013:

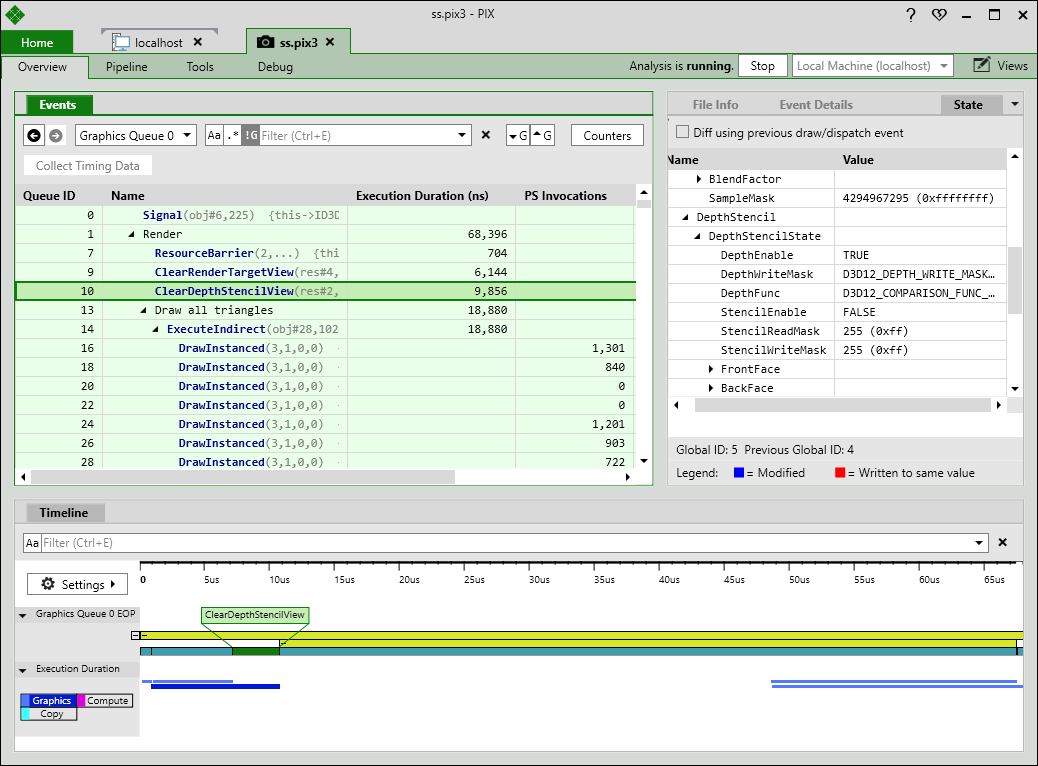

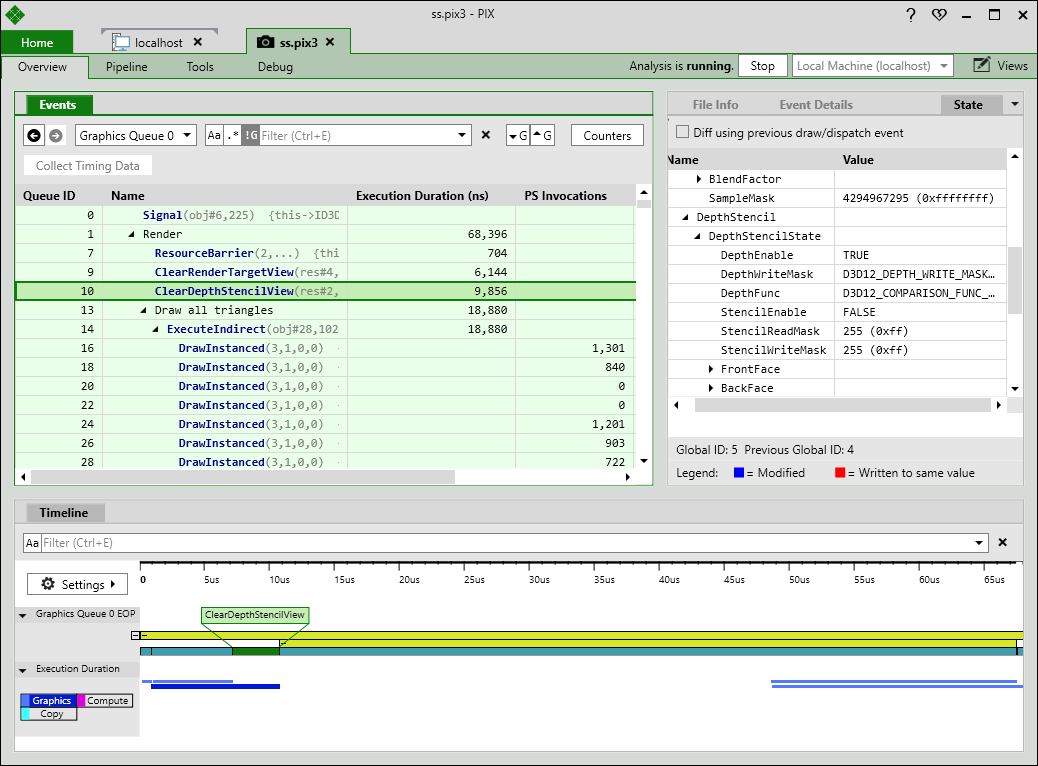

Which, considering PS consoles historically are the hardest to code for, doesn't say that much.

But a better API is always welcome.

PS2: Six memory spaces (IOP [2 MB], SPU [2 MB], CPU, GS, VU0&1), plus the different processors (GS, R5900 ["Emotion Engine"], MDEC, etc.). Not a super intuitive platform. Word on the street is that the initial documentation was not great either (for those curious, that's a link to the EE user's manual).

PS3: I really don't know what Sony was thinking: the GPU is weak, the CPU is a mess to work with (you have to leverage the SPEs to compensate for the GPU weakness, etc.), and the tools were reportedly so garbage that halfway through the generation some first-party studios had developed their own.

The Xbox 360 wasn't a walk in the park either, but at least they had this baby here. Long live PIX!

Kdad

Member

What else was he going to say?

"The Playstation 5 is a veritable anal fissure of game development."

Day Fucking One

mckmas8808

Mckmaster uses MasterCard to buy Slave drives

Great news! Maybe this means launch games will be good.

CJY

Banned

> The fact he has to say that should be seen more as an admission of fault for past failings.

No. Are you saying that when Tesla announces a new car that's faster, it's an "admission of fault" for not making their past cars just as fast? This logic applies to all industries and to technological advancement in general, particularly as it pertains to iterative refinement.

Using your logic, we should be seeing the final, ultimate iteration of everything from day one.

PendekarRajawali

Member

> I'd prefer it if it was easier for me to buy

Agreed.

*cough*

$400

*cough*

ahem.

Rest

All these years later I still chuckle at what a fucking moron that guy is.

> Don't make past mistakes again (PS3)... checked.
>
> PS4 was the easiest to code.

And wasn't Saturn the hardest at the time? Wasn't PS2 easy as well? It seems like the PS3 was the only time PlayStation was hard to work on in that regard.

Kagey K

Banned

> And wasn't Saturn the hardest at the time? Wasn't PS2 easy as well? It seems like the PS3 was the only time PlayStation was hard to work on in that regard.

No, the PS2 was super hard to develop for as well. In fact, that is the reason why Steve Ballmer came out and did the "Developers, Devopers, Devolpers" rant at that one E3.

Killzone developer says PS3 dev easier than PS2

You've heard it time and time again: the PS3 is not easy to develop for. However, the folks behind Sony's flagship FPS, Killzone 2, will disagree. Guerrilla Games' Managing Director Hermen Hulst spoke to GameDaily about working as a PlayStation-exclusive developer. "Like us, if you are native...

If you have a first party studio saying PS3 is easier, you know other parties were fucked on PS2.

Negotiator

Member

> No the PS2 was super hard to develop for as well. In fact that is the reason why Steve Ballmer came out and did the Developers, Devopers, Devolpers rant at that one E3.
>
> If you have a first party studio saying PS3 is easier, you know other parties were fucked on PS2.

Cell (SPUs) is easier than EE (VUs), just like Radeon GPGPU (CUs) is easier than Cell SPU programming.

What matters is the SIMD-centric philosophy, which is the pillar of a closed platform. Rest assured that Navi on PS5 will keep the same focus and extend it for even more uses (machine learning etc.)

LordOfChaos

Member

I'd love to get this guy's impressions on all new consoles

- PlayStation 1: Everything is simple and straightforward. With a few years of dedication, one person could understand the entire PS1 down to the bit level. Compared to what you could do on PCs of the time, it was amazing. But, every step of the way you said "Really? I gotta do it that way? God damn. OK, I guess... Give me a couple weeks." There was effectively no debugger. You launched your build and watched what happened.

- N64: Everything just kinda works. For the most part, it was fast and flexible. You never felt like you were utilizing it well. But it was OK, because your half-assed efforts usually looked better than most PS1 games. Each megabyte on the cartridge cost serious money. There was a debugger, but the debugger would sometimes have completely random bugs, such as off-by-one errors in the type determination of the watch window (displaying your variables by reinterpreting the bits as the type that was declared just prior to the actual type of the variable --true story).

- Dreamcast: The CPU was weird (Hitachi SH-4). The GPU was weird (a predecessor to the PowerVR chips in modern iPhones). There were a bunch of features you didn't know how to use. Microsoft kinda, almost talked about setting it up as a PC-like DirectX box, but didn't follow through. That wouldn't have worked out anyway. It seemed like it could be really cool. But man, the PS2 is gonna be so much better!

- PS2: You are handed a 10-inch-thick stack of manuals written by Japanese hardware engineers. The first time you read the stack, nothing makes any sense at all. The second time you read the stack, the 3rd book makes a bit more sense because of what you learned in the 8th book. The machine has 10 different processors (IOP, SPU1&2, MDEC, R5900, VU0&1, GIF, VIF, GS) and 6 different memory spaces (IOP, SPU, CPU, GS, VU0&1) that all work in completely different ways. There are so many amazing things you can do, but everything requires backflips through invisible blades of segfault. Getting the first triangle to appear on the screen took some teams over a month, because it involved routing commands through R5900->VIF->VU1->GIF->GS oddities with no feedback about what you were doing wrong until you got every step along the way to be correct. If you were willing to twist your game to fit the machine, you could get awesome results. There was a debugger for the main CPU (R5900). It worked pretty OK. For the rest of the processors, you just had to write code without bugs.

- GameCube: I didn't work with the GC much. It seems really flexible, like you could do anything, but nothing would be terribly bad or great. The GPU wasn't very fast, but its features were tragically underutilized compared to the Xbox. The CPU had incredibly low-latency RAM. Any messy, pointer-chasing, complicated data structure you could imagine should be just fine (in theory). Just do it. But more than half of the RAM was split off behind an amazingly high-latency barrier, so you had to manually organize your data into active vs. bulk. It had a half-assed SIMD that would do 2 floats at a time instead of 1 or 4.

- PSP: Didn't do much here either. It was played up as a trimmed-down PS2, but from the inside it felt more like a bulked-up PS1. They tried to bolt on some parts to make it less of a pain to work with, but those parts felt clumsy compared to the original design. Having pretty much the full-speed PS2 rasterizer for a smaller-resolution display meant you didn't worry about blending pixels.

- Xbox: Smells like a PC. There were a few tricks you could dig into to push the machine. But, for the most part it was enough of a blessing to have a single, consistent PC spec to develop against. The debugger worked! It really, really worked! PIX was hand-delivered by angels.

- Xbox360: Other than the big-endian thing, it really smells like a PC --until you dig into it. The GPU is great --except that the limited EDRAM means that you have to draw your scene twice to comply with the anti-aliasing requirement? WTF! Holy crap, there are a lot of SIMD registers! 4 floats x 128 registers x 6 register banks = 12 KB of registers! You are handed DX9 and everything works out of the box. But if you dig in, you find better ways to do things. Deeper and deeper. Eventually, your code looks nothing like PC-DX9 and it works soooo much better than it did before! The debugger is awesome! PIX! PIX! I Kiss You!

- PS3: A 95-pound box shows up on your desk with a printout of the 24-step instructions for how to turn it on for the first time. Everyone tries; most people fail to turn it on. Eventually, one guy goes around and sets up everyone else's machine. There's only one CPU. It seems like it might be able to do everything, but it can't. The SPUs seem like they should be really awesome, but not for anything you or anyone else is doing. The CPU debugger works pretty OK. There is no SPU debugger. There was nothing like PIX at first. Eventually some Sony 1st-party devs got fed up and made their own PIX-like GPU debugger. The GPU is very, very disappointing... Most people try to stick to working with the CPU, but it can't handle the workload. A few people dig deep into the SPUs and, Dear God, they are fast! Unfortunately, they eventually figure out that the SPUs need to be devoted almost full time to making up for the weaknesses of the GPU.
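Two numeric claims in the list above can be checked with simple arithmetic: the size of the Xbox 360 SIMD register file and the cost of GameCube-style 2-wide SIMD. The following is a minimal sketch. It takes 4 floats per register, 128 registers per bank and 6 banks straight from the post, and it assumes 32-bit (4-byte) floats. Reading the 6 banks as one per hardware thread (three cores with two threads each) is an assumption. `simd_ops_needed` is a hypothetical, idealized count that ignores loads, stores, alignment and instruction scheduling.

```python
def register_file_bytes(floats_per_register: int = 4,
                        registers_per_bank: int = 128,
                        banks: int = 6,
                        bytes_per_float: int = 4) -> int:
    """Total SIMD register storage, using the figures quoted for the Xbox360."""
    return floats_per_register * registers_per_bank * banks * bytes_per_float


def simd_ops_needed(n_floats: int, lanes: int) -> int:
    """Vector ops needed to touch n_floats when each op covers `lanes` floats.

    Uses ceiling division: a partial final group still costs a full op.
    """
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    return -(-n_floats // lanes)


if __name__ == "__main__":
    total = register_file_bytes()
    print(f"Xbox 360 SIMD registers: {total} bytes = {total // 1024} KiB")
    for lanes in (1, 2, 4):  # scalar, 2-wide (GameCube-style), 4-wide
        print(f"{lanes}-wide: {simd_ops_needed(1000, lanes)} ops for 1000 floats")
```

With these inputs the register file comes to 12,288 bytes (12 KiB). For 1000 floats, a 2-wide unit needs 500 ops where a 4-wide unit needs 250, which is the "half-assed" gap the GameCube entry complains about.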

Panajev2001a

GAF's Pleasant Genius

> No the PS2 was super hard to develop for as well. In fact that is the reason why Steve Ballmer came out and did the Developers, Devopers, Devolpers rant at that one E3.
>
> If you have a first party studio saying PS3 is easier, you know other parties were fucked on PS2.

It is interesting they were saying that... For modern shader-based games, having NVIDIA tooling and coders who had already cut their teeth on PS2 VUs made it easier to max out the SPEs (especially since they got internal experience with PS3 long before everyone else)... but I would not say they were easier in and of themselves (and that is not a dig against PS3)... see Cerny’s presentation about the famous “time to polygon” too.

The first year of PS2 was full of strong third party titles too.

Panajev2001a

GAF's Pleasant Genius

> I'd love to get this guy's impressions on all new consoles

lol

PS2 Linux kit famously had the SPS2 kernel extension and library that essentially turned it into a 16 MB of RAM TOOL dev kit.

LordOfChaos

Member

> lol so true about the headache of getting to grips with PS2 at the beginning! At least in PS2 Linux land (later arrived for TOOL dev kits too), you did have general debuggers for VUs, GIF, and GS (down to visualising render commands step by step and all the buffers... seeing depth and texture buffers was a big help). Look for SVVUDB (the guy that made it ended up at Sony and worked on the famous PS3 rubber ducks demo).
>
> PS2 Linux kit famously had the SPS2 kernel extension and library that essentially turned it into a 16 MB of RAM TOOL dev kit.

"There's no debugger for all of these cool new cores?"

"Just write code without bugs!"

Sony has come a long way from the older Japanese cultural norm that making things needlessly hard makes them 'better'.

Negotiator

Member

> They should thank AMD for inventing Ryzen CPU. A power horse that can run anything. If it was Sony they would still be tinkering with that old netbook CPU.

I don't think so.

The cat series (Bobcat, Jaguar, Puma) has been abandoned by AMD. There is no 7nm roadmap for those CPUs.

Sony and MS had to pay AMD to tape out Jaguar at 16nm FinFET, since AMD's roadmaps only had Jaguar available until 28nm.

It's easier to use Zen 2 and the performance benefit is a bonus.

ZywyPL

Banned

> Can't wait till we have a better idea of the kinds of games that will be available in the console's launch window. And how much the platform can be pushed.
>
> I mean, PS3 games looked like this in 2006:
>
> And like this in 2013:

It's not gonna happen. Consoles are now simple, closed-spec PCs, as opposed to the wicked, exotic, voodoo architectures they used to be, and all the power is available from the get-go now. Just look at PS4's launch games - Infamous:SS, TO:1886, Driveclub, KZ:SF. I could even argue those games offer better image quality and sharper textures than modern titles with their CBR (checkerboard rendering) and somewhat washed-out textures due to open-world structure. But that's a good thing IMO, because why wait half a decade, if not more, for great-quality games instead of receiving them right from the start?

DeepEnigma

Gold Member

> It's not gonna happen. Consoles are now simple, closed-spec PCs, as opposed to the wicked, exotic, voodoo architectures they used to be, and all the power is available from the get-go now. Just look at PS4's launch games - Infamous:SS, TO:1886, Driveclub, KZ:SF. I could even argue those games offer better image quality and sharper textures than modern titles with their CBR and somewhat washed-out textures due to open-world structure. But that's a good thing IMO, because why wait half a decade, if not more, for great-quality games instead of receiving them right from the start?

And you can see the evolution of Decima over time, from Killzone to HZD and now DS (and those are open world by comparison). RDR2 also looks better than other open-world games from around launch.

New rendering pipelines will always be developed over the course of a system's life. More so next gen with machine learning than this gen I wager. But I also feel the launch games next gen are going to be something of a graphical marvel, more than doubters are expecting.

Negotiator

Member

> It's not gonna happen. Consoles are now simple, closed-spec PCs, as opposed to the wicked, exotic, voodoo architectures they used to be, and all the power is available from the get-go now. Just look at PS4's launch games - Infamous:SS, TO:1886, Driveclub, KZ:SF. I could even argue those games offer better image quality and sharper textures than modern titles with their CBR and somewhat washed-out textures due to open-world structure. But that's a good thing IMO, because why wait half a decade, if not more, for great-quality games instead of receiving them right from the start?

The Order 1886 might be more impressive than Uncharted 4 graphics-wise, I'll give you that, but the gameplay leaves a lot to be desired... I don't want a static game with barely any interaction. Uncharted 4 as a whole (in terms of physics, AI interaction etc.) seems more impressive to me. I don't want a tech demo (there's the Demoscene for that), I want a video game.

RDR2 is also a huge step up over Witcher 3. Witcher 3 barely uses 2GB VRAM on PCs. There's a reason they were able to port it to Switch. I don't think we're gonna see an RDR2 Switch port. It's like PS3.5 vs PS4.5.

DeepEnigma

Gold Member

> The Order 1886 might be more impressive than Uncharted 4 graphics-wise, I'll give you that, but the gameplay leaves a lot to be desired... I don't want a static game with barely any interaction. Uncharted 4 as a whole (in terms of physics, AI interaction etc.) seems more impressive to me. I don't want a tech demo (there's the Demoscene for that), I want a video game.
>
> RDR2 is also a huge step up over Witcher 3. Witcher 3 barely uses 2GB VRAM on PCs. There's a reason they were able to port it to Switch. I don't think we're gonna see an RDR2 Switch port. It's like PS3.5 vs PS4.5.

The Order is also much more "corridor" and not as open as UC4. Man, I would love an 1886 sequel on the PS5.

> "There's no debugger for all of these cool new cores?"
>
> "Just write code without bugs!"
>
> Sony has come a long way from the older Japanese cultural norm that making things needlessly hard makes them 'better'.

You could always debug by printing memory dumps. On paper. (True story, btw.)

And yet the PS2 era was the best gaming era ever. And the console sold at unprecedented rates.

I blame millennials, actually.

Whiny little shits who feel entitled and cannot code.

Panajev2001a

GAF's Pleasant Genius

> "There's no debugger for all of these cool new cores?"
>
> "Just write code without bugs!"
>
> Sony has come a long way from the older Japanese cultural norm that making things needlessly hard makes them 'better'.

Oh sure, but the old way was partly more of a documentation and software tooling issue than bad HW choices (whenever you shoot for very high performance on a small power budget while trying to keep costs down, you get very focused and sometimes hard-to-learn HW, and rarely something that is easy to code for, powerful, and cheap).

My point, though, was that the statement about debuggers was only partially correct: it is true for launch, but not afterwards, and it also ignores the Performance Analyser tool they offered developers post-launch.

On PS2 Linux kit, coupled with low level efficient access (think real PS2 dev kit limited to 16 MB of RAM), people had visual debuggers for VU’s and VIF’s, GIF, and GS (down to being able to step through your GS packets and draw calls, going from memory... have not touched it in a looooong time).
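The contrast here is between "no feedback until every step is correct" and debuggers that let you step through each stage. The following toy model in Python sketches that contrast. The stage names (R5900, VIF, VU1, GIF, GS) come from the thread. Everything else is hypothetical and invented purely for illustration: the stage functions, the `aligned`/`microprogram`/`tag_ok` flags and the failure rules. None of it is a real PS2 SDK or PS2 Linux API.

```python
from typing import Callable, List, Optional, Tuple

Packet = dict
Stage = Callable[[Packet], Optional[Packet]]


def r5900(p: Packet) -> Optional[Packet]:
    # Main CPU: build the command list (toy model, always succeeds).
    return {**p, "dma_list": True}


def vif(p: Packet) -> Optional[Packet]:
    # VIF: unpack data into VU1 memory (toy rule: data must be aligned).
    return p if p.get("aligned") else None


def vu1(p: Packet) -> Optional[Packet]:
    # VU1: transform vertices (toy rule: a microprogram must be uploaded).
    return {**p, "transformed": True} if p.get("microprogram") else None


def gif(p: Packet) -> Optional[Packet]:
    # GIF: wrap output for the GS (toy rule: the tag must be valid).
    return p if p.get("tag_ok") else None


def gs(p: Packet) -> Optional[Packet]:
    # GS: rasterize the primitive.
    return {**p, "triangle": True}


PIPELINE: List[Tuple[str, Stage]] = [
    ("R5900", r5900), ("VIF", vif), ("VU1", vu1), ("GIF", gif), ("GS", gs),
]


def submit(packet: Packet, debugger: bool = False) -> str:
    """Push a packet through every stage and report what the developer sees."""
    for name, stage in PIPELINE:
        result = stage(packet)
        if result is None:
            # Without a stage-level debugger, every failure looks the same.
            return f"stopped at {name}" if debugger else "black screen"
        packet = result
    return "triangle on screen"


if __name__ == "__main__":
    broken = {"aligned": True, "microprogram": True, "tag_ok": False}
    print(submit(broken))                       # black screen
    print(submit(broken, debugger=True))        # stopped at GIF
    print(submit({**broken, "tag_ok": True}))   # triangle on screen
```

The point of the `debugger` flag is only to show why per-stage visibility (like the VU/VIF/GIF/GS debuggers mentioned above) shortens the hunt. It is not a model of how those tools actually worked.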

Rest

> But I also feel the launch games next gen are going to be something of a graphical marvel, more than doubters are expecting.

I hope so. People really oversold the last gen as a graphical jump. When I look at current-gen graphics, I see what people were claiming they saw last gen. The jump from PS2/Xbox to PS3/360 seemed really small to me, and the jump from PS3/360 to PS4/XB1 felt like catching up.

I want to be blown away next year.

Kagey K

Banned

> I hope so. People really oversold the last gen as a graphical jump. When I look at current-gen graphics, I see what people were claiming they saw last gen. The jump from PS2/Xbox to PS3/360 seemed really small to me, and the jump from PS3/360 to PS4/XB1 felt like catching up.
>
> I want to be blown away next year.

I felt right out of the gate that this gen was a stopgap to next gen and actually expected it to be a shorter gen, then the Pro and the X fucked it all up. Now I worry that the Pro and the X will reduce the gains made for next gen.

Negotiator

Member

> I hope so. People really oversold the last gen as a graphical jump. When I look at current-gen graphics, I see what people were claiming they saw last gen. The jump from PS2/Xbox to PS3/360 seemed really small to me, and the jump from PS3/360 to PS4/XB1 felt like catching up.
>
> I want to be blown away next year.

Really? Programmable shaders as a baseline feature was a huge step up in terms of realism.

bhunachicken

Member

> The jump from PS2/Xbox to PS3/360 seemed really small to me.

That's what I thought at first, too. Then I plugged my PS3 into an HDTV. Difference was night and day. Elder Scrolls IV looked like a completely different game.

> Which, considering PS consoles historically are the hardest to code for, doesn't say that much.
>
> But a better API is always welcome.

Well, the reason PlayStation exists is because PS1 was easier than Saturn to code for, but PS2 and 3, yeah......

Rest

> That's what I thought at first, too. Then I plugged my PS3 into an HDTV. Difference was night and day. Elder Scrolls IV looked like a completely different game.

I saw that difference too. My brother had a PS3 before I did; he had an HDTV but didn't have an HDMI cable. Uncharted looked like a Dreamcast game, then when he got an HDMI cable it looked like Uncharted.

> Really? Programmable shaders as a baseline feature was a huge step up in terms of realism.

When it comes to graphics I don't think there's much good discussion to be had in talking about any one technical specification, you have to look at images, animation, and games as a whole. You can get granular into polygon counts if you really want to, but all the horsepower on the screen doesn't mean that it looks better when it gets to someone's eyes and is interpreted by their brain. By the end of the PS2 era there was a lot of artistry going into making things look good without the latest greatest tech. The art isn't in the tools.

Negotiator

Member

> I saw that difference too. My brother had a PS3 before I did; he had an HDTV but didn't have an HDMI cable. Uncharted looked like a Dreamcast game, then when he got an HDMI cable it looked like Uncharted.
>
> When it comes to graphics I don't think there's much good discussion to be had in talking about any one technical specification, you have to look at images, animation, and games as a whole. You can get granular into polygon counts if you really want to, but all the horsepower on the screen doesn't mean that it looks better when it gets to someone's eyes and is interpreted by their brain. By the end of the PS2 era there was a lot of artistry going into making things look good without the latest greatest tech. The art isn't in the tools.

Are you saying Uncharted is just tech (pixel shaders) and no art? I'm pretty sure you weren't impressed by the gen leap because you didn't use an HDMI cable from the get-go.