M1chl

Currently Gif and Meme Champion

Stop being lazy fuck like myself and hover over my nick :D

If you like to apologize you must be from the northern part.

Sorry, I’m with the iPhone. Anyways, northern enough.

How SSD's will change game design in the new generation - Mystic

I knew it! When I said in the Next Gen thread that constant video recording for the "share" functionality would destroy the SSD in a very short time, they told me that modern SSDs are capable of handling it.

www.forbes.com


My boy put out a new video?

Two or three in the last 24 hours... between leaks and updates etc.

Thanks. This is a relief.

It should handle 1TB and more per day without breaking a sweat. Mine dropped from 100% to 81% after 10 years (OS). Even streaming at 4K60 won't hurt the SSD.

Normal use will never affect them negatively, and even heavy use won't be an issue.

At the best possible quality, Stadia will use 35 Mbps, or about 15.75GB per hour. At Google's recommended minimum quality, Stadia will use about 4.5GB per hour.

24*16*365 = 140,160GB per year (140TB, 24/7)@35Mbit (4K preset stadia)

24*32*365 = 280,320GB per year (280TB, 24/7)@70Mbit

24*320*365 = 2,803,200GB per year (~2,800TB, 24/7)@700Mbit
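For anyone who wants to redo the arithmetic above with other bitrates, here is a small sketch. The function names are made up for illustration, and it uses decimal units (1 GB = 1000 MB) without rounding the hourly figure up to 16GB, so the yearly totals come out slightly lower than the numbers in the post.

```python
def gb_per_hour(mbit_per_s: float) -> float:
    """Convert a bitrate in Mbit/s to decimal gigabytes per hour."""
    megabytes_per_s = mbit_per_s / 8      # 8 bits per byte
    return megabytes_per_s * 3600 / 1000  # 3600 s per hour, 1000 MB per GB


def tb_per_year(mbit_per_s: float, hours_per_day: float = 24.0) -> float:
    """Terabytes produced per year when recording at this bitrate."""
    return gb_per_hour(mbit_per_s) * hours_per_day * 365 / 1000


for rate in (35, 70, 700):
    print(f"{rate} Mbit/s: {gb_per_hour(rate):.2f} GB/h, "
          f"{tb_per_year(rate):.0f} TB/year at 24/7")
```

At 35 Mbit this gives 15.75GB per hour and roughly 138TB per year, in line with the ~140TB figure above.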

SAMSUNG 970 EVO (500GB, 2018)

300TB written in total (300 TBW) guaranteed under the 5-year warranty

300 / 5 = 60TB per year, or about 164GB per day, every day for 5 years, before reaching the rated endurance.

2TB model goes up to 1,200 TBW.
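To put an endurance rating next to the recording bitrates, here is a rough sketch. It assumes the rating is a total-terabytes-written (TBW) figure, which is how Samsung's datasheet states it for the 970 EVO (300 TBW for the 500GB model, 1,200 TBW for the 2TB model). The function name and the 2 hours/day figure are made up for illustration, and it only counts the recording writes, nothing else the drive does.

```python
def years_until_rated_tbw(tbw: float, mbit_per_s: float, hours_per_day: float) -> float:
    """Years of recording at `mbit_per_s` before `tbw` terabytes have been written."""
    gb_per_hour = mbit_per_s / 8 * 3600 / 1000            # Mbit/s -> GB per hour
    tb_per_year = gb_per_hour * hours_per_day * 365 / 1000
    return tbw / tb_per_year


# TBW ratings as assumed in the lead-in; 2 hours/day is an arbitrary "casual" figure.
for label, tbw in (("970 EVO 500GB", 300), ("970 EVO 2TB", 1200)):
    nonstop = years_until_rated_tbw(tbw, 35, 24)
    casual = years_until_rated_tbw(tbw, 35, 2)
    print(f"{label}: {nonstop:.1f} years at 24/7, "
          f"{casual:.1f} years at 2 h/day (35 Mbit/s)")
```

Under those assumptions the 500GB model would reach its rating after a bit over two years of nonstop 35 Mbit recording, and after a couple of decades at 2 hours a day. A drive does not necessarily fail the moment it passes its rated TBW; the rating is what the warranty covers.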

How Much Data Do We Create Every Day? The Mind-Blowing Stats Everyone Should Read

We are generating truly mind-boggling amounts of data on a daily basis simply by using the Internet or logging on to Facebook or Instagram, communicating with each other or using smart devices. Here we look at some of the amazing facts and figures of this big data world.

www.forbes.com

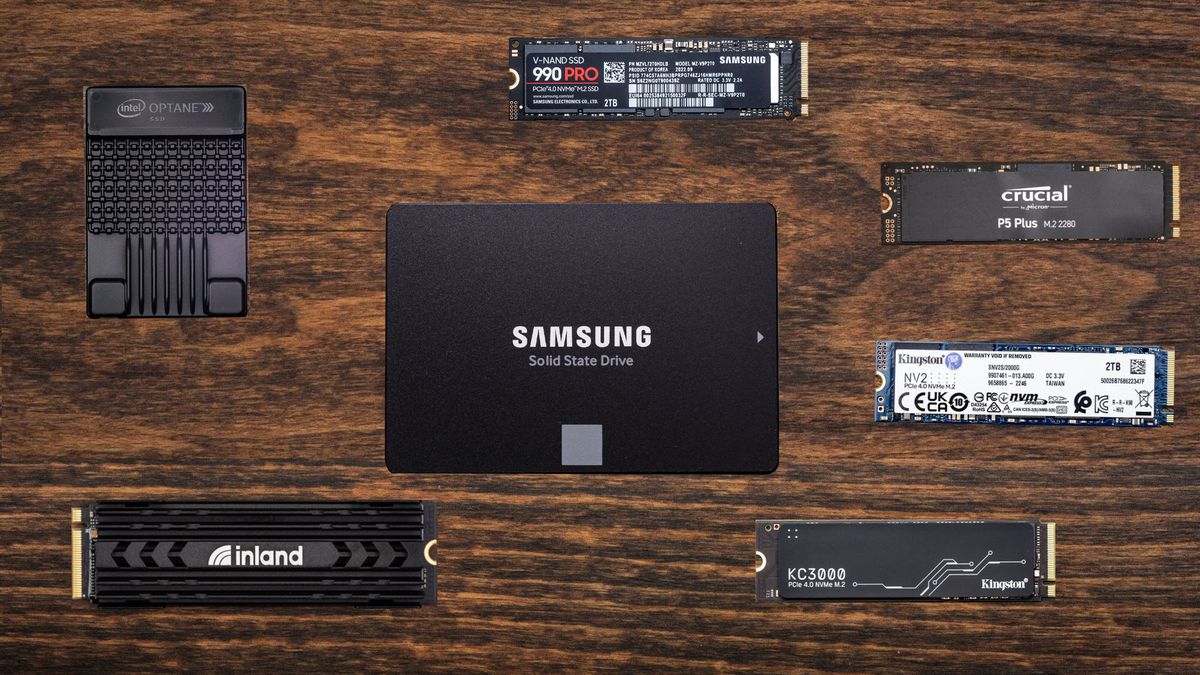

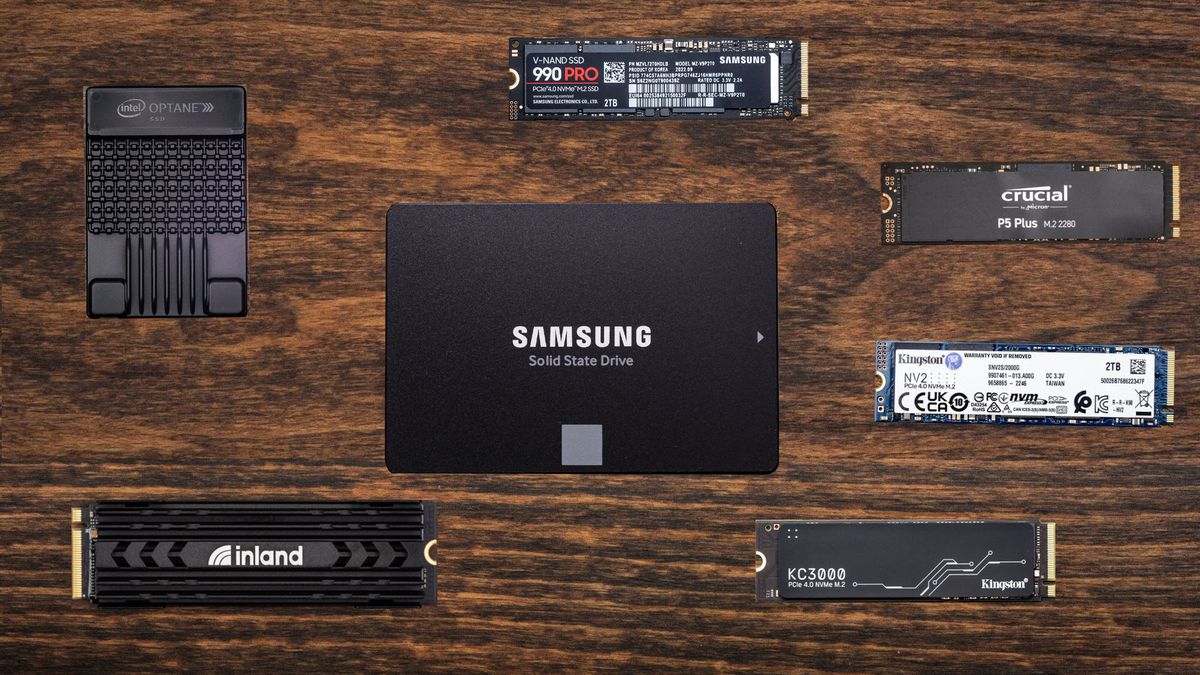

Best SSDs 2024: From Budget SATA to Blazing-Fast NVMe

Based on our extensive tests, these are best SSDs for every need and budget.

www.tomshardware.com

So they are changing memory addresses to lessen the rewriting impact on SSDs? That seems like a good idea. Good on them.
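For anyone wondering what "changing memory addresses" can look like, here is a toy sketch of wear leveling in general. It is not the scheme from the patent or from any real controller: the class name, the block count, and the "always pick the least-erased free block" policy are all assumptions made for illustration.

```python
class ToyWearLeveler:
    """Toy translation layer: each write goes to the least-worn free block."""

    def __init__(self, num_blocks: int) -> None:
        self.erase_counts = [0] * num_blocks   # wear per physical block
        self.mapping: dict[int, int] = {}      # logical address -> physical block
        self.free = set(range(num_blocks))     # physical blocks holding no live data
        self.data: dict[int, bytes] = {}       # physical block -> contents

    def write(self, logical: int, payload: bytes) -> None:
        if not self.free:
            raise RuntimeError("no free blocks")
        # Pick the free block that has been erased the fewest times.
        target = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(target)
        self.data[target] = payload
        old = self.mapping.get(logical)
        self.mapping[logical] = target
        if old is not None:
            # The stale copy is erased and returned to the free pool.
            del self.data[old]
            self.erase_counts[old] += 1
            self.free.add(old)

    def read(self, logical: int) -> bytes:
        return self.data[self.mapping[logical]]


ssd = ToyWearLeveler(num_blocks=4)
for i in range(100):
    ssd.write(0, f"save {i}".encode())  # hammer one logical address
print(ssd.erase_counts, ssd.read(0))
```

Even though the same logical address is rewritten 100 times, the erases end up spread almost evenly over the four physical blocks instead of landing on one.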


Can't wait to finally have open world games, where u can walk into houses and leave and move further in the world without loading.

Oh wait.

A healthy dose of skepticism is always good...

The houses you can go in can now be far more complex and far more different from everything that's around them, allowing for much more unique interiors for houses that are close together. Not to mention it's far easier to implement even in the cases you mention, meaning they will be able to do it more often and at a far larger scale. It's also possible at higher speeds.

Yes, precisely. This is just a description of the wear management system that Sony have used in their drive, written in maximally confusing patentese. Note that all SSDs have some form of wear management because if they didn't the most frequently accessed blocks would be quickly destroyed. Also note that for at least several decades the majority of patents don't really describe any advances in the state of the art - just different ways of doing things that aren't covered by existing patents, which is why poring over them trying to extract anything of value is often a pointless exercise.

This is more than wear management. It's also about a low level SSD access system that works along with a traditional one, but is used for accessing read-only data (think textures, level data, code) in a very low latency/high bandwidth way. The low level system has a much simpler and condensed way to store access metadata so that much more of it can be stored on the SRAM of the SSD controller. This prevents needing to first go to the SSD or main memory just to look up house keeping data before even accessing the data you really want. Cutting out that middleman reduces latency a lot, and helps maintain bandwidth when making lots of small reading requests.


There is other stuff in there like

- using priority levels to give that low level access first dibs on the data

- using accelerators to check for data integrity, tampering, and doing decompression

- splitting up reads into small chunks that can be done in parallel and stored on the controller before ultimately going to main memory
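Two of the points in the list above, priority levels and splitting reads into small parallel chunks, can be sketched roughly like this. It is only an illustration of the general idea, not what the patent or any real controller does: the chunk size, the in-memory `STORAGE` buffer, the request names, and the use of a thread pool are all assumptions. The accelerator steps (integrity checks, decompression) are left out.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from itertools import count

CHUNK = 4                   # hypothetical chunk size in bytes, tiny for readability
STORAGE = bytes(range(64))  # stand-in for the flash contents


def split_into_chunks(offset: int, length: int, chunk: int = CHUNK) -> list[tuple[int, int]]:
    """Split one read into (offset, length) pieces of at most `chunk` bytes."""
    end = offset + length
    return [(pos, min(chunk, end - pos)) for pos in range(offset, end, chunk)]


def read_chunk(piece: tuple[int, int]) -> bytes:
    pos, size = piece
    return STORAGE[pos:pos + size]


def serve(requests: list[tuple[int, str, int, int]]) -> list[tuple[str, bytes]]:
    """Serve (priority, name, offset, length) requests, lowest priority number first."""
    order = count()  # tie-breaker keeps equal-priority requests in arrival order
    heap = [(prio, next(order), name, off, length)
            for prio, name, off, length in requests]
    heapq.heapify(heap)
    results = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        while heap:
            _, _, name, off, length = heapq.heappop(heap)
            # Chunks of one request are read in parallel, then reassembled in order.
            parts = pool.map(read_chunk, split_into_chunks(off, length))
            results.append((name, b"".join(parts)))
    return results


# Priority 0 stands for the low level read-only path, 1 for ordinary file access.
done = serve([(1, "save-file", 0, 6), (0, "texture", 16, 10)])
print([name for name, _ in done])
```

The "texture" request was submitted second but is served first because it has the higher priority (lower number), and its 10 bytes are fetched as three chunks.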

But none of these techniques are new - all they have done is mashed them all up into a specific implementation and wrapped it with enough patentese to pass the now very low bar for "innovation" you need to get a patent. I've been involved in this game myself - when you are designing something you make a whole bunch of implementation decisions and it's now common practice to try and describe them in a way that's possibly patentable to give you extra ammunition against other people.

Personally, I absolutely hate it - you end up expending engineering effort on stupid crap that's just different enough to avoid the minefield created by all the other nonsense patents that have been issued rather than concentrating on the things that will actually make a difference. But it makes work for "IP lawyers" and I guess that has to count for something ... maybe.

I was responding to you saying this was about SSD wear management. It wasn't. Wear management was hardly mentioned in the patent. This patent was primarily about how to remove the bottlenecks that would cripple a very fast SSD.