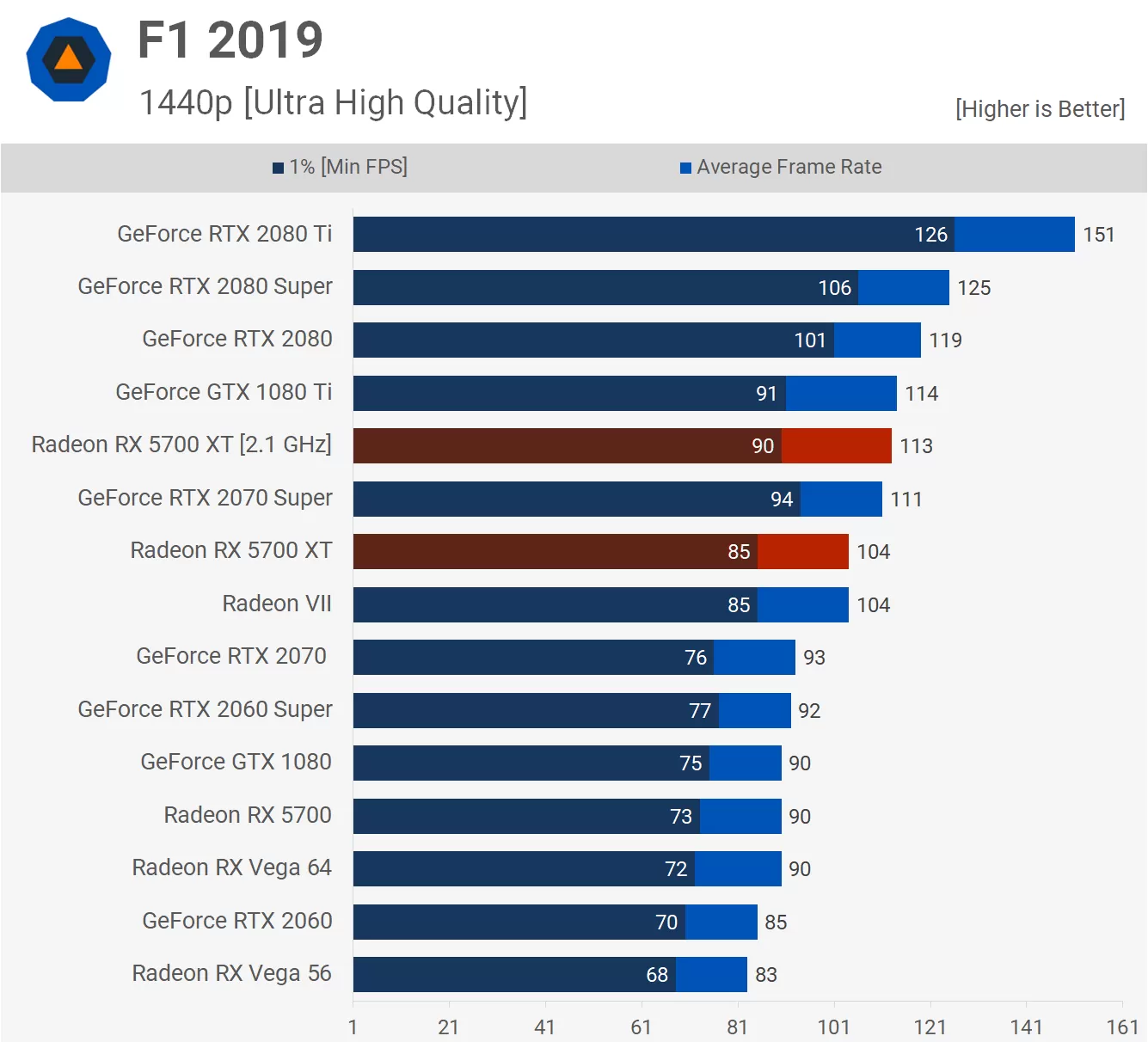

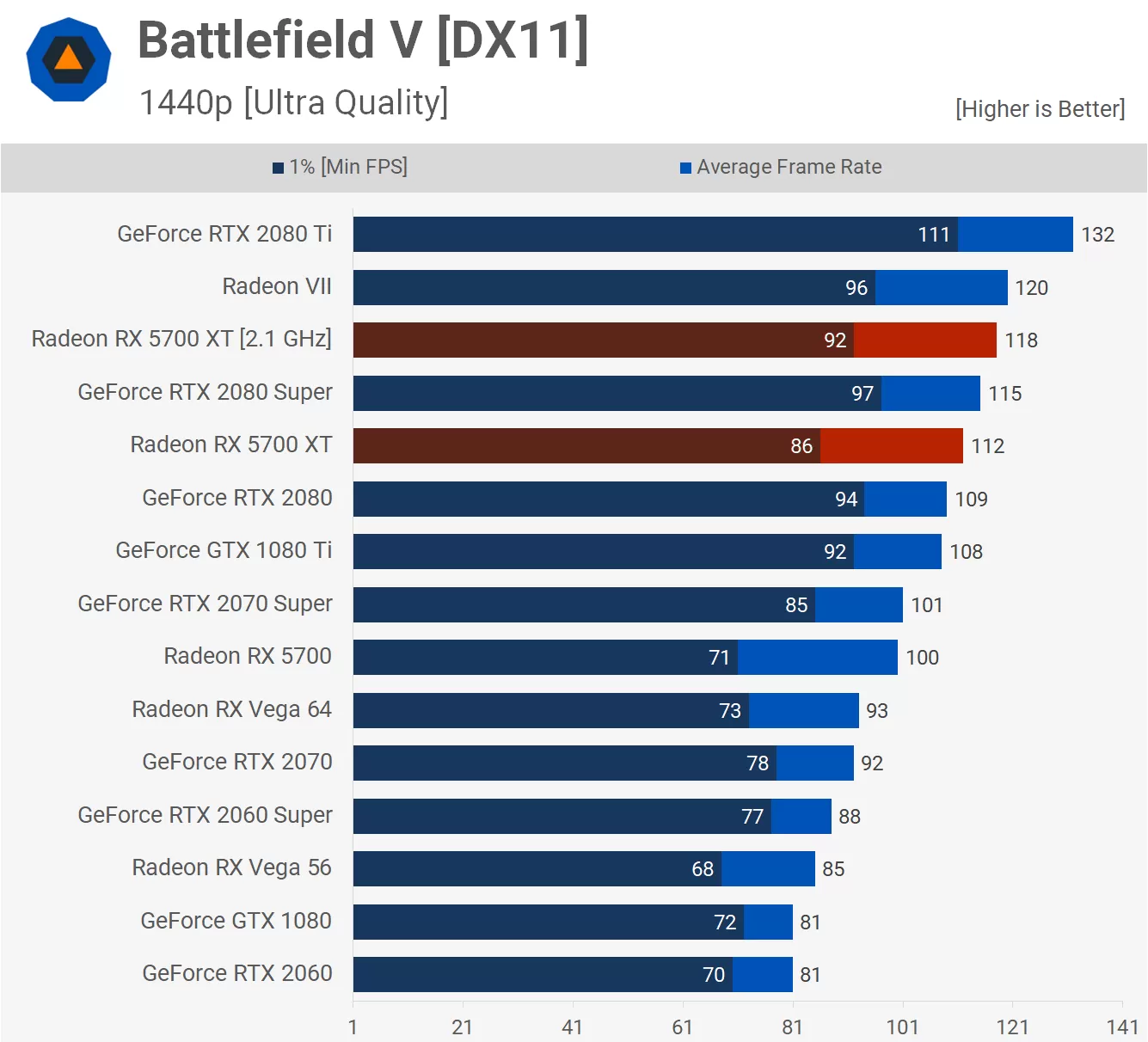

I am talking about real performance, like in games.

You want some overclock examples for the 5700 XT?

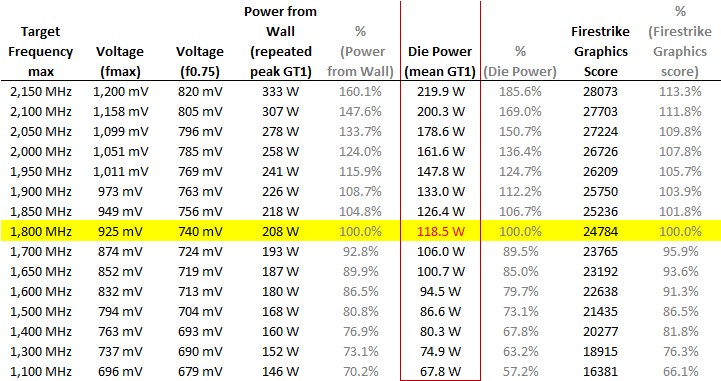

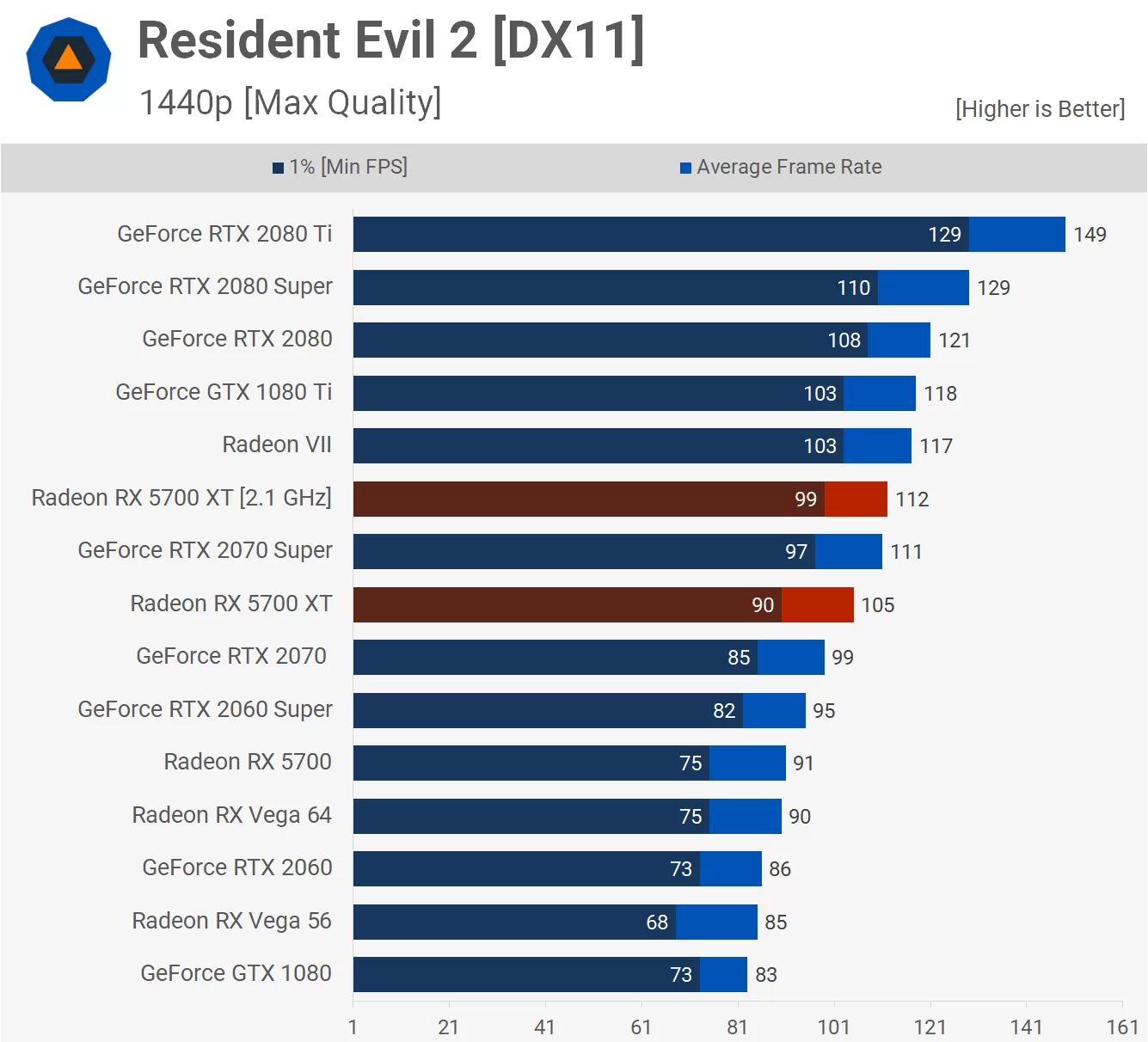

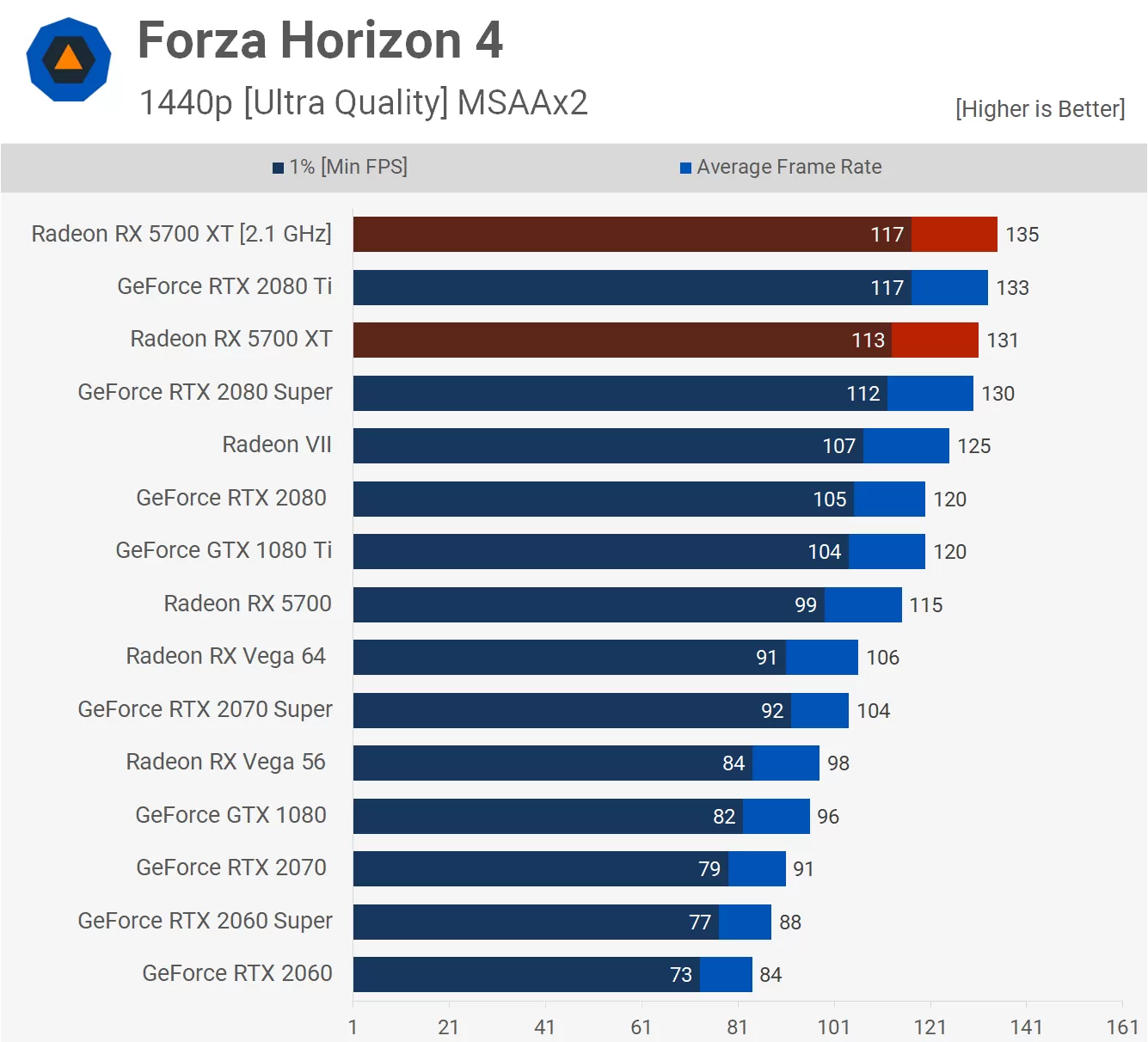

Going from 1800 to 2050 MHz overclocked, you get 5% in Battlefield, 6% in RE2, 4% in AC: Odyssey, 6% in Fortnite, 3% in Forza Horizon, and 6% in Tomb Raider. Out of more than a dozen games tested, only one managed 10%: The Division 2.

And that was at 1440p, not even 4K.

Result: for a ~7% performance increase you get a ~40% increase in power consumption, with everything that comes with it.
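To put that trade-off in one number, here is a quick back-of-the-envelope sketch. It only uses the rounded figures quoted above (1800 to 2050 MHz, ~7% performance, ~40% power); the variable names are mine, and the result is only as precise as those estimates.

```python
# Rounded figures from the post; the ~7% and ~40% values are estimates, not measurements.
base_clock_mhz = 1800
oc_clock_mhz = 2050
perf_gain = 0.07    # ~7% more fps
power_gain = 0.40   # ~40% more power draw

# Relative clock increase from the overclock.
clock_gain = oc_clock_mhz / base_clock_mhz - 1

# Performance per watt relative to stock (1.0 means unchanged).
perf_per_watt_ratio = (1 + perf_gain) / (1 + power_gain)

# Fraction of the clock increase that actually shows up as extra performance.
scaling = perf_gain / clock_gain

print(f"clock increase: {clock_gain:.1%}")
print(f"perf per watt vs stock: {perf_per_watt_ratio:.2f}x")
print(f"clock gain converted to performance: {scaling:.0%}")
```

With those inputs, performance per watt lands at roughly three quarters of stock, and only about half of the clock increase turns into frames.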

so, I'm not "making up shit", and also, I've had enough conversation with you.

thank you