SenjutsuSage


So I watched the session titled "Xbox Velocity Architecture: Fast Game Asset Streaming and Minimal Load Times for Games of Any Size".

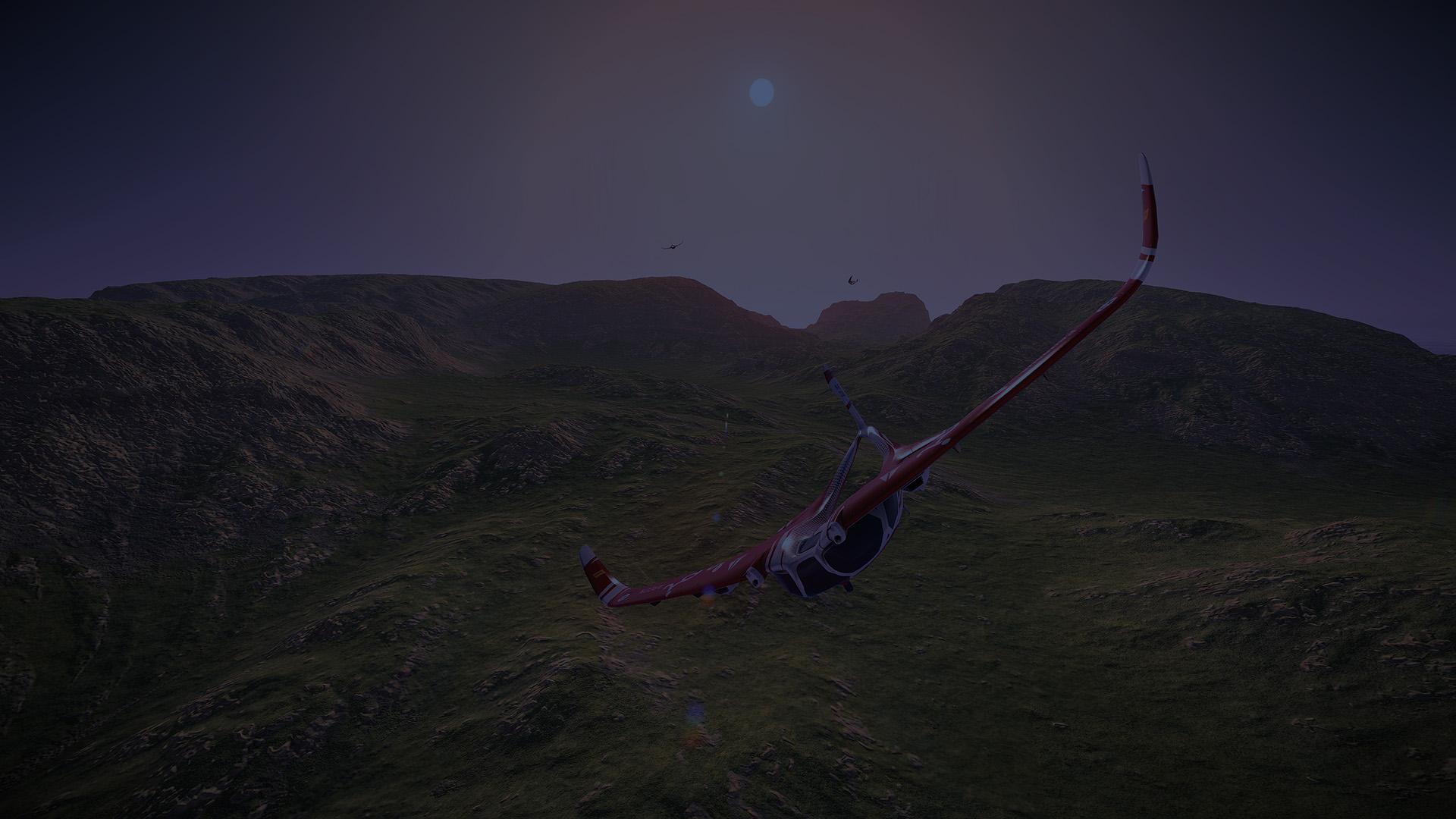

Remember that demo they showed running on Series S? Well, they expanded on it significantly and ran it on Series X this time. They went into a lot more detail and showed it running in real time. Needless to say, the results are fucking impressive.

The demo contains over 10GB of texture data, detailed enough for close-up inspection.

They showed the numbers for streaming the same content on the equivalent of an Xbox One X, and how long it takes to load it all: roughly 22 seconds for 2.7GB of data.

On Series X, this dropped to only 565MB, and the Series X completed the task twice in real time in under 0.20 seconds: 0.19 seconds the first time and 0.17 seconds the second.

They also showed a highly optimized, intentionally conservative Gen 9 equivalent of texture streaming without Sampler Feedback Streaming (SFS). They pointed out that the numbers shown for this non-SFS Gen 9 case are actually lower than what real games would see, because many texture streaming systems over-stream and won't be nearly as well optimized as their example. Compared with the last-gen equivalent, Series X did the equivalent of 2.68GB in under 0.20 seconds. Compared with the conservative, highly optimized Gen 9 equivalent (which they stress would actually use more memory than what's shown), Series X did the equivalent of 1.57GB in under 0.20 seconds.
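
The memory multipliers implied by these figures are easy to work out. A minimal sketch using only the numbers quoted above (565MB resident with SFS versus the 2.68GB and 1.57GB equivalents); the constant and function names are just illustrative:

```python
# Figures as reported from the session, in GB (decimal, as quoted in the post).
SFS_RESIDENT_GB = 0.565           # Series X with Sampler Feedback Streaming
LAST_GEN_EQUIVALENT_GB = 2.68     # equivalent traditional streaming, last gen
GEN9_NO_SFS_EQUIVALENT_GB = 1.57  # optimized Gen 9 streaming without SFS


def memory_multiplier(traditional_gb: float, sfs_gb: float) -> float:
    """How many times more texture memory the traditional approach needs."""
    return traditional_gb / sfs_gb


vs_last_gen = memory_multiplier(LAST_GEN_EQUIVALENT_GB, SFS_RESIDENT_GB)
vs_gen9 = memory_multiplier(GEN9_NO_SFS_EQUIVALENT_GB, SFS_RESIDENT_GB)
print(f"vs last gen: {vs_last_gen:.2f}x")           # vs last gen: 4.74x
print(f"vs Gen 9 without SFS: {vs_gen9:.2f}x")      # vs Gen 9 without SFS: 2.78x
```

So by these figures, the traditional approaches need roughly 4.7x and 2.8x as much memory as SFS for the same scene.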

By far one of the most impressive parts for me was when they started doing very fast camera cuts, the kind that typically give streaming systems trouble and expose pop-in. Even in those extreme cases, Sampler Feedback Streaming was fast enough that there was no pop-in, at least none that I could see, and each cut could demand upwards of 1-2GB/s of texture data at any given moment.
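
To put 1-2GB/s in perspective, it helps to convert it into a per-frame budget. This is a back-of-the-envelope sketch: the 30fps and 60fps frame rates are assumptions (the demo's frame rate isn't stated here), and GB is treated as 10^9 bytes.

```python
def mb_per_frame(rate_gb_per_s: float, fps: float) -> float:
    """Megabytes that must arrive each frame to sustain rate_gb_per_s (decimal units)."""
    return rate_gb_per_s * 1000.0 / fps


# Assumed frame rates; not taken from the session.
for fps in (30, 60):
    for rate in (1.0, 2.0):
        print(f"{rate:.0f}GB/s at {fps}fps = {mb_per_frame(rate, fps):.1f}MB per frame")
```

At 60fps, for example, 2GB/s works out to roughly 33MB of new texture data landing every single frame.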

They pointed out that whenever games need to do this kind of loading, there is typically a loading screen or a cutscene to hide pop-in, but SFS is fast enough that neither is needed, even with over 10GB of texture data.
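
Roughly, the reason no loading screen is needed is that SFS is driven by feedback: the GPU records which mip level each region of a texture actually wanted, the streamer loads only what's missing, and in the meantime sampling falls back to the finest mip already in memory. Below is a hypothetical, heavily simplified model of that loop; `Region`, `plan_frame` and `apply_loads` are illustrative names, not part of the D3D12 or Xbox APIs, and loading every intermediate mip is an assumption of the sketch.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """One texture region. Mip 0 is the finest; larger numbers are coarser."""
    desired_mip: int   # finest mip the GPU asked for (from sampler feedback)
    resident_mip: int  # finest mip currently loaded for this region


def plan_frame(regions):
    """Return (mip each region samples this frame, list of (region index, mip) loads)."""
    sampled = []
    loads = []
    for i, r in enumerate(regions):
        # Never sample finer than what's resident: clamp to the resident mip as a fallback.
        sampled.append(max(r.desired_mip, r.resident_mip))
        # Sketch assumption: queue every missing mip from coarse to fine.
        for mip in range(r.resident_mip - 1, r.desired_mip - 1, -1):
            loads.append((i, mip))
    return sampled, loads


def apply_loads(regions, loads):
    """Pretend the queued loads finished: update the finest resident mip per region."""
    for i, mip in loads:
        regions[i].resident_mip = min(regions[i].resident_mip, mip)


# A camera cut: region 0 is now close up (wants mip 0), region 1 is far away (mip 3).
regions = [Region(desired_mip=0, resident_mip=3), Region(desired_mip=3, resident_mip=3)]
sampled, loads = plan_frame(regions)
print(sampled, loads)       # [3, 3] [(0, 2), (0, 1), (0, 0)]
apply_loads(regions, loads)
print(plan_frame(regions))  # ([0, 3], [])
```

The frame right after the cut still renders (at a coarser mip) instead of stalling, and once the loads land the region samples at full detail.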

The session is over, so the video isn't available right now, but it will surely be posted later. Here's a screen cap from the demo.

They point out that Xbox Series X and Series S games are guaranteed a sustained raw read speed of no less than 2GB/s. The drive is still 2.4GB/s raw and does hit that, but only when the OS and hardware aren't doing anything else. They reiterate that SFS is a genuine multiplier on both effective SSD throughput and graphics memory. So, going by the minimum guaranteed read speed, that works out to 6GB/s raw with SFS and 10GB/s compressed with SFS.
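
The arithmetic behind those figures is raw throughput times an effective multiplier. A minimal sketch: the 3x multiplier is an assumption inferred from the post's own 2GB/s to 6GB/s figure, not a number quoted from the session, and the 10GB/s compressed figure depends on a compression ratio that isn't stated, so it isn't modeled here.

```python
def effective_rate(raw_gb_per_s: float, multiplier: float) -> float:
    """Effective texture throughput when only the needed data has to be read."""
    return raw_gb_per_s * multiplier


GUARANTEED_RAW_GB_S = 2.0       # sustained minimum quoted in the session
PEAK_RAW_GB_S = 2.4             # raw rate when the OS/hardware is otherwise idle
ASSUMED_MULTIPLIER = 3.0        # assumption: implied by the post's 2GB/s -> 6GB/s figure
DEMO_MULTIPLIER = 1.57 / 0.565  # the demo's Gen 9 memory comparison, about 2.78x

print(f"{effective_rate(GUARANTEED_RAW_GB_S, ASSUMED_MULTIPLIER):.1f}GB/s")  # 6.0GB/s
print(f"{effective_rate(PEAK_RAW_GB_S, ASSUMED_MULTIPLIER):.1f}GB/s")        # 7.2GB/s
print(f"{effective_rate(GUARANTEED_RAW_GB_S, DEMO_MULTIPLIER):.1f}GB/s")     # 5.6GB/s
```

Using the demo's more conservative Gen 9 comparison instead of a flat 3x lands a bit lower, at around 5.6GB/s effective.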

Now, what about far more complex-looking games? One could argue it only works this well because it's a controlled demo. They say with confidence that Sampler Feedback Streaming's multiplier effect will hold no matter what.
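
A toy model helps show why a per-texture saving would carry over to bigger scenes. This is purely illustrative, not Microsoft's implementation: it assumes 64KB tiles (the tile size D3D12 tiled resources use), a hypothetical texture 16x16 tiles wide at its finest mip, and that only a quarter of the finest-mip tiles are actually sampled. In this model the ratio is independent of scene size by construction; the point is simply that a per-texture saving multiplies through as content grows.

```python
TILE_BYTES = 64 * 1024  # 64KB tiles, as used by D3D12 tiled (reserved) resources


def tiles_in_mip(side_tiles: int, mip: int) -> int:
    """Tiles in a square texture's mip level; mip 0 is finest, each level halves the side."""
    side = max(1, side_tiles >> mip)
    return side * side


def traditional_bytes(side_tiles: int, mip_count: int) -> int:
    """Whole-mip streaming: every tile of every mip level is resident."""
    return TILE_BYTES * sum(tiles_in_mip(side_tiles, m) for m in range(mip_count))


def sfs_bytes(side_tiles: int, mip_count: int, sampled_fraction: float) -> int:
    """Feedback-driven streaming: only the sampled share of the finest mip is loaded.

    Coarser mips stay fully resident as a fallback (a simplifying assumption).
    """
    finest = round(tiles_in_mip(side_tiles, 0) * sampled_fraction)
    coarser = sum(tiles_in_mip(side_tiles, m) for m in range(1, mip_count))
    return TILE_BYTES * (finest + coarser)


# Hypothetical per-texture parameters.
SIDE_TILES, MIP_COUNT, SAMPLED = 16, 5, 0.25

for textures in (100, 1000):  # "simple demo" vs "bigger AAA scene"
    trad = textures * traditional_bytes(SIDE_TILES, MIP_COUNT)
    sfs = textures * sfs_bytes(SIDE_TILES, MIP_COUNT, SAMPLED)
    print(f"{textures} textures: {trad / 2**30:.2f}GiB vs {sfs / 2**30:.2f}GiB, "
          f"ratio {trad / sfs:.2f}x")
```

Both totals grow tenfold between the two scenes, while the ratio stays at about 2.29x in this made-up configuration.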

"the absolute numbers you see in the numbers bar aren't that high especially compared to the 10 - 16GB of memory you see in our consoles. Our content in this tech demo is fairly simple. A real AAA title would likely have significantly more complex materials and more objects visible. Crucially however, the comparison between sampler feedback streaming and traditional MIPS streaming holds true regardless of material complexity. The numbers will scale with content and the multiplier will still ring true."

Full Game Stack Live Presentation Video added:
