Saw iWaggleVR tweet a link to Game Watch's Japanese-language report on a GDC session by Sony London Studios. Here's the Google Translate link:

https://translate.google.co.uk/tran....impress.co.jp/docs/news/20160315_748313.html

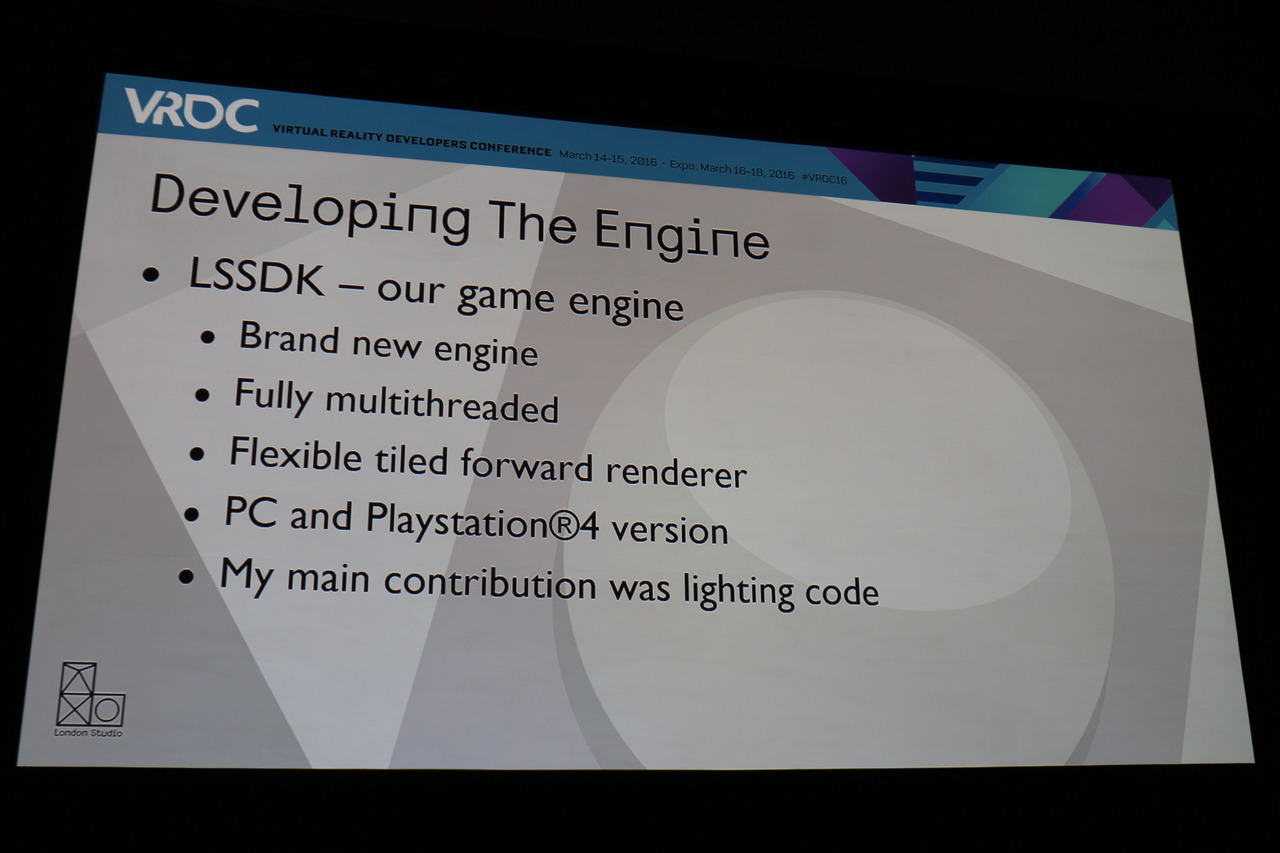

Seems like Sony London Studios have built an in-house engine from scratch purely for VR, named LSSDK. It's actually multiplatform and also runs on Windows, but Windows is used only as a quick-iteration development environment. Some of its technology is being shared with third parties.

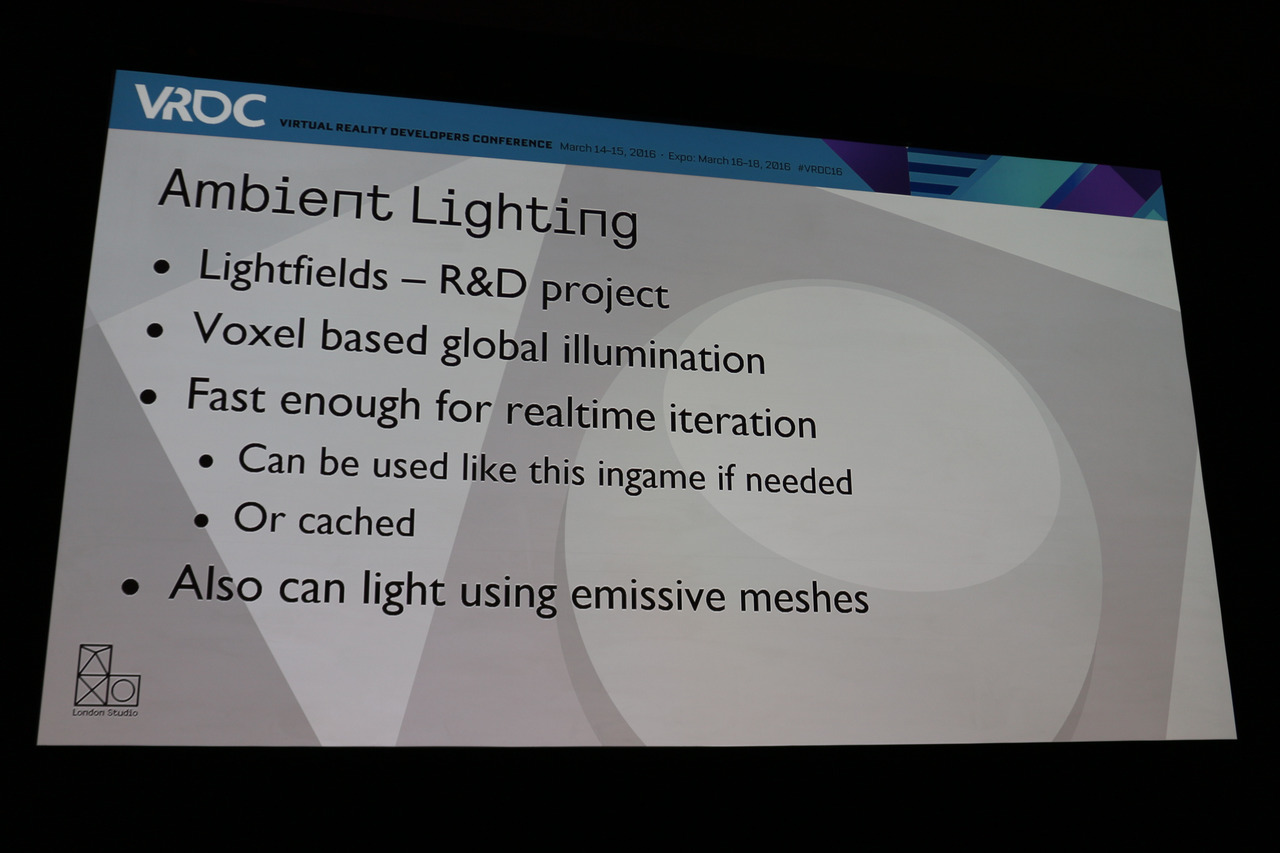

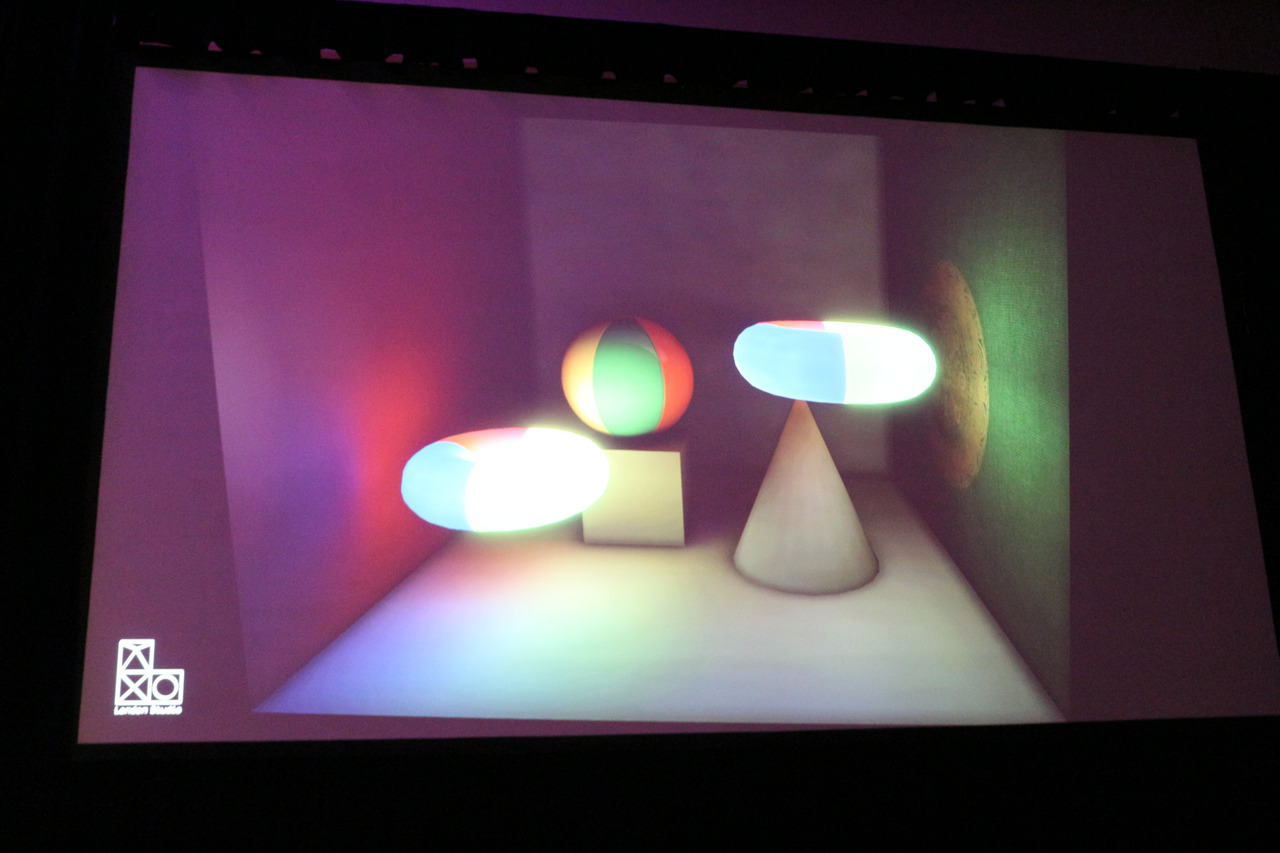

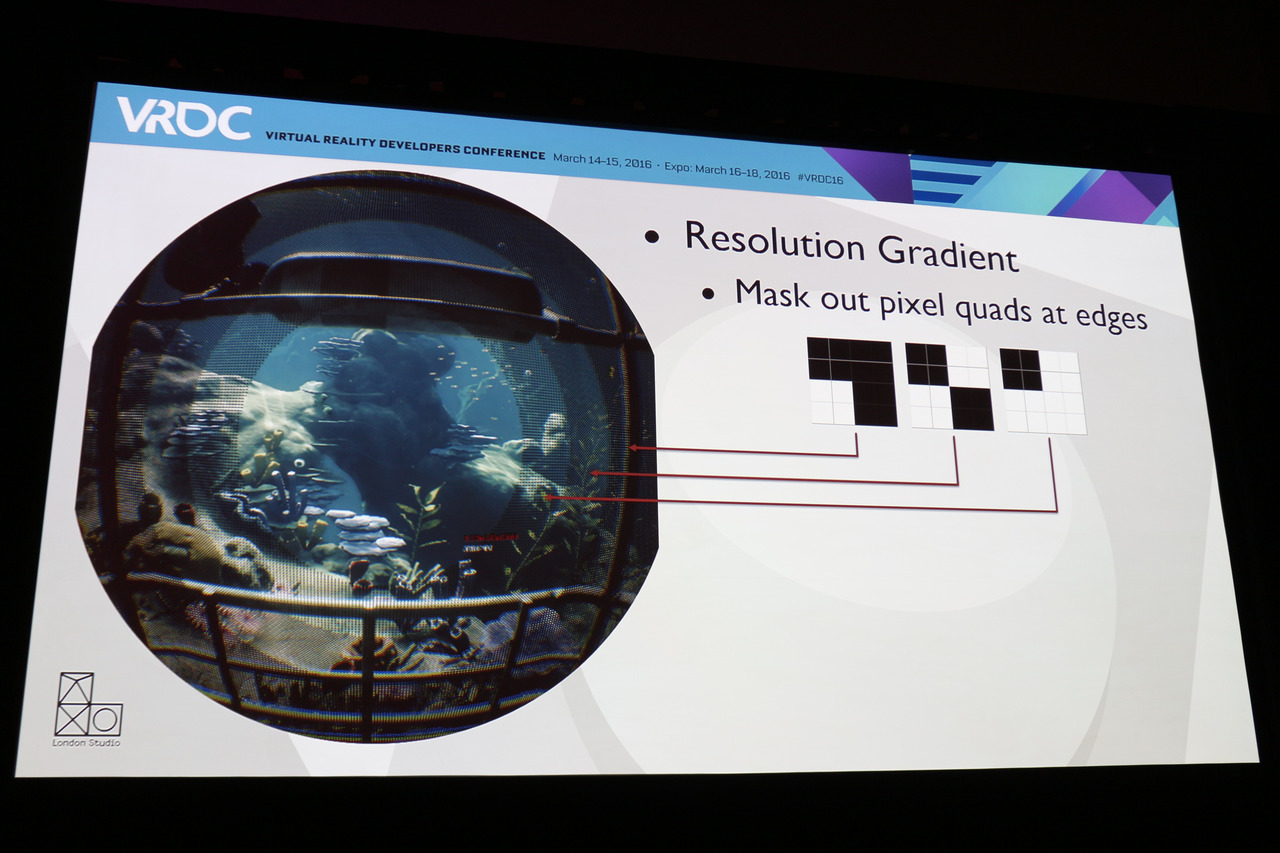

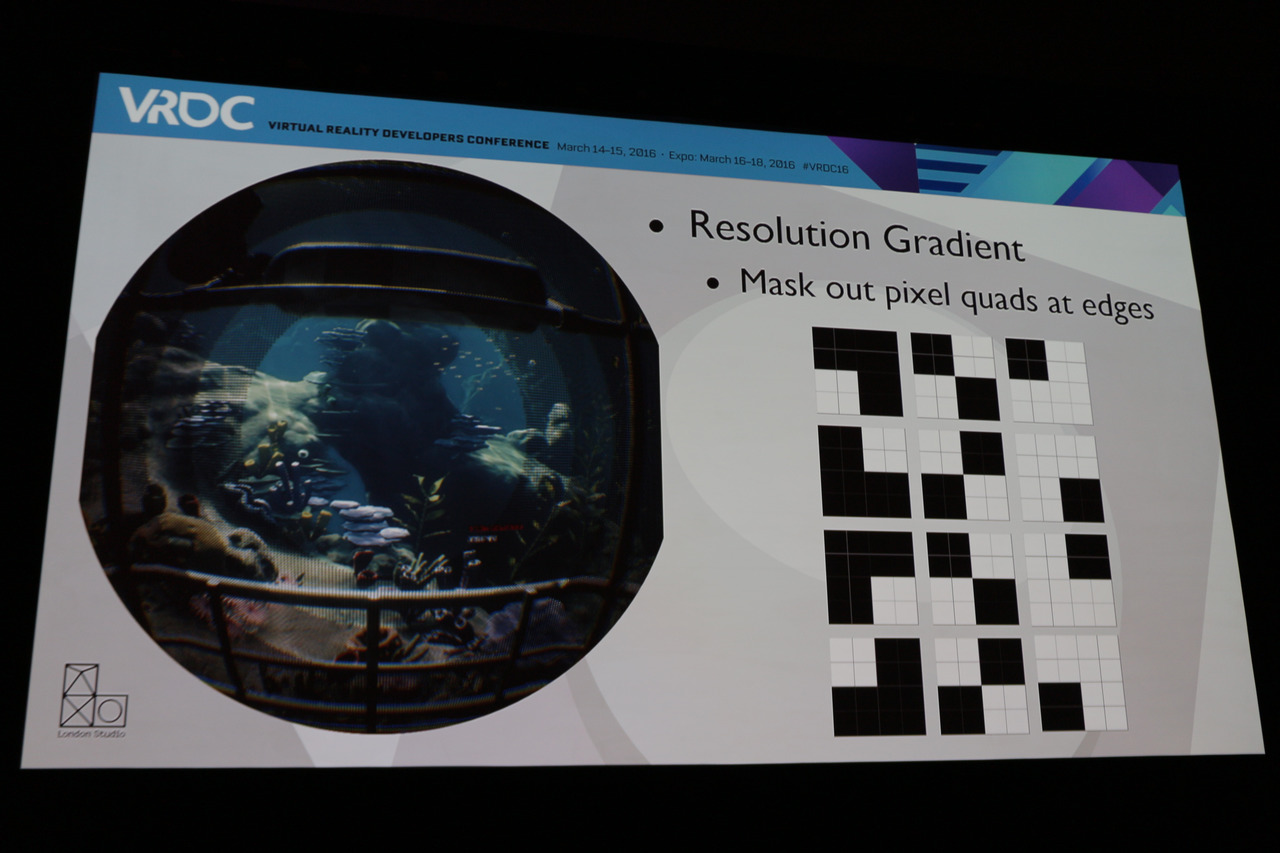

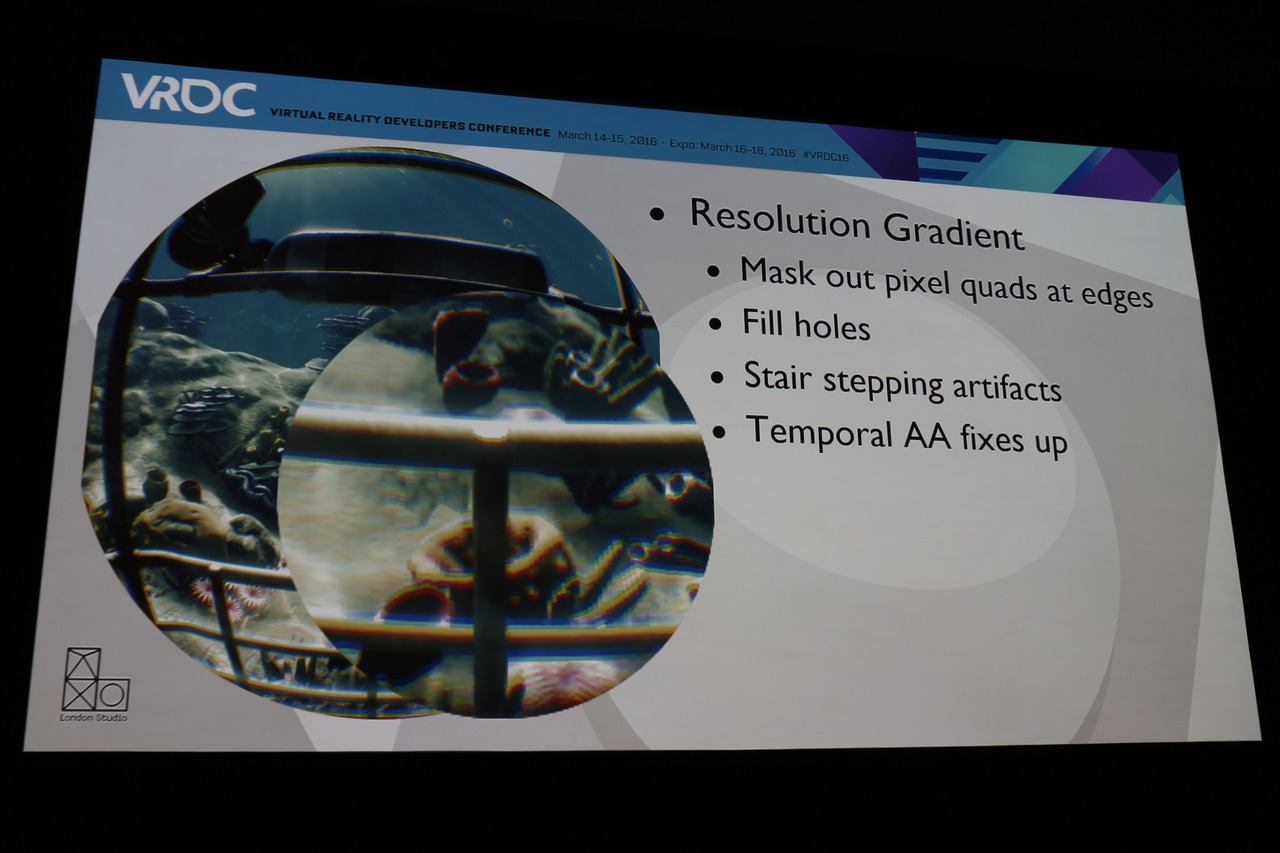

Some cool aspects: it has voxel-based global illumination, as well as a really effective Resolution Gradient technique (saving 25% of GPU power). Valve presented a similar radial density masking method at GDC last week, but Sony's technique seems much more mature: its mask pattern shifts over time, and temporal AA is used to fix up the masked pixels. Really smart stuff.
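To illustrate the general idea of a shifting radial mask (this is not LSSDK's code; the report gives no implementation details), here's a minimal Python sketch. Assumptions: pixels within a hypothetical `inner_radius` of the image centre are always shaded, the periphery skips every other 2x2 pixel block in a checkerboard whose phase flips each frame, and the temporal AA fix-up is crudely stood in for by copying the previous frame's pixel (real TAA would reproject and clamp the history). All function names are made up for illustration.

```python
import math


def resolution_gradient_mask(width, height, inner_radius, frame):
    """Hypothetical radial mask: True = shade this pixel, False = skip it.

    `inner_radius` is a fraction of the centre-to-corner distance. Inside it,
    every pixel is shaded. Outside it, 2x2 pixel blocks are skipped in a
    checkerboard whose phase flips every frame, so a block skipped on one
    frame is shaded on the next.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    max_r = math.hypot(cx, cy)
    phase = frame % 2
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            r = math.hypot(x - cx, y - cy) / max_r
            if r <= inner_radius:
                row.append(True)
            else:
                # Checkerboard over 2x2 blocks, shifted by the frame parity.
                row.append((x // 2 + y // 2 + phase) % 2 == 0)
        mask.append(row)
    return mask


def resolve_masked(current, history, mask):
    """Crude stand-in for the temporal fix-up: keep freshly shaded pixels and
    fill skipped ones from the previous resolved frame (no reprojection)."""
    return [
        [cur if shade else old for cur, old, shade in zip(c_row, h_row, m_row)]
        for c_row, h_row, m_row in zip(current, history, mask)
    ]


def shaded_fraction(mask):
    """Fraction of pixels actually shaded this frame."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


if __name__ == "__main__":
    m0 = resolution_gradient_mask(32, 32, 0.5, frame=0)
    m1 = resolution_gradient_mask(32, 32, 0.5, frame=1)
    print(f"shaded this frame: {shaded_fraction(m0):.0%}")
    # Every pixel is shaded on at least one of two consecutive frames.
    print(all(a or b for r0, r1 in zip(m0, m1) for a, b in zip(r0, r1)))
```

The 2x2 block granularity is a guess at a GPU-friendly choice (GPUs shade pixels in 2x2 quads); Sony's actual pattern and falloff may well differ.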

The presentation also confirms that Sony renders at 1.2-1.3x the screen resolution (minus masked pixels), like the other VR HMDs.
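A quick back-of-the-envelope sketch of what that multiplier costs. Assumptions: the 1.2-1.3x applies per axis (the report as summarized here doesn't say whether it's per axis or total pixel count), the panel is 960x1080 per eye (PS VR's 1920x1080 display split between two eyes), and `masked_fraction` is a hypothetical parameter for the share of pixels a resolution-gradient mask skips.

```python
def render_target_size(panel_w, panel_h, scale):
    """Render-target size when `scale` is applied to each axis (assumed)."""
    return round(panel_w * scale), round(panel_h * scale)


def shaded_pixels(panel_w, panel_h, scale, masked_fraction=0.0):
    """Pixels shaded per eye after removing a hypothetical masked fraction."""
    w, h = render_target_size(panel_w, panel_h, scale)
    return w * h * (1.0 - masked_fraction)


if __name__ == "__main__":
    PANEL_W, PANEL_H = 960, 1080  # assumed per-eye resolution
    native = PANEL_W * PANEL_H
    for scale in (1.2, 1.3):
        w, h = render_target_size(PANEL_W, PANEL_H, scale)
        print(f"{scale}x -> {w}x{h} per eye, {w * h / native:.2f}x the native pixel count")
```

Under those assumptions, 1.2x works out to 1152x1296 per eye (1.44x the panel's pixel count) and 1.3x to 1248x1404 (1.69x), which is why masking off peripheral pixels pays off.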

Voxel-based global illumination technique called 'Lightfield':

Shifting pattern for Resolution Gradient masking: