
Toy Story in real time?? WHEN?!

- Thread starter Jea Song


elrechazado

Banned

MrPliskin said: While CG movies have the benefit of extreme resolution, lighting, and texture work, I would say that these games capture the look and/or feel of Toy Story perfectly:

Ratchet and Clank Future Titles

LittleBigPlanet

Viva Pinata

Kameo

There are other games (like Gears, Uncharted, etc.) that are all phenomenal visually, but comparing the art styles, those are the only games I can toss in there, IMO.

DennisK4 - I would actually argue that graphics are at a point where they don't need significant advancement. I would gladly take a small leap next gen with a much lower price point and an easier development process.

banjo kazooie nuts and bolts

magicalsoundshower said: That may be true, but it still doesn't change the fact that Toy Story's raytracing (realistic lighting)...

Toy Story used scanline rendering only; RenderMan didn't do raytracing until Cars.

MrPliskin said: While CG movies have the benefit of extreme resolution, lighting, and texture work, I would say that these games capture the look and/or feel of Toy Story perfectly

The thing is, for right now it looks good enough. Eventually it'll be like the PS2, or last gen in general, where it all just looks the same.

Technically: when we can have realtime ray-tracing for huge amounts of polygons and lightsources at 60 fps.

Aesthetics-wise: ffs, that's subjective.

edit:

idlewild_ said: Toy Story used scan-line rendering only, RenderMan didn't do raytracing until Cars

eh, raytracing will soon be more feasible than that anyway, as polygon counts go way up

I'm impressed. Really impressed. So many blind people here.

Next gen, I hope. It is possible, just with jaggies. It'd need lots of AA to get images this sharp, but hardware makers are more interested in pumping out more polygons and effects, because most consumers care more about those.

poppabk

Cheeks Spread for Digital Only Future

Chrono said: Eh, TS doesn't look so good.

Wall-e however is an exciting goal for gaming to reach... Maybe around 2020? By then petaflop+ computing will be affordable for gaming PCs and perhaps consoles.

Cars was using roughly 2000 2.8GHz processors to render 1 frame an hour. We are not getting anywhere close within a decade.
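poppabk's figure can be turned into a rough size for the gap. The sketch below is only arithmetic on the post's own numbers, and it assumes the post means the whole farm of about 2000 processors delivers one finished frame per hour; the 24/30/60 fps targets are illustrative choices, not from the post. It ignores any algorithmic shortcuts.

```python
# Rough speed-up estimate from the figures quoted in the thread.
# Assumption: the whole farm of ~2000 processors produces ONE frame per hour.
FARM_PROCESSORS = 2000
FARM_FRAMES_PER_HOUR = 1
SECONDS_PER_HOUR = 3600


def required_speedup(target_fps: float) -> float:
    """How many times faster than the entire farm a real-time renderer would need to be."""
    target_frames_per_hour = target_fps * SECONDS_PER_HOUR
    return target_frames_per_hour / FARM_FRAMES_PER_HOUR


def processor_equivalents(target_fps: float) -> float:
    """The same gap expressed as a count of the farm's processors."""
    return required_speedup(target_fps) * FARM_PROCESSORS


for fps in (24, 30, 60):
    print(f"{fps} fps: {required_speedup(fps):,.0f}x the farm, "
          f"~{processor_equivalents(fps):,.0f} processor-equivalents")
```

Under a different reading (each processor renders its own frame in an hour) the gap shrinks by a factor of 2000, which is why the assumption is stated up front.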

Some of you guys need to seriously rewatch Toy Story. Preferably on Blu-ray, to see it in its proper glory (i.e., without any artifacting caused by lower-resolution output). Current-gen games are still nowhere near that level. Some games may fake a lot of the stuff Toy Story does quite well, but overall it's still not on a comparable level.

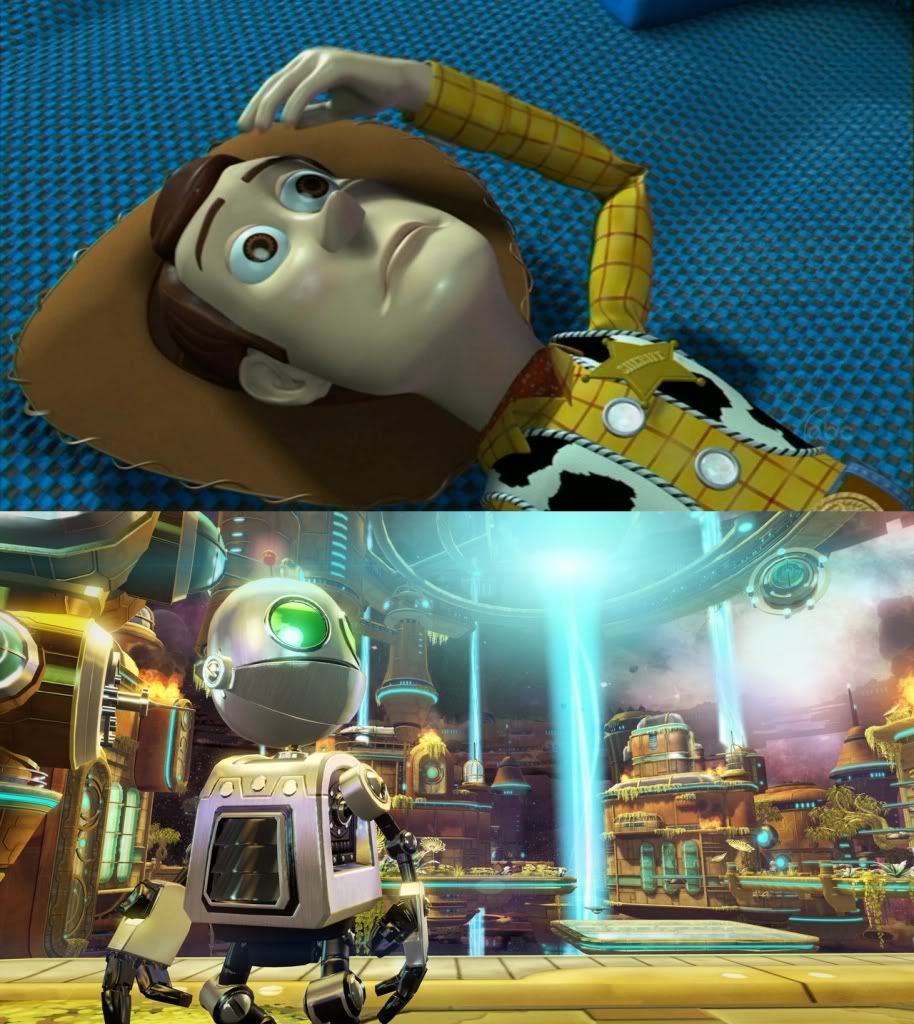

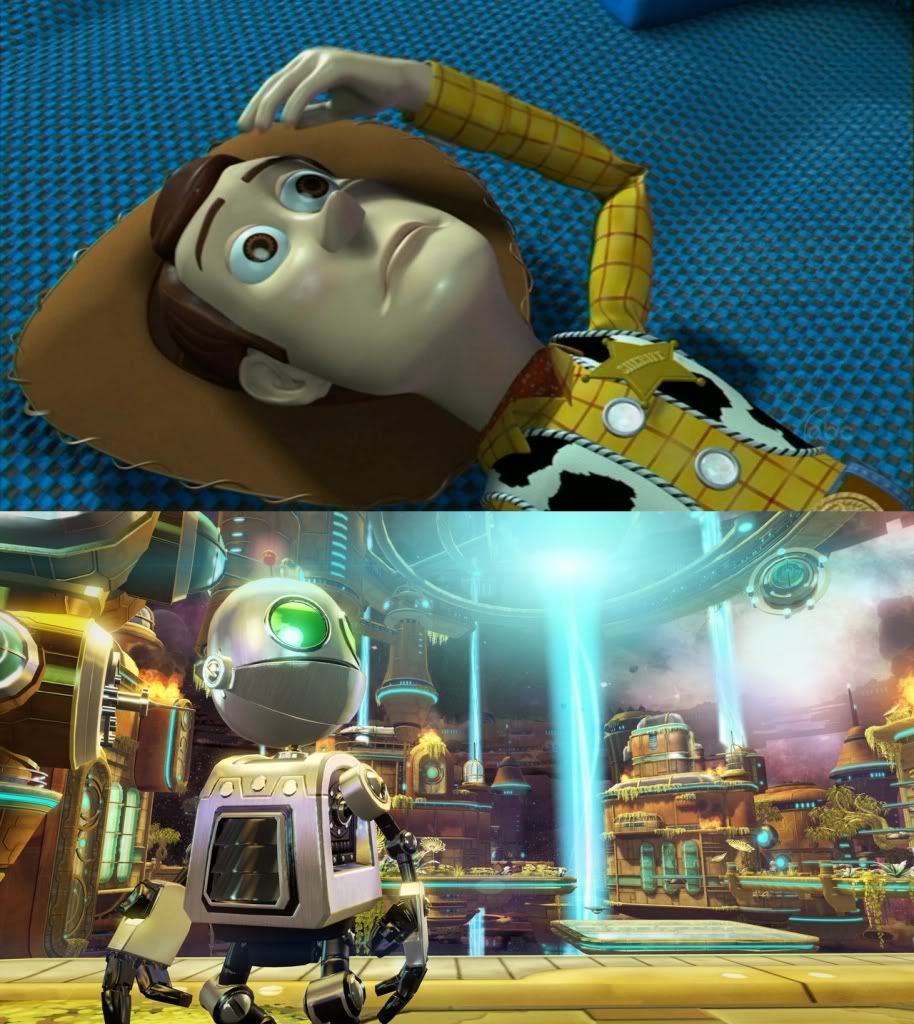

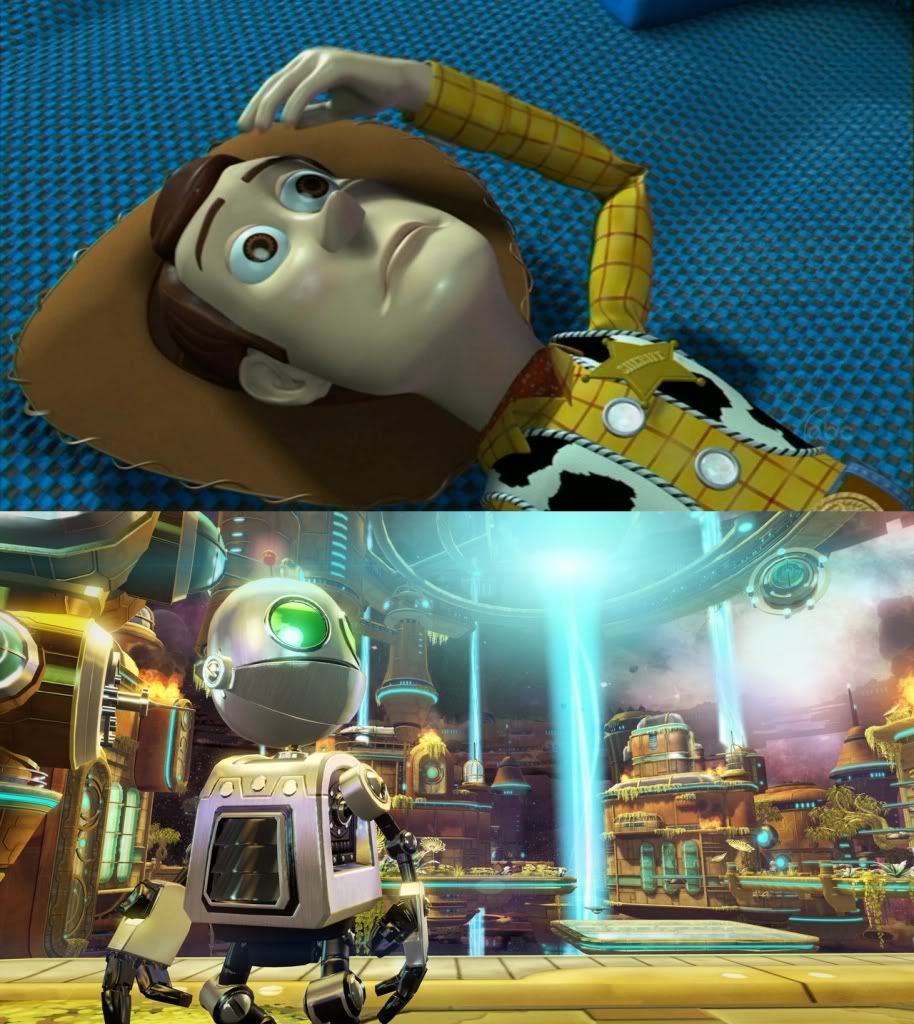

Just look at this image, for example. The shading on Woody's face is on an entirely different level compared to what games can currently show. The Clank screenshot it's being compared against doesn't even come close in that regard, despite what the people making the comparison think. Then there's the huge polycount used for Woody's face, the smooth animation (which you naturally can't see in a screenshot), and the smooth anti-aliasing, which is blown up in that R&C screen anyway. Also, look at the cloth Woody lies on. It has depth; it's a separate model. In a game it would've been a normal-mapped texture, and you would've seen the difference.

EmCeeGramr

Member

"Hey, the shiny plastic and metal surfaces in one of my video games sorta kinda look like something from a Pixar movie, if I squint a little! Toy Story graphics!"

MrJollyLivesNextDoor

Member

poppabk said: Cars was using roughly 2000 2.8GHz processors to render 1 frame an hour. We are not getting anywhere close within a decade.

And that's a highly detailed scene using ray tracing. Rasterization, as used in real-time applications, could get similar results with considerably smaller hardware requirements (although that is still way off).

I think Toy Story 1 could be achieved quite easily in real time with current tech. People are comparing the likes of Crysis and Uncharted 2, but that's very apples to oranges: those two games (especially Crysis) are rendering huge, detailed environments, while Toy Story normally features small rooms filled with fairly simple characters. The character models, textures and shaders used could easily be recreated with today's hardware. The main things to concentrate on are lighting (something ray tracing gets very accurate/realistic results in) and keeping enough overhead to ensure high resolution and loads of AA (very important in getting that 'CGI' look).
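The point about "loads of AA" can be illustrated with supersampling. This is a minimal sketch of ordered-grid supersampling for a single straight edge, not how any particular GPU or RenderMan does it; the edge y = 0.1x + 0.3 and the pixel row are made-up inputs. With one sample per pixel, each edge pixel is all-or-nothing (a jaggy); with more samples, the pixel gets a fractional coverage that smooths the edge.

```python
def inside(x: float, y: float, slope: float, offset: float) -> bool:
    """True if the point (x, y) lies below the edge y = slope * x + offset."""
    return y < slope * x + offset


def pixel_coverage(px: int, py: int, n: int, slope: float, offset: float) -> float:
    """Fraction of an n x n grid of sub-samples in pixel (px, py) that fall inside the edge.

    n = 1 is ordinary aliased rendering: a single sample at the pixel centre.
    """
    hits = 0
    for i in range(n):
        for j in range(n):
            # Sub-sample at the centre of cell (i, j) of an n x n grid inside the pixel
            sx = px + (i + 0.5) / n
            sy = py + (j + 0.5) / n
            if inside(sx, sy, slope, offset):
                hits += 1
    return hits / (n * n)


# One row of pixels crossed by a shallow edge: 1 sample vs 4x4 vs 16x16
for n in (1, 4, 16):
    row = [round(pixel_coverage(px, 0, n, 0.1, 0.3), 2) for px in range(6)]
    print(f"{n:>2} samples per axis: {row}")
```

Shading each pixel by its coverage instead of a 0/1 test is what turns the staircase into a soft gradient; the cost grows with n², which is why heavy AA eats into a real-time budget.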

nelsonroyale

Member

R0nn said: Some of you guys need to seriously rewatch Toy Story. Preferably on Blu-ray, to see it in its proper glory (i.e., without any artifacting caused by lower-resolution output). Current-gen games are still nowhere near that level. Some games may fake a lot of the stuff Toy Story does quite well, but overall it's still not on a comparable level.

See, this is a technical vs. visual impact discussion... For one, devs have probably developed a hell of a lot of tricks for rendering a scene since Toy Story was originally shown. Secondly, considering new rendering techniques, if consoles had resources similar to the machines that were used to create TS, they would be able to create something far more visually spectacular. I think we can agree, however, that for their respective platforms, games like Crysis and Uncharted 2 have far more visual impact today than Toy Story does. Even on release, TS employed a rather cutesy style that, because of its simplicity, was pretty convincing. However, the visual style is pretty low-key... When I saw the movie on release, the visuals did not impress me anywhere near as much as playing Crysis for the first time did...

MrJollyLivesNextDoor

Member

This is, IMO, the best example of a CGI-esque look in real time on PS3, and even then it's still rendering much more than it needs to:

FF7 Tech Demo

Visualante

Member

Offline rendering and realtime rendering are apples and oranges.

I'd say models are probably on par, but the notion that we should be ray tracing effects in games is pretty stupid. We've spent 15 years working out solutions to simulate it at a half-decent frame rate. Why would we want to switch on expensive ray-traced effects and set ourselves back for little to no visual improvement?

Of course, the IQ can never be matched in real time. Nor can the fact that CGI sequences can be handcrafted in every detail, while a real-time system not only has to push all those pixels but also has to react to player input, blend animations, and calculate physics and AI. That eats computation power like candy, and the player will hardly ever be in the right place at the right time with the right camera angle to get the best shot possible. We will never get the "pen falling in slow-mo" experience from Ratatouille.

But look at Crysis screenshots made with supersampling, custom lighting, and carefully composed player and enemy placement. That's quite a leap compared to in-game, and with the hardware we will have in the next few years it could run in real time; but as I said, you will hardly get the most out of it without a human making decisions.

Look at today's top console games like Uncharted and Gears 2. Now imagine you have overclocked 5870s in CrossFire, an overclocked quad-core CPU, and an obscene amount of RAM. That's hardware you can get today. Call it an XboxStation 364 Wii HD. Then take developers who are not only good at artistic choices but also have programmers who can squeeze the most out of said hardware, coding directly to the metal and using every bit of power instead of the "optimisation" an open platform like PC normally gets.

This monster might still be far away from Toy Story, simply because CGI can't be reached by real-time rendering techniques for all the reasons above, but I bet many people couldn't see the difference on a non-theatre-sized screen.

jamesinclair

Banned

I must admit, when watching it in theaters last week, I did think to myself "PS2 was supposed to do this LOL"

And yes, I said lol in my head.

Flying_Phoenix

Banned

There's a pretty wide difference between the animation quality and techniques of a full CG film like Toy Story and those of a video game. It's like comparing a 2D animated feature film, like Disney or Ghibli, to a made-for-TV cartoon. Sure, there may not be that much of a difference between the two, but what makes the former look so good is the use of techniques that require a painstaking amount of time, money, and talent. I guess what I'm trying to say is that it's much more than just raw power (though that is also an issue) that makes those films look so good.

poppabk

Cheeks Spread for Digital Only Future

MrJollyLivesNextDoor said: And that's a highly detailed scene using ray tracing. Rasterization, as used in real-time applications, could get similar results with considerably smaller hardware requirements (although that is still way off).

Well, apparently the 3D version of Toy Story was rendered at ~24 FPS, so almost fast enough for a game; assuming it is rendering each frame twice (once per eye), then we are above the 30 fps threshold. Pixar use rasterization and ray tracing depending on their needs; they aren't ray tracing everything.

So all we need is the equivalent power of 1000 (I think their render farm might be up to 3000 by now) 2.8GHz processors to get Toy Story in real time. We aren't talking about considerably smaller hardware requirements, we are talking about a factor of 1000; there is no faking or optimizing that difference away.
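The stereo argument above is simple throughput arithmetic. A sketch, taking the post's ~24 FPS at face value and assuming, as the post does, that each film frame is rendered once per eye; it says nothing about how many processors that took.

```python
def views_per_second(film_fps: float, eyes: int = 2) -> float:
    """Single-view images produced per second when every film frame is rendered once per eye."""
    return film_fps * eyes


def meets_target(film_fps: float, target_fps: float, eyes: int = 2) -> bool:
    """Would that single-view throughput reach a (hypothetical) game frame-rate target?"""
    return views_per_second(film_fps, eyes) >= target_fps


print(views_per_second(24))   # 48 single-view images per second
print(meets_target(24, 30))   # True
print(meets_target(24, 60))   # False
```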

Graphics Horse

Member

Toy Story could never be done in real time, it's a tremendous strain on the animator's wrist.

SapientWolf

Trucker Sexologist

R0nn said: Some of you guys need to seriously rewatch Toy Story. Preferably on Blu-ray, to see it in its proper glory (i.e., without any artifacting caused by lower-resolution output). Current-gen games are still nowhere near that level. Some games may fake a lot of the stuff Toy Story does quite well, but overall it's still not on a comparable level.

I don't think Toy Story was ever released on Blu-ray.

Lord Error

Insane For Sony

Xavien said: As for Uncharted 2 or Crysis, both games use tricks to get to that level of fidelity at a stable FPS, but even they don't come close to Toy Story. They don't even use ray tracing, but rasterisation, and as such the lighting suffers terribly compared to Toy Story.

Toy Story didn't use ray tracing. I think Pixar's first movie to use ray tracing was Cars. Also, to be honest, the lighting in TS doesn't look that good anymore. You have to consider that a lot of today's games' look comes from their precomputed lightmaps, which are rendered with lighting algorithms that didn't even exist back when TS was made.

R0nn said: Some of you guys need to seriously rewatch Toy Story. Preferably on Blu-ray, to see it in its proper glory (i.e., without any artifacting caused by lower-resolution output). Current-gen games are still nowhere near that level. Some games may fake a lot of the stuff Toy Story does quite well, but overall it's still not on a comparable level.

Toy Story didn't use subsurface scattering at all in its lighting, for example. Games today can use it to good effect. Approximated, yes, but much better than if it's not there at all. Compare the shading on Drake's face in UC2 with the human characters in TS, and tell me that it doesn't look much better.
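One widely used way to approximate subsurface scattering cheaply is "wrap lighting", which lets diffuse light wrap past the point where a surface turns away from the light, softening the hard terminator on skin. This sketch compares it with plain Lambert shading; it only illustrates the idea and is not a claim about what Uncharted 2 actually does. The wrap value of 0.5 is an arbitrary choice.

```python
import math


def lambert(n_dot_l: float) -> float:
    """Plain diffuse shading: zero light once the surface faces away (N.L <= 0)."""
    return max(0.0, n_dot_l)


def wrap_diffuse(n_dot_l: float, wrap: float) -> float:
    """Wrap lighting: light 'bleeds' past the terminator, loosely mimicking
    light scattered under the surface. wrap = 0 gives back Lambert."""
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


# Angle between surface normal and light direction, in degrees
for angle in (0, 60, 90, 100, 120):
    n_dot_l = math.cos(math.radians(angle))
    print(f"{angle:>3} deg  lambert={lambert(n_dot_l):.3f}  wrap={wrap_diffuse(n_dot_l, 0.5):.3f}")
```

At 90 to 120 degrees Lambert is already black while the wrapped term still fades out gradually, which is the soft falloff that makes skin read as less plasticky.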

poppabk

Cheeks Spread for Digital Only Future

skyfinch said: Forget Toy Story. I remember when Xbox 1 was being launched and one of the PR dudes said that Shrek looked like the movie. And you know what? I think it's true.

Which one is the game and which one is the movie? I don't know.

Ha, you left the DreamWorks logo in the bottom corner; totally gives it away.

skyfinch said: Forget Toy Story. I remember when Xbox 1 was being launched and one of the PR dudes said that Shrek looked like the movie. And you know what? I think it's true.

Which one is the game and which one is the movie? I don't know.

They're both too ugly to tell apart.

poppabk said: Cars was using roughly 2000 2.8GHz processors to render 1 frame an hour. We are not getting anywhere close within a decade.

There's no need for all that power - the people making Cars didn't care about how much power they had, they just used brute force. How close can developers get to Cars using the latest software techniques a decade from now, I don't know, but I don't think they'll need the same processing power. Also, a good CPU by that time will beat the crap out of those 2000 processors. I imagine a single CPU with thousands of cores all on one chip, connected with optical connections, maybe done with carbon nanotubes or graphene and not silicon - getting speeds in hundreds of gigahertz or even in the terahertz range, and using software utilizing multi-core processors like people wouldn't even dream about today.

jamesinclair said: I must admit, when watching it in theaters last week, I did think to myself "PS2 was supposed to do this LOL"

I thought George Lucas said it could..

elrechazao said: banjo kazooie nuts and bolts

this x1000

http://news.bbc.co.uk/2/hi/technology/8300310.stm

^since we're talking GPUs thought I'd link to that BBC article. Bold for the lazy:


GPUs: the next frontier in film

By Maggie Shiels

Technology reporter, BBC News, Silicon Valley

Dumbledore and fire

ILM staff spent months researching real flames to see how they moved

Technology and movie-making have always gone hand in hand but the latest breakthroughs are changing the very nature of the process.

Those in the industry say that thanks to the role of graphics processing units (GPUs), the director's vision can be more fully realised.

It also means that special effects teams are involved in the making of the movie at a far earlier stage.

In the past, their creations would be done in post-production and not be seen for weeks or even months after a movie has wrapped.

All that is changing thanks to the GPU, according to leading industry players like Richard Kerris, chief technology officer of Lucasfilm, part of Industrial Light and Magic (ILM).

The GPU is a specialised graphics processor that creates lighting effects and transforms objects every time a 3D scene is redrawn. These tasks are mathematically intensive and in the past were done using the brains of a computer known as the central processing unit or CPU.

ILM was started by Star Wars director George Lucas, and is the biggest special effects studio in the business and behind blockbusters like E.T., Star Trek, Terminator, Harry Potter, Transformers and M Night Shyamalan's soon-to-be-released fantasy The Last Airbender.

"The advent of the GPU is really the next big frontier for us. We have seen hundreds of times improvements over the last few months. This is taking Moore's Law out the window," Mr Kerris told BBC News.

"Back in the day, the simplest of special effects rendering took a lot of computing power and a 500-square-foot room back then that really wouldn't operate our phone systems today.

"But, the talent and the understanding of what could be done out of that was able to produce movies like Terminator. It was cutting-edge stuff and getting a computer that was the size of a small automobile to render out simulations of a twister was pretty groundbreaking then," said Mr Kerris.

Speed is key

At the heart of what the GPU does is speed, according to Dominick Spina, product manager for Nvidia, the company that invented the technology.

First computer generated main character

The Terminator T-1000 liquid metal shape shifter still wows audiences

"This is a big leap and the amount of data that can be crunched, analysed and documented can be done a lot faster on the GPU than the CPU. On certain simulations, we are talking a 100-200 times improvement," said Mr Spina.

With that kind of acceleration, Dr Jon Peddie, president of Jon Peddie Research says directors are no longer limited by the technology.

"A movie operates at 24 frames a second, and it takes for one of these extraordinary movies that we see anywhere from as few as 12 to as many as 24 hours for one frame.

"So now you have 24 days for a second's worth of film. By using the GPU for this rendering, you can literally reduce that to one 1,000th of what is was. So if it was say 24 hours to do the job, now you can do it in 24 seconds.

"GPU computing is on the cusp of transforming a major part of the computing industry," claimed analyst Rob Enderle, president of the Enderle Group.

"This is to supercomputers what PC's were to mainframes, and I doubt the world will ever be the same again."

'Making the impossible possible'

From a creative standpoint, studios say the technology has been a real boon.

"With this system, the creative process has been transformed from tedious to fun," said Rob Bredow, chief technology officer for Sony Pictures Imageworks, which used Nvidia's GPU to make "Cloudy With a Chance of Meatballs."

Cloudy With a chance of Meatballs

Animators created 80 different kinds of food for the movie

"When an artist can do 10 times as many iterations of an effect in the same amount of time, the quality of the end product will be that much better. Renders that would have taken 45 minutes or more to run on a CPU, are now cut down to 45 seconds," he said.

Mr Kerris from LucasFilm is in total agreement.

"In the past, a lot of times the director would say 'I want this kind of effect' and the team would go away, do their work and a year later come back with it and if it wasn't what you as a director wanted, then the whole process had to get re-instated.

"We are just at the tip of the iceberg in terms of experimentation but already we have seen the impact on some of the films we worked on this summer like Harry Potter.

"There was a final scene at the end of the film that had to do with fire and Dumbledore moving the fire around his head. When the director saw that he could actually direct the fire, he expanded that entire scene so it became a much more prominent scene in the film," explained Mr Kerris.

"GPUs are making the impossible possible in movies, bringing anything from swirling tornadoes to fiery infernos to the big screen," said Nvidia's Mr Spina.

"In the past movies dominated by crazy water, weather and fire effects were nearly impossible to make regardless of budget or timeframe; with the advent of the GPU, artists are able to make these visions a reality."

Story telling

So what does the future of this technology hold?

"In the next 6-8 months you will see some major films with some incredibly big scenes that are primarily done through GPU's. We are talking about taking it to a new level and doing it in a way where it won't take two years to do," said Mr Kerris.

Iron Man

Digitally adding Iron Man's armour later let the actor concentrate on acting [pic of Iron Man in linked article]

"Beyond that, I think GPU's will start to play a part in the home environment. You could hypothesise that a GPU could offload some stuff from the TV processor to give real-time overlays and graphics and data say with a sports game or show.

"You are going to see amazing new amusement centres, virtual reality and augmented reality. All these things will exploit the GPU on a more personal basis," said Dr Peddie.

"We take our mobile phone and with augmented reality we experience the world in a different way. The power of the GPU does that for us. We are at a tipping point," said Dr Peddie.

But at the end of the day, Mr Kerris stressed that when it comes to the movies, all the whizz-bang technology is worth nothing if the story is not up to scratch.

"George Lucas founded the different divisions of Lucasfilm not for technology's sake. In fact he is a very non-tech person. For him technology is a means to an end and it is always the story that counts," stressed Mr Kerris.

I did not know that Nvidia invented GPUs, or that Iron Man's armor was CG...
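Dr Peddie's figures in the quoted article are easy to check. At 24 frames per second and 24 hours per frame, one second of film does take 24 days. A 1,000x speed-up, though, brings a 24-hour frame down to about 86 seconds; getting it to 24 seconds would take a 3,600x speed-up. Rob Bredow's 45 minutes to 45 seconds is a 60x gain. A few lines of arithmetic, using only the numbers quoted in the article:

```python
FILM_FPS = 24          # frames per second of film, as in the article
HOURS_PER_DAY = 24


def days_per_film_second(hours_per_frame: float) -> float:
    """Render time for one second of film, in days."""
    return hours_per_frame * FILM_FPS / HOURS_PER_DAY


def seconds_after_speedup(hours: float, speedup: float) -> float:
    """A render time given in hours, divided by a speed-up factor, in seconds."""
    return hours * 3600 / speedup


print(days_per_film_second(12), days_per_film_second(24))  # 12.0 24.0 (days)
print(seconds_after_speedup(24, 1000))   # 86.4 seconds for a 1,000x speed-up
print(seconds_after_speedup(24, 3600))   # 24.0 seconds needs 3,600x
print(45 * 60 / 45)                      # 60.0: Bredow's 45 min -> 45 s
```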

Guardian Bob

Member

Pixar / Dreamworks will always stay a step ahead. Their technology improves too.

msdstc said:Really? That doesn't look that good at all. The way I try to look at things is 10 years ago what was the best looking game out? How about 10 years before that? Try and imagine 20 years down the road how much of a leap games should be able to make.

An exponential leap; that's what I'd say.

But at the end of the day, Mr Kerris stressed that when it comes to the movies, all the whizz-bang technology is worth nothing if the story is not up to scratch. "George Lucas founded the different divisions of Lucasfilm not for technology's sake. In fact he is a very non-tech person. For him technology is a means to an end and it is always the story that counts," stressed Mr Kerris.

That explains why the Star Wars prequels are sooo good

That article is interesting though, I always thought they had something like 3d storyboards where they check out a scene before rendering it in full detail. This makes it sound like this is something new.

nelsonroyale said: See, this is a technical vs. visual impact discussion... For one, devs have probably developed a hell of a lot of tricks for rendering a scene since Toy Story was originally shown. Secondly, considering new rendering techniques, if consoles had resources similar to the machines that were used to create TS, they would be able to create something far more visually spectacular. I think we can agree, however, that for their respective platforms, games like Crysis and Uncharted 2 have far more visual impact today than Toy Story does. Even on release, TS employed a rather cutesy style that, because of its simplicity, was pretty convincing. However, the visual style is pretty low-key... When I saw the movie on release, the visuals did not impress me anywhere near as much as playing Crysis for the first time did...

But anybody who has eyes can see the differences. I love uncharted 2 and it wowed me several times, but the game is not even close, even the cutscenes are generations away. The animations in toy story, the image quality, the polygon count all destroy current games. I don't even see how there's a debate here.

Jea Song said: In 1995 the world of animation was forever changed with the release of Toy Story, the first ever computer-animated feature film.

Since then I've always dreamed of playing a video game where the in-game graphics were as good as that. Almost 15 years later, have we come close? I still don't see a game that reaches this level of quality. Maybe Uncharted 2, or Crysis? But when will we finally see EVERY game look at least as good as Toy Story? Next gen? Or have we achieved this already?

What? Toy Story? (Not the upgraded version in our cinemas today.) Has been surpassed.

Uncharted

Ratchet & Clank

Viva Pinata

etc. etc.

poppabk

Cheeks Spread for Digital Only Future

Chrono said: There's no need for all that power - the people making Cars didn't care about how much power they had, they just used brute force. How close can developers get to Cars using the latest software techniques a decade from now, I don't know, but I don't think they'll need the same processing power. Also, a good CPU by that time will beat the crap out of those 2000 processors. I imagine a single CPU with thousands of cores all on one chip, connected with optical connections, maybe done with carbon nanotubes or graphene and not silicon - getting speeds in hundreds of gigahertz or even in the terahertz range, and using software utilizing multi-core processors like people wouldn't even dream about today.

I think you are applying wishful thinking to your processor projections. Processor speed increased by a factor of 1000 in the last 10 years, and there is no reason to believe that we are going to do better over the next 10 years (and most likely worse, as we hit physical/heat/power limits). So in 10 years' time a top-of-the-range computer will still be behind a current-day 'average' supercomputer.
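Taken at face value, the "factor of 1000 in the last 10 years" figure in that reply means performance roughly doubled every year. A sketch of the compounding, assuming a constant yearly rate; the 1000x-per-decade input is the poster's figure, not a measurement.

```python
import math


def annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly growth that compounds to `total_factor` over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time_years(total_factor: float, years: float) -> float:
    """Years needed to double at that same constant rate."""
    return years * math.log(2) / math.log(total_factor)


def project(start: float, total_factor: float, years: float, horizon: float) -> float:
    """Extrapolate `start` forward `horizon` years at the same constant rate."""
    return start * annual_factor(total_factor, years) ** horizon


print(f"{annual_factor(1000, 10):.3f}x per year")   # about 2x
print(f"doubling every {doubling_time_years(1000, 10):.2f} years")
print(f"another decade at that rate: {project(1, 1000, 10, 10):,.0f}x")
```

Extrapolating the same rate another decade is the optimistic assumption the reply doubts, so `project` shows the best case under that premise rather than a forecast.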

The big thing with Toy Story and its sequel, or any other Pixar film, is the image and texture quality. Getting those sorts of polygon counts, with that level of texturing and image quality, is going to take another 10 years. We can mimic it fairly well now, but matching it is a few years off.

poppabk said: I think you are applying wishful thinking to your processor projections. Processor speed increased by a factor of 1000 in the last 10 years, and there is no reason to believe that we are going to do better over the next 10 years (and most likely worse as we hit physical/heat/power limits). So in 10 years time a top of the range computer will still be behind a current day 'average' super computer.

I think I remember Kurzweil saying that by 2020 a thousand dollars will buy you computing power in the petaflop range - that's as powerful as the fastest supercomputer today.

Lord Error

Insane For Sony

msdstc said: But anybody who has eyes can see the differences.

Some technical differences, yes. However, I think the appeal of the overall look has been far surpassed, and I think any non-tech person would agree that, for example, The Last Guardian trailer, or just about anything from UC2, looks more impressive than the plasticky look of the original Toy Story. A normal person will not look at the level of antialiasing or shadow map resolutions, but at the overall effect that is accomplished. That's not to mention how much easier it is to animate toy-like caricature characters convincingly, compared to something that resembles a realistic look.

DangerStepp

Member

Orlics said: Bungie has more or less matched the facial modeling of the human characters in Toy Story

Astrolad alt account?

Warm Machine

Member

Actually, the blanket Woody is lying on is a poly cloth with a displacement shader for all the weave. Way simpler than it looks.

There are areas in Toy Story that are actually pretty raw. Inside the next-door neighbor's house, the environment modelling is pretty rough. Also, the ambient light simulation is more or less a single-color fill (which was standard for the time). There was no ambient occlusion rendering or baked ambient occlusion of any kind either. They didn't even dome-light the scenes, as that technique hadn't been pioneered yet.

A Bug's Life even has some rough spots near the start where shadows are turned off, and where they are on, they show obvious pixelation.

Even so, I have never seen a bad Pixar movie by any stretch of the imagination.
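The difference described above, a flat single-color ambient fill versus ambient occlusion, can be sketched in a few lines. This is a minimal Monte Carlo estimate for a made-up scene (a floor point at some distance from an infinitely long wall of a given height), not Pixar's or any studio's method: the flat fill gives every point the same ambient light, while AO darkens points that see less of the open sky.

```python
import math
import random


def ambient_occlusion(dist_to_wall: float, wall_height: float,
                      samples: int = 20000, seed: int = 1) -> float:
    """Monte Carlo estimate of how 'open' a floor point is (1.0 = fully open).

    Hypothetical scene: the point sits at the origin on the floor z = 0 (normal +z);
    a wall occupies the plane x = dist_to_wall for 0 <= z <= wall_height and extends
    infinitely in y. Returns the cosine-weighted fraction of directions that escape.
    """
    rng = random.Random(seed)
    unblocked = 0
    for _ in range(samples):
        # Cosine-weighted hemisphere direction: uniform disk sample projected up
        u1, u2 = rng.random(), rng.random()
        r = math.sqrt(u1)
        phi = 2.0 * math.pi * u2
        dx = r * math.cos(phi)
        dz = math.sqrt(1.0 - u1)
        if dx > 0.0:
            # Height at which the ray crosses the wall plane
            z_at_wall = dist_to_wall * dz / dx
            if z_at_wall <= wall_height:
                continue  # ray hits the wall: occluded
        unblocked += 1
    return unblocked / samples


def ambient_term(ambient: float, ao: float = 1.0) -> float:
    """Flat fill when ao == 1.0; darkened near geometry otherwise."""
    return ambient * ao


flat = ambient_term(0.3)
near = ambient_term(0.3, ambient_occlusion(0.1, 2.0))
far = ambient_term(0.3, ambient_occlusion(10.0, 2.0))
print(flat, round(near, 3), round(far, 3))
```

With a flat fill, the point tucked against the wall and the point in the open get identical ambient light; with AO the corner darkens, which is the contact shading that later films gained.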


nelsonroyale

Member

msdstc said:But anybody who has eyes can see the differences. I love uncharted 2 and it wowed me several times, but the game is not even close, even the cutscenes are generations away. The animations in toy story, the image quality, the polygon count all destroy current games. I don't even see how there's a debate here.

Look I was talking about visual impact... Toy story barely impressed me visually when it is released...as I said, the artistic design is simple and attractive...its meant to be low key..technical comparisons aside, it doesn't look that impressive to me at all today... Its cg, and its not really impressive cg anymore...crysis on ultra high renders far more visually beautiful scenes than anything I have seen in toy story, and so impresses me more from a purely visual stand point...evnethough I like the simplicity of toy stories design...

It's another ridiculous debate on here. Technical quality is objective; visual impact is entirely subjective. Regardless of the nuts and bolts, I find the totality of some games' graphics more impressive than the simplicity of Toy Story's visual design. The picture of Toy Story above really doesn't back up the point the dude was trying to make; it really doesn't look that detailed at all.

TAJ

Darkness cannot drive out darkness; only light can do that. Hate cannot drive out hate; only love can do that.

Warm Machine said: Actually, the blanket Woody is lying on is a poly cloth with a displacement shader for all the weave. Way simpler than it looks.

There are areas in Toy Story that are actually pretty raw. The inside of the next-door neighbor's house is pretty rough in terms of environment modeling. Also, the ambient light simulation is more or less a single-color fill (which was standard for the time). There was no ambient occlusion rendering or baked ambient occlusion of any kind either. They didn't even dome-light the scenes, as that technique hadn't been pioneered yet.

Yeah, it wasn't used in movies until X-Men in 2000, and then only in one scene. It was finally used extensively in Pearl Harbor in 2001.
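Warm Machine's description of the blanket ("a poly cloth with a displacement shader for all the weave") boils down to one idea: keep the base geometry simple and push each surface point along its normal by a height pattern. Below is a minimal CPU sketch of that idea on a vertex grid. The weave function (a product of two sine waves standing in for threads going over and under) and all names are hypothetical. A real RenderMan displacement shader runs on the renderer's finely diced micropolygons, not on a hand-built grid like this.

```python
import math


def weave_height(u, v, threads_per_unit=20.0, amplitude=0.01):
    """Hypothetical plain-weave bump pattern: alternating over/under threads,
    approximated by the product of two sine waves in texture space (u, v)."""
    return (amplitude
            * math.sin(2.0 * math.pi * threads_per_unit * u)
            * math.sin(2.0 * math.pi * threads_per_unit * v))


def displace(points, normals, uvs, height_fn=weave_height):
    """Core of a displacement shader: P' = P + h(u, v) * N for every point."""
    out = []
    for (px, py, pz), (nx, ny, nz), (u, v) in zip(points, normals, uvs):
        h = height_fn(u, v)
        out.append((px + h * nx, py + h * ny, pz + h * nz))
    return out


def flat_grid(n, size=1.0):
    """A flat (n+1) x (n+1) 'cloth' in the z = 0 plane, all normals +z."""
    points, normals, uvs = [], [], []
    for i in range(n + 1):
        for j in range(n + 1):
            u, v = i / n, j / n
            points.append((u * size, v * size, 0.0))
            normals.append((0.0, 0.0, 1.0))
            uvs.append((u, v))
    return points, normals, uvs


pts, nrm, uv = flat_grid(80)
bumpy = displace(pts, nrm, uv)
zs = [z for _, _, z in bumpy]
print(f"{len(bumpy)} points, z range {min(zs):+.4f} .. {max(zs):+.4f}")
```

The underlying mesh stays a flat sheet. All the apparent weave detail comes from the height function, which is why it is "way simpler than it looks".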

rohlfinator

Member

poppabk said: I think you are applying wishful thinking to your processor projections. Processor speed increased by a factor of 1000 in the last 10 years, and there is no reason to believe that we are going to do better over the next 10 years (and most likely worse as we hit physical/heat/power limits). So in 10 years' time, a top-of-the-range computer will still be behind a current-day 'average' supercomputer.

Games are rendered on the GPU, though, so this isn't really an apples-to-apples comparison.

Just as a quick estimate from a minute of Googling, one of the CPUs used to render Toy Story would have been able to compute at most roughly 100 MFLOPS, whereas the Radeon HD 5870 is rated at around 2.7 TFLOPS, which is already 27,000 times faster.

Admittedly this is probably not very accurate, but there is simply no comparison between a 1990s-era CPU and a GPU in terms of graphics rendering capabilities.
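The 27,000x figure is just the ratio of the two numbers quoted in the post. Both are rough peak ratings taken at face value: about 100 MFLOPS for one Toy Story-era render CPU and about 2.7 TFLOPS for the Radeon HD 5870. Peak FLOPS on a CPU and a GPU are not directly equivalent workloads, which is the caveat the post itself raises.

```python
CPU_FLOPS = 100e6     # post's rough upper bound for one 1990s render-farm CPU
GPU_FLOPS = 2.7e12    # post's figure for the Radeon HD 5870 (peak rating)

ratio = GPU_FLOPS / CPU_FLOPS
print(f"HD 5870 vs one render CPU: {ratio:,.0f}x")
```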

MrJollyLivesNextDoor

Member

msdstc said: But anybody who has eyes can see the differences. I love Uncharted 2 and it wowed me several times, but the game is not even close; even the cutscenes are generations away. The animations in Toy Story, the image quality, and the polygon count all destroy current games. I don't even see how there's a debate here.

I'd have to disagree here. The (prerendered) cutscenes don't really differ heavily from real time, the main differences being lighting, textures, and AA. As far as we know it's all in-engine, and I'd say it could be done with today's GPUs, no problem.

I really don't see anything in those Toy Story screens that can't be approximated pretty closely with rasterization, and it could probably even be improved in some regards. It's basically a load of high-poly objects in a box for a room, and the shaders used for the plastic of the toys just look like basic specularity effects. Today's offline rendering software can produce materials that look literally photorealistic; Toy Story looks incredibly basic in comparison.
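For a sense of what "basic specularity effects" means, here is a minimal single-channel Blinn-Phong "plastic" sketch in Python: an ambient term, a diffuse term tinted by the base color, and an untinted (white) highlight. RenderMan's classic `plastic` shader follows roughly this ambient + diffuse + specular recipe, but this is not its code. It uses a Blinn-Phong `shininess` exponent instead of that shader's `roughness` parameter, and all the default values are made up.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return v if length == 0.0 else tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def plastic(normal, to_light, to_eye, base=0.6, ka=0.1, kd=0.7, ks=0.5, shininess=40.0):
    """Single-channel ambient + diffuse + Blinn-Phong specular."""
    n, l, e = normalize(normal), normalize(to_light), normalize(to_eye)
    diffuse = max(dot(n, l), 0.0)
    # Half-vector between light and eye; highlight peaks when it lines up with n.
    half = normalize(tuple(a + b for a, b in zip(l, e)))
    spec = max(dot(n, half), 0.0) ** shininess if diffuse > 0.0 else 0.0
    # The highlight is added untinted by `base`, i.e. white, which reads as plastic.
    return ka * base + kd * base * diffuse + ks * spec


print(plastic((0, 0, 1), (0, 0, 1), (0, 0, 1)))  # light and eye head-on
print(plastic((0, 0, 1), (0, 0, 1), (1, 0, 1)))  # eye off to the side
```

Moving the eye off the reflection direction collapses the highlight quickly, which gives the small, sharp sheen typical of shiny toy plastic.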

poppabk

Cheeks Spread for Digital Only Future

rohlfinator said: Games are rendered on the GPU, though, so this isn't really an apples-to-apples comparison.

Just as a quick estimate from a minute of Googling, one of the CPUs used to render Toy Story would have been able to compute at most roughly 100 MFLOPS, whereas the Radeon HD 5870 is rated at around 2.7 TFLOPS, which is already 27,000 times faster.

Admittedly this is probably not very accurate, but there is simply no comparison between a 1990s-era CPU and a GPU in terms of graphics rendering capabilities.

And they had maybe 1000 CPUs and rendered one frame every 2-15 hours. So you are only 27 times faster than the original render farm, and so are looking at one frame every couple of minutes. To get to 30 frames per second is still a 3000-fold jump.
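The reply's arithmetic can be written out explicitly. This sketch takes the thread's figures at face value: 1000 farm CPUs, one GPU worth 27,000 such CPUs, and the farm as a whole producing one frame every 2-15 hours. It then computes the GPU's time per frame and the remaining speedup needed for 30 fps at both ends of that range. Treat the outputs as order-of-magnitude estimates. If instead each CPU spent 2-15 hours on its own frame while the others worked on different frames, the farm's throughput, and therefore these factors, would change substantially.

```python
FARM_CPUS = 1000          # thread's estimate of the render farm size
GPU_VS_ONE_CPU = 27_000   # ratio from the FLOPS comparison above
SECONDS_PER_HOUR = 3600
TARGET_FPS = 30

gpu_vs_farm = GPU_VS_ONE_CPU / FARM_CPUS  # the "27 times faster" figure


def gpu_seconds_per_frame(farm_hours_per_frame):
    """Assumes the whole farm spent this long per frame; the GPU is 27x the farm."""
    return farm_hours_per_frame * SECONDS_PER_HOUR / gpu_vs_farm


def speedup_needed(seconds_per_frame, fps=TARGET_FPS):
    """How many times faster you'd need to be to hit `fps` frames per second."""
    return seconds_per_frame * fps


for hours in (2, 15):
    s = gpu_seconds_per_frame(hours)
    print(f"{hours:>2} h/frame on the farm -> ~{s:,.0f} s/frame on the GPU "
          f"-> ~{speedup_needed(s):,.0f}x short of {TARGET_FPS} fps")
```

Under this reading, the gap to real time comes out in the thousands to tens of thousands, depending on which end of the 2-15 hour range you pick.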