bryanee

Member

Seems like a pretty minimal upgrade. Knowing the Pro wouldn't be able to push a massive resolution boost, I was hoping for HDR more than anything, so this is a bit disappointing.

But it's got a pretty big resolution boost.

Comparison screenshots: v1.30 vs. v1.50

I feel the game is already showing its age with the visuals, so it's nice to see it get this update. I'm especially curious how it's going to fare on the One X, though I don't expect much of a deviation from the Pro version.

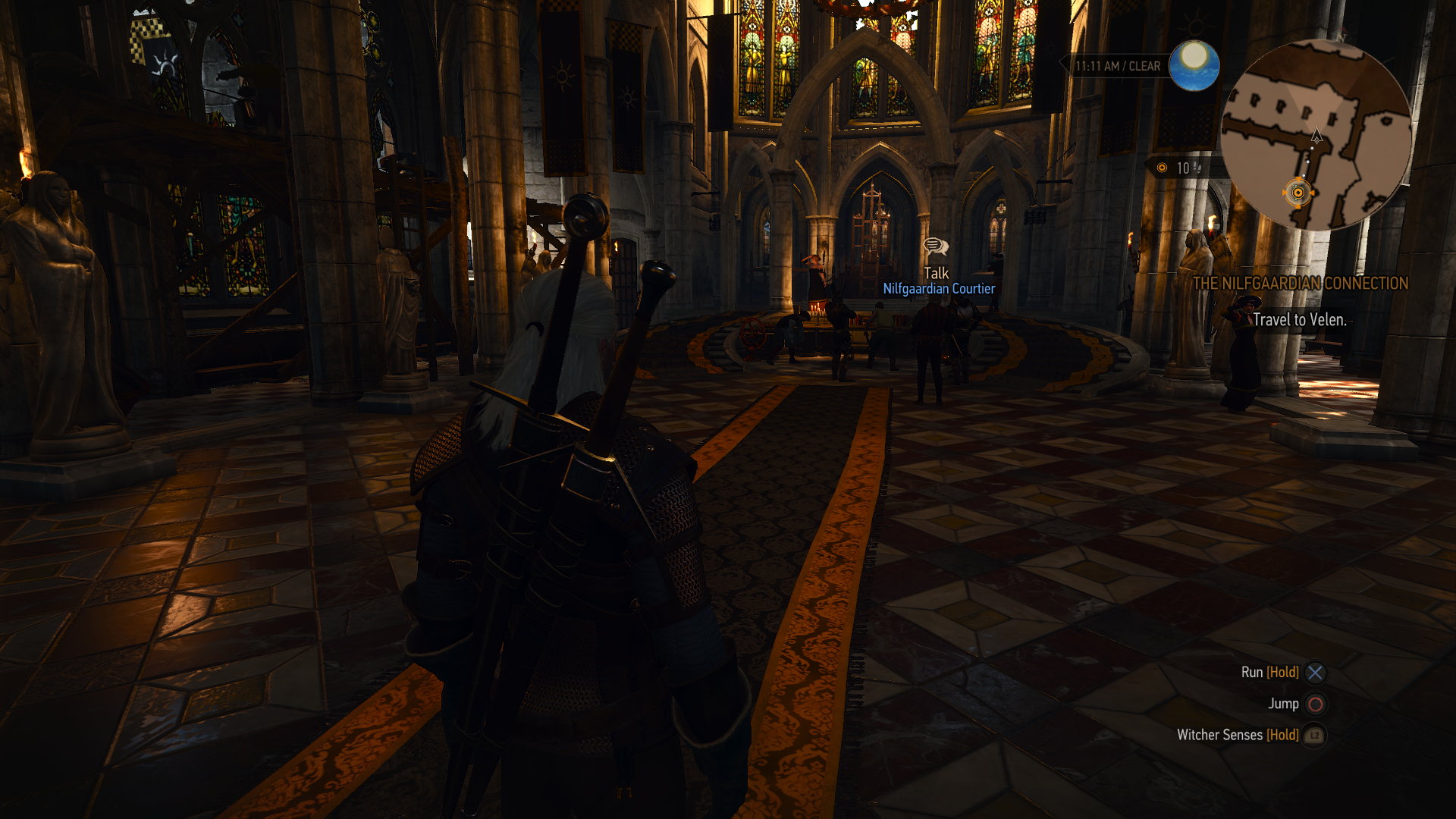

Some quick comparison shots in 4K mode:

Old https://farm5.staticflickr.com/4502/36785612364_df02ee2c50_o.png

Pro https://farm5.staticflickr.com/4463/37237317760_b06da784b5_o.png

Old https://farm5.staticflickr.com/4459/36826150473_0464005be9_o.png

Pro https://farm5.staticflickr.com/4472/37464443312_da4e49f3c0_o.png

Old https://farm5.staticflickr.com/4511/23643027678_157d9ea781_o.png

Pro https://farm5.staticflickr.com/4451/36826145503_52967883b6_o.png

Some quick comparisons with v1.30. Playing on a 1080p monitor.

Very nice, especially the last set of screens - clearly shows supersampling.

But HDR is a much more effective upgrade imo. Given the choice, I'd rather they leave the resolution and just do that.

HDR may be great, but a resolution increase benefits so many more people.

I feel like there's a difference in AO.

I think BaW does look generally better than the rest of the game, but not to an obvious extent.

Yeah I can agree with that. There are parts that look great and others not so much. Imagine if they had brought the visuals those early screenshots showed, oh my. But that was never going to happen.

Doesn't Blood and Wine still look damn good though? Never played it but the footage looked great.

So compared to PC, what graphics level is the PS4 version at? Mid range?

Maybe I'll double dip...

@HDR: does it even require any processing power?

They said they would release it when it's done. What's interesting about it?

Resolution: 1920x1080 (4K via cb or geo on Pro)

Nvidia HairWorks: Off

Number of Background Characters: Low

Shadow Quality: Medium

Terrain Quality: Medium

Water Quality: High

Grass Density: Medium

Texture Quality: Ultra

Foliage Visibility Range: High

Detail Level: Medium

Ambient Occlusion: SSAO

All post-process effects on, except vignetting

Didn't the boost mode make some of those really rough FPS areas playable? Does this patch eliminate those bonuses? Couldn't care less about the visual upgrades if they've downgraded the frames per second to achieve it. Kinda focusing on the wrong thing here.

Yes, the game has just received an upgrade patch, enabling it to take advantage of the additional power offered by the PS4 Pro. When playing the game on a PS4 Pro system, The Witcher 3: Wild Hunt and all its additional content feature support for 4K resolution and a slight boost to performance.

Texture quality on PS4 is not Ultra, as that adds a mip LOD bias that is much "sharper" than the PS4 default. It would be somewhere in between Medium and High.

High and Ultra textures are definitely the same in terms of resolution when the camera is smack-dab right in front of the texture, but the texture setting also ties in with other variables, namely mipmap bias. From that same NV guide:

v1.04 introduces TextureMipBias, a new [Rendering] setting that is tied to Texture Quality. If you're interested, the ins and outs of Mipmaps can be discovered here. For everyone else, the cliff notes: mipmaps are lower-resolution versions of textures that are utilized to increase performance and minimize VRAM use. In the case of The Witcher 3: Wild Hunt, mipmaps are aggressively used for the innumerable layers of foliage that can be seen for miles, and for just about every other texture in the game. This improves performance and keeps VRAM requirements down, but results in some lower-quality foliage and surfaces in the immediate vicinity of Geralt.

By reducing the bias, as this new setting does, higher-resolution mipmaps are loaded at near-range, and the rate at which mipmaps are scaled down across distant views is reduced, increasing visible detail considerably. Unfortunately, this also increases aliasing and shimmering on fine detail, such as the mesh shoulder pads of Geralt's starting armor, and the many thin pieces of swaying grass. As the bias is further reduced these problems intensify, though they can be somewhat mitigated by increasing the rendering resolution and by downsampling. Also, make sure to disable sharpening filters, which greatly exacerbate these issues.

Below, we demonstrate the visual impact of TextureMipBias at "0", the v1.03 level, and the level used on Low and Medium Texture Quality settings in v1.04; at "-0.4", the value used for High; at "-1.0", the value used for Ultra; and at "-2.0", our tweaked value. Focus primarily on the left side of the screen, paying particular attention to the thatched roofs, the tree and foliage near to them, and the trees before the river (hold down ctrl and press + to enlarge these sections for a better view).

So on Ultra (or even High), textures further into the distance use a lower mip level (that is, a higher-resolution mip), and thus are higher in apparent resolution. This can of course cause problems, though: extra aliasing if the pixel density is much smaller than the texel density, or if the texture has lots of specularity or is an alpha texture with holes that end up being smaller than a pixel in size.
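To make the bias values quoted above a bit more concrete, here is a rough sketch of how a mip LOD bias shifts mip selection. It assumes the textbook rule (mip level = log2 of texels per pixel, plus the bias, clamped to the available chain); it is not taken from the game's renderer, and `select_mip` and its parameters are hypothetical names. The bias values 0, -0.4, -1.0 and -2.0 are the ones from the guide.

```python
import math

def select_mip(texels_per_pixel, bias, mip_count=12):
    """Return the (fractional) mip level for a given texel footprint.

    Textbook approximation, not the game's actual code:
    level 0 is the full-resolution texture, each level halves it.
    A 2048x2048 texture has 12 levels (2048 down to 1).
    """
    lod = math.log2(max(texels_per_pixel, 1e-6)) + bias
    return min(max(lod, 0.0), mip_count - 1)

# A distant surface where 8 texels of a 2048x2048 texture land on one pixel.
for bias in (0.0, -0.4, -1.0, -2.0):
    level = select_mip(8.0, bias)
    size = 2048 / (2 ** level)
    print(f"bias {bias:+.1f}: mip level {level:.1f}, ~{size:.0f} px wide")
```

A more negative bias picks a lower mip level (a sharper texture) for the same distance, which is why both detail and shimmering go up.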

The boost mode didn't unlock the full GPU performance in games that didn't support the Pro. So it basically ran 18 GCN compute units at 911 MHz instead of the 800 MHz the original PS4 could achieve. The other half of the GPU is still deactivated for stability purposes. The CPU does run at full speed though.
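As a back-of-the-envelope check on those numbers, the sketch below estimates theoretical peak FP32 throughput. It assumes the publicly documented GCN layout of 64 shader lanes per compute unit and 2 FLOPs per lane per clock (one fused multiply-add), and that the full Pro GPU has 36 compute units (twice the 18 mentioned above). `peak_tflops` is a hypothetical helper, and real game performance does not scale linearly with these figures.

```python
def peak_tflops(compute_units, clock_mhz, lanes_per_cu=64, flops_per_lane=2):
    """Theoretical peak FP32 throughput in TFLOPS (assumed GCN figures)."""
    return compute_units * lanes_per_cu * flops_per_lane * clock_mhz * 1e6 / 1e12

base_ps4 = peak_tflops(18, 800)   # original PS4
boost = peak_tflops(18, 911)      # Pro boost mode: half the GPU, higher clock
full_pro = peak_tflops(36, 911)   # Pro with both halves enabled

print(f"PS4:        {base_ps4:.2f} TFLOPS")
print(f"Boost mode: {boost:.2f} TFLOPS ({boost / base_ps4 - 1:.0%} over PS4)")
print(f"Full Pro:   {full_pro:.2f} TFLOPS")
```

Under these assumptions boost mode only buys the clock increase over the base PS4, while a Pro-patched game can draw on roughly twice that.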

Performance on PS4 had actually been mostly resolved pre-Pro thanks to patches.

I thought High and Ultra textures were the same... From the GeForce guide:

"On Low, 1024x1024 textures are enabled, though there's also a double dose of Texture, Detail Texture and Atlas Texture downscaling, reducing VRAM usage at the expense of texture detail and clarity. Switching to Medium increases texture detail to 2048x2048, and reduces the downscaling to 1x, marginally improving clarity. On High, downscaling is dropped entirely, revealing max quality 2048x2048 textures, greatly improving image quality.

On Ultra, there are no further improvements to clarity or detail, merely an increase in the memory budget, allowing more textures to be stored in memory at any given time. Running around on foot this makes little difference, but when galloping on horseback it minimizes the chance of encountering the unsightly streaming-in of high-quality textures."

I got a little frustrated in the area with all the water. Can't remember the name. Got so tired of those swimming things.

So basically, on Medium textures are max resolution up close but use mipmaps at a distance, whereas High gets rid of mipmapping so it's max resolution all the time, and on Ultra the game stores additional texture data in order to avoid streaming transitions?

Holy shit, it got bumped to 4K!? Buying it right now.

One last pixel-count to further show that it is 2160p on the edges (thanks to Omegabalmung9 for the pic)

Source pic:

Amazing work by CDPR!

Resolution: 1920x1080 (4K via cb or geo on Pro)

Nvidia HairWorks: Off

Number of Background Characters: Low

Shadow Quality: Medium

Terrain Quality: Medium

Water Quality: High

Grass Density: Medium

Texture Quality: High

Foliage Visibility Range: High

Detail Level: Medium

Ambient Occlusion: SSAO

All post-process effects on, except vignetting

I'd like to know this as I tried the PC version at 4K with medium settings on a GTX 1060 6GB. Looked great and ran fine... in the starting area. I'm going to see what it looks like on the Pro now with this patch.

The trees in the background 😂😂😂

That looks pretty good

Seems sub 30fps in places to me now? Is it just me?

Guys, I can confirm that we are getting 4K on the edges!

Thanks to Tinúviel and his perfect screenshots, I was able to pixel count and get a definite, beautiful, 2160p pixel count!

My line is 30 pixels up

The math, for people unaware, looks like this:

Draw 30 pixels up from a pixel "staircase"

Skip a pixel up and across like I did in the photo

Continue drawing a line sideways, until you hit another staircase

Count each staircase between the sections you marked

In our case, it happens to be 30 staircases

Now divide the number of staircases you counted by the number of pixels you drew upwards at the start, so:

30/30= 1

then you take that number and multiply it by the resolution of the photo

1 x 2160= 2160
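The same arithmetic as a small sketch, for anyone who wants to plug in their own counts. `estimate_native_height` is a hypothetical helper; it assumes the edge you measure is a clean, un-anti-aliased diagonal and that the screenshot was captured at the console's output resolution.

```python
def estimate_native_height(staircases, pixels_drawn, screenshot_height):
    """Estimate native vertical resolution from a pixel count.

    staircases: number of stair steps counted along the measured edge
    pixels_drawn: length in pixels of the reference line
    screenshot_height: vertical resolution of the screenshot
    """
    if pixels_drawn <= 0:
        raise ValueError("pixels_drawn must be positive")
    ratio = staircases / pixels_drawn
    return ratio * screenshot_height

# The count from this post: 30 staircases over 30 pixels on a 2160p shot.
print(estimate_native_height(30, 30, 2160))  # 2160.0
```

If the count had come out lower, say 25 staircases over the same 30 pixels, the same formula would point to roughly 1800p instead.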

It's not just you. It does now drop more from 30fps. This didn't happen in boost mode.

It's not just me, but there are definite performance drops now where there weren't before. On boost mode it was pretty much a 30fps lock. Whilst it looks much better now, it doesn't hold its frame rate nearly as well, I find.