I'm also reading about theories that there are bottlenecks in the Xbox I/O system that don't allow it to operate at maximum speed. I came across a discussion claiming Microsoft is having issues with BCPack, which is causing bottlenecks within the system. I can definitely see them improving the software to reduce potential bottlenecks.

What I can't see them doing is matching Sony's I/O system, mostly due to the way the actual drive is designed. All the software and additional hardware they have can't change the output of that SSD. If the physical limit is 2.4 GB/s raw, it will always be that way unless Microsoft changes the physical structure of the drive itself.

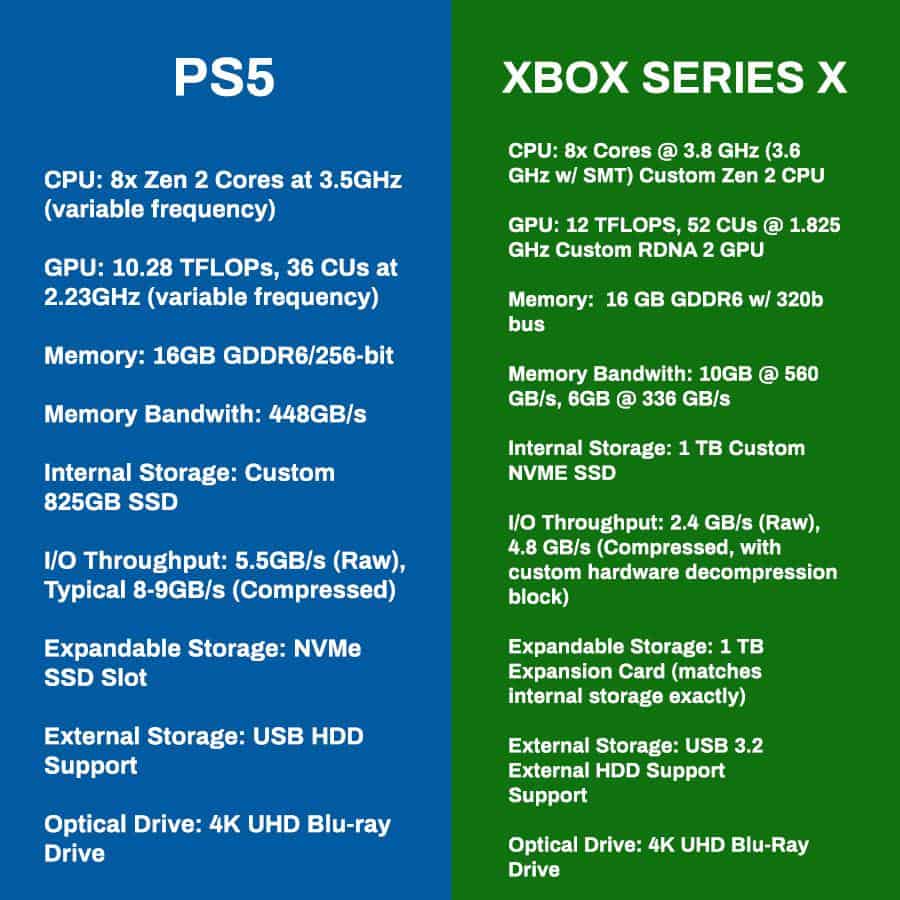

People just have to accept that the GPU delta (in favor of the XSX) and the I/O delta (in favor of the PS5) can't be eliminated at this point.

"But as you said, we'll have to wait until more official information arrives."

That's true, but I'm not confident the delta will narrow, given the physical designs of the drives.

Where are you getting these theories from, and who's discussing these things? I'd like some links myself, because I've seen people discussing these things in various places, but IMO they are usually either missing big pieces of the picture or have an agenda of their own, fudging certain points to paint a different picture than what's probably actually there.

Regarding BCPack, the only thing I've heard personally is that they are looking to push the compression ratio higher. I don't see how that suddenly means it is causing problems or bottlenecks, unless "bottlenecks" now refers to the temporary design challenges every system goes through during development (and more often than not resolves).
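
To see why a better compression ratio matters even if the raw drive speed stays fixed, here is a back-of-envelope sketch. It assumes the decompression hardware always keeps up with the drive; the 2:1 ratio matches Microsoft's published 4.8 GB/s "compressed" figure, while 2.5:1 is purely a hypothetical improvement. The function name `effective_throughput` is illustrative, not any real API.

```python
RAW_XSX_GBPS = 2.4  # raw SSD throughput quoted earlier in the thread


def effective_throughput(raw_gbps: float, compression_ratio: float) -> float:
    """GB/s of decompressed data delivered to the game.

    Assumes (hypothetically) that decompression never stalls the drive,
    so delivered data scales linearly with the compression ratio.
    """
    if compression_ratio < 1.0:
        raise ValueError("compression_ratio must be >= 1.0")
    return raw_gbps * compression_ratio


# 1.0 = uncompressed, 2.0 = Microsoft's published average, 2.5 = hypothetical
for ratio in (1.0, 2.0, 2.5):
    print(f"{ratio:.1f}:1 -> {effective_throughput(RAW_XSX_GBPS, ratio):.1f} GB/s")
```

The raw 2.4 GB/s ceiling never moves in this model; only the amount of useful data delivered per second does, which is why "pushing the compression ratio higher" reads as an improvement rather than a bottleneck.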

In some ways, it feels like some of these bottleneck concerns have only come up in light of Road to PS5 and serve as a talking point to warp part of the discussion around. I've seen seemingly knowledgeable people in YouTube comments, for example, imply that XSX's SSD won't even reach half its stated speed, but when you look at their reasoning it turns out to be complete bunk fabricated out of thin air. Their broader pattern of discussion may explain why they expect that kind of bottleneck, but none of it is based on anything MS has publicly stated.

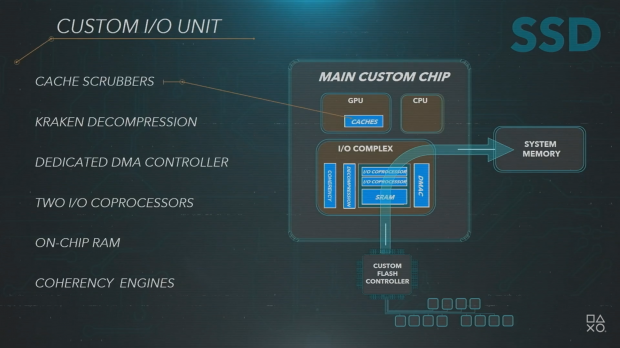

For example, some people say that since the CPU still does some of the work, that is now a bottleneck. But only 1/10th of one core is actually doing any work related to the SSD I/O stack. Not to mention, even with SMT enabled, XSX's CPU is still over 100 MHz faster than PS5's (3.66 GHz vs. up to 3.5 GHz), so it essentially cancels itself out. Plus, if they're going with a more software-tailored approach, they would want some portion of the management handled on the CPU; on other systems it could take more than 1/10th of a Zen 2 core, depending on the processor. But that also means it should be easy to deploy across a range of PC hardware.
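
A rough way to put the "cancels itself out" argument in numbers: compare the share of the whole CPU spent on I/O against the relative clock lead. This is a simplified sketch assuming 8 cores, the 1/10th-of-a-core figure above, and that throughput scales linearly with clock on otherwise identical Zen 2 cores (real workloads won't scale that cleanly). The 3.66 GHz and 3.5 GHz figures are the publicly quoted XSX (SMT on) and PS5 (peak, variable) clocks.

```python
XSX_CORES = 8            # Zen 2 cores in the XSX CPU
IO_CORE_FRACTION = 0.1   # "1/10th of one of the cores" spent on SSD I/O
XSX_SMT_GHZ = 3.66       # XSX clock with SMT enabled
PS5_MAX_GHZ = 3.5        # PS5 peak clock ("up to", variable frequency)


def io_share_of_cpu(cores: int, core_fraction: float) -> float:
    """Fraction of total CPU time spent managing SSD I/O."""
    return core_fraction / cores


def clock_advantage(a_ghz: float, b_ghz: float) -> float:
    """Relative clock lead of a over b (0.05 means 5% faster)."""
    return a_ghz / b_ghz - 1.0


io_share = io_share_of_cpu(XSX_CORES, IO_CORE_FRACTION)
lead = clock_advantage(XSX_SMT_GHZ, PS5_MAX_GHZ)
print(f"I/O overhead: {io_share:.2%} of CPU, clock lead: {lead:.1%}")
```

Under these assumptions the I/O overhead is a little over 1% of total CPU time, while the clock lead is several times larger.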

You're misunderstanding something here. I said the paper specs by themselves don't paint the full picture. That applies to the GPUs, so why is it suddenly impossible for it to apply to the SSD I/O? Because of physical structure? We don't even fully know the physical structure of either drive yet. One (PS5) has 12 channels, the other (XSX) has 4, but that could be 12x 64 GB modules on one and either 4x 256 GB modules or 4x4 64 GB modules on the other. That alone could point to a physical structure different from what some are assuming.
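
One way to see why channel and module layout matter is to divide each drive's raw throughput by its channel count. This is an idealized sketch: it assumes traffic spreads evenly across channels, uses Sony's publicly quoted 5.5 GB/s raw figure for the PS5 (not stated in this thread) and the 2.4 GB/s XSX figure above, and the module counts are just the hypothetical layouts from the post. `DriveLayout` is an illustrative name only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveLayout:
    name: str
    raw_gbps: float  # advertised raw sequential throughput, GB/s
    channels: int    # flash controller channels
    modules: int     # NAND modules (hypothetical layouts from the post)

    def per_channel_gbps(self) -> float:
        # Idealized: assumes traffic is spread evenly across all channels.
        return self.raw_gbps / self.channels

    def modules_per_channel(self) -> float:
        return self.modules / self.channels


LAYOUTS = [
    DriveLayout("PS5, 12x 64 GB", raw_gbps=5.5, channels=12, modules=12),
    DriveLayout("XSX, 4x 256 GB", raw_gbps=2.4, channels=4, modules=4),
    DriveLayout("XSX, 4x4 64 GB", raw_gbps=2.4, channels=4, modules=16),
]

for d in LAYOUTS:
    print(f"{d.name}: {d.per_channel_gbps():.3f} GB/s per channel, "
          f"{d.modules_per_channel():.0f} module(s) per channel")
```

In this simplified view, each XSX channel is not slower than a PS5 channel; the raw lead comes from spreading work across three times as many channels, and how many modules hang off each channel is exactly the kind of detail the paper specs don't tell us yet.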

Whatever software optimizations are being made would not be made if the companies didn't believe the hardware could support them. I find it a bit hard to believe MS is pushing optimizations that create bottlenecks in hardware they seem to have shown a great understanding of thus far, considering how many engineers and divisions they can draw talent and development from. That part feels a bit like 4chan drama bait, comparable to the rumors that Sony's PS5 devkits were overheating, or that Sony panicked and raised the GPU clock at the last minute. Those were pretty ridiculous rumors, true.

We'll find out more, for certain, but I'd say don't be surprised if the actual real-world performance of the SSD I/O stack on the two systems is closer than the (sparse) paper specs we know so far indicate. It won't close the gap; that's never been suggested. But it won't be a real shock if the actual performance delta is notably smaller than it currently appears based on what little is known so far. If you're only looking at the hardware side of the SSD I/O stack, you are not seeing the full picture. There are other aspects of how texture asset data is stored, prioritized, accessed and drawn that could also factor into this, but that's getting too complicated for discussion here.
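
A simple load-time model shows one way a gap can matter less in practice without the raw ratio changing. This sketch uses raw figures only (5.5 GB/s is Sony's publicly quoted PS5 number, 2.4 GB/s the XSX number above), ignores compression, and the "GB actually read" values are hypothetical. The ratio between the two drives stays the same; what shrinks is the absolute number of seconds separating them when less data has to be read.

```python
FAST_GBPS, SLOW_GBPS = 5.5, 2.4  # raw throughput, GB/s


def load_seconds(gigabytes: float, gbps: float) -> float:
    """Time to read a given amount of data at a sustained rate."""
    return gigabytes / gbps


def absolute_gap(gigabytes: float, fast_gbps: float, slow_gbps: float) -> float:
    """Extra seconds the slower drive needs for the same read."""
    return load_seconds(gigabytes, slow_gbps) - load_seconds(gigabytes, fast_gbps)


# Hypothetical amounts of data that actually need to be read for a scene.
for needed in (10.0, 4.0, 1.0):
    gap = absolute_gap(needed, FAST_GBPS, SLOW_GBPS)
    print(f"{needed:>4.1f} GB read -> slower drive takes {gap:.2f} s longer")
```

Anything that reduces how much data has to be read in the first place (how assets are stored, prioritized and accessed) shrinks that absolute difference on both machines.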

Just saw a tweet by a developer. She believes that having 100 GB instantly accessible means the system will effectively treat the XSX as having 116 GB of RAM rather than 16 GB. So you have 10 GB @ 560 GB/s, 6 GB @ 336 GB/s, and 100 GB @ SSD speed.
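
The "116 GB" idea can be pictured as three memory tiers with very different speeds. This is a hypothetical model: the tier labels are descriptive only, 560 GB/s and 336 GB/s are Microsoft's published figures for the 10 GB and 6 GB GDDR6 pools, and the SSD tier uses the raw 2.4 GB/s figure without compression or OS reservations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    capacity_gb: float
    bandwidth_gbps: float


TIERS = [
    Tier("GPU-optimal GDDR6", 10, 560),
    Tier("standard GDDR6", 6, 336),
    Tier("SSD (raw)", 100, 2.4),
]


def total_capacity(tiers: list[Tier]) -> float:
    """Sum of all tiers, i.e. the '116 GB' addressable pool."""
    return sum(t.capacity_gb for t in tiers)


def seconds_to_read(tier: Tier, gigabytes: float) -> float:
    """Time to stream a given amount of data out of one tier."""
    if gigabytes > tier.capacity_gb:
        raise ValueError("request exceeds tier capacity")
    return gigabytes / tier.bandwidth_gbps


print(f"total: {total_capacity(TIERS)} GB")
for t in TIERS:
    print(f"{t.name}: 1 GB in {seconds_to_read(t, 1.0) * 1000:.1f} ms")
```

Even in this simple model, "instantly accessible" does not mean RAM speed: reading 1 GB from the SSD tier takes more than a hundred times longer than from either GDDR6 pool.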

Interestingly, she posted this a few months ago:

It kind of gives me flashbacks to older microcomputers that mapped ROM into the memory address space. Unfortunately, NAND is not as fast as ROM, but I'm curious whether this diverges in any way from what AMD's SSG cards do.
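
As a loose analogy only (this is not a description of how the XSX or AMD's SSG cards actually work), ordinary operating systems already let a program map a file into its address space and index it like memory. Pages are pulled in from storage on demand, so the data is addressable like RAM but still arrives at storage speed. The helper `read_via_mapping` and the temporary "asset file" are made up for illustration; `mmap` itself is Python's standard-library module.

```python
import mmap
import os
import tempfile


def read_via_mapping(path: str, offset: int, length: int) -> bytes:
    """Map a file into the process address space and slice it like memory."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapped:
            # Slicing triggers page loads from storage as needed.
            return mapped[offset:offset + length]


# Build a small stand-in "asset file" so the example is self-contained.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"HEADER" + bytes(range(256)))
    asset_path = tmp.name

try:
    chunk = read_via_mapping(asset_path, 6, 4)  # skip the 6-byte header
    print(list(chunk))
finally:
    os.remove(asset_path)
```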

Gonna need to do some more research on this, curious about what they're trying to do here. I'm almost ready to leapfrog to August but wouldn't want to miss the PS5 and XSX gameplay events first xD.