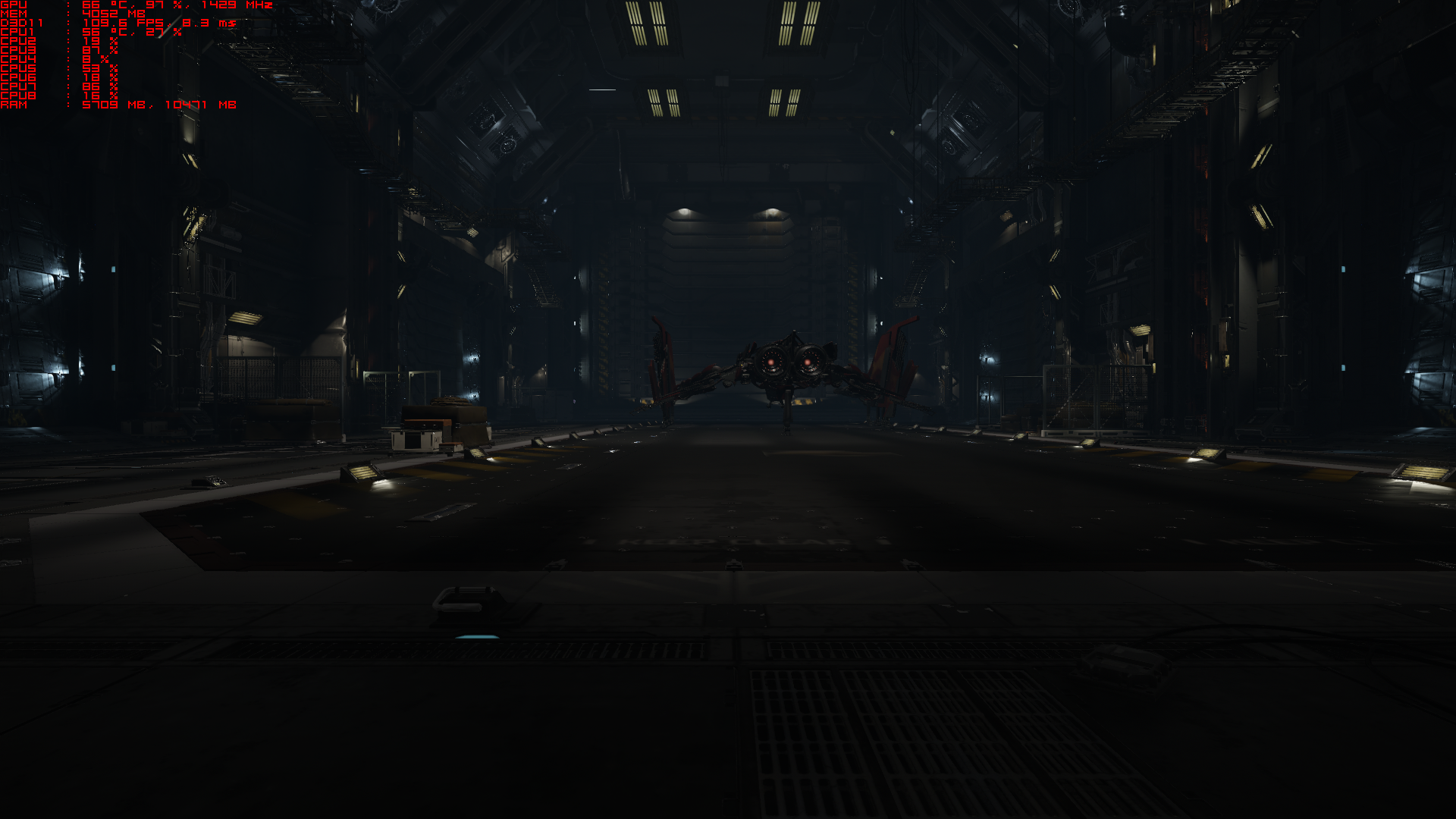

Again, you are not considering the full set of potential implications of what you are talking about. What is measured if you look at a metric such as FPS is the final, aggregate performance of a complex stack of interacting hardware and software components. This means that individual effects on performance might well be underrepresented, or not represented at all, in this number. For example, if a game is limited primarily by CPU and API overheads, or ROPs, or shader processing, or vertex setup -- and so on and so forth -- on a given platform, then changing the AF level won't have a measurable impact on aggregate performance even though it might have one on another platform where the resource bottlenecks contributing to the final measured performance are distributed differently.
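To illustrate the aggregate-bottleneck point, here is a minimal sketch. It assumes an idealized pipeline in which stages overlap perfectly, so the slowest stage alone sets the frame time. The stage names, millisecond costs and the 1.5x AF texturing multiplier are hypothetical numbers chosen for illustration, not measurements from any game or console.

```python
from typing import Dict

def frame_time_ms(stage_ms: Dict[str, float]) -> float:
    """Idealized pipeline: stages overlap perfectly, so the slowest stage sets frame time."""
    return max(stage_ms.values())

def fps(stage_ms: Dict[str, float]) -> float:
    return 1000.0 / frame_time_ms(stage_ms)

# Hypothetical per-frame stage costs in milliseconds (illustrative, not measured).
platform_a = {"cpu_api": 30.0, "shading": 20.0, "texturing": 12.0, "rops": 15.0}
platform_b = {"cpu_api": 14.0, "shading": 16.0, "texturing": 15.0, "rops": 12.0}

AF_TEXTURE_COST = 1.5  # hypothetical: high AF makes texturing 1.5x as expensive

def with_af(stage_ms: Dict[str, float], factor: float = AF_TEXTURE_COST) -> Dict[str, float]:
    """Return a copy of the stage costs with only the texturing stage scaled by factor."""
    out = dict(stage_ms)
    out["texturing"] *= factor
    return out

for name, stages in (("A", platform_a), ("B", platform_b)):
    print(f"platform {name}: {fps(stages):.1f} fps -> {fps(with_af(stages)):.1f} fps with AF")
```

On platform A the CPU/API stage dominates, so the extra texturing work is completely hidden; on platform B the same change makes texturing the new bottleneck and the frame rate drops. Real GPUs overlap work imperfectly, so actual results sit somewhere between these two extremes.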

I don't see how "missing" something can result in a complex set and distribution of distinct AF levels across a variety of surfaces.

There is no need to be patronising. I am well aware that different hardware has different bottlenecks and that they are not always obvious. Take the 7790: it is very shader-heavy and has obvious bandwidth/ROP bottlenecks, since its shader performance is similar to the 7850's. Or Fury (X), which should scale better than it does at stock settings, yet surprisingly scales very well with memory overclocking despite already offering plenty of bandwidth.

The devs in the article cite a) bandwidth and b) AF being expensive. If (a) were true, it would show up on the Kaveri system, which has far less bandwidth than both the PS4 and the Xbox One. Kaveri would also suffer the same memory contention issues that the consoles can have, because it also uses a shared memory pool. If (b) were true, it would also show up on the Kaveri system, since its GPU is massively underpowered compared with the console GPUs.
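The bandwidth gap behind point (a) can be checked with the usual peak-bandwidth arithmetic: bus width in bytes times transfer rate. This sketch assumes the Kaveri test rig ran dual-channel (128-bit) DDR3-2400, which is what a 38.4 GB/s figure corresponds to. It counts only each system's main memory pool (the Xbox One's 32 MB ESRAM is left out) and ignores the share of bandwidth the CPU takes, which all three systems have to contend with.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mts: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer x transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mts / 1000.0  # MB/s -> GB/s (decimal units)

# Assumed memory configurations (main memory pool only): (bus width in bits, MT/s).
SYSTEMS = {
    "A10-7800, dual-channel DDR3-2400 (assumed)": (128, 2400),
    "Xbox One, 256-bit DDR3-2133 (ESRAM excluded)": (256, 2133),
    "PS4, 256-bit GDDR5 at 5500 MT/s": (256, 5500),
}

for name, (width, rate) in SYSTEMS.items():
    print(f"{name}: {peak_bandwidth_gbs(width, rate):.1f} GB/s")
```

These are theoretical peaks; sustained bandwidth is lower on all three, but the ordering is what matters for the argument.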

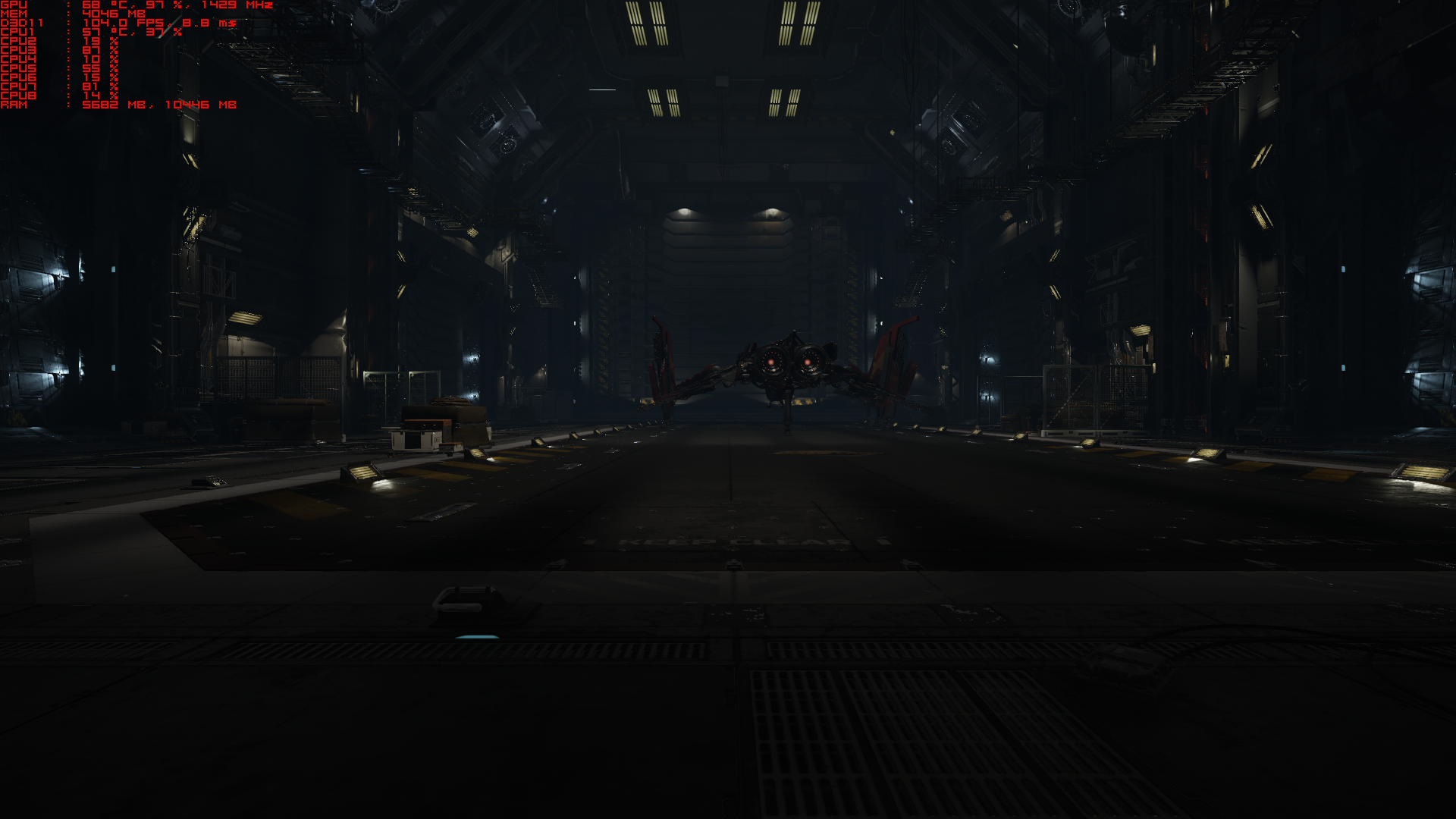

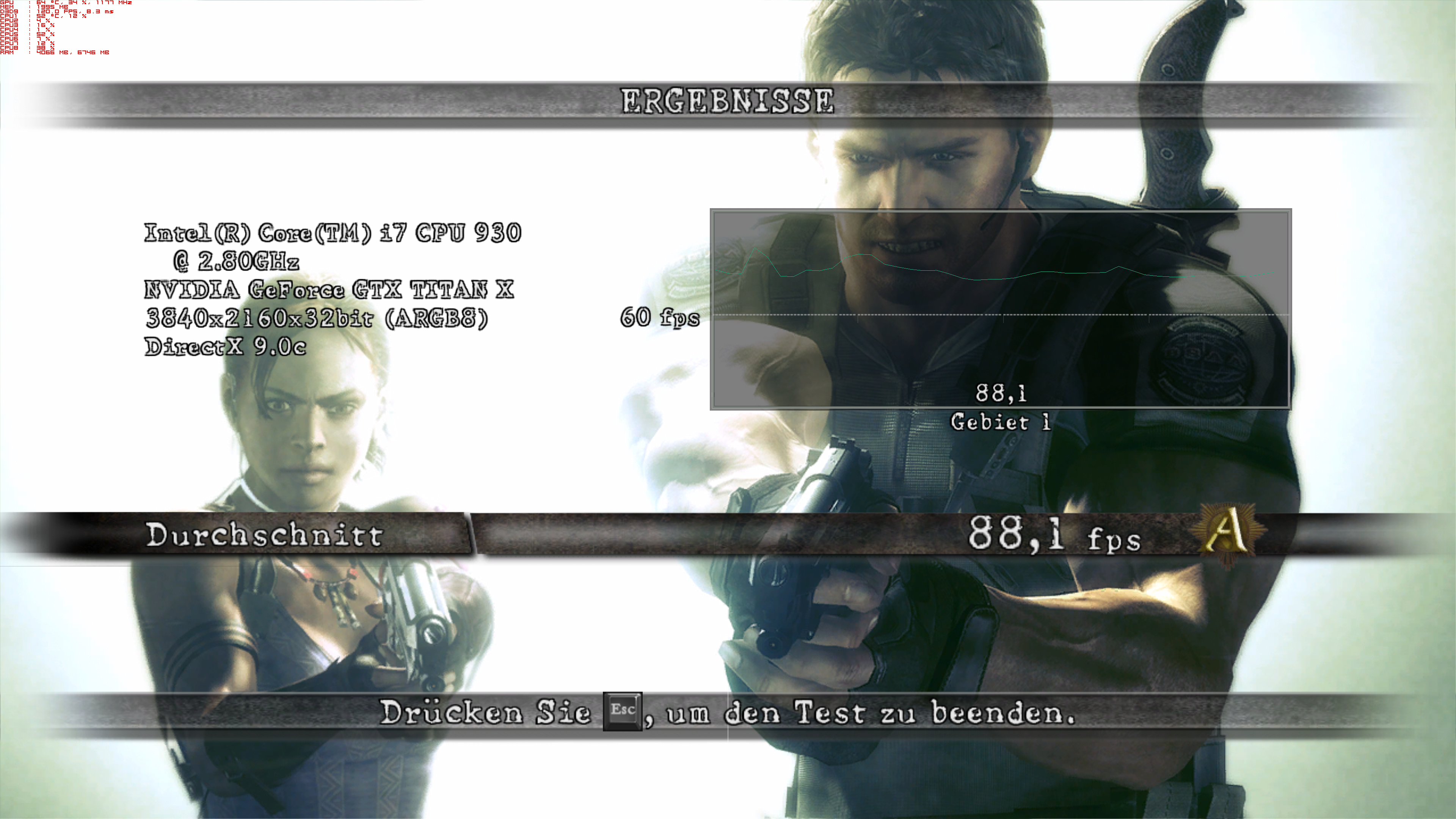

The results show that AF is not expensive (if it were, something as weak as the A10-7800 would suffer when going from 0x to 16x) and that it is not a bandwidth issue (if it were, the 38.4 GB/s of the Kaveri system would also highlight it). Is AF limited by TMU performance? If it were, you would expect drops greater than 2% across the board, considering the A10-7800 only has 32 TMUs running at 720 MHz. The Xbox One has 48 running at 853 MHz and the PS4 has 72 running at 800 MHz. Every bottleneck you look at would appear first on the Kaveri platform if it existed, yet none shows up in any of the tested games.
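The TMU comparison can be expressed as peak bilinear texel rate, TMUs times core clock. This is a simplification that assumes one bilinear sample per TMU per clock; anisotropic filtering takes several such samples per texture fetch, so on this model it multiplies the texturing workload rather than changing the peak rates themselves.

```python
# (TMU count, GPU core clock in MHz) as quoted above.
GPUS = {
    "A10-7800 (Kaveri)": (32, 720),
    "Xbox One": (48, 853),
    "PS4": (72, 800),
}

def texel_rate_gtexels(tmus: int, clock_mhz: float) -> float:
    """Peak bilinear texel rate in GTexels/s, assuming one sample per TMU per clock."""
    return tmus * clock_mhz / 1000.0

rates = {name: texel_rate_gtexels(t, c) for name, (t, c) in GPUS.items()}
baseline = rates["A10-7800 (Kaveri)"]
for name, rate in rates.items():
    print(f"{name}: {rate:.2f} GTexels/s ({rate / baseline:.2f}x Kaveri)")
```

On these numbers the Xbox One has roughly 1.8x and the PS4 2.5x the Kaveri APU's peak texel rate, which is why a TMU limit should appear on Kaveri first.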

A larger selection of games would be a lot more conclusive, so I do hope DF follow up with more games when they have time, to see whether any situations show larger drops after turning on AF.

"Missing" was the wrong word to use; it is more a case of it not being considered in any depth. This is speculation, but I think it can be explained fairly easily in two ways:

1) Low AF on both consoles - This could simply be a matter of workflows. Timelines are tight, and finding the time to change the process and then test higher AF levels could just be something that studios do not want to commit to. Moving to 8x AF as a baseline seems like it should be an easy win, and hardware-wise there looks to be little to no performance impact, so perhaps the issue is neither hardware nor software but simply a lack of time to change and test it.

2) No AF on PS4 but AF on Xbox One - As already stated, this is probably a difference in some default API setting combined with a simple oversight: the dev thinks it's enabled, QA doesn't pick it up for some reason, and the game ships with AF disabled on PS4 and enabled on Xbox One.

In light of the above, I do think I was a bit harsh in saying that it was squarely on the devs' shoulders, as I did not really consider how much time such a change could take.

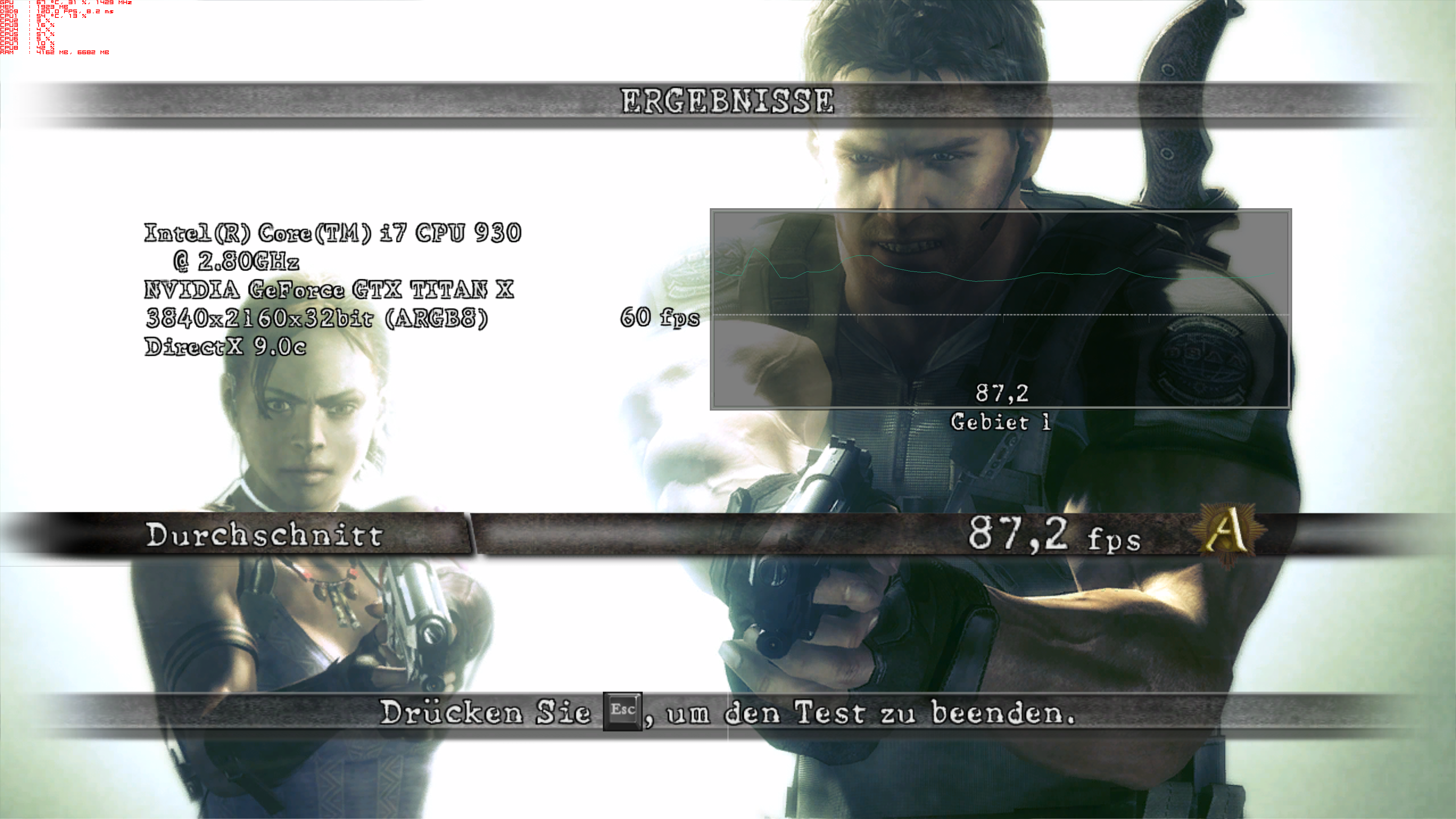

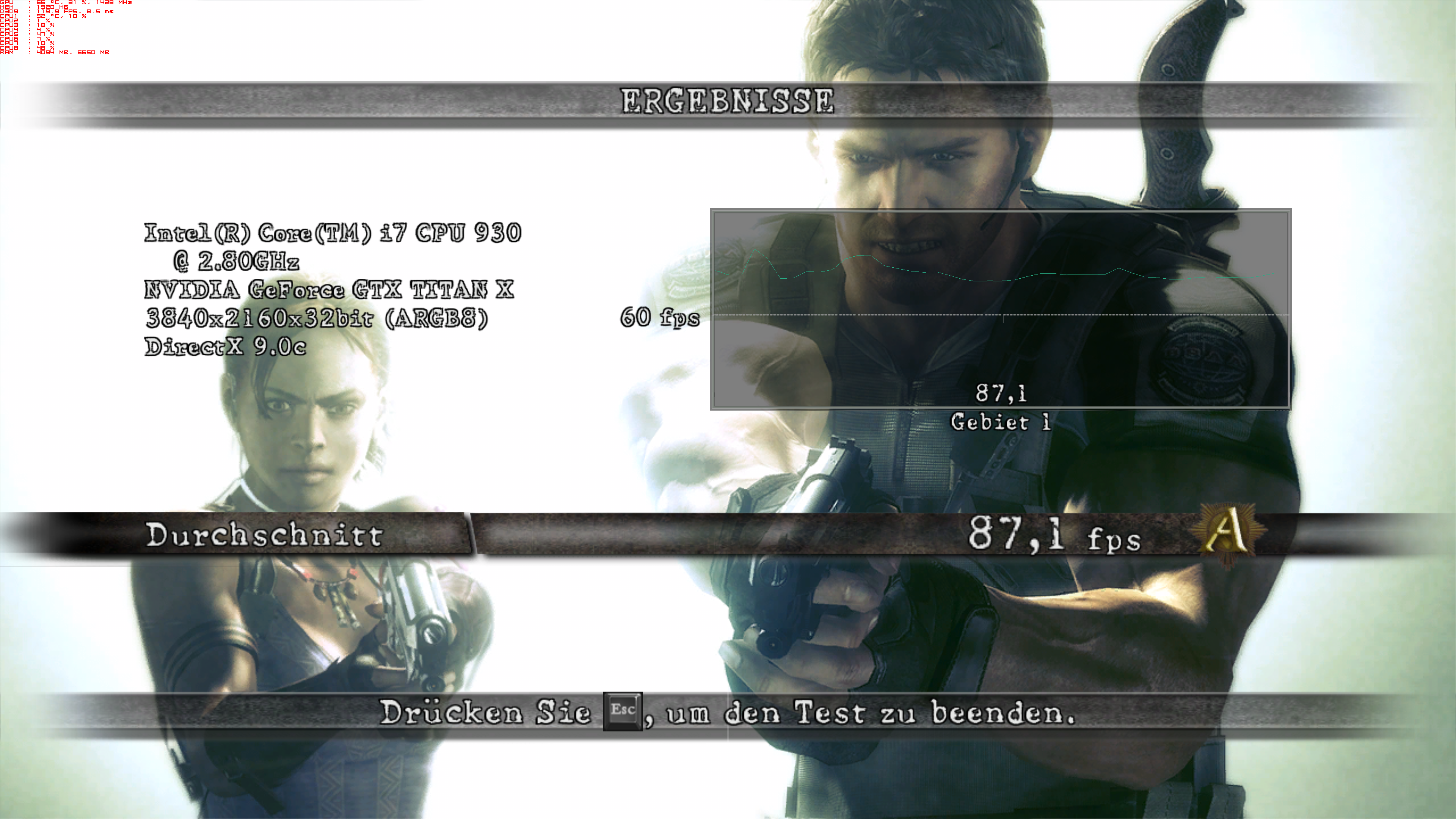

Margin of error is less than 2 %.

Error margin is not a fixed percentage; it depends on the repeatability of the test and the number of runs performed. A set benchmark is more repeatable than a gameplay test, so its error bars are smaller. Doing more repeats also narrows the error bars, because you have more data points. Generally 5% is pretty reasonable for a basic test where you do three runs, exclude the outliers (if any) and average the remaining results.
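The repeat-and-average procedure can be sketched with a Student's t confidence interval on the mean as the error margin. The 10%-from-median outlier rule and the run timings below are hypothetical, and the hard-coded t table only covers up to ten runs.

```python
import math
import statistics

# Two-sided 95% Student's t critical values, keyed by degrees of freedom (n - 1).
T95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
       6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}

def drop_outliers(runs, max_dev=0.10):
    """Hypothetical rule: discard runs more than max_dev (10%) away from the median."""
    med = statistics.median(runs)
    return [r for r in runs if abs(r - med) / med <= max_dev]

def margin_of_error_pct(runs):
    """Half-width of the 95% confidence interval of the mean, as a % of the mean."""
    kept = drop_outliers(runs)
    n = len(kept)
    if n - 1 not in T95:
        raise ValueError("need between 2 and 10 runs after outlier removal")
    mean = statistics.mean(kept)
    sem = statistics.stdev(kept) / math.sqrt(n)  # standard error of the mean
    return 100.0 * T95[n - 1] * sem / mean

# Hypothetical average-fps results from repeated runs of the same test.
three_runs = [58.0, 60.0, 61.0]
ten_runs = [58.0, 60.0, 61.0, 59.0, 60.5, 59.5, 60.0, 58.5, 61.0, 59.5]
print(f"3 runs: +/-{margin_of_error_pct(three_runs):.1f}%")
print(f"10 runs: +/-{margin_of_error_pct(ten_runs):.1f}%")
```

With a similar run-to-run spread, going from three to ten runs shrinks the margin considerably, both because the standard error falls with the square root of the run count and because the t critical value drops as degrees of freedom increase.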