This. As I've mentioned earlier, it's been a long, long time since IBM cared about the consumer market. Low power? How about practically never.

Plus, x86 is evidently more developer friendly, if Sony choosing it at the behest of developer feedback is anything to go off of.

Kind of curious: what's the best way to give Nintendo feedback?

There is no way. Even not buying their products won't get them to change, as evidenced by Wii U sales.


Wii U CPU |Espresso| Die Photo - Courtesy of Chipworks

- Thread starter Fourth Storm


blu

Wants the largest console games publisher to avoid Nintendo's platforms.

The Gekko CPU was very efficient compared to its rivals, de-cached P3s (Celerons?), but this was a long time ago; that generation, while it's been updated by Nintendo over the years, likely hasn't seen the gains that x86 has.

You do realize the 'gains' that x86 has seen today when talking about that power envelope are exhibited in the Atom lineup, right? And Atoms are not exactly the epitome of power efficiency - there's a reason ARM beats them handily.

Apple could have kept using the G3 CPU on its mobile parts; it made for stellar PowerBooks in its day, but really, there is only so far it seemed it could go on battery power, or in the lower power applications that Apple wanted to do. x86 IS more efficient if we're talking performance per watt. Apple made a good move.

Doing what? You can't throw in such a statement without backing it up with some figures and context. Apropos, Apple's driving factor for the switch was their product roadmaps. And there both Freescale and IBM had managed to make Apple look stupid a few times already, failing to launch an announced product on time. Enter Core, with which Intel had finally managed to meet Apple's performance per watt requirements, while also offering better scaling with clock compared to anything IBM/FS had on their tables at the time, and industry-leading fab tech. Oh, I almost forgot - Intel was also offering Apple some free chipsets to go along with the Cores. All that's what made Apple jump ship.

If, however, there was competition and the same investment in the low power PPC processors, it could have been another story, but clearly IBM was looking at heavy metal, server CPUs, and not investing in the low power stuff.

FS invested in low-power PPC alright. There's a chance a G2 sits somewhere in your car. Soon it will be all ARMs, of course.

cartman414

Member

You do realize the 'gains' that x86 has seen today when talking about that power envelope are exhibited in the Atom lineup, right? And Atoms are not exactly the epitome of power efficiency - there's a reason ARM beats them handily.

It depends on the power envelope of Espresso. Intel's been making some massive strides in that direction for their mainline processors which now include ultra-low power envelope, graphic integrated solutions. Atom has been steadily improving, and will more likely than not surpass ARM with Silvermont, which will go out-of-order.

lostinblue

Banned

The Gekko CPU was very efficient compared to its rivals, de-cached P3s (Celerons?), but this was a long time ago; that generation, while it's been updated by Nintendo over the years, likely hasn't seen the gains that x86 has.

Not against de-cached Pentium 3. G3 was very high performing per clock against anything; like I said, it just didn't scale up well enough, and this happened in the middle of a MHz race (one that eventually led us to the Pentium 4/NetBurst architecture and the scrapped IBM PPC GUTS architecture, later resurrected and used on current gen consoles; architectures that were utter crap but favored clocks).

Understand, CPUs don't get a major shakeup every 6 months, so an old architecture can actually still be good for what it is (which is why Intel technically salvaged Pentium 3 after Pentium 4, and recently salvaged the Pentium 1 to serve as a basis for Atom, even using it largely unchanged on Larrabee/MIC). A processor architecture, retired or not, is still part of every manufacturer's portfolio, and sometimes it just might make sense to use it again; after all, over the years it's mostly just the design methodologies and the production of more complex designs that changed.

PPC 750 is a CPU reduced to the bare minimum, meaning it'll always be high performing per silicon invested; that's why it didn't get redesigned, and that's why IBM is still offering the PPC 476FP (a similar design) as a modern high performance short pipeline solution.

What happened over the years is that CPU logic meant for asymmetric processing, as well as accelerators and floating point units, got bigger; and the G3 stands where it was, obviously. It stands there because there would be no point in changing it; at some point, if you changed the design, you'd just end up with a G4. (IBM actually tried it, because G4 was Motorola produced; but a G3 with AltiVec would be a slightly different implementation of a G4, taking most of the design decisions that went into it.)

G3 is a simple design, reduced to the essential; you can't boast that for most designs out there today, which have comparatively bigger pipelines (hence take longer to complete a cycle) but have more overhead to run more things (dispatch instructions per cycle), so that eventually evens things out. Not to mention they go to higher frequencies.

So despite G3 being high performing as fuck, it was beaten by higher clocked CPU's, even then; it doesn't matter if you have a very efficient platform if you can't possibly go past 600 MHz in 2000.

There was a MHz race for that reason; because if you can't beat them per clock you might as well go higher; and go higher they did. To realms G3 couldn't dream of at that manufacturing process; they were, of course, looking for a balance. G3 and Pentium 4 being two extremes, and the all around solution being something in the middle.

This doesn't change the fact that the thing was high performing per clock; and it still is.

Apple could have kept using the G3 CPU on its mobile parts; it made for stellar PowerBooks in its day, but really, there is only so far it seemed it could go on battery power, or in the lower power applications that Apple wanted to do. x86 IS more efficient if we're talking performance per watt. Apple made a good move.

LOL. x86 as an architecture surely isn't more efficient; it has lots of legacy inter-dependencies.

Steve Jobs' Apple (1997 onwards) always planned that move; more R&D for consumer products goes to x86, and production is higher so yields are better. MacOS X had been running internally on x86 for years, as NeXTSTEP, which served as its basis, was x86 ready.

And IBM's inability to deliver on roadmaps and deliver good yields on G5 processors probably sealed the deal, just as that same inability had earlier sealed it for Motorola/Freescale's G4 as a flagship processor.

The thing really setting x86 apart at this point is the manufacturing process (Intel is using tri-gate) and power gating; you might remember SpeedStep on the Pentium 3, that was the first step. IBM has no power gating whatsoever, which is why they have no recent embedded "from-the-ground implementation" offerings, but on the G3 they really don't need it because it's so efficient and comparatively simple; it doesn't have the structure to be an energy hog.

In the end, and in general purpose code, it's most likely in line with ULP Core 2 Duo CPUs, both in energy consumption and performance per clock. Thing is, they arrived there through different means; one kept it simple, the other power gated itself into oblivion.

G3 is one of the most effective per watt general purpose solutions out there still.

If, however, there was competition and the same investment in the low power PPC processors, it could have been another story, but clearly IBM was looking at heavy metal, server CPUs, and not investing in the low power stuff.

Although I wish they did more, especially regarding AltiVec and the FP portion of the CPU, there's nothing inherently wrong in there.

We have some benchmarks somewhere to prove it.

FS invested in low-power PPC alright. There's a chance a G2 sits somewhere in your car. Soon it will be all ARMs, of course.

I'm still hoping for a MIPS last stand.

I find world domination by ARM a boring proposition.

You do realize the 'gains' that x86 has seen today when talking about that power envelope are exhibited in the Atom lineup, right? And Atoms are not exactly the epitome of power efficiency - there's a reason ARM beats them handily.

Wrong.

The Atoms are not on the level of other x86 CPUs and Intel had not put much R&D into them for a long time. Intel seems to have now got off their arse, and the newer Atoms do put up a good fight against ARM CPUs, with around the same power usage and processing power, and doing it all with half the number of cores!

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

Wrong.

The Atoms are not on the level of other x86 CPUs and Intel had not put much R&D into them for a long time. Intel seems to have now got off their arse, and the newer Atoms do put up a good fight against ARM CPUs, with around the same power usage and processing power, and doing it all with half the number of cores!

You somehow missed a good deal of what I said, so let me emphasize, just for you. The 'other x86 CPUs' by Intel are not in the power range of G3s (don't forget we're talking comparable fab nodes here). For reference, AMD has Bobcats/Jaguars. What does Intel have? That's right - Atoms!

Given the G3 efficiency, I wonder why Nintendo doesn't upgrade to those. Would that adversely affect backwards compatibility?

They could at least add altivec to the CPUs.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

Given the G3 efficiency, I wonder why Nintendo doesn't upgrade to those. Would that adversely affect backwards compatibility?

They could at least add AltiVec to the CPUs.

Espresso belongs to the G3 lineup. Perhaps you meant G4?

It does? I thought it was pre-G3. Seems I was mistaken.

Don't G3 CPUs support Altivec, though?

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

It does? I thought it was pre-G3. Seems I was mistaken.

Don't G3 CPUs support AltiVec, though?

They don't. One of the few major differences between the G3 and G4 is that the latter supports AltiVec.

Okay, then I guess I did mean G4.

I mainly wanted to know if it would be possible to add AltiVec to Nintendo's CPU line. Even though lostinblue seems to consider this misleading (http://macspeedzone.com/archive/4.0/g4vspent3signal.html), AltiVec gives such great watt for watt performance.

I saw it stated that the newer line of Intel CPUs were using the Pentium 3 tech as a basis, so that would turn a CPU like Espresso into a tank in comparison.

lostinblue

Banned

Architecture is largely similar; there were even upgrade kits for G3 machines to use G4 CPUs.

The main difference is AltiVec and the fact that the G4 was manufactured by Motorola at all times despite IBM's involvement in development, whereas the PPC750 was an IBM endeavor (Motorola once gave it a go in 1997 and 1998).

IBM at some point planned the PPC 750VX Mojave with AltiVec, 1.8 GHz (2 GHz planned for the 750VXe revision) and a 400 MHz FSB, but eventually scrapped it due to bad timing and Apple's disinterest. They would have had to mess with the pipeline (lengthen it) for that to be possible on PPC750 in 2004 at 130-90 nm, so it probably wouldn't be code compatible with Gekko anymore; it was for all intents and purposes a G4 by IBM.

Ironically, it could technically have been Nintendo who killed its chances of getting to market, because the timing for it to be used on the Wii would have been right. 750CL clearly arrived because IBM was producing them for Nintendo anyway; had it been a shift towards 750VX, it would probably have gotten to market as well.

It has been speculated that they might have lifted the SMP support from it for Espresso, but clearly not the AltiVec nor the rest of the design. Nintendo probably didn't care for it because the code compatibility would go out the window and they didn't care much about the increased SIMD/FPU performance; it's clearly evident Nintendo designed the machine as a low spec GPGPU machine, so they expect FPU needs to be satisfied via that.

Even though lostinblue seems to consider this misleading (http://macspeedzone.com/archive/4.0/g4vspent3signal.html), AltiVec gives such great watt for watt performance.

It's only "misleading" because it's only testing AltiVec's strengths. In real world scenarios it's more tame, because code isn't usually SIMD/FPU centric, and DMIPS-wise they're very similar. Note: they also link to those benchmarks in the article.

Also bear in mind Gekko and Broadway were designed to address this somewhat, with the inclusion of paired singles and custom SIMD instructions built in (in order to improve FP performance); in a way it was something akin to an AltiVec lite implementation.

Of course, though, G4 absolutely destroys PPC750 (including Gekko and Broadway) in that area because it's 4-way; it also destroyed Pentium 3 for the very same reasons. (It had problems scaling up just like G3 had experienced before, though; Motorola had huge problems and fell out of favor with Apple due to their inability to deliver within the established timelines; in turn, the aforementioned PPC 750VX was created due to that.)

The flagship 500 MHz G4 part, originally promised for launch in August 1999, ultimately only shipped in February 2000. Pentium 3 had launched in February 1999 with speeds up to 600 MHz, so for Apple, forced to downgrade their flagship machines to 450 MHz, it looked a lot like struggling to get a 50 MHz increase (never mind 600 MHz) in a market whose marketing revolved around MHz (in the middle of the GHz race, no less), while Intel was experiencing no problems with their architecture.

By the time the 500 MHz G4 part appeared, Pentium 3 had reached 800 MHz (and the 866 MHz model launched within one month), so the clock rate difference alone also closed the distance a little.
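To put rough numbers on the "4-way" point, here is a minimal sketch of theoretical peak single-precision throughput. It assumes one multiply-add per SIMD lane per cycle (2 FLOPs per lane) and compares both units at the same 500 MHz clock purely for illustration; the function name and the equal-clock setup are my own assumptions, not measured figures from the thread.

```python
def peak_gflops(lanes: int, clock_mhz: float, flops_per_lane: int = 2) -> float:
    """Theoretical peak GFLOPS for one SIMD unit issuing one op per cycle.

    flops_per_lane=2 assumes a multiply-add counts as two operations.
    """
    return lanes * flops_per_lane * clock_mhz / 1000.0


# Paired singles operate on 2 floats at a time, AltiVec on 4 (128-bit vectors).
paired_singles = peak_gflops(lanes=2, clock_mhz=500)
altivec = peak_gflops(lanes=4, clock_mhz=500)

print(f"paired singles: {paired_singles:.1f} GFLOPS peak")
print(f"AltiVec:        {altivec:.1f} GFLOPS peak")
print(f"ratio:          {altivec / paired_singles:.1f}x")
```

This only captures the width difference; real code rarely sustains peak, which is the same caveat as the benchmark discussion above.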

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

Okay, then I guess I did mean G4.

I mainly wanted to know if it would be possible to add AltiVec to Nintendo's CPU line. Even though lostinblue seems to consider this misleading (http://macspeedzone.com/archive/4.0/g4vspent3signal.html), AltiVec gives such great watt for watt performance.

It is a bit misleading because AltiVec should not be compared to MMX but to SSE, which got introduced by P3 as the 'KNI instruction set' (the memories..). Even by that comparison, though, AltiVec used to make SSE look silly. Key point: used to. We're at SSE 4.2 today, and more importantly, Intel have introduced an actually non-moronic SIMD ISA - AVX (and recently AVX2), while AltiVec has remained more or less the same. So things are not so clear cut anymore.

I saw it stated that the newer line of Intel CPUs were using the Pentium 3 tech as a basis, so that would turn a CPU like Espresso into a tank in comparison.

You got the wrong impression there. Before Espresso could even consider playing in the major league it would need a major-league fab node. Also, there's no point in comparing Espresso (a very clear-cut G3 specimen, be that the best of the lineup) to something which originated as P3 but saw several major design iterations (starting with the Core architecture) and a myriad of fab node transitions. If you want to consider G3's successor G4, then that could make more sense, but again, Espresso is a G3, not a G4. By today's standard, Espresso is a netbook design. It can play against the Bobcats and Atoms of today, but not in the major league. Clarification: I'm talking of single-thread performance here.

prag16

Banned

Asked this in the GPU thread, but the question is getting buried underneath constant off topic bickering, so I'll put it here too since it also concerns the CPU...

This was a long time ago at this point, and maybe it was fully validated and explained beyond any possible doubt many times, but are we 100% cock sure on those reported clock speeds? Didn't Marcan glean those while the system was running in Wii mode (I remember he had to hack around a bit to even unlock the other two CPU cores in that mode or something?)?

Did anyone ever corroborate this independently, or was marcan the only one? Again, sorry if this was 100% irrefutably explained before, but I've been in and out on this thread from way back and haven't seen 100% of the content.

cartman414

Member

One other issue lies in whether PPC would be able to be used for the generation following this one without issue, now that the other two have already moved on, one of them at the behest of 3rd parties. I just hope Nintendo won't remain too insular to care.


I fail to understand where all this condemnation directed towards the PPC architecture is coming from. It's generally better watt for watt than x86.

The only problem would come from lazy or cheap devs that don't want to take the time to learn the differences in the hardware and program code to its strengths, as opposed to just porting the code and truncating what won't fit on the first try.

I would have no problem with Nintendo sticking with the PPC. There is also the option to go with the modern PowerPC architecture. It would be nice if the next console had a Power8 in it.

http://en.wikipedia.org/wiki/POWER8

That thing destroys any Intel/AMD CPU currently on the market (as Power CPUs always do upon release).

Maybe they could make a hybrid with it.

What exactly are the advantages of the Power7 cache inside Espresso? I don't think that has fully been explored.

You know, I just noticed something while reading the Power7 doc. It says that the Power7 has eDRAM on it. Doesn't Espresso supposedly interface with the eDRAM as well?

4 MB L3 cache per C1 core with maximum up to 32MB supported. The cache is implemented in eDRAM, which does not require as many transistors per cell as a standard SRAM so it allows for a larger cache while using the same area as SRAM.

http://en.wikipedia.org/wiki/POWER7

Would also be nice to see Nintendo go with DDR4 in their next console as well. According to Wikipedia it gets higher performance at a lower voltage, so that would fit right in with Nintendo's focus on energy consumption and efficiency.

lightchris

Member

I fail to understand where all this condemnation directed towards the PPC architecture is coming from. It's generally better watt for watt than x86.

Not necessarily, as it depends on the specific architecture.

Power 7 was not more energy efficient than Intel's Westmere for example:

"The Power 7 CPUs are in the 100 to 170W TDP range, while the Xeon E7s are in the 95 to 130W TDP range. A quad 3.3GHz Power 755 with (256GB RAM) server consumes 1650W according to IBM (slide 24), while our first measurements show that our 2.4GHz E7-4870 server will consume about 1300W in those circumstances.

Considering that the 3.3GHz Power 7 and 2.4GHz E7-4870 perform at the same level, we'll go out on a limb and assume that the new Xeon wins in the performance/watt race."

(source)
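For what it's worth, the quoted numbers work out as follows. This is a minimal sketch that takes the quote's own premise at face value (both servers perform at the same level, so relative performance is set to 1.0 for each); the variable and function names are just illustrative.

```python
# Wall power figures as given in the quote above.
POWER_755_WATTS = 1650  # quad 3.3GHz Power 755 server
XEON_E7_WATTS = 1300    # 2.4GHz E7-4870 server


def perf_per_watt(relative_perf: float, watts: float) -> float:
    """Relative performance divided by power draw."""
    return relative_perf / watts


# Assumption from the quote: both machines perform at the same level.
power7_eff = perf_per_watt(1.0, POWER_755_WATTS)
xeon_eff = perf_per_watt(1.0, XEON_E7_WATTS)

xeon_advantage = xeon_eff / power7_eff - 1.0
print(f"Xeon perf/watt advantage: {xeon_advantage:.1%}")
```

So under that equal-performance assumption the Xeon box comes out roughly 27% ahead in performance per watt at the system level, which says nothing about a low power part like Espresso.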

I would have no problem with Nintendo sticking with the PPC. There is also the option to go with the modern PowerPC architecture. It would be nice if the next console had a Power8 in it.

http://en.wikipedia.org/wiki/POWER8

That thing destroys any Intel/AMD CPU currently on the market (as Power CPUs always do upon release).

As with Power7, Power8 is designed as a server CPU and I'm not sure if it would be a good fit for a game console. Another problem is that these CPUs are very expensive. And whether it really will "destroy" Intel's CPUs remains to be seen.

What exactly are the advantages of the Power7 cache inside Espresso? I don't think that has fully been explored.

You know, I just noticed something while reading the Power7 doc. It says that the Power7 has eDRAM on it. Doesn't Espresso supposedly interface with the eDRAM as well?

You quoted it yourself, the eDRAM on Power7 is the L3 cache. The advantage is that it needs fewer transistors compared to traditional SRAM, so that you can fit more cache in the same space and thus save money. On the other hand it should have slightly higher latencies. A bit of explanation can be found here.
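A quick back-of-the-envelope sketch of the transistor argument. It assumes the textbook cell designs (a 6-transistor SRAM cell versus a 1-transistor, 1-capacitor eDRAM cell) and counts only the storage cells; those per-bit figures are my assumption rather than anything from the Power7 documentation, and the real area saving is smaller once the capacitor and peripheral circuitry are included.

```python
SRAM_TRANSISTORS_PER_BIT = 6   # assumption: classic 6T SRAM cell
EDRAM_TRANSISTORS_PER_BIT = 1  # assumption: 1T1C cell, capacitor not counted


def cell_transistors(cache_bytes: int, transistors_per_bit: int) -> int:
    """Transistors needed for the storage cells alone."""
    return cache_bytes * 8 * transistors_per_bit


l3_bytes = 4 * 1024 * 1024  # 4 MB L3 per core, as in the Power7 description

sram = cell_transistors(l3_bytes, SRAM_TRANSISTORS_PER_BIT)
edram = cell_transistors(l3_bytes, EDRAM_TRANSISTORS_PER_BIT)

print(f"SRAM cells:  {sram:,} transistors")
print(f"eDRAM cells: {edram:,} transistors")
print(f"ratio:       {sram // edram}x")
```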


For the eDRAM, I was referring to the supposed connectivity to the eDram or 1t-SRAM that Espresso has with Latte. Though I thought eDRAM was supposed to be lower latency? Well, I learned something new.

This is going from what Shin'en said about the GPU/CPU connectivity in the Wii U and how you get huge performance boosts from using them in conjunction. There was also some talk in the GPU thread a while ago about the GPU RAM being used for high speed, low latency communication between the CPU and GPU, which makes me think that the CPU can also access the eDRAM on Latte. Then there are the benefits of them being on the same MCM. It's possible that this might be an area which gives a huge performance boost to the Wii U that has mostly gone overlooked.

It would still be interesting if Power8 tech were implemented in the beyond next gen iteration of Espresso. By then it should be 20nm.

lostinblue

Banned

Problem is R&D; IBM is not pouring any R&D into low power architectures in order to compete in embedded mobile platforms, and home consoles are, for better or worse, embedded platforms at this point.

PPC750 is still a good CPU, but it's a good barebones CPU, whereas R&D elsewhere has been put to use in power gating and tri-gate/LPG. IBM is doing neither; the most power draw conscious implementation they did in 10 years that wasn't a byproduct of a node shrink must have been the eDRAM instead of SRAM that Nintendo used.

And so now that Intel's roadmap is really aggressive in getting power consumption down in order to compete with ARM, and with AMD to follow, it's only gonna get worse; it's simply a race IBM isn't partaking in.

It's pretty obvious that either IBM changes its goals (which I doubt they will) or PPC being used on embedded platforms is a dying breed.

cartman414

Member

The only problem would come from lazy or cheap devs that don't want to take the time to learn the differences in the hardware and program code to its strengths, as opposed to just porting the code and truncating what won't fit on the first try.

With their present 3rd party situation, Nintendo is in no position to dictate 3rd party development standards contrary to their competitors. I mean, while there's no real comparison, look at what happened with Cell (PS3).

Problem is R&D; IBM is not pouring any R&D into low power architectures in order to compete in embedded mobile platforms, and home consoles are, for better or worse, embedded platforms at this point.

PPC750 is still a good CPU, but it's a good barebones CPU, whereas R&D elsewhere has been put to use in power gating and tri-gate/LPG. IBM is doing neither; the most power draw conscious implementation they did in 10 years that wasn't a byproduct of a node shrink must have been the eDRAM instead of SRAM that Nintendo used.

And so now that Intel's roadmap is really aggressive in getting power consumption down in order to compete with ARM, and with AMD to follow, it's only gonna get worse; it's simply a race IBM isn't partaking in.

It's pretty obvious that either IBM changes its goals (which I doubt they will) or PPC being used on embedded platforms is a dying breed.

This, so much. Other companies are creating SoCs, which is something one would expect Nintendo to be all for.


Context makes a lot of difference. What in the world does Nintendo's current third party situation have to do with what I was talking about? Nobody was ever debating that.

starwarsfan541

Banned

Asked this in the GPU thread, but the question is getting buried underneath constant off topic bickering, so I'll put it here too since it also concerns the CPU...

I want to know this answer too.


Maybe hit up Marcan's blog post and/or ask him? I'm sure others would like to know too.

cartman414

Member

Context makes a lot of difference. What in the world does Nintendo's current third party situation have to do with what I was talking about? Nobody was ever debating that.

The PS2 was hard to code for in its day, even more so than Wii U, but given Sony's market leverage back then, not enough for 3rd parties to ignore.

The same 3rd parties nowadays have the luxury of three similarly architected, closely powered platforms (XBOne, PS4, PC) that can still give them decent marketshare. The outlier? They've already been the odd man out for the past decade as is. They should be doing everything they can to give developers less of an excuse to ignore them.

lostinblue

Banned

Wii U is not hard to code for; it's just different than current gen consoles, and some devs spent years optimizing for them. PS2 was a nightmare, surpassed only by Sega Saturn and Nintendo 64.

It's actually bound to get easier (for the Wii U/devs/adaptations) when code has been optimized for newer consoles; throughput of this part is akin to the others of course, if anything because it has fewer cores, but it's more in line with their nature (on the PS3/X360 code had to be dual threaded to counter the fact that they were in-order, whereas the Wii U is out of order and single threaded).

Maybe hit up Marcan's blog post and/or ask him? I'm sure others would like to know too.

Answered it in the other thread, by quoting the original post.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

I'm not necessarily countering your points, but a remark nevertheless: IBM's supercomputer lineup is based on PPC low-power designs. My guess is IBM would drop R&D of low(er) power PPC the moment they exited the supercomputer business. Question is, will they? One could argue they are getting serious pressure from the GPGPU foray (better compute density, etc), but I, personally, don't see IBM going the way of HP.

Problem is R&D, IBM is not pouring any R&D into low power architectures in order to compete in embedded mobile platforms, and home consoles are, for better or worse, embedded platforms at this point.

PPC750 is still a good CPU, but it's a good barebones CPU whereas R&D elsewhere has been put to use in power gating and trigate/LPG; IBM is doing neither, the most power draw conscious implementation they did in 10 years that wasn't a byproduct of node shrink must have been the eDRAM instead of SRAM, that Nintendo used.

And so now that Intel's roadmap is really aggressive in getting power consumption down in order to compete with ARM, with AMD to follow, it's only gonna get worse; it's simply a race IBM isn't partaking in.

It's pretty obvious either IBM changes its goals (which I doubt they will) or PPC being used on embedded platforms is a dying breed.

On a further note, I think Power8 is the last of its kind, i.e. CPUs that go a hell of a distance to provide maximum per-thread performance. Intel might continue pushing the bounds there a tad longer, just because inertia is their shtick, but the days of the monster single-thread performance CPUs are numbered.

http://en.wikipedia.org/wiki/POWER8

That thing destroys any Intel/AMD CPU currently on the market (as Power CPUs always do upon release).

Maybe they could make a hybrid with it.

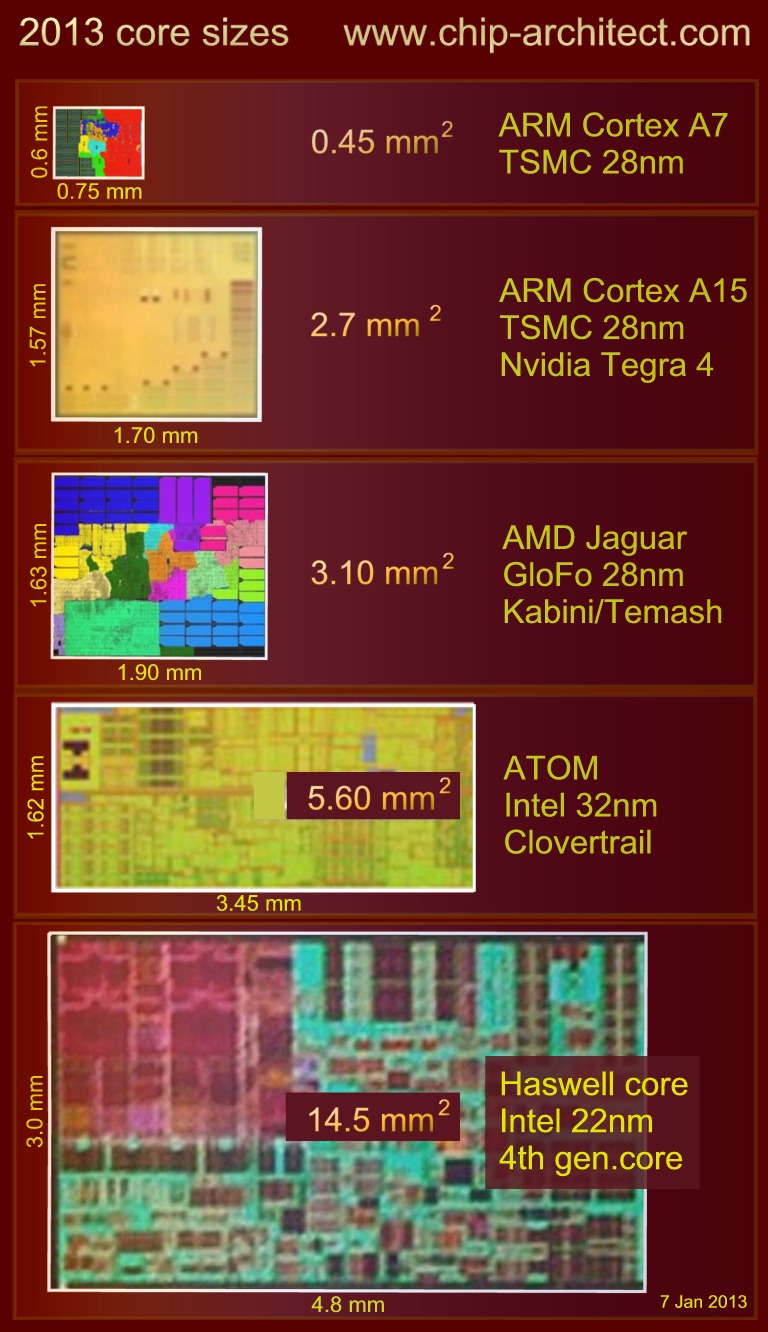

It's also 15-16mm2 per core compared to 3.1mm2 per core for Jaguar. That's 5x the size (and at a smaller process to boot - 22nm vs. 28nm) so you'd certainly hope that it performs significantly faster.
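For anyone who wants to check the "5x" figure, here is a quick sketch of the arithmetic. It uses only the per-core areas quoted in the post; the variable names are made up for illustration, and it says nothing about performance per area.

```python
# Per-core die area figures quoted in the post above (mm^2).
power8_core_mm2 = (15.0, 16.0)   # quoted range for POWER8
jaguar_core_mm2 = 3.1            # quoted figure for Jaguar

# Ratio of POWER8 core area to Jaguar core area, low and high end.
ratio_low = power8_core_mm2[0] / jaguar_core_mm2
ratio_high = power8_core_mm2[1] / jaguar_core_mm2

print(f"POWER8 core is {ratio_low:.1f}x to {ratio_high:.1f}x the area of a Jaguar core")
```

So "5x" is a fair rounding of the quoted numbers, before even accounting for the node difference.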

What would be interesting is to see how it compares to Haswell.

Not necessarily, as it depends on the specific architecture.

Power 7 was not more energy efficient than Intel's Westmere for example:

"The Power 7 CPUs are in the 100 to 170W TDP range, while the Xeon E7s are in the 95 to 130W TDP range. A quad 3.3GHz Power 755 with (256GB RAM) server consumes 1650W according to IBM (slide 24), while our first measurements show that our 2.4GHz E7-4870 server will consume about 1300W in those circumstances.

Considering that the 3.3GHz Power 7 and 2.4GHz E7-4870 perform at the same level, we'll go out on a limb and assume that the new Xeon wins in the performance/watt race."

(source)
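The quoted comparison boils down to one division. A rough sketch, taking the quote's own assumption that both servers "perform at the same level" and using only the two wattage figures it gives (the variable names are just for illustration):

```python
# Whole-server power draw figures from the quote above (watts).
power7_server_watts = 1650.0   # quad 3.3GHz Power 755, per IBM
xeon_server_watts = 1300.0     # 2.4GHz E7-4870 server, as measured by the article

# The quote assumes both servers perform at the same level.
relative_performance = 1.0

# Performance per watt of the Xeon box relative to the Power 7 box.
xeon_advantage = (relative_performance / xeon_server_watts) / (
    relative_performance / power7_server_watts
)

print(f"Xeon server perf/watt is about {100 * (xeon_advantage - 1):.0f}% higher")
```

That is system-level power, not CPU TDP, so it also folds in memory, chipset and PSU differences.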

The E7-4870 is a 32nm chip though and the Power7 (without "+") still a 45nm chip.

lostinblue

Banned

Power 7, A2 and other CPU's?

They're certainly not low power in the sense that they could be inside any console available today. Although I agree, they're not as monstrous as I made them to be to illustrate a point. They're essentially high clock desk CPU's aimed for cluster embedding.

Problem is really the power gating; if you use them at full load for folding it's probably not really all that relevant, but in a real-world situation that is bound to change a lot; their designs haven't invested in that whatsoever and Intel is getting crazy good with it. They're too far behind to be able to compete in that area.

Another problem is the implementations themselves, do you know that PPC 970 northbridges were energy hogs? They wasted 30W alone, at all times. Which is one of the reasons even with PPC970 @ 30W parts their use in laptops wasn't something possible to do.

Of course, this stemmed from the fact that they didn't really compete directly with Intel and AMD in that area (who at that point had an open northbridge environment, with very competent parts from Via and Nvidia, which made AMD and Intel try harder); having a closed market ultimately hindered them, and it still is (hindering them).

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

I was referring to the A2 and 470, the latter of which is a fairly low-power embedded PPC.

Regardless, I don't know if power gating is IBM's issue at the moment, but I do know Intel have the lithography leadership. As with most things today, that is bound to end rather soon, just because we're entering the age of silicon disillusionment.

lostinblue

Banned

I consider 476FP to be an overdrive offshoot of sorts; it's based on an old architecture... not even trying to pose as something new and it's not flagship in the least for IBM.

Sure they released it as late as 2009 (but in the CPU world that's 4 years ago) as an embedded solution (perhaps to succeed PPC750CL) and since gave no indication of it being further developed or having something it can call a roadmap. It seems to be supported if need be, but it's as stuck as PPC750 is; no node shrinks, no frequency steppings and no further upgrades. Such is not the case with Power 7 and A2 at this point, for they're as flagship as it can be.

PPC476 FP is their embedded last stand, no doubt, but I think it speaks for itself; hence I disregarded it.

A2 is kind of an alien design, but yeah, it's certainly surprisingly energy draw aware.

I don't think it is because IBM is obviously not building CPU's around their own limitations, rather, they're focusing on their strengths. Power gating means the world for a laptop/embedded device with a modern CPU; because we went from one-bullet machines to parallel processing ones, overhead increased, and because there's a little energy to be lost everywhere, the more complex the design is the more energy stands to be lost at idle. That's where power gating comes in; and I'm sure you know this, but just putting it out there.

Peak is not the issue (and it's my understanding current IBM CPU's are designed for peak, seeing they're not aimed at consumers), at peak you'll be using those channels anyway, power gating is more akin to building a submarine in the sense that if you leave resources unused you can simply shut those doors and confine yourself to a smaller area just so energy doesn't go there and doesn't get wasted in the process. Never this simple of course.

As for Intel losing leadership in the CPU lithography realm, perhaps, I surely hope so. But it's part of their business model, so just like they won't let x86 go without a fight, I doubt they'll just give up; they're ahead also because they spent loads of money on R&D for it.

Silicon disillusionment is inevitable, but they sure know a thing or two.

From the latest Bink changelog:

Not sure how useful that is, considering Nintendo has their own proprietary low bitrate codec which I assume is available to licensed developers (based on Mobiclip), but it's interesting to see how this really old core still manages to surprise developers even after all those years.

http://www.radgametools.com/bnkhist.htm

- Added Wii-U support for Bink 2 - play 30 Hz 1080p or 60 Hz 720p video! We didn't think this would be possible - the little non-SIMD CPU that could!

- Added Wii support for Bink 2 - play 30 Hz 640x480 video. Another Broadway platform we didn't initially think could handle Bink 2 - great CPU core!
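To put those changelog modes side by side, here is a small sketch of the raw pixel throughput each one implies. The 1920x1080 and 1280x720 frame sizes are assumed from the usual meaning of "1080p" and "720p"; the changelog itself only gives the labels, and the dictionary keys are just names made up for this example.

```python
# Video modes named in the Bink changelog entries quoted above.
# Frame sizes for "1080p" and "720p" are assumed to be the usual
# 1920x1080 and 1280x720; the changelog only gives the labels.
modes = {
    "Wii U 1080p30": (1920, 1080, 30),
    "Wii U 720p60": (1280, 720, 60),
    "Wii 480p30": (640, 480, 30),
}

# Pixels that must be decoded per second for each mode.
pixel_rate = {name: w * h * fps for name, (w, h, fps) in modes.items()}

for name, rate in pixel_rate.items():
    print(f"{name}: {rate / 1e6:.1f} Mpixels/s")
```

Under those assumptions the two Wii U modes land in the same ballpark (roughly 62 vs 55 Mpixels/s), and both are about 6 to 7 times the Wii figure. Pixel rate is only a crude proxy for decode cost, of course.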

It's not only possible, it's very likely. As for how Espresso and Latte are connected, nobody knows for sure. Seems to use a modified, unusually wide 60x bus.

So, back to the question I asked earlier.

What is this about Espresso's connectivity to Latte. I saw that some of the RAM on Latte was specifically supposed to be for talking to Espresso. Is it possible that it has access to all of Latte's memory?

w1gglyjones

Member

When will they do a dieshrink of the GPU and CPU?

If I had to guess, probably never. Why?

w1gglyjones

Member

Doesn't that save them money and allow them to reduce the price of the console?

lostinblue

Banned

The core shrink race is losing steam and turning into diminishing returns.

The dirt:

Price per transistor stays mostly the same, no matter the node.

Wafer price goes up considerably, which washes away the benefits.

It could - in theory. But at the same time, using old shit can also reduce costs (proven, predictable yield, nobody else uses the fabs). Not to mention shrinking in itself doesn't come cheap, and even more so with exotic stuff like eDRAM. As amazing as eDRAM is as a technology, it's supposedly very hard to manufacture, and even harder to develop further. Which includes shrinking.

I wrote this in the iPhone thread, when somebody asked how the Wii U compares to A7 regarding the transistor count: Without eDRAM tech, MEM1 alone would have more transistors than the whole A7. A lot more. eDRAM saves space and reduces power consumption, but makes manufacturing a lot more complicated. So it's not even necessarily cheaper at the end of the day, but it allows Nintendo to make the system as small and quiet as it is.

Kinda reminds me of Blu's CPU benchmarks, where Broadway(?) performed surprisingly well on the FLOP-heavy test, for a CPU where FLOPs aren't even its forte. Is there any performance info on other CPUs/devices running Bink 2, for comparison's sake? Like what resolution & framerate they run at. It sounds like quite an accomplishment for a PPC core, and a testament to what some (Criterion) have said. That is, the Espresso is much more capable than it appears on paper.

Ah, now it's starting to make more sense.

It's simply more efficient than those, quite honestly also because they're bad CPU's; and yet they're PPC, not x86. That architecture got dropped once (IBM GuTS) because it was a turd; it got revived out of Sony wanting something different, Microsoft wanting whatever they ordered and IBM wanting to test some more at their clients' expense.

You don't need to be efficient to tackle this CPU or no-one would be saying it punches above its weight; you have to be efficient to tackle PS3 and X360 architectures, and that's why it's suffering here, because it's of a different nature.

You just need to code for it: it's a 32-bit CPU with 64-bit FPU. And it behaves like one; short pipeline so it's fast at giving resources, but it doesn't dispatch many instructions per cycle, unlike modern solutions. And the 64-bit FPU ensures the floating point performance certainly isn't over the moon; but CPU's not so long ago were like that so it's not like it's a foreign concept.

The fact that it is only 1.24 GHz though, is damaging for that part of the design.

A more branched design is not necessarily brute force; brute force is going 4 GHz with liquid cooling. Adding more complexity just so the CPU can do more at the same time was the solution needed in order to keep gaining performance. I mean we've been sitting on the 2.4 GHz figure being a normal clock for 10 years now; yet compare a 2.4 GHz from 10 years ago (a multiprocessor solution if you will, just so it compares better) to a dual core from 5 years ago to one today, all within that ballpark, and performance will differ greatly.

That's because architectures became more and more complex internally while striving for a balance.

It's a balancing act.

Erm... sure dude. I was pretty honest regarding it, that's it. I dunno if it was downgraded, people told me it was, what else do you want from me?

So let me get this straight. The Wii U CPU is having problems because the code on the PS3/360 is so complex and filled with things to get around their bottlenecks that the Wii U CPU doesn't need and thus wasn't designed to handle?

lostinblue

Banned

Not just bottlenecks. It's a mix of everything.

Current gen console CPU's are in-order solutions; that means they can only process one thing at a time, in the order it arrives. It's like a line of people in front of a store counter: they have to be dispatched in order of arrival, even if one customer has 1000 things in the basket and the next one has just one. The first will take forever to attend to, and perhaps even create problems along the way, whereas the other one will simply have to wait.

Out of order is more like a multiple-counters type of solution, with someone or something overseeing the load and deciding where those people should go in order to get away faster. Instructions get sent and then they wait; if the branch prediction thinks one can fit between two other instructions then it gets dispatched there and gets across faster.

Xenon and Cell are in-order, so, anticipating this problem (not seen since the Pentium 1), they added two lanes (2-way) so that when one line is stalling the other one can keep moving.

This is oversimplifying things though. Normal multithreading on out-of-order CPU's is meant to take advantage of unused resources, which usually amounts to a 30% boost at best. In these CPU's case, because they simply stall if something being executed has to wait for something from memory (and don't concede that turn for something smaller to be processed), the second thread sometimes has 100% of the processor's resources to itself, making it even more important to have multithreaded code.
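The "two lanes" point can be seen in a toy model. The sketch below is not how Xenon or Cell actually work internally; it is a made-up single-issue in-order core where a load always misses and blocks only its own hardware thread, and the workload and latency numbers are invented for illustration.

```python
def run(threads, miss_latency):
    """Cycles for a single-issue in-order core to finish all hardware threads.

    Each thread is a list of "alu" / "load" ops. A "load" is treated as a
    cache miss that blocks only its own thread for miss_latency cycles.
    """
    pcs = [0] * len(threads)        # next instruction index per thread
    ready_at = [0] * len(threads)   # cycle at which each thread may issue again
    cycle = 0
    while any(pc < len(t) for pc, t in zip(pcs, threads)):
        for i, t in enumerate(threads):
            if pcs[i] < len(t) and ready_at[i] <= cycle:
                op = t[pcs[i]]
                pcs[i] += 1
                ready_at[i] = cycle + (miss_latency if op == "load" else 1)
                break               # only one issue slot per cycle
        cycle += 1
    return max(cycle, max(ready_at))


work = ["load", "alu", "alu"] * 4   # made-up workload
MISS = 10                           # made-up miss latency in cycles

one_thread = run([work], MISS)                 # one job, one hardware thread
back_to_back = run([work + work], MISS)        # two jobs run one after the other
two_hw_threads = run([work, work], MISS)       # two jobs on two hardware threads
print(one_thread, back_to_back, two_hw_threads)
```

In this model the second hardware thread mostly slots into cycles the first one spends waiting on memory, so running two jobs side by side takes far fewer cycles than running them back to back. Code written as a single thread gets none of that benefit, which is the point being made about ports.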

That means the code has to be aware of such a structure though; it has to be multithreaded. Gekko/Broadway/Espresso are not capable of that; they don't have two lanes, so if code optimized for that gets to them, not only will it be written for strengths that are not theirs, it'll also occupy two cores instead of one (no software should be doing that, of course). Anyway, if you drop code designed like that on it, it'll simply flunk... badly. The only way for that not to be the case would be brute force, high clocks at that.

PS3 and X360 solutions were designed like that for floating point performance, simplifying and broadening everything else (basically, it's the opposite of PPC 750; being short pipelined means less MHz and that'll hamper FPU performance in the end, because it's separate from the main CPU design but operating at the same clock; with these cpu's the decision was simplifying them up to the point they're pretty weak in general purpose, just so they can clock higher and that happens to benefit the FPU performance); this happened as Sony had Emotion engine before and it was the only thing on PS2 outputting floating point (the GPU outputted 0 Flops).

Cell was designed to be like an Emotion Engine, SPE's being like the Vector Units on it, and Sony even thought about not adding a GPU for quite a bit, instead making a PS3 out of two Cells. Dudes were crazy and living the dream back then.

The software ended up having to be badly coded.

You have this modern approach to coding where it is stated that good code is abstract to the hardware and thus retaining easy portability; that makes everything console pushing related a bad practice, or bad code, since you're trying to bend the code to the hardware, and with hardware so peculiar as current gen console cpu's all the worse.

It ended up like an anarchy of sorts, people spent years optimizing their software and tech for in-order 2-way; and considering the fact that performance was still lousy and they lacked things like a sound processor (making them use the cpu for stuff like that, draining further resources) they went further, the only thing these cpu's were good at was floating point so you had things no one in his right mind would write with floating point in mind, like AI, being written to take advantage of otherwise unused CPU overhead.

Of course that code doesn't scale well onto Wii U; it doesn't scale well for PC either, but PC at least has the power to bypass it through brute force.

It's complicated basically, but essentially they're completely different designs with the Wii U being a more standardized design, and thus dealing well with good code (example PC ports should go well, as those are never too optimized for whenever) but very badly with current gen bad code.

prag16

Banned

Very interesting and informative. So basically, the guy in the GPU thread claiming the 360 is much much closer to the Wii U than the PS3 is way off base?

lwilliams3

Member

Thanks for the informative post, lostinblue.

Well, I believe the design of the PS3 is kind of the opposite of the Wii U (very heavy focus on CPU, relatively weaker GPU). The 360 is definitely built differently than the Wii U, but it is not as different as the PS3 is.

lostinblue

Banned

It's closer on the GPU solution for sure, seeing it's ATi/AMD with 5-way VLIW; PS3 being a nvidia part; it also retains the Xenos approach to dedicated framebuffer memory; although we could trace implementations like that back to Gamecube or even PS2. Anyway it has said pool of memory to play with which is certainly a point in common even if it's not prone to the same limitations this time around (thankfully).

The memory bank is also unified, which is a decision that "on paper" is again closer to X360 than PS3, avoids having to move too many things around.

But it's not particularly close to either design, rather it's painfully aware of some of the problems in both current gen HD consoles and seems to be trying to get away by being more efficient and by fixing those caveats. That makes it a very different beast though.

prag16

Banned

Not to get too far off topic, but in the other topic the argument was essentially "360 to Wii U ports should be much easier and need much less optimization overall than 360 to PS3 ports".

That sounds like it's not the case. Probably easier in some areas and more difficult in other area, but definitely not "drastically easier, smoother, etc" overall.

I'd imagine XboxOne to Wii U would produce better results than any last gen console game ported to it would. The feature set seems more relatable.

It would only be an issue of scaling resources effectively.

MrPresident

Banned

I think you're referring to my post but that's slightly inaccurate. The argument was restricted to GPU performance under CPU limited scenarios. It has nothing to do with CPU optimizations or, by extension, this thread.

The Wii U and 360 graphic chains are absolutely more similar than either is to the PS3. Neither has a split memory pool or a reliance on SPUs for offloading graphical tasks. Not to mention the different vendor, unified vs. discrete shader cores, and countless other differences. It belongs in the other thread, though.

prag16

Banned

I don't think it was your post. Someone else was talking about this without limiting the scope of the discussion the way you have. Unless you also think the overall picture is more favorable to 360 -> Wii U ports than 360 -> PS3 ports. If you do think that, do you have a response for lostinblue's post?