I guess you weren't around in the late 90s and early 00s, when every year brought not just performance but entirely new graphical features to the table. Those were the times.

GPU cycles are incredibly tame now.

Yeah, I remember those times in the early 00s, and I agree about GPU cycles nowadays.


Nvidia Volta is 16nm, expected in May 2017

- Thread starter dr_rus

- Start date

dr_rus

Member

dx 9 days. When a new card came out usually a full year after or so it didn't make your card look irrelevant.

You mean, these days?

July 2002 - Radeon 9700 Pro

Jan 2003 - GeForce FX 5800 Ultra

May 2003 - GeForce FX 5900 Ultra

Sep 2003 - Radeon 9800 XT

May 2004 - Radeon X800 XT

May 2004 - GeForce 6800 Ultra

Feb 2005 - Radeon X850 XT

June 2005 - GeForce 7800 GTX

Oct 2005 - Radeon X1800 XT

Jan 2006 - Radeon X1900 XT

March 2006 - GeForce 7900 GTX

Jun 2006 - GeForce 7950 GX2

Nov 2006 - GeForce 8800 GTX

Incredibly simple times, yes. So few new cards bringing new features /sarcasm
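For what it's worth, the cadence in that list can be counted with a quick sketch. It assumes only the launch months listed above (the day of the month is ignored), and the names `launches` and `months_between` are purely illustrative.

```python
from datetime import date

# Launch months exactly as listed in the post; day fixed to 1 for simplicity.
launches = [
    ("Radeon 9700 Pro", date(2002, 7, 1)),
    ("GeForce FX 5800 Ultra", date(2003, 1, 1)),
    ("GeForce FX 5900 Ultra", date(2003, 5, 1)),
    ("Radeon 9800 XT", date(2003, 9, 1)),
    ("Radeon X800 XT", date(2004, 5, 1)),
    ("GeForce 6800 Ultra", date(2004, 5, 1)),
    ("Radeon X850 XT", date(2005, 2, 1)),
    ("GeForce 7800 GTX", date(2005, 6, 1)),
    ("Radeon X1800 XT", date(2005, 10, 1)),
    ("Radeon X1900 XT", date(2006, 1, 1)),
    ("GeForce 7900 GTX", date(2006, 3, 1)),
    ("GeForce 7950 GX2", date(2006, 6, 1)),
    ("GeForce 8800 GTX", date(2006, 11, 1)),
]


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later`."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


# Gap in months between each launch and the next one in the list.
gaps = [
    months_between(launches[i][1], launches[i + 1][1])
    for i in range(len(launches) - 1)
]
average_gap = sum(gaps) / len(gaps)

print(gaps)
print(f"average gap between launches: {average_gap:.1f} months")
```

That covers both vendors together, so it says how often a new flagship from anyone showed up, not how often a single vendor refreshed its own line.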

A few days ago Nvidia released the first HBM2 GPU. The Tesla P100 for workstations.

The first consumer GPU with HBM2 will probably be AMD's Vega.

As for the next Nvidia GPU and its memory type, I have seen rumors of GDDR5X, HBM2 and even GDDR6 for late 2017 or 2018.

Quadro GP100 was released back in February: https://arstechnica.com/gadgets/2017/02/nvidia-quadro-gp100-price-details/

Tesla P100 was announced a year ago and available since autumn last year.

PHOENIXZERO

Member

NVIDIA's 8 series, especially the 8800s, lasted so damn ridiculously long for the simple reason that they had horsepower far beyond the GPUs in the PS3 or XB360, which would become the baseline for like seven years. Had the PS3 and XB360 been succeeded sooner, the 8800s would've been made irrelevant much quicker.

michaelius

Banned

Wonder how many years it will take for nvidia to go to 10nm, or even 7nm.

As many as it will take TSMC/Samsung/GF to make a 10nm process viable. It's not up to GPU makers but to the foundries to make it possible.

DonMigs85

Member

The NVIDIA's 8 series, especially the 8800s lasted so damn ridiculously long for the simple reason that they had horse power that was far beyond the GPUs in the PS3 or XB360 that would become the baseline for like seven years. Had the PS3 and XB360 been succeeded sooner 8800s would've been made irrelevant much quicker.

Correct. A lot of them died early because of the lead-free solder issue though.

The NVIDIA's 8 series, especially the 8800s lasted so damn ridiculously long for the simple reason that they had horse power that was far beyond the GPUs in the PS3 or XB360 that would become the baseline for like seven years. Had the PS3 and XB360 been succeeded sooner 8800s would've been made irrelevant much quicker.

Nope, it didn't last long. I bought an 8800GTX for Crysis 1 and it chugged. For console ports, by 2009 we were on 1080p/1200p monitors and it wasn't nearly enough to drive the resolution. People moved to GTX200 and the infamous Radeon 4000s.

dx 9 days. When a new card came out usually a full year after or so it didn't make your card look irrelevant.

What are you babbling about? Did you live in a bizarro world?

In the past not only raw performance but also feature sets could be absolutely disruptive in the year after year cycle, those were the days.... now you can easily get away with most of the stuff with a high-end Kepler GPU released in 2013

Papacheeks

Banned

What are you babbling about? Did you live in a bizarro world?

In the past not only raw performance but also feature sets could be absolutely disruptive in the year after year cycle, those were the days.... now you can easily get away with most of the stuff with a high-end Kepler GPU released in 2013

I guess I remember my 6800 Ultra being able to play the biggest games with no issue after the 7800 and the refreshed 7900 series came out.

LelouchZero

Member

I guess I remember my 6800 Ultra being able to play the biggest games with no issue after the 7800 and the refreshed 7900 series came out.

Could you please elaborate on what you mean by "no issue"?

As mentioned by LeleSocho a GPU like the 780 Ti or R9 290/290X can run many games of today at 1080p and above while utilizing some of the highest graphical presets available in the games at 30+ fps. With tweaking you can get locked 60 fps experiences or higher resolutions at 30 fps.

Today, the 780 Ti is over 3 years and 5 months old and the R9 290X is almost 3 years and 6 months old. If you count the GTX Titan that GPU is over 4 years and 1 month old.

Papacheeks

Banned

Could you please elaborate on what you mean by "no issue"?

As mentioned by LeleSocho a GPU like the 780 Ti or R9 290/290X can run many games of today at 1080p and above while utilizing some of the highest graphical presets available in the games at 30+ fps. With tweaking you can get locked 60 fps experiences or higher resolutions at 30 fps.

Today, the 780 Ti is over 3 years and 5 months old and the R9 290X is almost 3 years and 6 months old. If you count the GTX Titan that GPU is over 4 years and 1 month old.

I would hope a graphics card you paid $500+ for would be able to handle games at 1080p a couple of years after purchase. Back then I didn't see performance dips as big as the ones I see now, which come from a lot of software with unoptimized performance.

Back in the dx9 days there weren't as many engines that ran like shit on PC. Crytek's was the only big one that did not scale well on dx9, and that was because it was so advanced at the time.

Maybe some bad U3 ports and the like. But most games on Source, U3 and Chrome engine ran very well and scaled well on 3-4 year old cards. Now, unless you bought something like a 290x/780ti or higher, your card will not perform well in newer games whose engines were probably designed on top of the line render or gaming cards.

When Battlefield 2 came out people were running it on a 6600gt without issues. Even the 7800gt I had was running games at moderate to somewhat high resolutions, and I didn't need to upgrade till late 2008-2009. DX 10 by that time was really kicking the shit out of dx9 cards.

kostacurtas

Member

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

Seoul, April 23, 2017 - SK Hynix Inc. (or 'the Company', www.skhynix.com) today introduced the world's fastest 2Znm 8Gb (Gigabit) GDDR6 (Graphics DDR6) DRAM. The product operates with an I/O data rate of 16Gbps (Gigabits per second) per pin, which is the industry's fastest. With a forthcoming high-end graphics card of 384-bit I/Os, this DRAM processes up to 768GB (Gigabytes) of graphics data per second. SK Hynix has been planning to mass produce the product for a client to release high-end graphics card by early 2018 equipped with high performance GDDR6 DRAMs.

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

martincrimson87

Banned

I'd say that the 7970 could be comparable with the 8800GT when talking about a card that could handle most console ports with better performance than ps4/xbone and trail through the "generation". The 3GB VRAM buffer sure does help to have decent looking textures, unlike similar cards like the GTX 680.

If the Volta X60 card is 3 times better than my GTX 760 in 1440p I may pull the trigger.

If the Volta X60 card is 3 times better than my GTX 760 in 1440p I may pull the trigger.

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

Very interesting, thank you. It doesn't seem like consumer graphics bandwidth needs are high enough yet to warrant performance HBM2.

I just bought a 1080ti, but if they want to release Volta tomorrow I am game. This doesn't make business sense to me because they would cut into their 10 series market. More likely something Volta comes out this fall for professional use, then the 2070/2080 next year.

Wild card is AMD is packing heat, NVIDIA know it and they have to respond, by using the 20 to replace the pricing bucket of 1070/1080 and being able to drop their 10 series price to compete with AMD. I am ready to upgrade whenever. It was pretty awesome that they got the enthusiast market to buy a Titan X, then a 1080ti, then a Titan XP..so maybe?

Wild card is AMD is packing heat, NVIDIA know it and they have to respond, by using the 20 to replace the pricing bucket of 1070/1080 and being able to drop their 10 series price to compete with AMD. I am ready to upgrade whenever. It was pretty awesome that they got the enthusiast market to buy a Titan X, then a 1080ti, then a Titan XP..so maybe?

ethomaz

Banned

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

That will probably kill HBM2 like RAMBUS lol

16Gbps @ 256bits = 512GB/s

16Gbps @ 384bits = 768GB/s

I bet we will see GDDR6 at 18Gbps and 20Gbps.
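Those numbers are just per-pin data rate times bus width, divided by 8 bits per byte. A minimal sketch of that arithmetic, where `bandwidth_gb_s` is a made-up helper name and the 18 and 20 Gbps rows are only the speculated speeds from the post, not announced products:

```python
def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in gigabits per second
    bus_width_bits: total memory bus width in bits (one pin per bit)
    """
    return data_rate_gbps * bus_width_bits / 8  # 8 bits per byte


# 16 Gbps is the announced GDDR6 rate; 18 and 20 Gbps are guesses from the post.
for rate in (16, 18, 20):
    for width in (256, 384):
        print(f"{rate}Gbps @ {width}bits = {bandwidth_gb_s(rate, width):.0f}GB/s")
```

This is the theoretical peak only. It says nothing about what a given GPU can actually sustain.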

MagicWithEarvin

Member

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

Sign me up for that 2080Ti! I'm cool with upgrading my 1070 around that time.

Sign me up for that 2080Ti! I'm cool with upgrading my 1070 around that time.

Yeah volta xx80ti is the card I'm waiting on to replace my 980ti. Card's been a beast of a GPU, but just doesn't quite cut it for 4K and I think 2018 is really going to be the year consumer level 4k takes off.

Yeah volta xx80ti is the card I'm waiting on to replace my 980ti. Card's been a beast of a GPU, but just doesn't quite cut it for 4K and I think 2018 is really going to be the year consumer level 4k takes off.

It's usually 2 years between 80Ti models, so expect 2080Ti in 2019 at the earliest

Vulcano's assistant

Banned

Are they going right into 20xx after 10xx? I thought they would go all the way from 11xx to 19xx first.

neurosisxeno

Member

With this being the de facto Volta thread, the title should probably be changed, so people new to it don't think it's actually launching in May, every time the thread gets bumped.

In fact newest leaks suggest Q3 2017 unless I'm mistaken.

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

Is there much headroom left with GDDR5X? They're unlikely to go with larger than 256-bit width due to costs (except for a new GV102/Titan/2080ti), and expecting major clock rate boosts beyond the already-fast 11GHz seems unlikely to me.

ethomaz

Banned

Is there much headroom left with GDDR5X? They're unlikely to go with larger than 256-bit width due to costs (except for a new GV102/Titan/2080ti), and expecting major clock rate boosts beyond the already-fast 11GHz seems unlikely to me.

GDDR5x max clock is 12Ghz (12Gbps) without overclock.

GDDR6 starts at 16Ghz (16Gbps)... well I believe there will be 14, 15, 17 and 18 GHz GDDR6 in the future... even 20Ghz.

GDDR5x max clock is 12Ghz (12Gbps) without overclock.

GDDR6 starts at 16Ghz (16Gbps)... well I believe there will be 14, 15, 17 and 18 GHz GDDR6 in the future... even 20Ghz.

So if Volta does drop HBM2 and goes with GDDR5X, they only have a 9% improvement in memory speed assuming the same 256-bit / 384-bit breakdown for the GV104 / GV102 ?

If it is coming out this year I really doubt it's using GDDR5X considering the above. It would be very out of character for Nvidia to take a hit to their margins by using a 384-bit bus on anything but their top end chip.
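The 9% figure is simply 11Gbps going to 12Gbps with the bus width held constant, so the width cancels out. A quick sketch, with `percent_gain` as an illustrative helper name and the bus widths being the 256-bit / 384-bit split assumed in the post:

```python
def percent_gain(old: float, new: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


current_gbps = 11.0  # fastest GDDR5X shipping on cards, per the thread
max_gbps = 12.0      # quoted GDDR5X ceiling without overclocking

gain = percent_gain(current_gbps, max_gbps)
print(f"data rate gain: {gain:.1f}%")

# Same bus width on both sides, so the bandwidth gain is identical.
for width in (256, 384):
    old_bw = current_gbps * width / 8
    new_bw = max_gbps * width / 8
    print(f"{width}-bit: {old_bw:.0f} -> {new_bw:.0f} GB/s "
          f"(+{percent_gain(old_bw, new_bw):.1f}%)")
```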

ethomaz

Banned

So if Volta does drop HBM2 and goes with GDDR5X, they only have a 9% improvement in memory speed assuming the same 256-bit / 384-bit breakdown for the GV104 / GV102 ?

If it is coming out this year I really doubt it's using GDDR5X considering the above. It would be very out of character for Nvidia to take a hit to their margins by using a 384-bit bus on anything but their top end chip.

First Volta gaming cards will replace 1080/1070... not TITANs... that means GV104. 1080/1070 had max 320GB/s bandwidth... GDDR5x can reach 576GB/s.

nVidia has a big room for increase using GDDR5x.

If I can make a guess I think GTX 2080 (GV104) will have 480GB/s of bandwidth... remember the jump from GTX 980 to GTX 1080 was 224GB/s to 320GB/s.

It's usually 2 years between 80Ti models, so expect 2080Ti in 2019 at the earliest

Uhm, 2080Ti probably won't be here for a LONG, LONG time going by nVidia's naming scheme.

11xx -> 12xx -> 13xx -> 14xx -> 15xx and so on.

That's at least 2 years between each gen.

First Volta gaming cards will replace 1080/1070... not TITANs... that means GV104. 1080/1070 had max 320GB/s bandwidth... GDDR5x can reach 576GB/s.

nVidia has a big room for increase using GDDR5x.

If I can make a guess I think GTX 2080 (GV104) will have 480GB/s of bandwidth... remember the jump from GTX 980 to GTX 1080 was 224GB/s to 320GB/s.

Uh, how exactly is GDDR5X going to reach 576GB/s with a 256-bit bus? It'd have to be nearly 20GHz... or are you saying Nvidia's going to launch GV104 with a wider than 256-bit bus? Nvidia's entire history doesn't have a single *104 chip with anything other than a 256-bit bus so I really doubt that's gonna happen.
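Turning the bandwidth formula around gives the per-pin rate a bus would need for a target bandwidth. A rough sketch, with `required_rate_gbps` as a hypothetical helper name:

```python
def required_rate_gbps(target_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbps) needed to hit `target_gb_s` on a given bus width."""
    return target_gb_s * 8 / bus_width_bits


target = 576.0  # GB/s, the figure quoted for GDDR5X

for width in (256, 384):
    print(f"{target:.0f}GB/s on a {width}-bit bus needs "
          f"{required_rate_gbps(target, width):.0f}Gbps per pin")
```

So 576GB/s works out to 18Gbps on 256 bits, but only 12Gbps on a 384-bit bus.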

GDDR5x max clock is 12Ghz (12Gbps) without overclock.

GDDR6 starts at 16Ghz (16Gbps)... well I believe there will be 14, 15, 17 and 18 GHz GDDR6 in the future... even 20Ghz.

I think they did mention an upside of 15 or 16Gbps for GDDR5X, although they'll have switched to GDDR6 by then anyways lol.

ethomaz

Banned

Uh, how exactly is GDDR5X going to reach 576GB/s with a 256-bit bus? It'd have to be nearly 20GHz... or are you saying Nvidia's going to launch GV104 with a wider than 256-bit bus? Nvidia's entire history doesn't have a single *104 chip with anything other than a 256-bit bus so I really doubt that's gonna happen.

576GB/s is the actual max with a 384bits bus.

DonMigs85

Member

Uh, how exactly is GDDR5X going to reach 576GB/s with a 256-bit bus? It'd have to be nearly 20GHz... or are you saying Nvidia's going to launch GV104 with a wider than 256-bit bus? Nvidia's entire history doesn't have a single *104 chip with anything other than a 256-bit bus so I really doubt that's gonna happen.

Uhh, GTX 480 was 384-bit

ethomaz

Banned

I think they did mention an upside of 15 or 16Gbps for GDDR5X, although they'll have switched to GDDR6 by then anyways lol.

Yeap... there are plans for better GDDR5x speeds but with GDDR6 releasing this year I don't see them pushing GDDR5x anymore... I'm still waiting to see a card with 12Gbps GDDR5x.

It's usually 2 years between 80Ti models, so expect 2080Ti in 2019 at the earliest

It doesn't really matter to me when it comes out, because I won't be upgrading my monitor tech until it does anyways lol, and my current gpu is going to be playing games just fine at my current res for the foreseeable future.

4k being such a massive leap forward has led to weird gaps in the gpu game popping up where most people are still on 1080p despite all the high end cards right now being complete overkill for it. This makes it a non point as to when the next upgrade comes out because there's no point in doing said upgrade without buying a new monitor to take advantage of it

Uhh, GTX 480 was 384-bit

That was a GF100 - not a GF104. The 100/110 line has been exclusively Titan or x80 ti since the 600 series.

DonMigs85

Member

That was a GF100 - not a GF104. The 100/110 line has been exclusively Titan or x80 ti since the 600 series.

my mistake, GF104 was the 460

dr_rus

Member

SK Hynix Inc. Introduces Industry's Fastest 8Gb Graphics DRAM (GDDR6)

The client is probably Nvidia.

So maybe:

- Volta 2070/2080 with faster GDDR5X memory, September 2017.

- Volta 2080Ti with GDDR6 memory, early 2018.

This is all circumstantial at best and Hynix won't be the first to the market with GDDR6 either: Micron fast-tracks superfast GDDR6 graphics memory to satisfy PC gamers

It does hint at GV102 release early 2018 with "Titan Xv" being the obvious product though. GV104 could either use Micron's GDDR6 or faster GDDR5X - although at this point I think that Micron will concentrate on GDDR6 instead of further GDDR5X evolution.

Sign me up for that 2080Ti! I'm cool with upgrading my 1070 around that time.

GV102 Ti card will likely happen around Navi's launch which will likely be around end of 2nd Q of 2018.

llien

Member

GV102 Ti card will likely happen around Navi's launch which will likely be around end of 2nd Q of 2018.

Wouldn't you expect Navi to come later, given how AMD still struggles to release Vega?

GV102 Ti card will likely happen around Navi's launch which will likely be around end of 2nd Q of 2018.

I don't expect this to happen that early. The Ti cards have generally come out only when Nvidia has been able to get good enough yields for the almost full chip of whatever generation. They usually peddle a Titan version for early adopters then halve the price and sell a Ti that can match it in performance even though not in specs.

Does memory speed even make that much of a difference? GDDR5X vs GDDR6 for example.

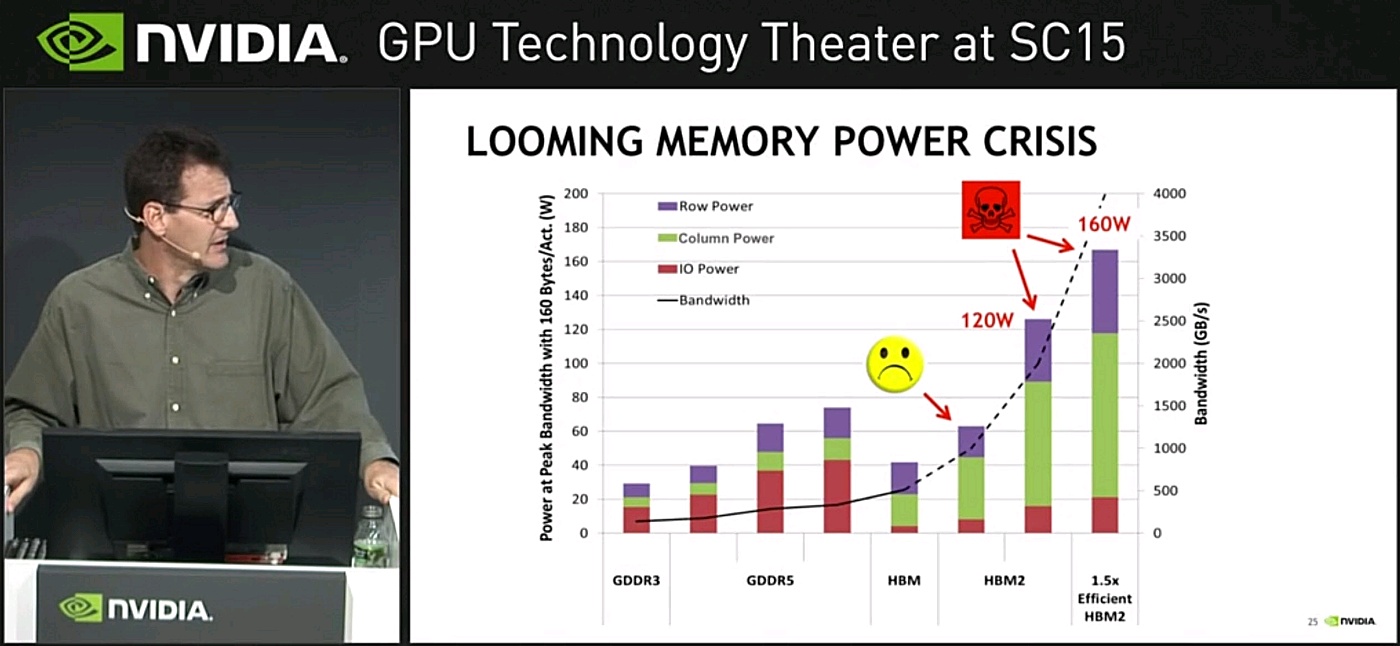

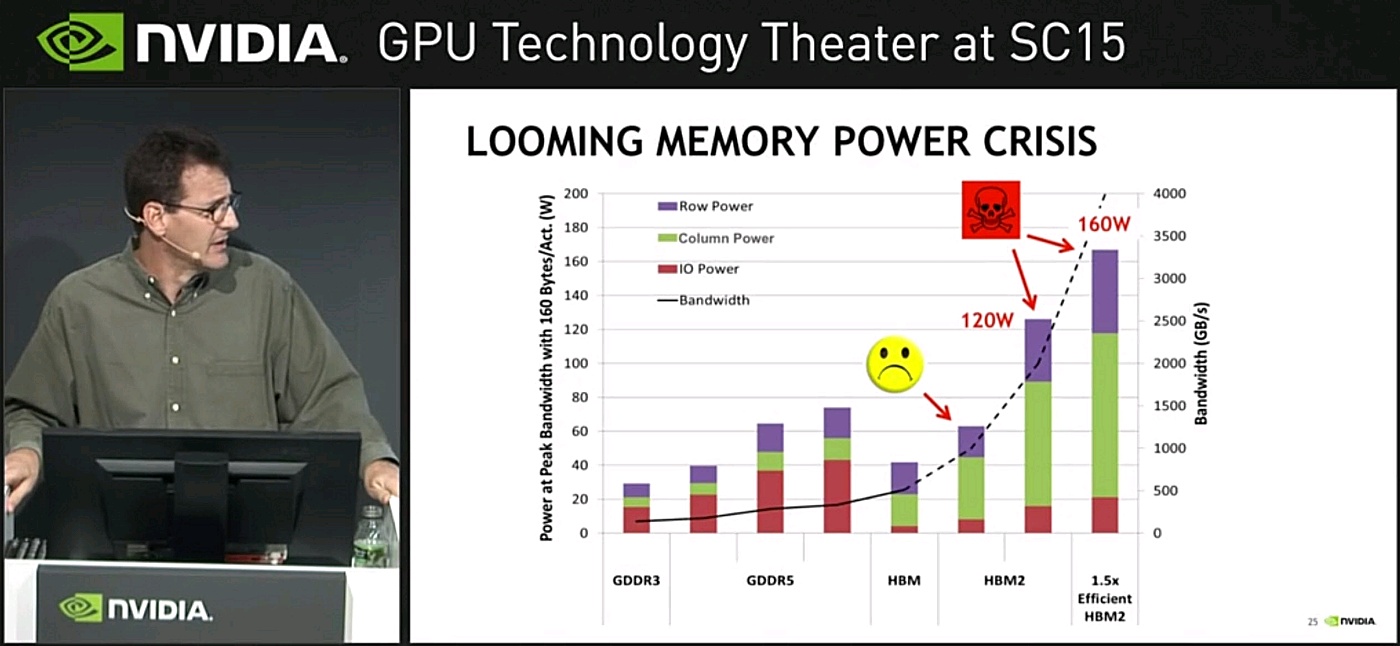

What people are missing here is power efficiency. HBM2 (although there is a wall down the road) consumes less power than GDDR6/G5X. The whole point of it was to leave more juice for the GPU die (and reduce latency). It would also enable much smaller form factor gpus as well as better cooling capacity (more reliability).

Now, GDDR6 is likely going to be a lot cheaper and easier to hit better yields... so it'll likely win out in the end. Also team green isn't as memory reliant as AMD.

Now, GDDR6 is likely going to be a lot cheaper and easier to hit better yields... so it'll likely win out in the end. Also team green isn't as memory reliant as AMD.

ethomaz

Banned

Does memory speed even make that much of a difference? GDDR5X vs GDDR6 for example.

Well...

The actual highest GDDR5x is 12Gbps... in a 256bits bus it will give you 384GB/s

The first GDDR6 will be 16Gbps... in a 256bits bus it will give you 512GB/s.

The difference is over 100GB/s in a 256bits bus and even bigger in a 384bits bus.

ethomaz

Banned

What people are missing here is power efficiency. HBM2 (although there is a wall down the road) consumes less power than GDDR6/G5X. The whole point of it was to leave more juice for the GPU die (and reduce latency). It would also enable much smaller form factor gpus as well as better cooling capacity (more reliability).

Now, GDDR6 is likely going to be a lot cheaper and easier to hit better yields... so it'll likely win out in the end. Also team green isn't as memory reliant as AMD.

HBM2 consumes more power than GDDR5x/GDDR6.

HBM3 is supposed to fix that and be on par with GDDR5x/GDDR6, but we will see, because HBM uses a big data bus (1024bits or 2048bits) that requires more power to make the data travel across all these tunnels.

Latency is not an issue for gaming GPUs... that is why HBM looks to be more useful for P100, V100, etc.

Well...

The actual highest GDDR5x is 12Gbps... in a 256bits bus it will give you 384GB/s

The first GDDR6 will be 16Gbps... in a 256bits bus it will give you 512GB/s.

The difference is over 100GB/s in a 256bits bus and even bigger in a 384bits bus.

Yes but what is the real world impact on game framerates, framepacing etc?

ethomaz

Banned

Yes but what is the real world impact on game framerates, framepacing etc?

It depends on the power of your GPU; a faster GPU requires more bandwidth, to be fair.

GTX 1080 10Gbps = 320GB/s

GTX 1080 OC 11Gbps = 352GB/s

TITAN X = 480GB/s

TITAN XP = 550GB/s

TITAN XP with 320GB/s will probably run close to GTX 1080 because the bandwidth will be a bottleneck.

Bandwidth affects performance in games a lot.
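The bandwidth figures in that list can be reproduced from data rate and bus width. In the sketch below the bus widths and the 11.4Gbps Titan Xp memory speed are assumptions taken from public spec sheets, not from the thread, and the card labels are only dictionary keys. It checks the numbers only; it does not model frame rates or prove any bottleneck.

```python
def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gbps) x bus width (bits) / 8."""
    return data_rate_gbps * bus_width_bits / 8


# (card, per-pin data rate in Gbps, bus width in bits)
# ASSUMPTION: bus widths and the 11.4 Gbps Titan Xp rate come from
# public spec sheets, not from the posts above.
cards = [
    ("GTX 1080", 10.0, 256),
    ("GTX 1080 11Gbps", 11.0, 256),
    ("TITAN X (Pascal)", 10.0, 384),
    ("TITAN Xp", 11.4, 384),
]

bandwidths = {name: bandwidth_gb_s(rate, width) for name, rate, width in cards}
for name, bw in bandwidths.items():
    print(f"{name:<18} {bw:6.1f} GB/s")

# Share of its stock bandwidth a Titan Xp would keep if limited to the
# GTX 1080's 320 GB/s, as in the hypothetical above.
kept_share = bandwidths["GTX 1080"] / bandwidths["TITAN Xp"]
print(f"Titan Xp at 320 GB/s keeps {kept_share:.0%} of its stock bandwidth")
```

The ~550GB/s quoted for the Titan Xp is the rounded version of what this computes.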

llien

Member

HBM2 consumes more power than GDDR5x/GDDR6.

Is this known for a fact?

Anand expected HBM2 to consume less than GDDR5X, I couldn't find newer articles that go beyond rumors.

Locuza

Member

HBM2 consumes more power than GDDR5x/GDDR6.

HBM3 is supposed to fix that and be on par with GDDR5x/GDDR6, but we will see, because HBM uses a big data bus (1024bits or 2048bits) that requires more power to make the data travel across all these tunnels.

[...]

The perf/watt from HBM is far better in comparison to GDDR5, GDDR5X is a bit more efficient because it doubles the data-prefetch which allows it to drive the interface at lower clockspeed (depending on the data-rate you want of course) but it still doesn't reach the efficiency of HBM.

GDDR6 isn't much different than GDDR5X, the gap won't be bridged.

dr_rus

Member

Wouldn't you expect Navi to come later, given how AMD still struggles to release Vega?

Do they actually? Beyond some questionable rumors from last year Vega was always pegged at 2017 in their roadmaps. Most people expected it in the 1st quarter but that was nothing more than wishful thinking, and as far as we know AMD might have always planned to launch Vega a year after Polaris.

There's also the fact that the Vega-based Radeon Instinct MI25 has been available since January, pointing to the fact that there's nothing wrong with Vega itself and the most obvious reason for its late arrival is the current prices of HBM2 modules.

Since Navi will most likely use HBM2 as well this shouldn't be an issue in 2018 and thus it's unlikely that Navi will be impacted by it in the same way Vega is. So I think it's pretty safe to assume that Navi is coming around the same time as Polaris and Vega did in the previous years.

I don't expect this to happen that early. The Ti cards have generally come out only when Nvidia has been able to get good enough yields for the almost full chip of whatever generation. They usually peddle a Titan version for early adopters then halve the price and sell a Ti that can match it in performance even though not in specs.

Titan Xv - Jan'18

2080Ti - Jun'18

That gives the new Titan ~6 months of reign which is very much in line with how it was previously.

Does memory speed even make that much of a difference? GDDR5X vs GDDR6 for example.

That's a good question as so far we don't know which speed GDDR6 will launch at. The promise of 16Gbps is just that - a promise. GDDR5X had its speed promised to be 12-14Gbps and so far, almost a year later, it's only reached 11 (12 if we count some unstable OC in). So it's entirely possible that first GDDR6 cards won't be anywhere close to 16Gbps and will in fact launch at speeds closer to those promised for GDDR5X - 12-14Gbps.

In this case the speed difference between GDDR5X and GDDR6 becomes pretty much irrelevant and thus it may well be that GV104 will launch with GDDR5X and will get a GDDR6 upgrade at some point next year for example.

GDDR6 isn't much different than GDDR5X, the gap won't be bridged.

GDDR6 should run on lower voltages and thus consume less power on the same speed as GDDR5X though.

ethomaz

Banned

That's a good question as so far we don't know which speed GDDR6 will launch at. The promise of 16Gbps is just that - a promise. GDDR5X had its speed promised to be 12-14Gbps and so far, almost a year later, it's only reached 11 (12 if we count some unstable OC in). So it's entirely possible that first GDDR6 cards won't be anywhere close to 16Gbps and will in fact launch at speeds closer to those promised for GDDR5X - 12-14Gbps.

Titan XP uses Micron 12 Gbps GDDR5x underclocked from what I know... this one: https://www.micron.com/parts/dram/gddr5/mt58k256m321ja-120?pc={36AC3EF0-A201-4647-A69B-2B6A506FEEDE}

dr_rus

Member

Titan XP uses Micron 12 Gbps GDDR5x underclocked from what I know... this one: https://www.micron.com/parts/dram/gddr5/mt58k256m321ja-120?pc={36AC3EF0-A201-4647-A69B-2B6A506FEEDE}

Yeah, but there's a reason why they are underclocked on both Titan Xp and 1080 11Gbps - it's likely unstable on 12Gbps although it may be an issue of the memory controller and not memory chips. Still doesn't paint a great picture for GDDR6 as 6 is basically the same as 5X but on newer production process and with some architecture tweaks - meaning that it will likely be equally hard to run GDDR6 at top speeds.

The perf/watt from HBM is far better in comparison to GDDR5, GDDR5X is a bit more efficient because it doubles the data-prefetch which allows it to drive the interface at lower clockspeed (depending on the data-rate you want of course) but it still doesn't reach the efficiency of HBM.

GDDR6 isn't much different than GDDR5X, the gap won't be bridged.

Given that chart I don't see how GDDR6 reduces that inflation in IO power consumption, which dominates currently. It will only get worse as you drive up clock speeds or data rates.