After reading an article on PS4 Pro enhancements for Horizon Zero Dawn, I ran into a bullet point that just made me question...

Just how hard is this crap for devs to implement on consoles?

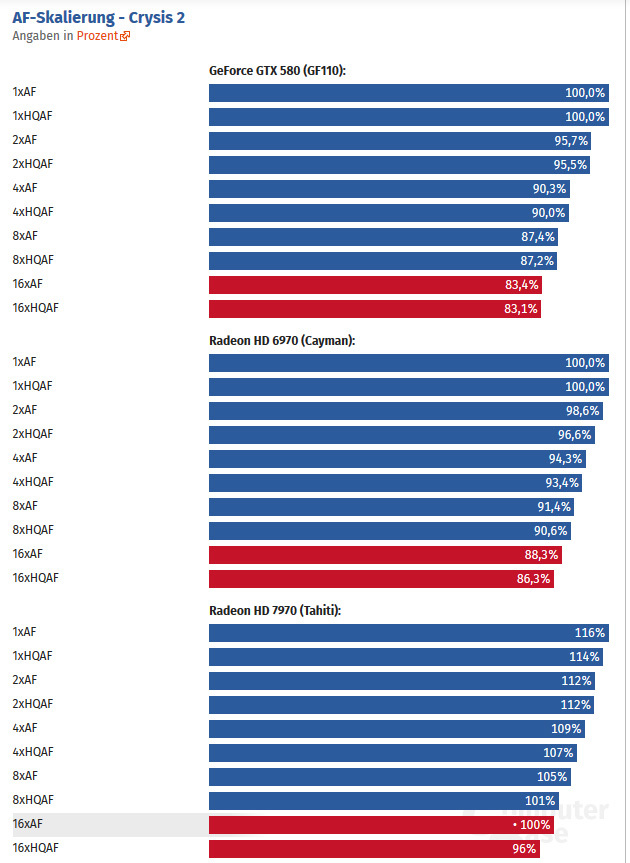

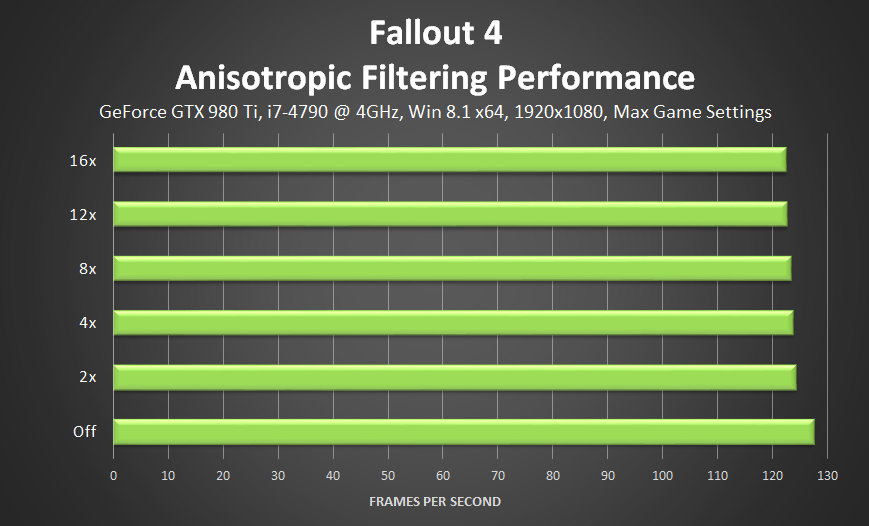

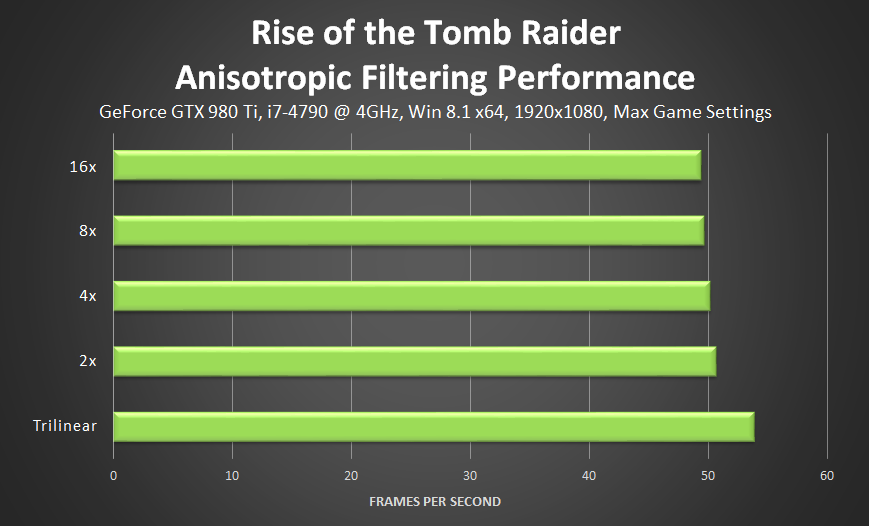

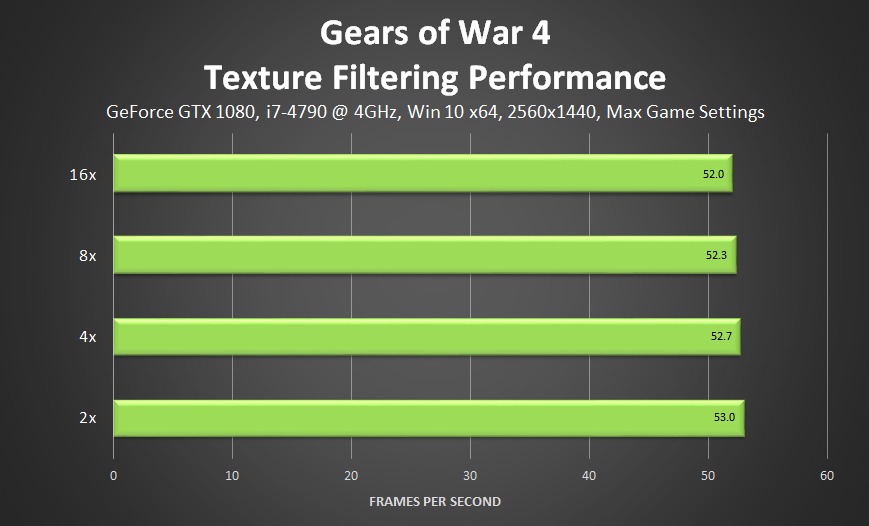

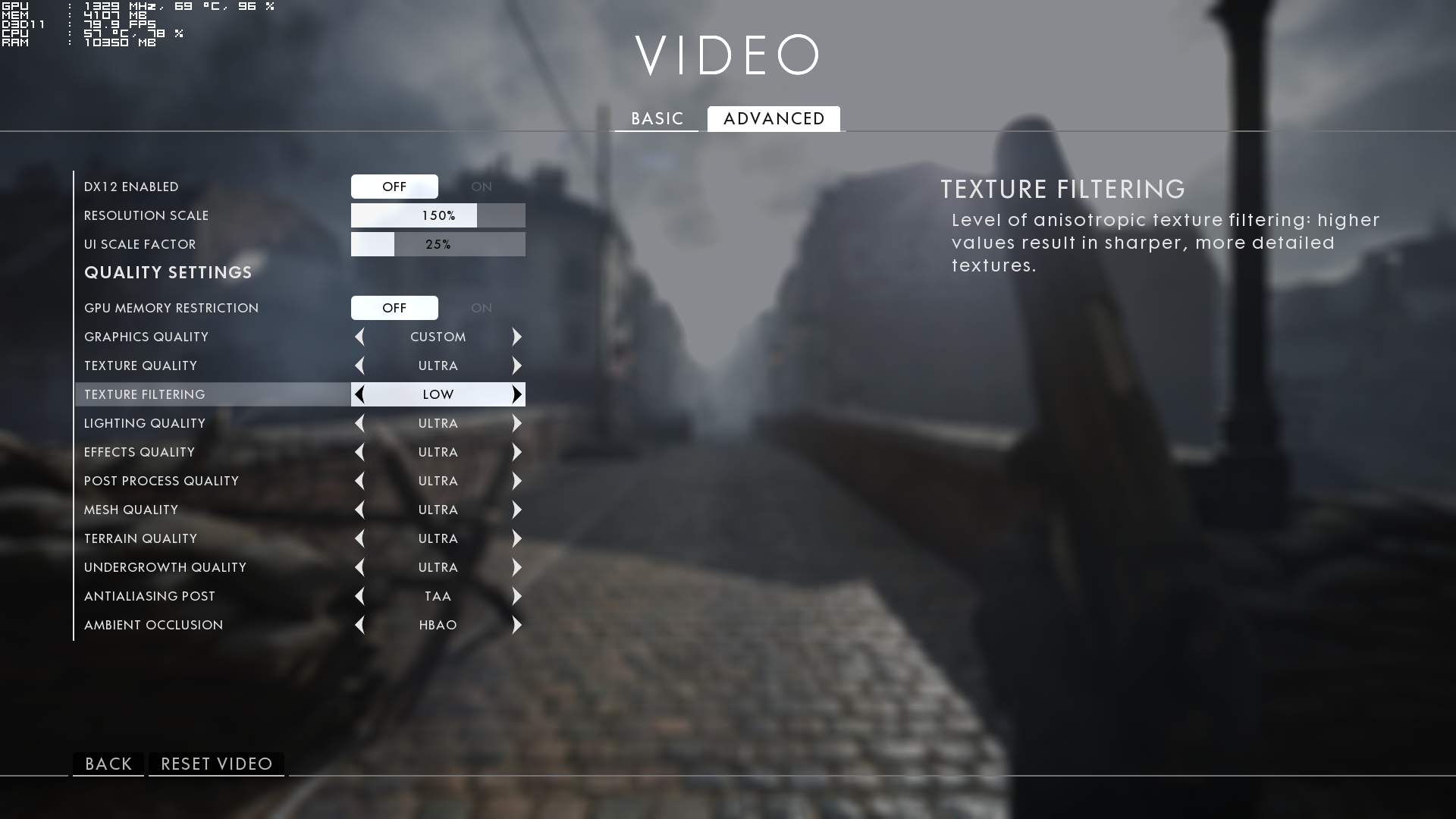

16x anisotropic filtering is super cheap and a great step up for IQ. I have been using it even on low-end cards with a 1-2 fps difference at most.

It's 2016.... This shouldn't be a thing. If anything even 8x is fine.

I'm sorry. This is getting old and consoles more than ever now can pump this out easily.

There will be shadow map and anisotropic filtering quality enhancements. This will increase the quality of texture sampling, resulting in more detailed environment textures.
