ashecitism

http://wccftech.com/gtx-960-ti-benchmarks-specs-revealed/

Supposedly a GM206 with a 128-bit bus and 2 GB VRAM for the base model, which goes hand in hand with an earlier rumor, and probably a (cut-down) GM204 or GM206 with a 256-bit bus and 4 GB VRAM for one of the Tis, which would confirm the even earlier rumor based on the Indian shipping documents.

It turns out there are currently not one, not two, but three engineering samples of the 960: the base GeForce GTX 960 and two different flavors of the GTX 960 Ti. Best of all, we have performance numbers for all three, courtesy of DG Lee over at IYD.KR.

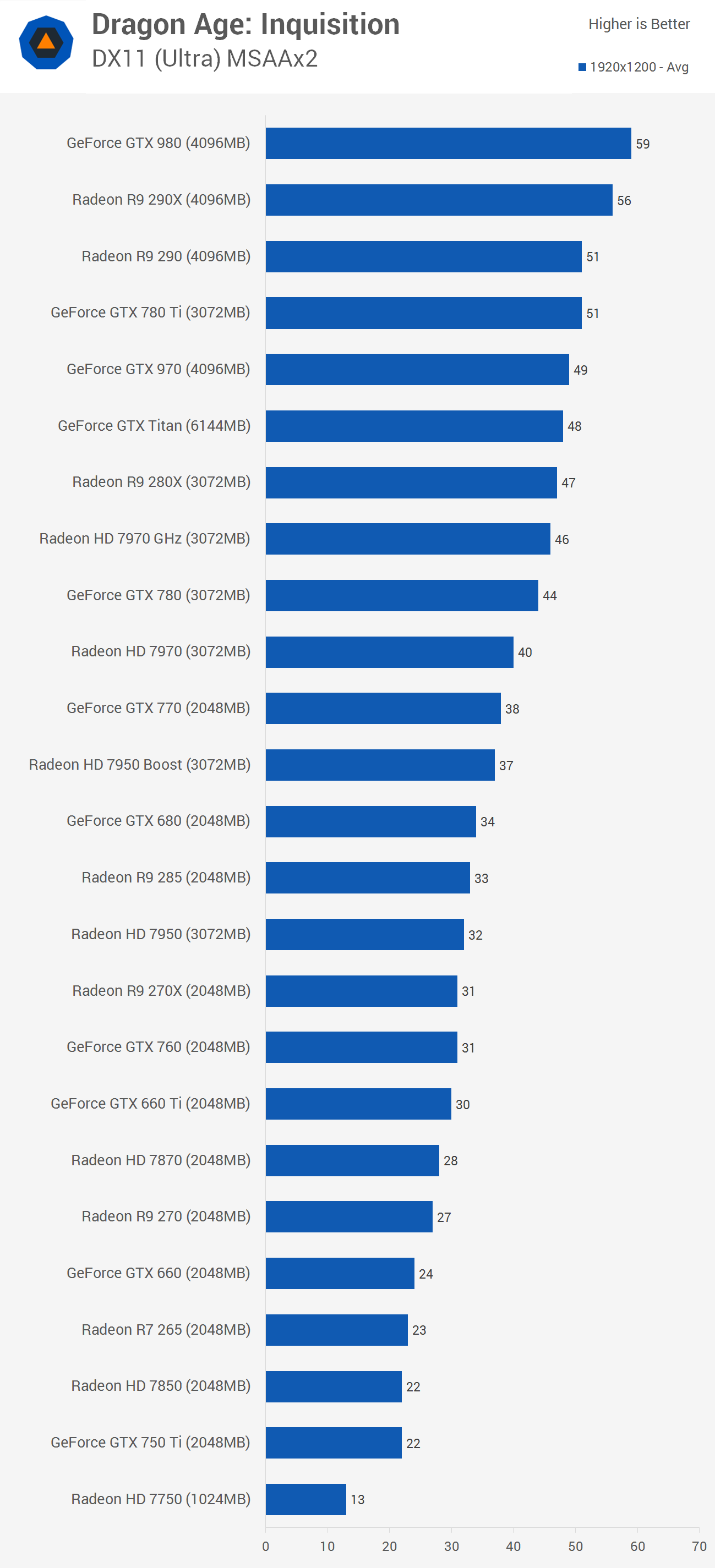

The results, however, are not that impressive. The GTX 770 wipes the floor with the base GTX 960; heck, even the R9 280 (which costs ~$200) puts the card in its place. The base 960 performs around 10% faster than the stock GTX 760, which is a barely acceptable margin as it is. The Ti variants are another story altogether, and this is where things get interesting. There are currently two GTX 960 Ti variants: one with 1280 CUDA cores and another with 1536 CUDA cores. The performance difference is huge, and I have a feeling that we are looking at a cut-down GM204 in the "Ti" cards.

There you go, folks: the first 100% confirmed benchmarks of the GTX 960 and company. As you can see, the GTX 960 sits at the lower end of the chart, just above the GTX 760. The 1280 SP variant of the GTX 960 Ti fares much better, beating the GTX 770 and the R9 280X. The 1536 SP variant of the GTX 960 Ti, on the other hand, is simply brilliant: it breezes past even a reference GTX 780. AMD's R9 290 is about 7.5% faster than the GTX 960 Ti, but given its higher price (above the $300 mark), the Ti offers superior value. One of the reasons I suspect that the GTX 960 uses a different core than the Ti variants is the huge performance gap between the three. If the base card uses a 128-bit bus, that would help explain its lackluster performance despite Maxwell's amazing compression technologies. Also, I think I can now safely say that the GTX 960 spotted at Zauba.com was a Ti variant with a 256-bit bus and 4 GB of GDDR5 memory.

Based on the current pricing of competing AMD solutions, my price estimates are as follows: ~$280-$320 for the faster GTX 960 Ti, ~$250 for the 1280 SP Ti and ~$200 for the base variant. The cards will launch on the 22nd of January, and I expect we will then find out which of the three cards, if not all of them, Nvidia decides to go forward with.

from a couple of days earlier

http://wccftech.com/nvidia-geforce-gtx-960-gm206-210/

I am by no means claiming for certain that the GTX 960 will be powered by a cut GM204, only that there is so far zero authentic evidence of the existence of a GM206 core. In fact, the GTX 960 prototype spotted on Zauba had 4 GB of GDDR5 and a 256-bit bus width, indicating a cut GM204 core. Therefore, I must admit that if the GTX 960 turns out to house the GM206 after all, it will surprise me quite a bit. Of course, there is one other possibility (scrapers: this is obviously speculation): Nvidia is prepping a GTX 960 along with a GTX 965 or GTX 960 Ti variant. In that case, the former could house the GM206 core and the latter the GM204 core. It is worth pointing out that the mobility variant, the GTX 965, already exists.