RukusProvider

Banned

Even if the CPU and GPU are roughly on par with the 360's raw power, having 1GB of RAM for gaming will go a long way. The tablet overhead is the real wild card in all of this.

Remember:

They used a video with scroll lag to show off Nintendo TVii - and I don't believe that it will have scroll lag on the day it's being released.

And now you've got screenshots with heavy jaggies. I think the final product will look slightly different.

Everything but the lighting model, yes it could. I'm not familiar with Ip5 though so maybe it can do the lighting as well. Realistically people won't begin seeing what the Wii U is truly capable of until 2nd generation games that are built from the ground up for Wii U or PC to Wii U, and only from good developers.

That conflicts with IBM Oban:

http://www.fudzilla.com/processors/item/25619-oban-initial-product-run-is-real

Honestly moving to x86 would hurt 360 backwards compatibility and it's too huge a selling point for them. I doubt all x86 rumors for XB3 and find them all wishful thinking but if you have a link to one that is compelling, I'll certainly take a look.

I always kinda thought the Oban was actually the Wii U SoC. The assumption that Oban was for MS was always just an educated guess based on prevailing rumors at the time. Back then everyone thought 720 was definitely AMD+PPC, making it a reasonable guess.

That conflicts with IBM Oban:

http://www.fudzilla.com/processors/item/25619-oban-initial-product-run-is-real

and this from just last week with rumors of delays:

http://www.ign.com/articles/2012/09/06/xbox-720-could-be-delayed-by-manufacturing-trouble

Honestly moving to x86 would hurt 360 backwards compatibility and it's too huge a selling point for them. I doubt all x86 rumors for XB3 and find them all wishful thinking but if you have a link to one that is compelling, I'll certainly take a look.

So, what is the XBox Next? SemiAccurate has been saying for a while that all signs were pointing toward a PowerPC, specifically an IBM Power-EN variant. The dark horse was an x86 CPU, but it was a long shot. It looks like the long shot came through, moles are now openly talking about AMD x86 CPU cores and more surprisingly, a newer than expected GPU. How new? HD7000 series, or at least a variant of the GCN cores, heavily tweaked by Microsoft for their specific needs.

For some reason this shot clearly lacks the DoF present in that smaller shot. DoF helps a great deal with aliasing.

This has happened before with shaders "disappearing" from different Pikmin 3 captures.

The first one sure is weird, since it says that it is surprised that it is using HD7000, while the first reports from January were also using HD7000...

Well, there is this:

http://semiaccurate.com/2012/09/04/microsoft-xbox-next-delay-rumors-abound/

& this

Both saying that there was a switch from IBM to AMD.

This is the link: http://youtu.be/tPt27Ozbc4s?t=4m3s stops at 5:09

4. 192 GCN SPs is only 3CUs... This is much much lower than anyone expects out of these consoles... GFLOPs performance would at 800MHz be ~120% of Xbox360 (192*.800*2=307.2 GFLOPs)

In this case they are going to be paired with a discrete GPU.

I am saying it shouldn't matter to you that I call that person disingenuous for reasons stated in my first reply. I assumed that you were taking issue with me making that claim.

If you don't mind, who are the two, what company do they work for, and what did they say?

The first one sure is weird, since it says that it is surprised that it is using HD7000, while the first reports from January were also using HD7000...

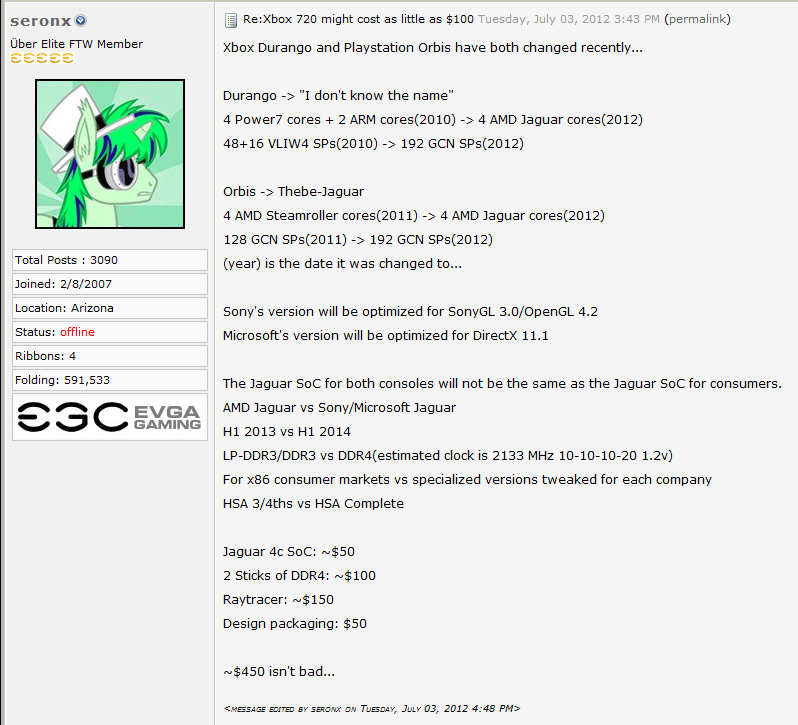

As for the second one, I don't know who Seronx is, but if his information is accurate:

1. No backwards compatibility for 360 or PS3 is basically confirmed.

2. Jaguar cores, even custom ones, would still be based on the Jaguar design, which is a low-frequency part... Likely couldn't be clocked to even 2.5GHz. (Jaguar parts currently target sub-2GHz speeds)

3. All AMD CPUs lack any SMT, so it's 1 thread per core, meaning both these consoles would lack the extra 2 threads found in current gen hardware.

4. 192 GCN SPs is only 3CUs... This is much much lower than anyone expects out of these consoles... GFLOPs performance would at 800MHz be ~120% of Xbox360 (192*.800*2=307.2 GFLOPs)

For these reasons, I highly doubt he is a good source, but if he is, Wii U > XB3/PS4 so make sure you have your preorder.
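If anyone wants to check the arithmetic in point 4, here's a quick sketch. The 64 SPs per CU figure is how GCN compute units are organized. The 240 GFLOPs baseline for the 360's GPU is the commonly quoted number and is my assumption here, not something from the rumor.

```python
# Rough peak-throughput arithmetic for the rumored 192-SP GPU.
# Assumptions (not part of the rumor): 64 stream processors per GCN compute
# unit, and 240 GFLOPs as the commonly quoted Xbox 360 GPU peak.

SPS_PER_CU = 64
XBOX360_GFLOPS = 240.0  # assumed baseline for comparison


def compute_units(stream_processors):
    """Number of whole compute units for a given SP count."""
    return stream_processors // SPS_PER_CU


def peak_gflops(stream_processors, clock_ghz):
    """Peak GFLOPs, counting one multiply-add (2 FLOPs) per SP per clock."""
    return stream_processors * clock_ghz * 2


rumored = peak_gflops(192, 0.8)
print("CUs:", compute_units(192))
print("Peak GFLOPs:", round(rumored, 1))
print("Ratio vs 360:", round(rumored / XBOX360_GFLOPS, 2))
```

With that baseline the ratio comes out nearer 128% than 120%, but it's the same ballpark either way, and these are peak numbers only.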

Is that the $150 raytracer he lists? Is the guy an insider? Because I have a hard time believing that Microsoft would walk away from their backwards compatibility, and move to a weaker CPU, when all the reports we were getting said the complete opposite.

I watched the NL footage, that wasn't impressive or anything current gen couldn't do.

These aren't, either.

The change to x86 AMD on PS4/Xbox720 will make straight ports to the Wii U even more tricky. Not to mention that software will need to be re-engineered to use far fewer hardware threads on Wii U.

Which makes me think publishers will continue to treat the Wii U as a last gen console; recycle all their older PS3/360 tech/tools/engines and put their junior teams on Wii U games. Just like they did on the Wii.

Yeah, the BC thing troubles me about Durango going x86. Especially since I think one of the recent rumors I trusted said MS was very concerned with BC.

Maybe they can do emulation. Maybe they don't care and ditch it. Maybe they are actually including a mini 360 chipset, as in the leaked docs (this would be awful imo).

Wii U is a 3 core CPU, with the possibility that it uses 6 threads. With its own ARM CPU for OS and a DSP for sound, Wii U is well equipped to only have 3 threads and might have 6.

Jaguar quad cores have a 100% chance of having 1 thread per core, so you are talking about 1 fewer thread at worst, and Wii U actually having 2 extra threads at best.
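Spelled out as a quick sketch, in case the thread math isn't obvious. The Wii U numbers are just the two cases described above (3 cores with 1 or 2 threads each), not confirmed specs.

```python
# Hardware-thread comparison between a quad-core Jaguar and the Wii U CPU.
# The Wii U cases are the speculative ones from the post, not confirmed specs.

def hardware_threads(cores, threads_per_core):
    """Total hardware threads for a CPU."""
    return cores * threads_per_core


jaguar_quad = hardware_threads(4, 1)  # no SMT: 1 thread per core
wiiu_worst = hardware_threads(3, 1)   # 3 cores, no SMT
wiiu_best = hardware_threads(3, 2)    # 3 cores, 2 threads each

print("Wii U vs Jaguar, worst case:", wiiu_worst - jaguar_quad)
print("Wii U vs Jaguar, best case:", wiiu_best - jaguar_quad)
```

This only counts threads; it says nothing about how fast each one is.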

Jaguar also shouldn't be able to compete with a POWER7 architecture, even one shrunken down. Jaguar cores are built for tablets and netbooks. Not laptops; those would be Trinity and its successor.

This rumor is highly unlikely IMO. Who here even wants it to be true? Jaguar cores mean 0% chance of backwards compatibility.

How about an SKU ~50 bucks more expensive that has BC? So $399 for the standard SKU, then $450 or $499 for one with BC and some other random crap/accessories.

I've got a question I always wonder about whenever somebody says that images will have to be rendered twice on Wii U. Is there a way for the image to be rendered only once and just shown twice, which would require less processing power?

http://www.youtube.com/watch?v=jDeDUZGhJA4

So here NBA 2k13 is being shown both on the TV and the Gamepad at the same time at all times. Does this mean the game is being rendered twice or is it some other trickery?

People on GAF only read:

"Jaguar 4 core amd x86 cpu" and start orgasming over it without checking what that would actually mean. Most people on GAF have no clue about specs yet participate in specs threads. Wii U hardware/specs threads should have taught you that.

You can have a scene rendered once and sent to both screens, yes.

If it's the same image on both screens it shouldn't be rendered twice, just downscaled on the controller screen... I believe.

This rumor is highly unlikely IMO, who here even wants it to be true? jaguar cores means 0% chance of backwards compatibility.

Yeah but....Jaguars! They've got claws n shit! Rawr!

I don't think they saw the "Jaguar" part.

i'm an armchair system designer at best but i think 8 jag cores might be pretty sweet.

that's a lot of cores that can do a lot of different things. dedicate 2-3 to os? you've still got 5-6 left over. i'm guessing devs can do some cell-like gpu helping with all those cores too, if they want.

lots of symmetric OoOE general purpose cores might be pretty cool imo.

You still need to allocate resources to scale the image, as well as some memory for the controller's frame buffer.
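To put a rough number on that, here's a toy sketch of "render once, reuse for the second screen". The 854x480 figure is the GamePad screen resolution. The 1280x720 TV target and 4 bytes per pixel are my assumptions for illustration, and the nearest-neighbour scaler is a stand-in for whatever the real hardware does.

```python
# Toy sketch: render one frame, then downscale it for the second screen.
# Assumptions: a 1280x720 TV render target and 4 bytes per pixel are
# illustrative guesses; 854x480 is the GamePad screen resolution.

TV_W, TV_H = 1280, 720
PAD_W, PAD_H = 854, 480


def framebuffer_bytes(width, height, bytes_per_pixel=4):
    """Memory needed to hold one uncompressed frame."""
    return width * height * bytes_per_pixel


def downscale_nearest(frame, out_w, out_h):
    """Nearest-neighbour resample of a frame stored as a list of pixel rows."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


pad_bytes = framebuffer_bytes(PAD_W, PAD_H)
print("GamePad frame buffer:", pad_bytes, "bytes")
print("TV frame buffer:", framebuffer_bytes(TV_W, TV_H), "bytes")

# A 4x4 stand-in "frame" reduced to 2x2, just to show the resampling step.
tiny = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
print(downscale_nearest(tiny, 2, 2))
```

Under those assumptions the extra buffer is around 1.6 MB per frame, so mirroring costs a scaling pass and a little memory rather than a second full render.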

Not really.

Using a 4 core Trinity would give a massive power boost and would be far more suitable for a console than anything Jaguar.

I wonder if that could be related to how they're taking screenshots and some smoothing filter or AA being applied via post processing or something? (note: no idea what I'm talking about here!)

I believe the screenshot tool in Steam sometimes works in a similar way, in that it doesn't capture some post-processing effects or whatever, which makes screenshots look different from how the game actually looks while you're playing.

I say this (not only to start my third paragraph in a row with a stupid "I..." sentence) because I haven't noticed any jaggies in actual gameplay footage of those NintendoLand games.

I'm a leg rest system designer, we should get together.

I heard the Wii U is powered by magic, hey I should make a thread!

The magic of the Wii U: open it up to see!!!!

Meh, still too sparse to work out its performance. We need number of shaders, clockspeeds, cache amounts etc.

Still, using a 13-year-old CPU design for a modern console is extremely embarrassing though. I'm assuming that the in-game OS uses the 32MB of memory.

Check this out. All signs point to the NoA build simply being early or missing effects/post processing for whatever reason. The picture below is from NoJ.