Facebook has updated its suicide prevention tools and is now making them available worldwide.

The tools, which let people flag posts from friends who may be at risk of self-harm or suicide, were previously available only to some English-language users. Other users could report posts through a form, but the new tools make the process quicker and less complicated.

In an announcement, Facebook said its suicide prevention resources will be available in all languages supported by the platform. The company’s global head of safety, Antigone Davis, and researcher Jennifer Guadagno wrote that the tools were “developed in collaboration with mental health organizations and with input from people who have personal experience with self-injury and suicide.”

The tools were first made available to some users in the United States last year with the help of Forefront, Lifeline, and Save.org. Facebook said it will continue to partner with suicide prevention and mental health organizations in different countries.

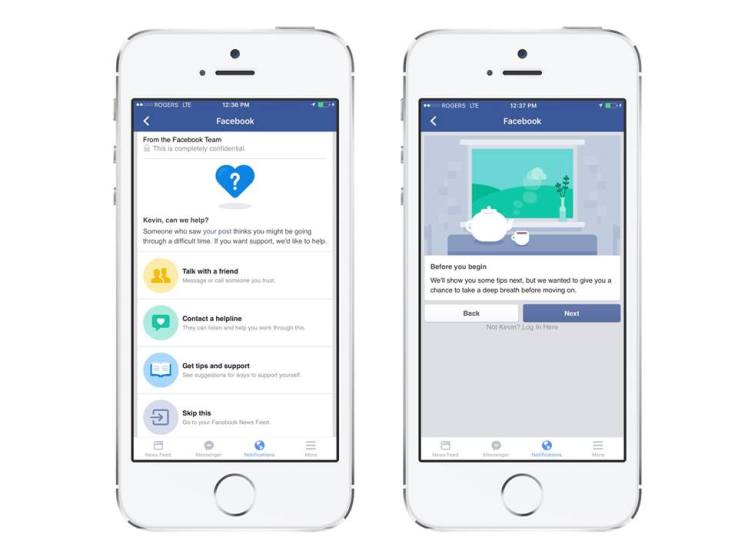

Users everywhere will soon be able to flag a friend’s post from a drop-down menu if they are worried about self-harm or suicide. Facebook gives them several options. For example, they can anonymously share a list of resources, including numbers for suicide prevention organizations, or send a message of support (Facebook suggests wording).

The post may also be reviewed by Facebook’s global community operations team, which may then “reach out to this person with information that might be helpful to them,” according to its Help Center. If someone is at immediate risk of hurting themselves, however, Facebook warns that police should be contacted.

Facebook’s suicide prevention tools may help save lives—or at least raise awareness of an important issue.

Increasing rates of suicide around the world mean that it has become a public health crisis in many countries. In the U.S., suicide rates are at their highest in three decades, particularly among men of all ages and women aged 45 to 64.

The company, however, has to balance suicide prevention with the privacy concerns of its 1.65 billion monthly active users—especially since Facebook posts are already seen as a treasure trove of research data by many psychologists. Facebook itself was forced to apologize in July 2014 for conducting psychological experiments on users.

In fall 2014, United Kingdom charity Samaritans suspended its suicide prevention app, which let users monitor their friends’ Twitter feeds for signs of depression, just one week after its launch, following concerns about privacy and its potential misuse by online bullies.

TechCrunch has contacted Facebook for comment on how it will balance helping people with respecting their privacy.