I'm not sure that's right. The RX 480 never drops below 60fps in DX12 and maintains a much bigger lead over the 1060. There's no way its frametimes are worse in DX12. Even in DX11 the RX 480 does not fall below 60fps, but you can see the 1060 going below that in DX12. If you're playing this game on the RX 480, DX12 is definitely the best way to play; the opposite is true for the 1060.

Or perhaps how bad NV's DX12 performance has been overall... I do agree that their DX11 performance is amazing, and I wish AMD had caught up. AMD has made some strides in that department, with some gains in older DX11 titles on their hardware, but obviously Nvidia is still ahead. In the case of the GTX 1060, I see two things:

1.) It appears a bit more powerful than the RX 480 in most DX11 titles.

2.) The RX 480 appears a bit more powerful in most DX12 titles.

We'd only get a clear answer as to which hardware is better if AMD's DX11 drivers were better and NV's hardware were more in tune with DX12. I do believe AMD's hardware is more future-proof here, but there's no doubt NV knocked it out of the park with their DX11 drivers.

That is not a bad thing, because Zen has much improved single-core performance over previous AMD chips and it will be packing many cores as well. There's no doubt that Battlefield would pull in more CPU resources in MP, though.

Do you have the graphs for the Fury vs. 380 MP benches?

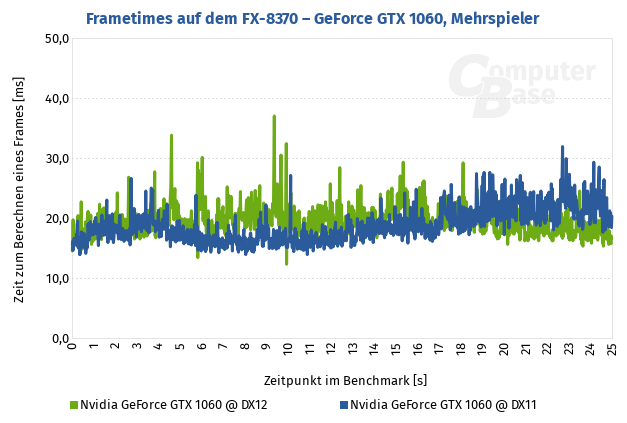

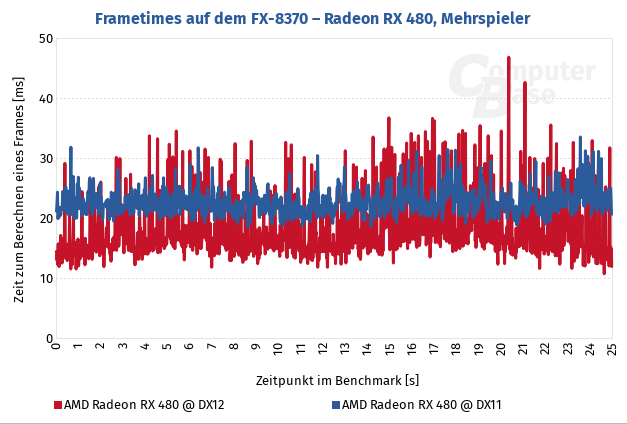

Durante linked the frametime charts just a few posts above us. You can see the difference in frametimes between DX11 and DX12 for the RX 480 when paired with an FX-8370.

The RX 480's DX12 frametimes are all over the place, ranging from notably lower to notably higher than DX11. Overall it's a much spikier experience, even if average frame rates are higher on DX12. The GTX 1060 actually has less pronounced frametime spikes in DX12 than the RX 480; however, it still spikes more than it does in DX11. In both cases, DX11 provides a much smoother experience, even if DX12 can occasionally deliver higher FPS in some scenes.
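To show how a higher average FPS and a spikier experience can coexist, here's a rough Python sketch. The two traces are made-up numbers for illustration only, not the computerbase results, and `summarize` is just a hypothetical helper name. It assumes you have per-frame times in milliseconds and uses a simple nearest-rank 99th percentile.

```python
from statistics import mean


def summarize(frametimes_ms):
    """Return average FPS, 99th-percentile frametime, and worst frametime."""
    ordered = sorted(frametimes_ms)
    # Nearest-rank percentile: rank = ceil(0.99 * n), computed with integer math.
    rank = max(1, -(-99 * len(ordered) // 100))
    return {
        "avg_fps": 1000.0 / mean(frametimes_ms),
        "p99_ms": ordered[rank - 1],
        "worst_ms": ordered[-1],
    }


# Hypothetical traces (illustrative values, not measured data).
smooth = [16.0] * 100               # steady 16 ms per frame
spiky = [12.0] * 95 + [50.0] * 5    # mostly faster frames, plus a few big spikes

for name, trace in (("smooth", smooth), ("spiky", spiky)):
    stats = summarize(trace)
    print(f"{name}: {stats['avg_fps']:.1f} avg fps, "
          f"p99 {stats['p99_ms']:.1f} ms, worst {stats['worst_ms']:.1f} ms")
```

In this toy case the spiky trace wins on average FPS but loses badly on the 99th-percentile and worst-case frametimes, which is the pattern described above.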

And you're quick to blame Nvidia for the DX12 performance issues, when those issues are much more in the hands of the game developers than NV/AMD nowadays. It's much harder for NV to ship a driver update that can work around crappy developer code in DX12 or Vulkan when their DX12/Vulkan drivers don't handle as many responsibilities as the drivers for older APIs did.

AMD gets away with looking better in crappy DX12 implementations because their DX11 drivers were poor enough, particularly with regard to CPU overhead, that even bad DX12 paths are as good as or better than their DX11 paths.

Nvidia gets shafted on crappy DX12 implementations because their DX11 drivers perform so well that it's much easier for performance regressions to appear.

https://www.computerbase.de/2016-10...gramm-battlefield-1-auf-dem-fx-8370-1920-1080 - Here's the link for the FX CPU performance. The game is horribly, horribly bottlenecked in MP on an FX CPU. It's so bottlenecked that the Fury X and GTX 1080 are CPU-bound even at 4K, which is basically unheard of.