Do we have any idea how current these specs are? Are these specifications for the proposed Durango chipset a week, a month, a year, or even more out of date?

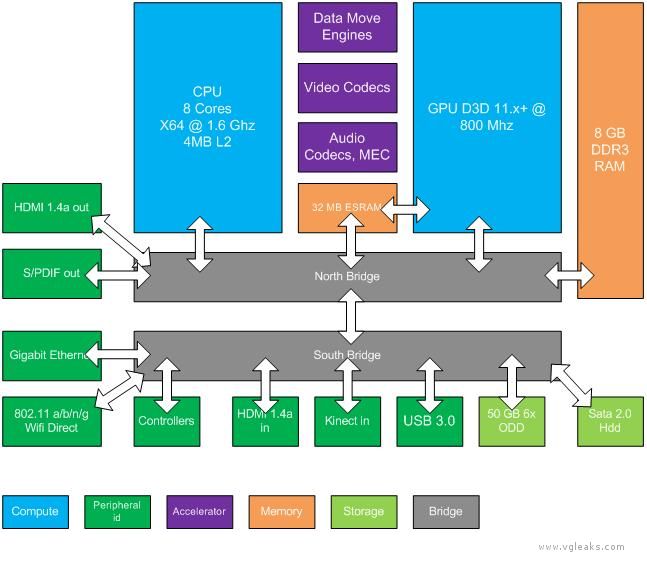

Also, this strongly supports the idea that Kinect 2.0 will ship with the system. Microsoft is making it an integral part of the console, which will have dedicated hardware for it. It will still be a camera you set up somewhere in your play space. Just because it says "Kinect In" doesn't mean it's a peripheral per se; it just shows that the console won't have the Kinect hardware (i.e. the cameras and audio equipment) built in, just like the current Kinect.

Also, this strongly supports the idea of the Kinect 2.0 shipping with the system. Microsoft is making it an integral part of the system that will have dedicated systems for it. It will continue being a camera you set up somewhere in your play space. Just because it says "Kinect In" doesn't mean it's a peripheral per say, it just shows that the console will not have the kinect hardware (i.e. cameras and audio equipment) baked in, it's just like the current connect is.