Cow Mengde

Banned

Games vary widely in terms of how demanding they are on the CPU. Rogue Leader, Beach Spikers, Last Story and Xenoblade are far beyond Mario Kart.

Yeah, my i5 6300 can't run Rogue Leader well in some stages.

That's not an argument I remember making at all. I know they're not going to overclock better.

Heh, I didn't mean to imply you had. That wasn't a straw man or anything. Only a random, semi-related BTW comment I had wanted to post sooner and not a response to anything you had mentioned.

Those results are pretty impressive, as they represent a 22.7% gain at 4K resolution, a massive 31.2% gain at 1440P and a very nice 26.9% gain at 1080P. Keep in mind that we are testing with a stock-clocked AMD Ryzen 7 1700 processor with DDR4 2933MHz memory with CL14 timings!

Here is what Stardock and Oxide CEO Brad Wardell had to say about the game update in AMD's press release:

"I've always been vocal about taking advantage of every ounce of performance the PC has to offer. That's why I'm a strong proponent of DirectX 12 and Vulkan because of the way these APIs allow us to access multiple CPU cores, and that's why the AMD Ryzen processor has so much potential," said Stardock and Oxide CEO Brad Wardell. "As good as AMD Ryzen is right now – and it's remarkably fast – we've already seen that we can tweak games like Ashes of the Singularity to take even more advantage of its impressive core count and processing power. AMD Ryzen brings resources to the table that will change what people will come to expect from a PC gaming experience."

The good news is that AMD and Oxide aren't done making Ryzen optimizations, as they think there are still more things that can be done to squeeze even more performance out of the architecture with more real-world tuning. Now that AMD has released Ryzen and game developers have plenty of chips to use, they can look at the data from their instruction sequences and make some simple code changes that offer substantial performance gains. Game developers are able to use performance profilers like Intel VTune Amplifier 2017 to get the most performance possible from their multi-threaded processors, and AMD hasn't developed a tool like that for Ryzen just yet. Hopefully, the performance numbers that you see here are just the start and more game titles will be optimized. This is going to be a huge task for AMD developer relations, but we know the North American Developer Relations team is being pretty aggressive.

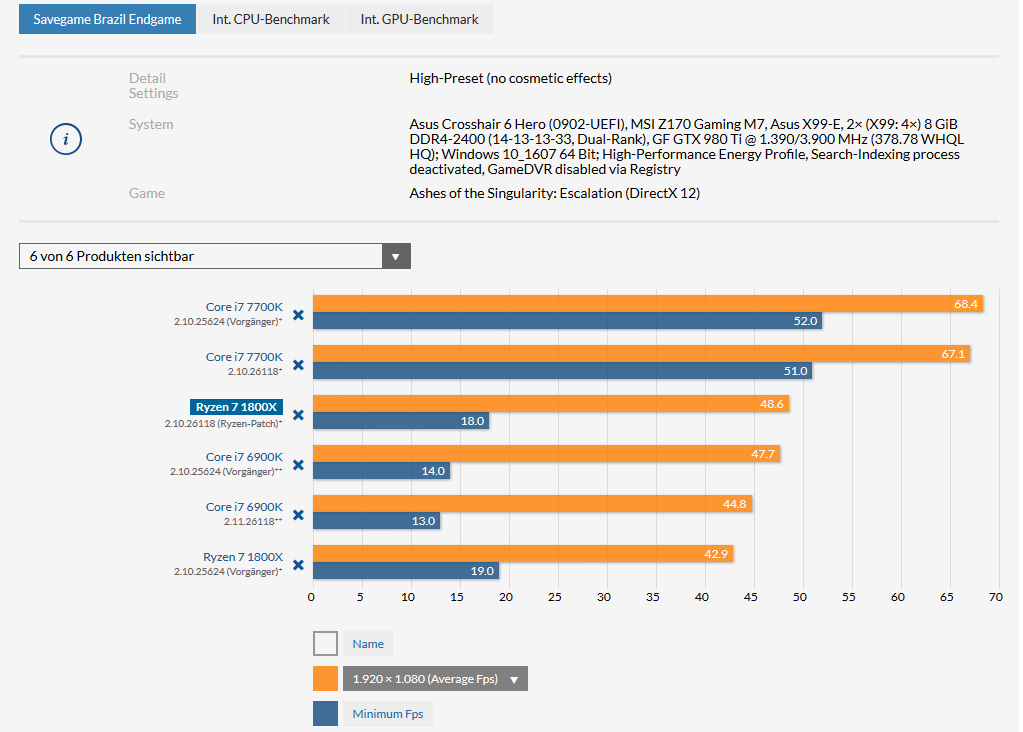

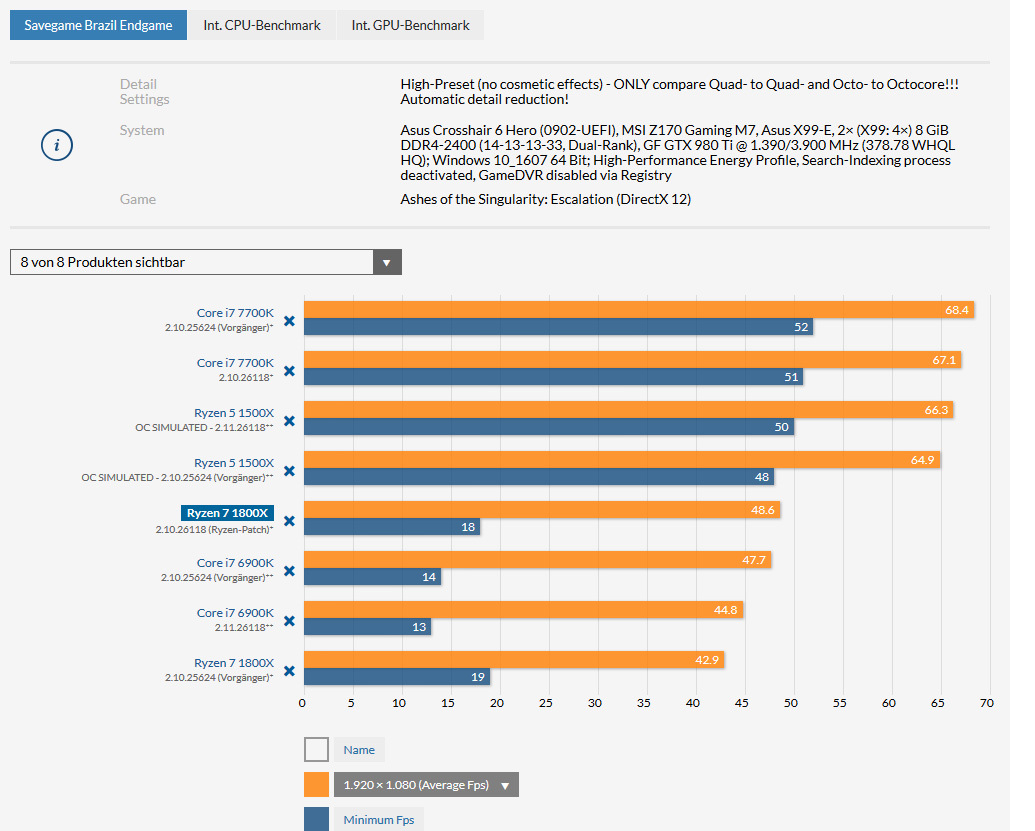

These are substantial performance improvements with the new engine code! At both 2400 MHz and 3200 MHz memory speeds, and at both High and Extreme presets in the game (all running in DX12 for what that's worth), the gaming performance on the GPU-centric benchmark is improved. At the High preset (which is the setting that AMD used in its performance data for the press release), we see a 31% jump in performance when running at the higher memory speed and a 22% improvement with the lower speed memory. Even when running at the more GPU-bottlenecked state of the Extreme preset, that performance improvement for the Ryzen processors with the latest Ashes patch is 17-20%!

So what exactly is happening to the engine with v26118? I haven't had a chance to have an in-depth conversation with anyone at AMD or Oxide yet on the subject, but at a high level, I was told that this is what happens when instructions and sequences are analyzed for an architecture specifically. "For basically 5 years", I was told, Oxide and other developers have dedicated their time to "instruction traces and analysis to maximize Intel performance", which helps to eliminate poor instruction setup. After spending some time with Ryzen and the necessary debug tools (and some AMD engineers), they were able to improve performance on Ryzen without adversely affecting Intel parts.

Well Oxide specifically coded their game around that CCX design, we're not sure if many other developers will do the same (I trust DICE will though).

Luckily it's done on an engine level. So the engine itself needs to be optimized for it.

BTW is isolating to one CCX the ONLY solution around this Ryzen issue? I kinda want games to use all available threads

B-but the CCX design...

That's massive. Anyone with any sense should have known that the R7s had way more to give when in some cases the CPU load was 50% during some game benches. Once you whack in 3200MHz+ RAM you'd get performance indistinguishable from a 7700K. Who'd have thought?

Some were trying to imply that there was no way around the CPU Complex design being detrimental to performance. 400 hours of work to optimize for Ryzen is all it took, apparently. That's like a team of 5 people working over a few weeks. And bang, you get a 20-30% increase in fps, matching Intel's HEDT and with more performance to come. Of course, if devs don't bother with optimizations you get lower performance in some games, but why would they not, if the optimizations are fairly trivial?

There are publishers that have very poor post-release support. Not gonna be getting Ryzen patches from these.

It's almost like this is exactly what people have been saying; that the problem with the CCX design is that many applications/games will need to optimize specifically for Ryzen CPUs rather than just making an application/game that is well multi-threaded.

The problem with an architecture which requires this is that not every developer is going to do it.

Hopefully at least Frostbite/Unreal/Unity will all be optimized for it, so games a year or two from now might start shipping with good support.

It's only natural to be honest and I wouldn't consider it a problem with the CCX design. Intel has benefited from sticking with a similar design for a long time, which means that compilers and engines won't need to be adjusted to take advantage of Intel's design with each new Intel CPU release.

I think that AMD made a smart decision to start from the ground-up even if that meant that they lose previously done optimization in software.

I found a guide with details on base clock overclocking for the Crosshair VI, which states that the PCIe link speed will operate at Gen 3 speeds from 85 to 104.85 MHz, Gen 2 speeds from 105 to 144.8 MHz, and Gen 1 speeds at 145+ MHz.

So the fastest you can run the memory at while still keeping PCIe Gen 3 speeds is ~3050MT/s right now, since the 32x multiplier is still unstable.
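
For anyone who wants to play with the numbers, here's a rough sketch of that reasoning. It takes the ranges quoted above as 85 to 104.85 MHz for Gen 3, 105 to 144.8 MHz for Gen 2, and 145 MHz and up for Gen 1. It also assumes the highest usable memory ratio is the 29.33x (DDR4-2933) one, which is my assumption and not something the guide states. The function names are made up for illustration.

```python
# Assumption: the highest stable memory ratio is the DDR4-2933 one (29.33x),
# because the 32x ratio is described as unstable. Not taken from the guide.
ASSUMED_MEMORY_RATIO = 88 / 3  # 29.33x


def pcie_gen_for_bclk(bclk_mhz):
    """Return the PCIe generation for a base clock in MHz, or None when
    the quoted ranges do not cover that value."""
    if 85 <= bclk_mhz <= 104.85:
        return 3
    if 105 <= bclk_mhz <= 144.8:
        return 2
    if bclk_mhz >= 145:
        return 1
    return None


def effective_memory_rate(bclk_mhz, ratio=ASSUMED_MEMORY_RATIO):
    """Effective memory transfer rate in MT/s for a base clock and ratio."""
    return bclk_mhz * ratio


if __name__ == "__main__":
    for bclk in (100.0, 104.0, 104.85, 110.0, 150.0):
        gen = pcie_gen_for_bclk(bclk)
        rate = round(effective_memory_rate(bclk))
        print(f"BCLK {bclk} MHz: PCIe Gen {gen}, about {rate} MT/s")
```

With these assumptions a base clock of about 104 MHz still lands in the Gen 3 range and works out to roughly 3050 MT/s, which lines up with the figure above.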

BTW is isolating to one CCX the ONLY solution around this Ryzen issue?

No, disabling the Windows scheduler's thread migration by using CPU affinity is the only solution (unless Microsoft ever gets around to making the scheduler saner). Inter-CCX latency is not that bad unless you are forced to repeatedly migrate/rebuild caches due to a forced thread migration "just because".
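
To make the affinity idea concrete, here's a minimal sketch of restricting a process to a fixed set of logical CPUs so the scheduler can't bounce it between CCXes. It uses Python's `os.sched_setaffinity`, which only exists on Linux; on Windows the equivalent Win32 calls would be `SetProcessAffinityMask` / `SetThreadAffinityMask`. The CPU numbering (8 logical CPUs per CCX, numbered contiguously) is an assumption for an 8-core/16-thread part and has to be checked against the real topology. `ccx_cpus` and `pin_to_ccx` are hypothetical helper names.

```python
import os


def ccx_cpus(ccx_index, cores_per_ccx=4, threads_per_core=2):
    """Logical CPU ids assumed to belong to one CCX.

    Assumption: logical CPUs are numbered contiguously per CCX. Real
    systems may number SMT siblings differently, so verify first.
    """
    per_ccx = cores_per_ccx * threads_per_core
    start = ccx_index * per_ccx
    return set(range(start, start + per_ccx))


def pin_to_ccx(ccx_index, pid=0):
    """Restrict a process (0 = the caller) to one CCX's logical CPUs.

    Returns the CPU set that was applied, or None if the platform has no
    sched_setaffinity or none of the wanted CPUs are available.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    target = ccx_cpus(ccx_index) & os.sched_getaffinity(pid)
    if not target:
        return None
    os.sched_setaffinity(pid, target)
    return target


if __name__ == "__main__":
    # Only prints the planned sets; call pin_to_ccx(0) to actually apply one.
    print("CCX 0:", sorted(ccx_cpus(0)))
    print("CCX 1:", sorted(ccx_cpus(1)))
```

Note this pins a whole process to one CCX, which is the blunt version. The point made above is more about stopping needless migration, so pinning individual threads to individual cores would be closer to it.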

So do we have a thread for recommended RAM/mobo for the 1600X? Or should we ask here?

Actually, the issue in that Ashes of the Singularity benchmark is that they were bypassing the cache by using instructions that are specifically designed for data that will not be reused.

If that's the only change, it's interesting that Intel's 6900K barely shows an improvement in the above benchmark. Is that an indication that Intel still caches the data despite the instruction not to? Or is this about cache writes, not reads?

This doesn't make any sense, you are literally the person that should be buying into HEDT. Why build two machines just to have AMD when you can build a single 6900K machine which breathes fire in both games and workstation applications, especially if you're going to overclock it until it cries? What do you specifically need ECC for?

If you really do need ECC, there's also this:

https://www.asus.com/Motherboards/X99E_WS/specifications/

X99 with ECC support. Put a 6900K in it. Have fun!

That's not how it works, unfortunately. You need a Xeon for that ECC to work. You can thank Intel for that.

Funny thing about compilers: usually Intel's is the fastest one, and it has code to gimp AMD, even not execute on it... but it can be patched out. I wonder how many people use ICC vs GCC vs Clang.

Using all three here.

B-but this is exactly what you should expect from a CCX design - a lot of performance can be gained via software optimization on such a NUMA system. The AotS example is perfect for illustrating this.

Sigh. It's not about how you spread the threads, it's about THE FACT that threads of some nature will ALWAYS need to access some data in a "far" L3 of the other CCX. This is a hardware problem; it can't be fixed with the OS or anything else, only worked around to some degree. Also - where can we see the results of Linux running the same software on Ryzen better than Windows? Even AMD has said already that there are no issues in how Windows 10 scheduling works on Ryzen.

Dota 2 was updated (likely CPU affinity stuff):

- Fixed the display of particles in the portrait window.

- Fixed Shadow Fiend's Demon Eater (Arcana) steaming while in the river.

- Fixed Juggernaut's Bladeform Legacy - Origins style hero icons for pre-game and the courier button.

- Improved threading configuration for AMD Ryzen processors.

- Workshop: Increased head slot minimum budget for several heroes.

http://store.steampowered.com/news/28296/

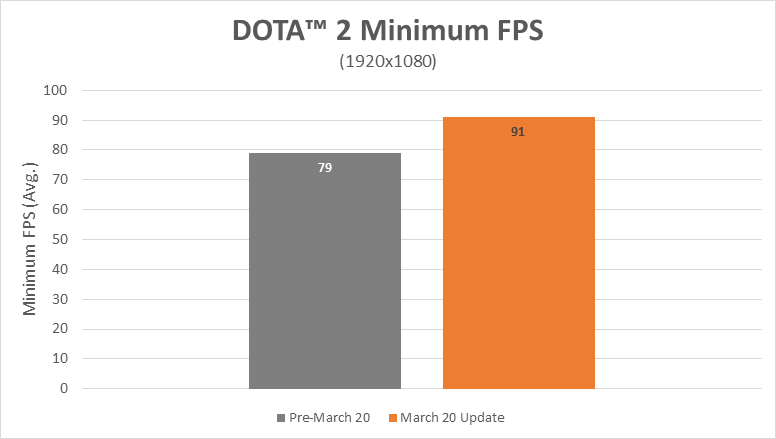

Players report gains of 20-25%.

Boosting minimum framerates in DOTA 2

Many gamers know that an intense battle in DOTA 2 can be surprisingly demanding, even on powerful hardware. But DOTA has an interesting twist: competitive gamers often tell us that the minimum framerate is what matters more than anything in life or death situations. Keeping that minimum framerate high and steady keeps the game smooth, minimizes input latency, and allows players to better stay abreast of every little change in the battle.

As part of our ongoing 1080p optimization efforts for the AMD Ryzen processor, we identified some fast changes that could be made within the code of DOTA to increase minimum framerates. In fact, those changes are already live on Steam as of the March 20 update!

We still wanted to show you the results, so we did a little A:B test with a high-intensity scene developed with the assistance of our friends in the Evil Geniuses eSports team. The results? +15% greater minimum framerates on the AMD Ryzen 7 1800X processor, which lowers input latency by around 1.7ms.

Not bad for some quick wrenching under the hood, and we're continuing to explore additional optimization opportunities in this title.

System configuration: AMD Ryzen 7 1800X Processor, 2x8GB DDR4-2933 (15-17-17-35), GeForce GTX 1080 (378.92 driver), Gigabyte GA-AX370-Gaming5, Windows® 10 x64 build 1607, 1920x1080 resolution, tournament-optimized quality settings - https://community.amd.com/servlet/JiveServlet/download/1455-69711/DOTA2FPSTesting.pdf
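
A quick back-of-the-envelope check on how a 15% higher minimum framerate relates to a 1.7ms latency drop. This sketch assumes the latency saving is simply the reduction in frame time at the minimum framerate. That is my assumption; the post does not say how the latency was measured, and the implied baseline below is derived, not a figure from AMD. The function names are for illustration only.

```python
def frame_time_ms(fps):
    """Time spent on a single frame, in milliseconds."""
    return 1000.0 / fps


def implied_baseline_fps(relative_gain, saved_ms):
    """Minimum framerate before the patch that would make a given relative
    gain save `saved_ms` of frame time.

    Derived from: saved = 1000/f - 1000/(f * (1 + gain)).
    """
    return 1000.0 * relative_gain / ((1.0 + relative_gain) * saved_ms)


if __name__ == "__main__":
    before = implied_baseline_fps(0.15, 1.7)
    after = before * 1.15
    print(f"before: {before:.1f} fps, {frame_time_ms(before):.2f} ms per frame")
    print(f"after:  {after:.1f} fps, {frame_time_ms(after):.2f} ms per frame")
```

Under that assumption the two quoted figures are consistent with a minimum of roughly 77 fps before the patch and roughly 88 fps after it.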

Let's talk BIOS updates

Finally, we wanted to share with you our most recent work on the AMD Generic Encapsulated Software Architecture for AMD Ryzen processors. We call it the AGESA for short.

As a brief primer, the AGESA is responsible for initializing AMD x86-64 processors during boot time, acting as something of a nucleus for the BIOS updates you receive for your motherboard. Motherboard vendors take the baseline capabilities of our AGESA releases and build on that infrastructure to create the files you download and flash.

We will soon be distributing AGESA point release 1.0.0.4 to our motherboard partners. We expect BIOSes based on this AGESA to start hitting the public in early April, though specific dates will depend on the schedules and QA practices of your motherboard vendor.

BIOSes based on this new code will have four important improvements for you:

- We have reduced DRAM latency by approximately 6ns. This can result in higher performance for latency-sensitive applications.

- We resolved a condition where an unusual FMA3 code sequence could cause a system hang.

- We resolved the overclock sleep bug where an incorrect CPU frequency could be reported after resuming from S3 sleep.

- AMD Ryzen Master no longer requires the High-Precision Event Timer (HPET).

We will continue to update you on future AGESA releases when they're complete, and we're already working hard to bring you a May release that focuses on overclocked DDR4 memory.

It's almost like this is exactly what people have been saying; that the problem with the CCX design is that many applications/games will need to optimize specifically for Ryzen CPUs rather than just making an application/game that is well multi-threaded.

The problem with an architecture which requires this is that not every developer is going to do it.

Hopefully at least Frostbite/Unreal/Unity will all be optimized for it, so games a year or two from now might start shipping with good support.

Exactly.

Funny thing about compilers: usually Intel's is the fastest one, and it has code to gimp AMD, even not execute on it... but it can be patched out. I wonder how many people use ICC vs GCC vs Clang.

99% of all Windows games aren't compiled with any of those 3, but rather MSVC.

·feist· said: Dota 2 pre-patch: https://arstechnica.com/gadgets/2017/03/amd-ryzen-review/3/

~23% increase — Dota 2 pre-patch -vs- post-patch: https://www.chiphell.com/thread-1717769-1-1.html

You must think people have a short memory or something. You've completely changed your tune in light of AotS being optimised. This is you on page 36 of this thread:

You should learn to read before saying what I've changed and what I didn't.

These optimisations prove that the CPU Complex design isn't a 'hardware problem' like you and others tried to dramatically claim it was. It's not a problem at all. If a small amount of work from a little dev team like Oxide brings 20-30% performance increases initially, with more performance to come, then this hasn't just been 'worked around', it's completely vanished as an issue as the fps are indistinguishable from the Intel HEDTs.

These optimizations prove the exact opposite of what you're saying - that the CCX is a hardware problem which can be worked around to some degree by doing s/w optimizations specifically for the h/w in question. Which is what I've been saying all the time.

The problem with your rhetoric in this thread

There is no "problem" with my "rhetoric" in this thread. The problem is completely on your side.

Any news/benchmarks from the R5?

It's not a problem, it's a compromise. Performance is not bad with it, it just could be better.

Not sure if anyone has seen this but it's pretty interesting. It's about the relationship between Ryzen performance and the video card drivers on Nvidia vs AMD cards. This guy ran some benchmarks and tests and believes that the lack of performance on Ryzen is due to an Nvidia driver bottleneck.

https://www.youtube.com/watch?v=0tfTZjugDeg

- weird CPU voltage readings sometimes over 1.5v even though it was set to 1.350 in BIOS

That sounds like it could be load line calibration if you had it turned all the way up.

I feel the same way about the Asus Prime B350M-A shark sandwich. The lack of beta BIOSes and the molasses release schedule for this board are disappointing to see from Asus. I'm willing to wait for the May update to see if the RAM situation actually improves, though. Which XMP settings, and with what RAM and size, were you able to use on the Mortar? I've been eyeing the ASRock AB350M Pro, just 'cause of the placement of the M.2 drive.

I'm using a Corsair 2400 MHz 14-16-16-31 16GB dual-channel kit (sorry, don't remember the model #).

He also says that a 30-35% difference in performance is unreasonable.

If we look at IPC, Intel was about 7% ahead if I remember correctly. Combine that with 25% higher clockspeeds and we're at 34% faster per-core performance.
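
Spelling out that arithmetic: the two advantages multiply, they don't add. A tiny sketch using the 7% and 25% figures from the post (which the poster gave from memory); the function name is just for illustration.

```python
def combined_per_core_advantage(ipc_advantage, clock_advantage):
    """Combine an IPC advantage and a clock speed advantage (both given as
    fractions, e.g. 0.07 for 7%) into one per-core performance advantage."""
    return (1.0 + ipc_advantage) * (1.0 + clock_advantage) - 1.0


if __name__ == "__main__":
    advantage = combined_per_core_advantage(0.07, 0.25)
    print(f"combined per-core advantage: {advantage * 100:.2f}%")
```

That is 1.07 x 1.25 = 1.3375, i.e. the roughly 34% quoted above, versus 32% if you simply added the two numbers.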

The CCX is integral to Ryzen's design, so you're basically saying Ryzen itself is one big 'hardware problem', even though some trivial and initial software optimisations after a few weeks completely negate any performance losses compared to the 6900K. In fact the 1800X is slightly faster than Intel's 8-core HEDT according to PCGH, so according to you, we must also assume the 6900K has a hardware problem as well.

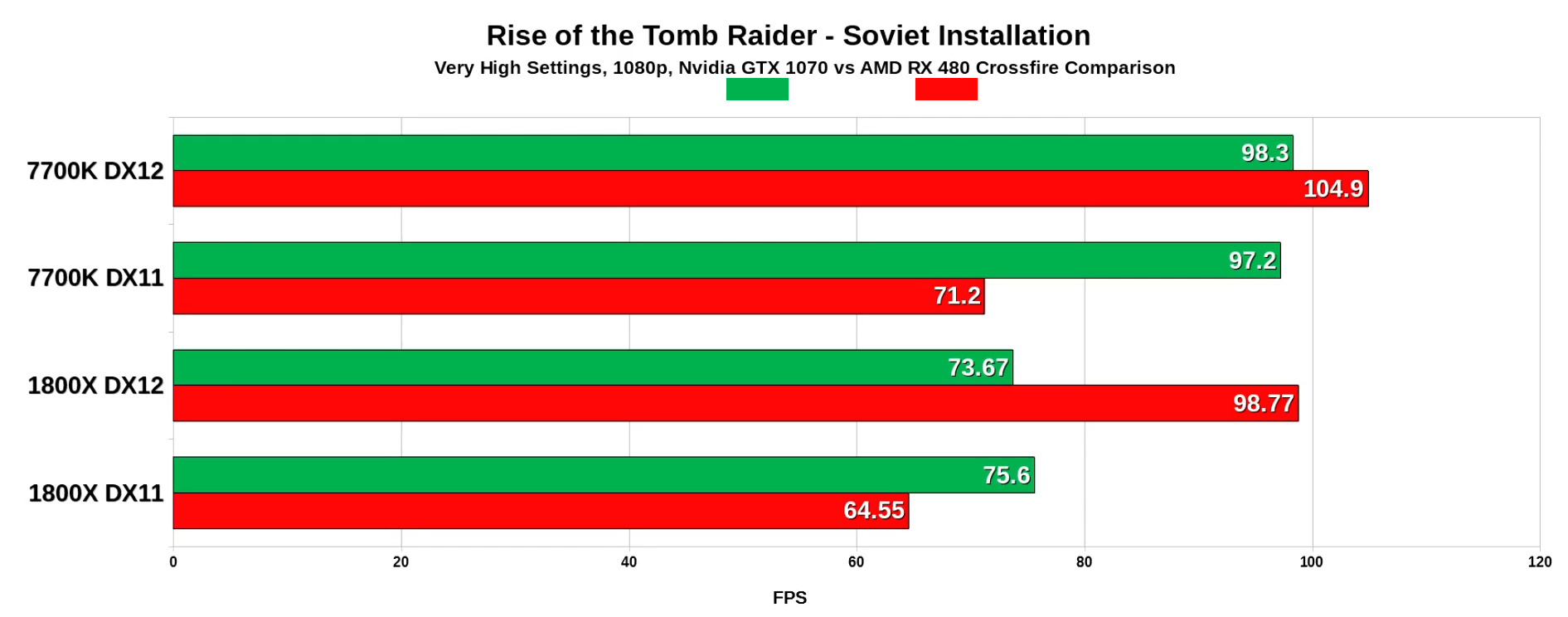

Jim/AdoredTV just found out something really interesting: the CPU bottleneck in Tomb Raider DX12 is much less pronounced with Ryzen as soon as you switch from a highly overclocked 1070 to a CrossFire 480 setup (at stock speeds - which should put both setups roughly on par GPU power wise)

Somebody on another forum called it an Nvidia DX12 API bottleneck. It's probably Nvidia's DX12 driver needing adaptation/optimization for Ryzen.

The CCX is a design decision AMD made for Ryzen which results in the problems you're seeing in games and other complex software which have a small number of heavyweight threads. You can say it this way if you prefer - doesn't change the fact that this is a problem of Ryzen's h/w design.

It only becomes a "hardware" problem if the software scheduler is stupid enough to randomly spread and move around that small number of heavyweight threads across the two CCXes and the developer of said scheduler pretends everything "works" as intended.