LordOfChaos

Member

https://forums.anandtech.com/thread...dge-ryzen-9000.2607350/page-350#post-41186460

This was revealed and backed up by tech leaker Kepler_L2 and other tech enthusiasts.

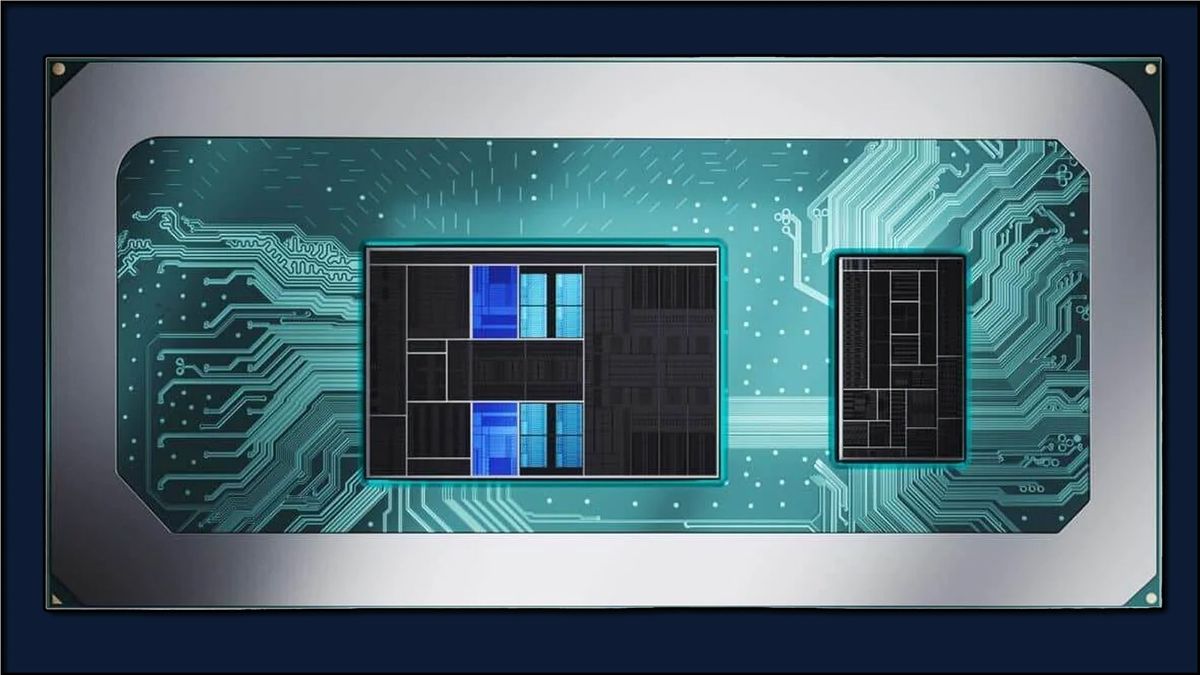

The idea was to have a large iGPU with 16 RDNA 3.5 compute units (a fixed and enhanced version of RDNA 3) and to enable much higher gaming performance by adding a large 16 MB shared cache.

However, this die area has been replaced with a large NPU due to demands from Microsoft for ever more AI processing power to run its upcoming Windows AI productivity features. It is also claimed that Microsoft is doubling down on AI and will demand even larger NPUs in the future.
