Halo being ported to the more powerful PC yet performing worse than the original Xbox comes to mind.

Do you have a source?

Apparently the X1 DX12 team will be doing a podcast with The Inner Circle team soon.

Am I correct in assuming those gains are for Intel CPUs? Would AMD CPUs see a similar gain?

The slower the CPU the more relative gain it will show on average. So those gains are actually the biggest on AMD CPUs as they are the slowest.

You're joking... right? Dat GeForce FX 5600 Ultra, which is several generations ahead of the GeForce 3 inside the OG Xbox, is clocked at 350MHz vs 233MHz for the Xbox, with memory bandwidth at 11.2GB/s vs the Xbox's 6.4GB/s. Despite being 2 full architectural generations ahead and killing it spec-wise, it's looking mighty embarrassed at 14fps while running on the best CPU of 2003 lol.

Don't forget that Halo PC launched 2 years after the Xbox version. Not only are those GPUs several generations ahead, but those numbers are pretty sad considering. So forget trying to use an equivalent GeForce 3 (which is what the Xbox was equipped with) and a CPU from 2001, because the numbers would be embarrassing. In fact, the more popular gaming GPU at the time was the GeForce 4 Ti series, typically paired with a P4 3.2GHz, which we can clearly call "a more powerful PC". That was the most common setup, and people were very vocal about the performance issues.

If you purchased Halo PC when it launched and attempted to run it on a pretty good PC for 2003, you wouldn't be asking for sources, you would just know by experience.

I bought Halo PC when it came out. I had a 9800 Pro and an Athlon XP 2800+ at the time, and it ran really well for me (though it was a good PC at the time ^^).

Also, that was 1280x1024 on my PC vs 640x480 on my Xbox.

GeForce 4 Ti was 2002 stuff btw; I had one before my 9800 Pro.

The only negative memory I have of Halo PC is how pathetic the netcode was, and I believe the game even had peer-to-peer multiplayer instead of dedicated servers (might be misremembering that one).

The multiplayer was simply not worth playing.

I guess they are going to have an X1 DX12 team member on the podcast before GDC and then again after GDC.

I'm sort of new to this DX12 hype.

Is its performance boost going to carry over at an equivalent level to the Xbox One, since it's mainly for PC? Or is the Xbox One's hardware a limiting factor on the true effectiveness of DX12?

Jesus, IDK what to believe with all these 50%, 600%, 200%, 100% increase lines people are throwing around.

Some guy was saying the Xbox One is running DirectX 9, so once 12 comes to it that's a 3x increase at least.

Another guy said DX12 was demoed on an Xbox One and it allowed some test to go up to 120fps.

I don't know what to believe, nor what to even counter with in an argument; however, what a lot of people are telling me doesn't add up.

My basic sense says DirectX cannot substitute for hardware performance, and that DX only takes full advantage of hardware that has the ability. Sort of like putting a turbo in your mom's minivan versus a sports car. Yeah, the minivan may get a boost without worrying about blowing the engine, but it's not going to fully make up for the lack of power, whilst in the sports car you'll see a significant increase.

I'm not 100% sure what DX12 will do for the Xbox, but I can tell you what you've been hearing is complete nonsense. Someone else with better knowledge can explain why.

Basically, we're going to see good efficiency improvements with DX running across multiple cores. With the CPUs in the X1/PS4 being lower-clocked with more cores, this matches their architecture, so we'll get improved GPU utilization. Because it's a console, the latency on its draw calls will be lower than what's seen on PC; whether this will be improved further, we're not sure, although the interview with the developers of Metro points to it being so.

That's what I'm looking for: a solid explanation of what it means for the Xbox One.
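To make the multi-core point concrete, here is a minimal Python sketch of the idea: worker threads each record their own chunk of draw calls, and one thread submits the finished lists in order. Everything in it (`record_command_list`, `build_frame`, the tuple "commands") is hypothetical and only illustrates the structure; it is not the DX12 API, and Python threads won't show the real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def record_command_list(draw_ids):
    """Record one chunk of draws into its own command list (hypothetical format)."""
    return [("draw", draw_id) for draw_id in draw_ids]


def build_frame(draw_ids, workers):
    """Record chunks in parallel, then concatenate them in their original order."""
    # One contiguous chunk per worker; max(1, ...) avoids a zero step for empty input.
    chunk = max(1, (len(draw_ids) + workers - 1) // workers)
    chunks = [draw_ids[i:i + chunk] for i in range(0, len(draw_ids), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so draw order is preserved.
        command_lists = list(pool.map(record_command_list, chunks))
    # A single thread "submits" the recorded lists back to back.
    return [cmd for command_list in command_lists for cmd in command_list]


frame = build_frame(list(range(10)), workers=4)
print(len(frame), "commands submitted in order")
```

Recording is the part that gets spread across cores; submission stays ordered, so the GPU sees the same frame no matter how many workers recorded it.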

Cause it sort of adds up for the PC, which has parts that can take full advantage of DX12 and what it has to offer, but when it comes to the Xbox One it sounds like hype, smoke and mirrors. I think most of the hype comes from fanboys who take an inch and go a mile: they don't understand it fully, or at all, and then just paint a utopia of "what they hope it to be" when it's far from it.

That, and like I said, the MS exec came out and said to expect no dramatic change when all this hype started, and they are like OH OH HE'S DOWNPLAYING IT, THERE'S A NON-DISCLOSURE AGREEMENT, where people can't talk about hidden chips on the Xbox One, etc.

No, it's going to be mainly for PC.

It isn't going to be for every developer nor every game. Low-level programming isn't easy.

For games that aren't CPU-bound, expect small improvements, like 5%.

For somewhat CPU-bound games, expect improvements of 25-30%.

For super CPU-heavy games, expect improvements of 60%...

...and for mega CPU-bound monsters (imagine a type of game that doesn't really exist right now because it would be too much for current APIs, like a space game with thousands of physics-driven ships, like the Star Swarm demo), expect improvements of 250%.
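Why the gain depends so heavily on how CPU-bound a game is can be shown with a toy model: if CPU and GPU work overlap, the slower of the two sets the frame time. The Python sketch below assumes that model, and every number in it (the per-frame millisecond costs and the assumed 4x cut in CPU cost) is made up for illustration, not a measurement or a prediction.

```python
def fps(cpu_ms, gpu_ms):
    """Frames per second when CPU and GPU overlap; the slower side sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def gain_percent(cpu_ms, gpu_ms, cpu_scale):
    """Percent fps change when CPU cost per frame is multiplied by cpu_scale."""
    before = fps(cpu_ms, gpu_ms)
    after = fps(cpu_ms * cpu_scale, gpu_ms)
    return (after / before - 1.0) * 100.0


# Hypothetical workloads: (CPU ms per frame, GPU ms per frame).
scenarios = {
    "GPU-bound": (8.0, 16.0),
    "slightly CPU-bound": (20.0, 16.0),
    "heavily CPU-bound": (56.0, 16.0),
}

for name, (cpu_ms, gpu_ms) in scenarios.items():
    # cpu_scale=0.25 is an assumed 4x reduction in CPU-side cost.
    print(f"{name}: {gain_percent(cpu_ms, gpu_ms, 0.25):.0f}% faster")
```

With these made-up inputs the model gives 0%, 25% and 250%: cutting CPU cost does nothing until the CPU is the bottleneck, and then the gain is capped by where the GPU takes over.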

We don't have enough data to properly estimate the impact of DX12 with final drivers. Your numbers are taken out of your bumhole.

Lol, that's what I'm saying. AND aren't the increase numbers coming from tech demos or "proof of concept" demos?

Or what exactly have they tested it on?

I got some guy in my group telling me some demo pumped out 120fps on Xbox One hardware (he didn't say if it was an actual Xbox One or similar hardware), and I said show me proof/a vid/an article.

Then another guy says, well, the Xbox One is a computer and its hardware is similar to a PC's, so why wouldn't it take full advantage of DX12? I said because its internals are lower-end, budget parts. Make sense?

I didn't read up on the basics of DX12 early on, and now I'm too caught up between hype/lies/propaganda to figure out what's what. Especially with this Brad guy coming on the Inner Circle podcast or w/e. That still sounded like it was directed towards PC, but these fanboys take an inch and go a mile with it.

But all these percentages, what does the increase pertain to? Resolution? FPS? General graphics?

It probably is in your head.

To give an idea that's better than the bullcrap I was reading before.

So, to sum up:

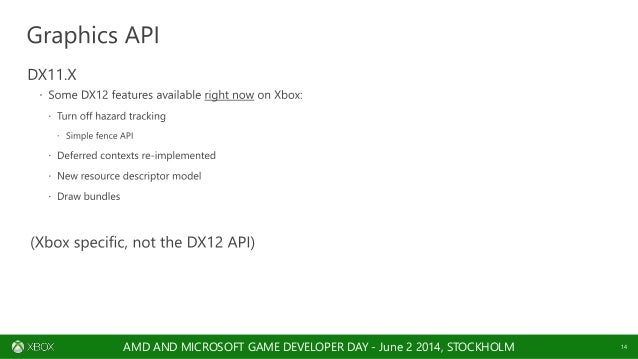

- The performance benefits from moving to DX12 on Xbox One will be less than the performance benefits on PC, because the DX11 version used on Xbox One already has extensions that can make draw calls cheaper.

So that's it? 300% increase? 60%? 40%?

Or can you not comment? It seems the benefits for the PC are obvious, but almost nothing can be said for the Xbox One other than it'll help somewhat?

IDK how dudes can speculate so hard on DX12 and its effects on the Xbox One and speak like they are professionals when they are merely minimum-wage workers at a grocery store. I guess that makes them experts.

That kind of language gets you nowhere but off GAF.

If one wants to believe hype, they probably will until bashed over the head with reality.

IDK how dudes can speculate so hard on DX12 and its effects on the Xbox One and speak like they are professionals. The people I hear locally around me talking about it? They are merely minimum-wage workers at a grocery store; I guess that makes them experts. My buddy is the one who's trying to tell me all this stuff. I ask where he hears it from, and it's from some dudes he works with. It makes me mad cause he doesn't question them and just swallows this information hook, line and sinker... he doesn't understand it, but believes what they say word for word.

I told him about how the MS exec came out and said DX12 can't change clock speeds and will not be that dramatic of a change. He said, "Really, he said that?" I said, "Yes bro, he came out and clarified it." He seemed shocked, cause he doesn't read anything online and, as I said, just believes speculation to the full extent, speculation that comes from an amateur source.

lol I chuckled

There are a lot of reasons why "now" is a good time. For some reason people have gotten the impression that because today we can do a pretty nice, portable low-overhead API, that this was always the case. It absolutely wasn't... GPU hardware itself has only recently gotten to the level of generality required to support something like DX12. It would not have worked nearly as nicely on a ~DX10-era GPU...

Another huge shift in the industry lately is the centralization of rendering technology into a small number of engines, written by a fairly small number of experts. Back when every studio wrote their own rendering code, something like DX12 would have been far less viable. There are still people who do not want these lower-level APIs of course, but most of them have already moved to using Unreal/Unity/etc.

The notion that it hasn't been blatantly clear to everyone for a long time that this was a problem is silliness. Obviously it has been undesirable from day one and it's something that has been discussed during my entire career in graphics. Like I said, it's a combination of secondary factors that make things like DX12 practical now, not some single industry event.

That's a twisted view of the world. Frankly it's not terribly difficult to make an API that maps well to one piece of hardware. See basically every console API ever. If you feel bad that the other "modern" APIs are apparently ripping off Mantle (and just trivially adding portability in an afternoon) then you should really feel sorry for Sony and others in the console space who came up with these ideas much earlier still (see libgcm). But the whole notion is stupid - it's a small industry and we all work with each other and build off good ideas from each other constantly.

Ultimately if Mantle turns out to be a waste of engineering time (which is far from clear yet), AMD has no one to blame but themselves in terms of developing and shipping a production version. If their intention was really to drive the entire industry forward they could have spent a couple months on a proof of concept and then been done with it.

The adaptive sync thing is particularly hilarious as that case is a clear reaction to what NVIDIA was doing... but building it on top of DP is precisely because this stuff was already in eDP! It's also highly related to other ideas that have been cooking there for some time (panel self refresh). Don't get fooled by marketing "we did it first" nonsense.

Yep, as you say, I'm not even sure the notion of a "full story" is well-defined, despite human nature wanting a tidy narrative. Certainly multiple parties have been working on related problems for quite a long time here, and no one party has perfect information. As folks may or may not remember from the B3D API interview (http://www.beyond3d.com/content/articles/120/3), these trends were already clearly established in 2011. Even if Mantle was already under development then (and at least it seems like Mike didn't know about it if it was), I certainly didn't know about it.

Yet - strangely enough - we were still considering all of these possibilities then.

Exactly, which is why it's ultimately a useless exercise. All of the statements can even be correct, because that's how the industry actually works: nothing ever comes from a vacuum.

I'm not sure I'm totally bought in to either sentence there. The GPU is pretty good at the math for culling, but it's not great with the data structures. You can argue that for the number of objects you're talking about you might as well just brute force it, but as I showed a few years ago with light culling, that's actually not as power-efficient. Now certainly GPUs will likely become somewhat better at dealing with less regular data structures, but conversely as you note, CPUs are becoming better at math just as quickly, if not *more* quickly.

For the second part of the statement, maybe for a discrete GPU. I'm not as convinced that latencies absolutely "must be high" because we "must buffer lots of commands to keep the GPU pipeline fed" in the long run. I think there is going to be pressure to support much more direct interaction between CPU/GPU on SoCs for power efficiency reasons and in that world it is not at all clear what makes the most sense. Ex. we're going to be pretty close to a world where even a single ~3GHz Haswell core can generate commands faster than the ~1GHz GPU frontend can consume them in DX12 already, so looping in the GPU frontend is not really a clear win if we end up in a world with significantly lower submission latencies.
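A back-of-the-envelope version of that comparison, as a Python sketch. The clock speeds are the rough ones mentioned above; the cycles-per-command costs are pure assumptions picked for illustration, since real costs depend on the driver, the command type and the hardware.

```python
def commands_per_second(clock_hz, cycles_per_command):
    """Throughput of a unit that spends a fixed number of cycles per command."""
    return clock_hz / cycles_per_command


def limiting_side(cpu_rate, gpu_frontend_rate):
    """Whichever side processes fewer commands per second limits submission."""
    return "CPU" if cpu_rate < gpu_frontend_rate else "GPU frontend"


# Clocks from the post (~3GHz core, ~1GHz frontend); per-command costs are assumed.
cpu_rate = commands_per_second(3.0e9, cycles_per_command=150)
gpu_rate = commands_per_second(1.0e9, cycles_per_command=100)
print(limiting_side(cpu_rate, gpu_rate))
```

Under this model the CPU core stays ahead as long as its per-command cost is less than 3x the frontend's, simply because of the clock ratio.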

It may go in the direction that you're saying and we certainly should pursue both ways, but I disagree that it's a guaranteed endpoint.

I'm talking about PC. On console it's somewhat less relevant as very few developers who are willing to target - say - PS3 are scared of a "low level" API like libgcm. And rightfully so... these APIs aren't really difficult per se, especially when they are only for one piece of pre-determined hardware.

Also note that when I say "engines", I'm talking about technology created by graphics "experts" that are used across multiple titles. Ex. Frostbite is obviously an engine even though it is not sold as middleware.

That further supports my point - if they haven't moved forward to even DX10/11 then it has nothing to do with the "ease of use" of the API and continuing to cater to programmers who want "safer, easier" APIs is wasted effort.

I don't think the resourcing has ever been a huge concern to be honest... as you yourself point out, to these big companies it's peanuts. I wouldn't be surprised if some misguided notion of "protecting" the advantages of the Xbox platform vs. PC has played a role in the past, but I don't think anyone has really said "we're not doing this because it would take some time".

Obviously DX12 as it is defined would not have worked on hardware 12 years ago, so you can hand wave about a "DX12-like API" but I think it's far from clear that you could do a similarly portable and efficient API more than a few years ago.

They couldn't support WDDM2.0 for one, which is an important part of the new API semantics. It hasn't been that long that GPUs have had properly secured per-process VA spaces - certainly 12 years ago there were a lot of GPUs still using physical addressing and KMD patching, hence the WDDM1.x design in the first place.

Ha, don't be fooled by their re-positioning - do the math and it's about the same cost as it was before for a AAA studio. The only difference is it's somewhat more accessible to indie devs now too, à la Unity.

Meh, you can already do that with "compute", and the notion isn't even well-defined with the fixed function hardware. There are sort/serialization points in the very definition of the graphics pipeline - you can't just hand wave those away and "we'll just talk *directly* to the hardware this time guys!". Talking directly to the hardware *is* talking to the units that spawn threads, create rasterizer work, etc.

By all means expose the CP directly and make it better at what it does! But that's arguing my point: you're effectively just putting a (currently kinda crappy) CPU on the front of the GPU. That's totally fine, but the notion that the "GPU is a better CPU" is kind of silly if your idea of a GPU includes a CPU. And you're going to start to ask why you need a separate CPU-like core just to drive graphics on an SoC that already has very capable ones nearby...

That's kind of a big topic for another thread I think, but at a minimum you need a tighter integration of caches between CPU/GPU (at least something like Haswell's LLC) with some basic controls for coherency (even if explicit), shared virtual memory (consoles can get away with shared physical I guess) and efficient, low-latency atomics and signaling mechanisms between the two. The multiple compute queues stuff in current gen is a good start but ultimately I'd like to see that a bit finer grained - i.e. warp-level work stealing on the execution units themselves rather than everything having to go through the frontend.

The little bars for DX12 definitely seem to be longer or shorter than the other two, depending on the test.

To get right down to business then, are AMD's APUs able to shift the performance bottleneck on to the GPU under DirectX 12? The short answer is yes. Highlighting just how bad the single-threaded performance disparity between Intel and AMD can be under DirectX 11, what is a clear 50%+ lead for the Core i3 with Extreme and Mid qualities becomes a dead heat as all 3 CPUs are able to keep the GPU fully fed. DirectX 12 provides just the kick that the AMD APU setups need to overcome DirectX 11's CPU submission bottleneck and push it on to the GPU. Consequently at Extreme quality we see a 64% performance increase for the Core i3, but a 170%+ performance increase for the AMD APUs.
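A quick sanity check on those percentages, as a small Python sketch. It assumes the "dead heat" means both CPUs land on exactly the same DX12 frame rate, and it takes 170% as the APU gain even though the article says 170%+; `implied_dx11_lead` is just a helper name made up here.

```python
def implied_dx11_lead(fast_gain_pct, slow_gain_pct):
    """DX11 lead (percent) of the faster CPU, assuming equal DX12 frame rates."""
    fast_factor = 1.0 + fast_gain_pct / 100.0  # DX12 fps / DX11 fps, faster CPU
    slow_factor = 1.0 + slow_gain_pct / 100.0  # DX12 fps / DX11 fps, slower CPU
    # Same DX12 fps on both, so the DX11 ratio is the ratio of the two factors.
    return (slow_factor / fast_factor - 1.0) * 100.0


lead = implied_dx11_lead(64.0, 170.0)
print(f"Implied DX11 lead for the Core i3: about {lead:.0f}%")
```

That works out to roughly a 65% DX11 lead for the Core i3, which fits the "clear 50%+ lead" quoted above, and shows why the slower CPU posts the bigger relative gain once both hit the same GPU limit.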