A fixed framerate in sync is always better than a fluctuating one. I'd rather buy a more powerful card than a G-Sync-capable card + monitor. G-Sync setups will work out far more expensive.

Same, especially as I just bought a 27" IPS non-G-Sync monitor.

Even "up to" 45% is way, way better than I expected Mantle would ever do. I figured best-case scenarios would be 10-12%, with 3-5% on average.

Color me surprised.

So AMD built a demo solely for the purpose of showing off Mantle's strengths (lots of objects) and then we're shocked at the result? This isn't going to magically boost Battlefield 4 45%. It may come into play in the future, but it's one of those "will multiplats ever bother?" type things.

Nope, Mantle takes full advantage of CPU scaling and is completely dependent on GPU speed.

DX games are CPU-limited; Mantle games are GPU-limited. An FX-8350 downclocked to 2GHz shows zero framerate loss in the Star Swarm demo.
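The "downclock the CPU and lose nothing" observation can be illustrated with a toy model. It assumes CPU work (game logic plus API/driver submission) and GPU work overlap, so each frame takes as long as the slower of the two. All per-frame costs below are made-up numbers chosen only to show the shape of the argument; the 4.0 GHz stock clock of the FX-8350 is the only real figure.

```python
# Toy frame-time model (an assumption for illustration, not how any real
# driver or profiler measures things): CPU and GPU work are pipelined, so
# the slower side sets the frame time.

def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when CPU and GPU work overlap."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def downclock(cpu_ms: float, old_ghz: float, new_ghz: float) -> float:
    """Scale CPU time inversely with clock speed (ignores memory stalls)."""
    return cpu_ms * old_ghz / new_ghz


GPU_MS = 20.0  # hypothetical GPU time per frame (caps us at 50 fps)

# Hypothetical CPU cost per frame at 4.0 GHz.
HIGH_OVERHEAD_CPU_MS = 18.0  # thick API: lots of per-draw-call driver work
LOW_OVERHEAD_CPU_MS = 6.0    # thin API: far less submission overhead

for label, cpu_ms in [("high overhead", HIGH_OVERHEAD_CPU_MS),
                      ("low overhead", LOW_OVERHEAD_CPU_MS)]:
    stock = fps(cpu_ms, GPU_MS)
    slow = fps(downclock(cpu_ms, 4.0, 2.0), GPU_MS)
    print(f"{label}: {stock:.1f} fps at 4 GHz, {slow:.1f} fps at 2 GHz")
```

With these invented numbers both cases hit 50.0 fps at stock clocks, but at 2 GHz the high-overhead case drops to 27.8 fps while the low-overhead case stays at 50.0 fps, because its doubled CPU time (12 ms) is still hidden behind the 20 ms of GPU work.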

Oxide Games devs have said that people with midrange CPUs will have no bottlenecks.

No, they couldn't. Mantle works like it does as it targets specific hardware. If MS did that, then Direct3D wouldn't have support for a lot of cards, which was the whole point of D3D.

Not saying that D3D couldn't do with an overhaul, but it couldn't and shouldn't work in the same way as Mantle.

Is it? A developer friend said that EA announcing they were supporting it with Frostbite, which they use for fucking everything now, was the biggest third-party news this gen in favor of the PS4 that no one paid attention to...

I need to ask further details I guess.

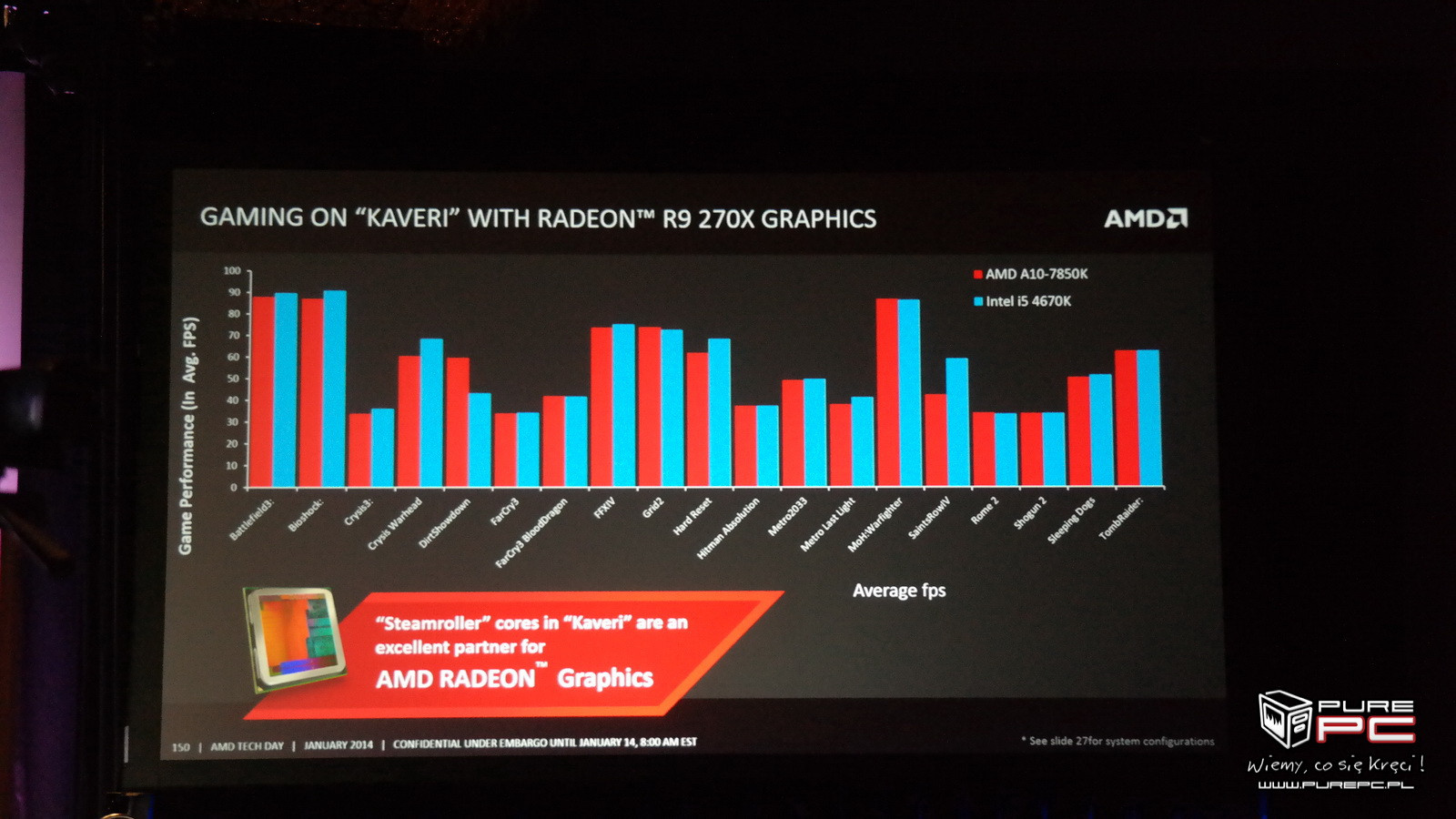

New Mantle slide - Kaveri A8-7600 [$120] vs i7-4770

Don't forget, people, those are numbers for the Kaveri APU. I'm hoping for even better numbers for R9 290/290X GPUs.

But G-Sync will work with all games, old and new.

If I'm not mistaken, AMD and NVIDIA also have shitty multicore support in their PC drivers.

And the DX11 multithreaded rendering model is kinda strange.

If I remember right, you can make/prepare command lists on different threads, but you have to replay those command lists on your main rendering thread. For OpenGL I'm not sure; I haven't looked into OpenGL much.

Mantle, if I'm not mistaken, allows you to push command lists to the GPU from every thread?
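The two submission models described above can be sketched as a simulation. In real D3D11, worker threads record into deferred contexts and call `ID3D11DeviceContext::FinishCommandList`, and the immediate context on one thread replays them with `ExecuteCommandList`; the sketch below does not call any graphics API. "Recording" just builds a list of strings and the "GPU queue" is a plain `Queue`. The second function models the poster's assumption that Mantle lets every thread submit; it is not based on Mantle's actual API. Python's GIL also means these threads show the structure, not a real speedup.

```python
import threading
from queue import Queue

# Hypothetical simulation only: no real D3D11 or Mantle calls are made.


def record(thread_id: int, draws: int) -> list[str]:
    """Build one command list (the part that can run on worker threads)."""
    return [f"draw t{thread_id} #{i}" for i in range(draws)]


def dx11_style(num_threads: int, draws: int) -> list[str]:
    """Workers record in parallel; only the main thread submits, in order."""
    lists: list[list[str]] = [[] for _ in range(num_threads)]

    def worker(t: int) -> None:
        lists[t] = record(t, draws)

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()

    submitted: list[str] = []
    for cl in lists:  # serial replay on the single "main rendering thread"
        submitted.extend(cl)
    return submitted


def submit_from_any_thread(num_threads: int, draws: int) -> Queue:
    """Each worker records and pushes its own list straight to the queue."""
    gpu_queue: Queue = Queue()

    def worker(t: int) -> None:
        gpu_queue.put(record(t, draws))  # Queue.put is thread-safe

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return gpu_queue


print(len(dx11_style(4, 100)), submit_from_any_thread(4, 100).qsize())
```

The point of contrast is the final loop in `dx11_style`: no matter how many threads record, one thread still does all the submission, which is where a single-core bottleneck can come from.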

Everybody likes to quote John Carmack on this, but he said this before the Steam Machines specs were released...

Mantle will be dead by 2015.

Dollars to donuts, the only support AMD has for it is out of pocket. None of these stupid things will ever take off if they need specific cards and limit themselves so much.

NVIDIA probably pays for PhysX shit to be put in games, too.

The only things that will stick are the things that don't need extra money or resources from developers.

Would love to know how they're measuring the i7 performance with no API, unless they threw in some AMD card to measure it?

Let's see some actual numbers first. "Up to 45%" is much closer to my own estimations of 25-30% than to those of the people who expect magical 2-3x increases in performance.
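For scale, a percentage gain multiplies the baseline framerate, and a "2-3x" increase is a +100% to +200% gain. The 40 fps baseline below is hypothetical, and 27.5% is simply the midpoint of the 25-30% estimate above.

```python
def apply_gain(base_fps: float, gain_pct: float) -> float:
    """Framerate after a percentage uplift, e.g. 45 -> 1.45x."""
    return base_fps * (1.0 + gain_pct / 100.0)


def gain_pct(before_fps: float, after_fps: float) -> float:
    """Percentage uplift between two framerates."""
    return (after_fps / before_fps - 1.0) * 100.0


base = 40.0  # hypothetical baseline framerate
for pct in (27.5, 45.0):  # midpoint of the 25-30% estimate, and the "up to" figure
    print(f"+{pct}%: {apply_gain(base, pct):.1f} fps")
print(f"2x = +{gain_pct(base, 2 * base):.0f}%, 3x = +{gain_pct(base, 3 * base):.0f}%")
```

So even the "up to" ceiling of 45% is well under half of the smallest "2x" claim, and since it is a maximum, typical gains would be lower still.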

Remember that time a GPU company put out a PowerPoint about an upcoming product with accurate results and factual factualisations?

Me either!

lol. "Up to".

I'm guessing it'll be barely noticeable generally. 2 or 3 fps tops. Count on it!

The Oxide guys mentioned that the cost of implementation is 2 months of man-hours. So, it's cheap.

Johan Andersson said that once they implement Mantle in Frostbite 3, it will be a standard feature of their engine that will be automatically used in all future FB3 games [15 of them in the pipeline, including Star Wars games, Mass Effect, DA3, Mirror's Edge, PvZ Garden Warfare]...

45%. That's almost too good to be true, but at the same time that's what I was hoping for.

In hard GPU-limited situations, it doesn't seem that Mantle will increase your fps (it will still help with frame time fluctuations, however). What I'm more interested in is how big the difference is in a typical game scenario on a fast CPU, compared to a decent DirectX 11 renderer. I guess we will see once it's finally public.

I see... but couldn't they have just deprecated the existing DirectX and added a DirectX+ that targets new hardware for increased performance, whilst still supporting the existing standard?

The point is a solution like Mantle would be better coming from a strictly software company.

Somebody is working overtime.

I'm shocked people believe all this, to be honest. Once you've been following PC gaming and hardware for long enough, you see these kinds of claims every couple of years.

Someone actually clued-up will investigate and identify how Mantle does X, Y and Z completely incorrectly, causing buffer blah blah something-or-others, majorly bad AA, or really shitty lighting in some situation, or something like that.

I could believe a proprietary API could get some benefits, but 45%? Overnight? Suuuuuuureeee

Oh, and the developers then need to target a completely different output. The days of picking OGL or DirectX when playing a game seem mostly gone now; it's a lot of work. Let's just add another one in, they won't mind that!

Yeah,... no.

Mmmmmmmm, I wonder how well a Kaveri system with an R9 would run in Dual Graphics mode... gosh, I cannot wait for those benchmarks.

I remember when the first AMD Trinity stuff came out and the graphics boost from the APU was enough for some insane FPS jump... so much anticipation from AMD this year.

AMD knows which side their bread is buttered on nowadays as well.

Regarding Mantle, I think any improvement that can be made over DirectX is a good thing, as hopefully it will put more pressure on Microsoft to improve it in the future.

There is no singular Steam Machine spec. There will be boxes available with AMD cards in them. The drivers just weren't ready when SteamOS went public.

It'll probably only CrossFire with an R7 card, and even then I'd try to avoid a multi-GPU setup.

Haha, there is no such processor from Intel as the i5 3870K. The only 3870K processor that exists is an AMD FM1-based APU.

The most significant enhancement Kaveri would adopt is HSA (Heterogeneous System Architecture), powered by the new hUMA enhancements, which allow coherent memory access between the GPU and CPU. hUMA would make sure that both the CPU and GPU have uniform access to the entire memory space, which would be done through the memory controller. This would allow additional performance out of the APU in case the GPU gets bandwidth-starved. It also suggests that faster memory would result in better overall performance from the graphics side. Now, with all the architecture talk done, let's get on with the A10-7850K itself.
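The "faster memory means better graphics" point comes down to bandwidth, since the CPU and integrated GPU share the same system memory. A rough sketch of theoretical peak bandwidth, assuming dual-channel DDR3 with standard 64-bit channels; the DDR3 speeds are examples, and real sustained throughput is lower than these peaks.

```python
def ddr_peak_bandwidth_gbs(mt_per_s: float, channels: int = 2, bus_bits: int = 64) -> float:
    """Theoretical peak in GB/s (decimal): transfers/s x bytes/transfer x channels."""
    bytes_per_transfer = bus_bits // 8
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


# Example DDR3 speeds (assumed for illustration); the CPU and the integrated
# GPU share whatever this works out to.
for speed in (1600, 1866, 2133):
    print(f"dual-channel DDR3-{speed}: {ddr_peak_bandwidth_gbs(speed):.1f} GB/s")
```

DDR3-2133 works out to about 34.1 GB/s against 25.6 GB/s for DDR3-1600, roughly a third more headroom for an integrated GPU that would otherwise be starved.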

On the graphics side, we are getting the latest GCN architecture instead of the VLIW4 featured on previous AMD APUs. The die has up to 8 GCN compute units and features AMD TrueAudio technology, AMD Eyefinity, UVD, VCE, a DMA engine and coherent shared unified memory. Being based on the same GPU architecture as Hawaii, the Kaveri APU die has 8 ACEs (Asynchronous Compute Engines), each of which can manage 8 queues and has access to the L2 cache and GDS.
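The unit counts above translate into a few headline numbers. This sketch uses the fact that each GCN compute unit has 64 stream processors and counts a fused multiply-add as 2 FLOPs; the 720 MHz GPU clock is an assumption for illustration, not a figure from the post.

```python
STREAM_PROCESSORS_PER_CU = 64  # GCN: 4 SIMD16 units per compute unit
FLOPS_PER_SP_PER_CLOCK = 2     # one fused multiply-add counts as 2 FLOPs


def gcn_peak_gflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical single-precision peak for a GCN GPU, in GFLOPS."""
    sps = compute_units * STREAM_PROCESSORS_PER_CU
    return sps * FLOPS_PER_SP_PER_CLOCK * clock_mhz / 1000.0


compute_units = 8
aces, queues_per_ace = 8, 8
print("stream processors:", compute_units * STREAM_PROCESSORS_PER_CU)
print("compute queues:", aces * queues_per_ace)
print(f"peak at an assumed 720 MHz: {gcn_peak_gflops(compute_units, 720):.0f} GFLOPS")
```

So 8 CUs means 512 stream processors, and 8 ACEs with 8 queues each means 64 compute queues the hardware can juggle.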

The new APUs seem great for 720p gaming, but gains over an i5 + dedicated GPU seem non-existent. It can be a decent stopgap if you don't want to spend those extra 150€ right away on a new GPU, but if you can afford both, a baseline i5 is still a bit cheaper.

Well, it's a hybrid CrossFire... it's a cool feature that I have always thought about using for my gaming rig, but I end up going Intel/NVIDIA instead. However, with AMD really gaining ground in the power/performance ratio, I have thought about going back. My old computer that my cousin has now, with the 6950 (I think that's what it was), is still going strong and playing games perfectly.

So it's not really full CrossFire, but instead borrows extra rendering power from the APU and combines it with the power of the dedicated GPU.

It was announced a while back that Mantle does not require GCN and works with NVIDIA cards as well.

This is good news going forward, provided the performance gain over DirectX in that example isn't a best-case scenario.

What are you talking about? With Steam Machines around the corner, this is the best time to come out with something like Mantle. It's perfect for it, and if developers use it for SteamOS, they will have little reason not to go for the Windows crossover.

This could actually take off.
