I should be receiving mine soon, hopefully! I'm very excited for this.

One thing I would like to know: will the FPGA board be upgradable/reprogrammable in case of any major changes to the tech? Hopefully they've included a service option of some sort. Of course, since they're selling this at a massive discount, I guess it's possible that they aren't allowed to expose the FPGA, since that might undercut Altera's sales.

Edit: incredible review, Mark!

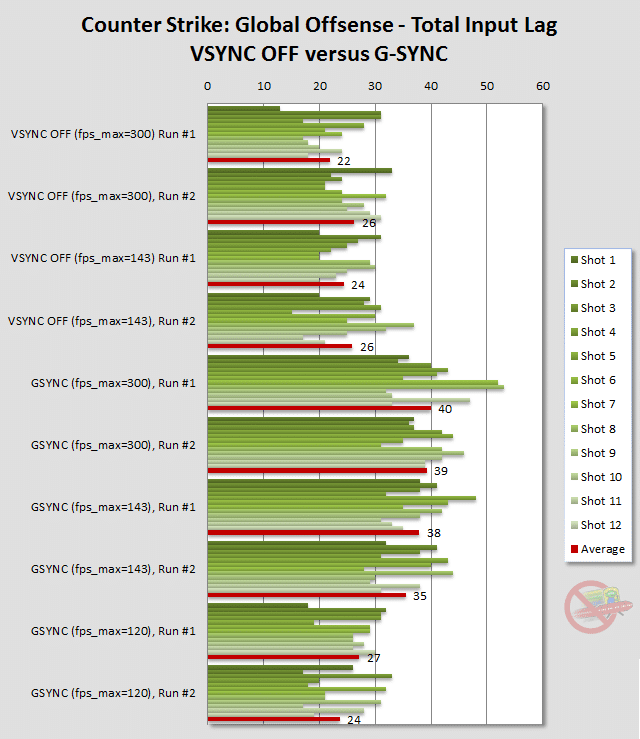

It looks like the input lag from high-framerate G-Sync is way higher than predicted. I wonder why that is. At least capping the framerate at 120fps seems like a good solution.

I'm really surprised. Theoretically, we figured that the extra input lag from framerates above 144fps would only grow to 1 frame at most (6.9ms at 144Hz) as the framerate went to infinity, but it seems like even at just 300fps we're looking at nearly 20ms of additional input lag. Maybe there is a buffer in place that only gets used when the GPU outputs frames too quickly, which incurs an additional input lag penalty.
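For anyone who wants to check the 6.9ms figure, here is a rough sketch of the arithmetic. The `expected_added_lag_ms` function is just my own toy model of the "at most 1 frame" expectation (a finished frame waits out the rest of the current refresh), not how G-Sync actually works internally, and the function names are made up for illustration.

```python
def frame_time_ms(rate_hz: float) -> float:
    """Duration of one frame (or one refresh cycle) in milliseconds."""
    return 1000.0 / rate_hz


def expected_added_lag_ms(fps: float, refresh_hz: float = 144.0) -> float:
    """Toy model (an assumption, not measured behaviour): when the GPU
    renders faster than the display refreshes, the extra wait is the
    refresh period minus the render frame time. It is zero at or below
    the refresh rate and approaches one refresh period as fps grows."""
    if fps <= refresh_hz:
        return 0.0
    return frame_time_ms(refresh_hz) - frame_time_ms(fps)


if __name__ == "__main__":
    print(f"one refresh at 144Hz: {frame_time_ms(144):.1f} ms")
    for fps in (120, 144, 300, 1000):
        print(f"{fps:>5} fps -> {expected_added_lag_ms(fps):.2f} ms expected extra lag")
```

Under this model, 300fps should only add about 3.6ms, which is nowhere near the almost 20ms in the review, so something else (like the buffer guess above) would have to account for the difference.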